Data Warehouse

Learn why data warehouses are crucial in a modern tech stack, and how to choose the best one for your needs.

Learn why data warehouses are crucial in a modern tech stack, and how to choose the best one for your needs.

A data warehouse, or enterprise data warehouse (EDW), is a system to aggregate your data from multiple sources so it’s easy to access and analyze. Data warehouses typically store large amounts of historical data that can be queried by data engineers and business analysts for the purpose of business intelligence.

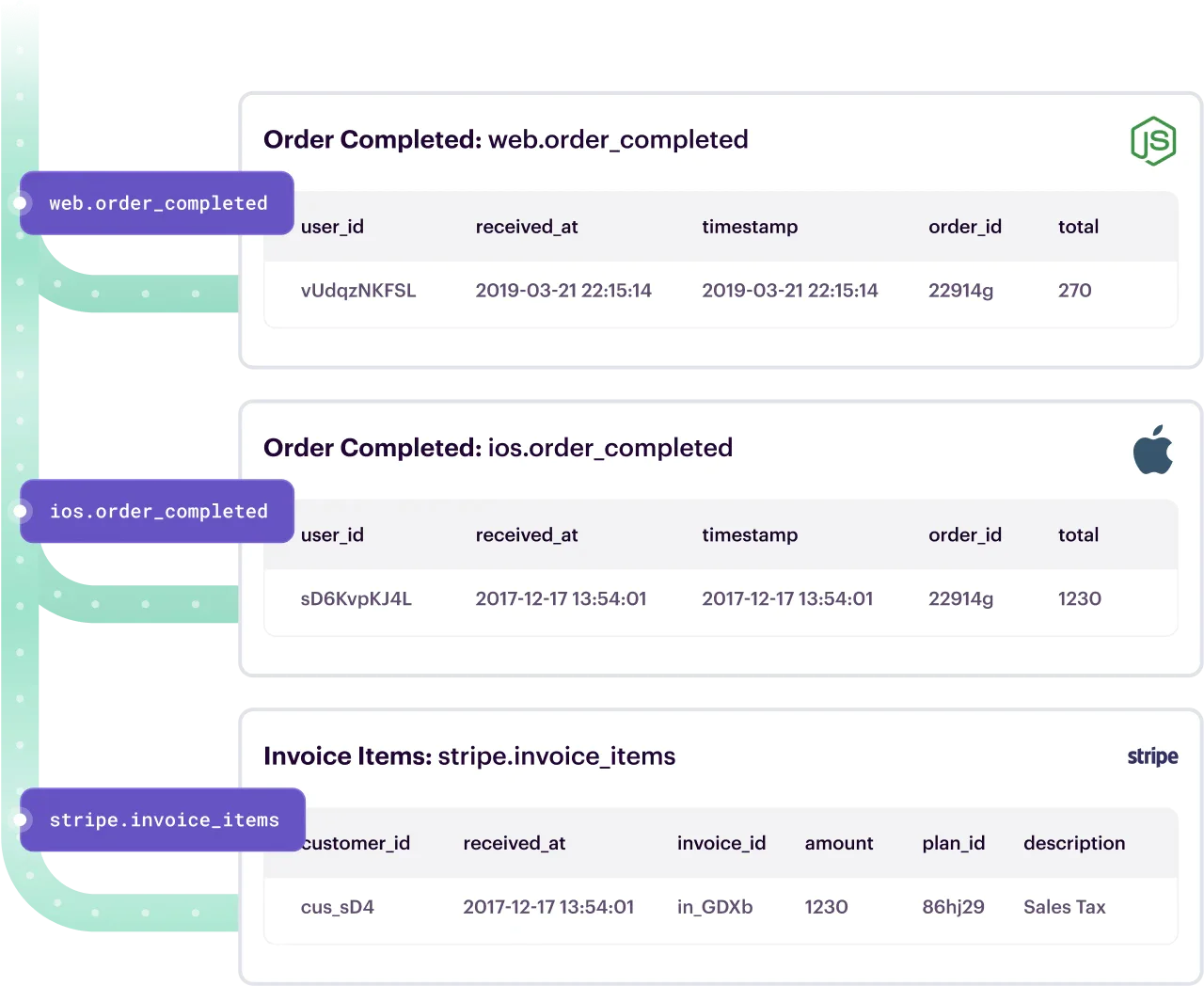

Instead of only having access to your data in individual sources, a data warehouse will funnel all your data from disparate sources (like transactional systems, relational databases, and operational databases) into one place. Once it’s in the warehouse, it’s accessible and usable across the business to get a holistic view of your customers. From there, you can analyze related data from different sources, make better predictions, and ultimately make better business decisions.

A single platform to collect, unify, and connect your customer data

There are a few ways to go about implementing a new data warehouse. It can be on-premise, or designed and maintained by your team at a physical location. Then there are cloud-based data warehouses, which live entirely online and don't require any physical hardware. Cloud data warehouse architecture makes it easier to implement and scale, and they’re typically less expensive than on-premise data warehouse systems. (Or, you can opt for a hybrid approach, which is a blend of both on-prem and cloud-based.)

Data warehouses integrate, organize, and store vast amounts of structured or semi-structured data. This is done through a process called ETL, or Extract, Transform, and Load. Here’s a quick rundown of how that works:

Data is ingested from various different sources and databases (e.g., spreadsheets, CRMs, etc.).

After data is extracted, it’s transformed to ensure it's both consistent (e.g., standardized naming conventions), and compatible with its target system (i.e., the data warehouse, which would have a relational schema design).

Data is then loaded into the warehouse, usually via batch processing (at timed intervals). You could also load data in real-time, but not on a 24/7 basis (as that could degrade query performance and hinder analysis).

Let’s go over some of the main components of a data warehouse.

The staging area is where business data is cleaned and organized before it’s loaded into the data warehouse. This is where you ensure that the data entering the warehouse is of the highest quality, free from errors and inconsistencies.

The staging area is crucial because the quality of your data directly impacts the insights derived from it. Poor data quality can result in unreliable analysis and misguided decision-making, which can have detrimental effects on your business.

Sandboxes are secure playgrounds where data scientists and analysts can experiment with data without affecting the central data warehouse. They offer a risk-free environment for data exploration, fostering a culture of innovation and experimentation.

In a sandbox, data professionals can test hypotheses, try out different algorithms, and uncover valuable insights, all without affecting the integrity of the primary data. This freedom to experiment can lead to discovering novel solutions and strategies to drive business growth.

The hub-and-spoke model is a type of data warehouse architecture where the hub is the main data warehouse, and the spokes are data marts.

Hub-and-spoke data warehouses quickly scale as you can add new spokes as your business grows and data needs to evolve. This structure also makes data more accessible since each department has its own data mart for easy analysis.

Metadata is data about data. It provides information about the source, format, and characteristics of the data in the warehouse. This information is invaluable for businesses as it enables them to understand their data better and ensure accurate and effective analysis.

You should consider a data warehouse if you want to want to create a central repository for all your customer and business data, gain greater insight by querying data directly via SQL, and democratize data between teams by allowing simultaneous access to the same dataset.

Now you know the benefits of a data warehouse—but how do you go about picking one? Consider these factors when figuring out which data warehouse will best suit your business needs.

There are three types of data that you might want to store for your business: structured, unstructured, and semi-structured. Most data warehouses support structured and semi-structured data management, but unstructured data is a better fit for data lakes.

Structured data is quantifiable data that can be organized neatly into rows and columns (e.g., sales records or customer contacts).

Unstructured data is data that can’t be easily managed and analyzed. Think written content (like blog posts or answers to open-ended survey questions), images, videos, audio files, and PDFs. If you’re looking to store purely unstructured data, you should consider a data lake instead of a data warehouse.

Semi-structured data is a mix of structured and unstructured data. Take an email, for example. The content of that email is unstructured, but there are quantifiable aspects to the email, such as who sent it, when they sent it, when it was opened, etc. Similarly, an image itself is unstructured, but you also often have access to structured data like the time the photo was taken, device type, photo size, geotags, etc.

If semi-structured data is important to you, BigQuery and Snowflake are two data warehouses known for having the best infrastructure to support storage and queries for semi-structured data.

Most data warehouses typically allow you to store massive amounts of data without much overhead cost. You probably won’t need more than what they offer, especially if analytics is the primary use case.

However, you’ll want to consider how a particular warehouse scales data storage during times of demand. For example, Amazon Redshift will require you to manually add more nodes (the basic structures in data warehousing that store data and execute queries) when you need more storage and computing power. On the other hand, Snowflake offers an auto-scale function that adds and removes clusters of nodes dynamically as needed.

The performance of a data warehouse refers to how fast your queries can run and how you maintain that speed in times of high demand. As you can imagine, scaling for performance and data storage are closely connected. Like storage, performance will increase as you scale up the nodes in your warehouse.

These days, speed is a non-issue. Every warehouse is about as fast as the others. What you really want to consider in regards to performance is how much control you want over your speed.

Similar to how a data warehouse’s storage scales, you can add and remove nodes for faster queries. For some warehouses, like Redshift, you need to do that manually, but you’ll be able to tune it as precisely as you like. For others, like Snowflake, it will happen automatically for a hands-off experience.

You likely want your engineers focused on building and maintaining your products instead of worrying about ETL pipelines and day-to-day management of your warehouse—especially if you have a small team. In that case, you’ll want a data warehouse that is self-optimizing like BigQuery, Snowflake, or IBM Db2.

However, by maintaining your warehouse manually, experienced data warehouse architects can have greater control and flexibility to optimize it precisely for your company’s needs. If you want this level of control over your warehouse’s performance and cost, Redshift and PostgreSQL are your best options.

Consider using a data warehouse that is within the ecosystem of the applications you already use. For example, Azure Synapse Analytics is in the ecosystem of Microsoft products, Redshift within AWS, and BigQuery within the Google Cloud ecosystem. This will simplify implementation since you already have an infrastructure in place.

Otherwise, you’ll need your engineers to develop multiple custom ETL pipelines to get your data where it needs to be. You may still need to write a custom ETL to get data into your warehouse from certain data sources, but the goal is to minimize that work.

Many factors go into data warehouse pricing, including storage, warehouse size, run time, and queries. For Redshift, you pay per hour based on nodes or per bytes scanned. BigQuery, on the other hand, has both a flat-rate model and a per-query model. Snowflake, IBM Db2, and Azure are all based on storage and compute time.

Ultimately, you want to choose the data warehouse that will do what you need it to do, not just choose the cheapest option.

For companies with a limited budget, PostgreSQL is a great free option and still has plenty of features. When you’re ready to upgrade, it’s easy to switch data warehouses, especially if you’re using a customer data platform like Segment that can communicate between the two warehouses seamlessly.

You should have a good idea of what you need based on the factors above. Here’s how the top data warehouses on the market compare.

(All the above vendors integrate with Segment.)

As we look to the future, data warehouses are set to become even more central to business intelligence. Key trends include:

Cloud-based warehousing

Integration with data lakes

Real-time data analytics

Increased data privacy policies

Advanced AI and machine learning capabilities

Using the data warehouse as a single source of truth and data activation, resulting in leaner tech stacks.

Data warehouses are poised to not only store and organize data but also facilitate advanced analytics, promote data sharing, and help businesses adapt to market dynamics. They will become a comprehensive solution for all data-related needs to drive business growth.

Twilio Segment has pre-built integrations with warehouses like Snowflake, Redshift, Postgres, Azure SQL, BigQuery, and IBM Db2, and is able to load all of your customer data (and profiles) to your cloud data warehouse in just a few minutes.

Businesses also have a fine-grained control on the sources, collections, and properties they choose to sync with their warehouse. Segment loads data into the warehouse in bulk at preset intervals, which can range from once a day to up to 24 times a day, depending on your preferences and plan tier.

Segment stores data in relational schemas, which are optimized for easy querying, cost, and performance. (You can learn more about warehouse schemas here.)

And with reverse ETL, businesses are also able to activate data in their warehouses with speed and ease (e.g., sending enriched customer data from online and offline interactions to ad destinations for highly personalized targeting).

A data warehouse is a structured repository of cleaned and transformed data, ideal for reporting and analysis. On the other hand, a data lake is a vast reservoir that stores raw, unprocessed data in its native format.

The five critical components of a data warehouse are:

Data sources that provide the raw data

ETL tools to extract, transform, and load the data

Storage where the data is housed

A query engine to run analytical queries

End-users or business intelligence tools that utilize the data for insights

A database is a system that stores data, while a data warehouse is a type of database specifically designed for data analysis and reporting. A data warehouse aggregates data from multiple disparate sources and transforms it into a format suitable for business intelligence purposes.

A data warehouse is a large, centralized repository of data that serves the entire organization. In comparison, a data mart is a smaller, more focused subset of a data warehouse tailored to meet the specific needs of a particular business unit or team.