Data Cleaning

Learn the importance of data cleaning and its best practices.


Data cleaning – sometimes referred to as data cleansing or data scrubbing – is the process of identifying, modifying, or removing incorrect, duplicate, incomplete, invalid, or irrelevant data within a dataset. It helps ensure that data is correct, usable, and ready for analysis.


Cleaning data is an absolute necessity for businesses because it ensures that the information driving your decision making is correct. Breaking down the benefits shows why: data cleaning improves everything from data quality to the ease of data integration.

When we refer to high-quality data, we mean data that is accurate, up to date, and without any redundancies or inconsistencies that can skew analysis. Data cleaning plays a crucial role in ensuring this by:

Removing duplicate data

Standardizing naming conventions

Identifying outliers

Ensuring the timeliness of data (e.g., it’s updated in real time)

Making data widely accessible (and ensuring the right privacy and security protections are in place, depending on the risk level)

And more!

Data is consistent when it follows uniform formatting and conventions within a dataset. Consistency also means that data is aligned across multiple systems and databases.

One example would be a database that includes student grades for a class. If the data were consistent, all those values would be in the same format (e.g., all letter grades such as “A, B, C” rather than a mix of letter grades and percentages such as “100%, 98%”).
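A consistency check like this can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the `LETTER` and `PERCENT` patterns and the helper names are assumptions, and a real grading scheme may need different rules.

```python
import re
from collections import Counter

# Hypothetical format detectors; adjust the patterns to your own grading scheme.
LETTER = re.compile(r"^[A-F][+-]?$")
PERCENT = re.compile(r"^\d{1,3}(\.\d+)?%$")


def detect_format(value: str) -> str:
    """Classify a raw grade string as 'letter', 'percent', or 'unknown'."""
    value = value.strip()
    if LETTER.match(value):
        return "letter"
    if PERCENT.match(value):
        return "percent"
    return "unknown"


def format_counts(values: list[str]) -> Counter:
    """Count how many values fall into each detected format."""
    return Counter(detect_format(v) for v in values)


def is_consistent(values: list[str]) -> bool:
    """A column is consistent when every value shares one known format."""
    counts = format_counts(values)
    return len(counts) == 1 and "unknown" not in counts


grades = ["A", "B+", "98%", "C", "100%"]
print(dict(format_counts(grades)))  # {'letter': 3, 'percent': 2}
print(is_consistent(grades))        # False
```

Reporting the count per format, rather than only a yes/no answer, makes it easier to see which convention is dominant before deciding how to standardize.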

Combining data from various sources isn’t as straightforward as it sounds. Data comes in different formats and can be named or organized differently between systems, so it’s easy for the same event to be counted twice (or for certain values to go missing) when all this data comes together.

Data cleaning plays a crucial role in integration. It flags and fixes the issues that would otherwise make data unreliable.

To clean the raw data you collect—and keep it clean—start with these five techniques.

Automation is key for scaling your data cleaning process—otherwise, you’d have to manually scan every single piece of data you collect.

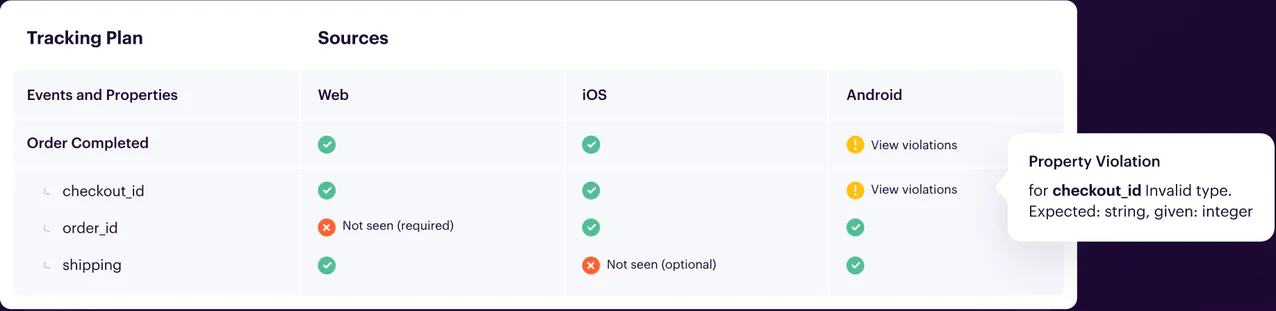

If you’ve connected your tracking plan to a data source via your CDP, your CDP will be able to identify non-conforming data. Protocols, a premium feature on the Segment CDP, runs automatic validation based on the plan. It flags violations when:

A required property is missing.

A property value’s data type is invalid.

A property value does not pass applied conditional filtering.

Next, set controls that tell your CDP how to handle non-conforming data. You might ask your CDP to alert you of violations or to block non-conforming events. Blocked data won’t be sent to any tool downstream until your team manually approves it.

For example, suppose the checkout ID, order ID, and shipping details are required to complete an order on a website. The CDP flags any of these that are missing, as well as properties that don’t conform to your specified format.
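The three violation types can be illustrated with a small validator. This is a sketch under stated assumptions, not the way Protocols or any CDP implements validation: `PropertyRule`, `ORDER_COMPLETED_PLAN`, and `validate` are hypothetical names, and treating `shipping` as a non-negative number is an illustrative choice.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class PropertyRule:
    """One property in a hypothetical tracking plan."""
    type: type
    required: bool = False
    condition: Optional[Callable[[Any], bool]] = None  # extra check on the value


# Assumed plan for an "Order Completed" event; names and rules are illustrative.
ORDER_COMPLETED_PLAN = {
    "checkout_id": PropertyRule(str, required=True),
    "order_id": PropertyRule(str, required=True),
    "shipping": PropertyRule(float, required=True, condition=lambda v: v >= 0),
    "coupon": PropertyRule(str),
}


def validate(properties: dict, plan: dict) -> list[str]:
    """Return readable violations; an empty list means the event conforms."""
    violations = []
    for name, rule in plan.items():
        if name not in properties:
            if rule.required:
                violations.append(f"missing required property: {name}")
            continue
        value = properties[name]
        if not isinstance(value, rule.type):
            violations.append(f"invalid type for {name}: expected {rule.type.__name__}")
        elif rule.condition is not None and not rule.condition(value):
            violations.append(f"value for {name} failed its condition")
    return violations


event = {"checkout_id": "chk_123", "shipping": "free"}
for violation in validate(event, ORDER_COMPLETED_PLAN):
    print(violation)
# missing required property: order_id
# invalid type for shipping: expected float
```

Returning a list of violations instead of raising on the first one lets the caller decide whether to alert, block, or simply log the event.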

Pro tip: Use APIs to connect your data cleaning tools and make your QA automation more powerful. You can connect your CDP to a messaging tool like Slack, so you receive alerts of violations. You can also link to a business intelligence or data visualization tool that lets you create custom dashboards for analyzing violation trends, identifying outliers, and determining the causes of common violations.

Through identity resolution, your CDP can create customer profiles that tie actions across multiple channels to one and the same person. You can create identity rules that guide your CDP’s algorithm, which then decides whether to merge incoming information into a single profile or to remove one or more pieces of duplicate data.
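To make the merging idea concrete, here is a minimal sketch that groups events sharing any identifier into one profile using a union-find structure. It is an illustration only and not the algorithm any particular CDP uses; the `resolve_identities` function, the identifier keys, and the sample events are all assumptions.

```python
from collections import defaultdict


def resolve_identities(events: list[dict], id_keys: tuple[str, ...]) -> list[list[dict]]:
    """Group events that share any identifier value into one profile."""
    parent = list(range(len(events)))

    def find(i: int) -> int:
        # Follow parent links to the group's root, shortening the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    first_seen: dict[tuple[str, str], int] = {}
    for index, event in enumerate(events):
        for key in id_keys:
            value = event.get(key)
            if value is None:
                continue
            identifier = (key, value)
            if identifier in first_seen:
                # Same identifier seen before: merge the two groups.
                parent[find(index)] = find(first_seen[identifier])
            else:
                first_seen[identifier] = index

    profiles = defaultdict(list)
    for index, event in enumerate(events):
        profiles[find(index)].append(event)
    return list(profiles.values())


events = [
    {"channel": "ad", "anonymous_id": "anon-1"},
    {"channel": "web", "anonymous_id": "anon-1", "email": "sam@example.com"},
    {"channel": "store", "email": "sam@example.com"},
    {"channel": "web", "anonymous_id": "anon-2"},
]
profiles = resolve_identities(events, id_keys=("anonymous_id", "email"))
print(len(profiles))  # 2
```

The ad click and the in-store purchase share no identifier directly, but both connect to the web visit, so all three land in the same profile.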

Use data transformation to fix data with structural errors, such as typos, incorrect labels, or inconsistent formatting. For example, you might change an event name from completed_order to Order Completed.

Protocols’ Transformations tool lets you modify data to conform with either your tracking plan or your destination tool’s specs. With one click, you can apply the transformation to a source or destination, so you don’t have to scour your code and manually change the data value in question.
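The rename itself is simple to picture in code. The following is a minimal sketch in plain Python, not how Protocols implements Transformations; the `EVENT_RENAMES` mapping and the `transform_event` helper are hypothetical.

```python
# Hypothetical mapping from legacy event names to the names in the tracking plan.
EVENT_RENAMES = {
    "completed_order": "Order Completed",
    "added_product": "Product Added",
}


def transform_event(event: dict) -> dict:
    """Return a copy of the event with its name rewritten to the planned name."""
    renamed = dict(event)
    renamed["event"] = EVENT_RENAMES.get(event["event"], event["event"])
    return renamed


legacy = {"event": "completed_order", "properties": {"order_id": "ord_1"}}
print(transform_event(legacy)["event"])  # Order Completed
```

Keeping the mapping in one place means the tracking code that emits the legacy name does not need to change for downstream tools to receive the planned name.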

Audit every piece of data and ensure it has a use. This ties back to the business goals laid out in your tracking plan. If a user trait, event, or property isn't included in the plan, it might be because tracking it does not help you achieve your objectives.

You can ask your CDP to alert you to irrelevant or unplanned data, so you can decide on an appropriate action.
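As a rough sketch of what such a check does, the snippet below compares an incoming event against a plan and reports anything the plan doesn’t mention. The `TRACKING_PLAN` contents and the `find_unplanned` helper are assumptions made for illustration.

```python
# Hypothetical tracking plan: event name -> set of planned property names.
TRACKING_PLAN = {
    "Product Added": {"product_id", "category", "brand", "price"},
    "Order Completed": {"order_id", "checkout_id", "total"},
}


def find_unplanned(event_name: str, properties: dict) -> dict:
    """Report whether the event is unplanned and which properties are not in the plan."""
    planned = TRACKING_PLAN.get(event_name)
    if planned is None:
        return {"unplanned_event": True, "unplanned_properties": sorted(properties)}
    return {
        "unplanned_event": False,
        "unplanned_properties": sorted(set(properties) - planned),
    }


print(find_unplanned("Product Added", {"product_id": "p1", "color": "red"}))
# {'unplanned_event': False, 'unplanned_properties': ['color']}
```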

While your tracking plan serves as your data collection framework, keep in mind that it’s a living document. As your company grows and changes, so should your tracking plan.

Audit your plan to ensure it remains relevant to your business goals and customer journey. You can do this on a schedule—like every six months—or every time you implement a major business change, such as launching a product or targeting a new consumer segment.

Say you used to offer a freemium version of your SaaS product. However, you’re now eliminating the free plan and starting with a basic plan instead. You no longer have to track conversion rates from free to paid plans—and your tracking plan needs to reflect this.

There are three basic types of data quality problems that require data cleaning. And organizations with massive amounts of data and poor hygiene usually have all three.

Invalid data is data that is generated incorrectly. These mistakes can happen when your customer enters data on a form, or they can stem from your end, particularly the way you name data events and properties.

Sample scenarios:

Asking the wrong question: Labeling a field on a form as “kg” when you’re actually trying to measure pounds.

Not defining what makes data invalid: Not specifying types of data (ex: numeric values, strings), a range of acceptable data (ex: only 19 years of age and above), or permitted values (ex: Gmail address not allowed if you want to get a company email address).

Incorrectly labeling data: Placing a product in the wrong category (ex: bags under “apparel” instead of “accessories,” which invalidates your data if you’re tracking user actions related to product categories).

Using nearly identical names for different data events: If marketing uses the event name FormSubmitted for an ebook download while sales uses formSubmitted for a free demo request, it will be easy to confuse the two during analysis.
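Near-identical names like these can be caught with a simple audit of your event names. The sketch below is one possible approach, assuming that names differing only in casing or separators should be treated as collisions; `normalize` and `find_collisions` are hypothetical helpers.

```python
import re
from collections import defaultdict


def normalize(name: str) -> str:
    """Reduce a name to lowercase letters and digits so casing and separators are ignored."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def find_collisions(event_names: list[str]) -> list[list[str]]:
    """Return groups of distinct names that normalize to the same key."""
    groups = defaultdict(set)
    for name in event_names:
        groups[normalize(name)].add(name)
    return [sorted(names) for names in groups.values() if len(names) > 1]


names = ["FormSubmitted", "formSubmitted", "Order Completed", "order_completed", "Product Added"]
print(find_collisions(names))
# [['FormSubmitted', 'formSubmitted'], ['Order Completed', 'order_completed']]
```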

Duplicate data is data that is collected more than once, either through one channel or across various channels. This can often happen when you have not unified data in a single customer view.

For instance, a person who clicked your Facebook ad might also visit your site’s promo page and buy a product in your brick-and-mortar store. Without a single customer view, you will not recognize that all three interactions came from one and the same person.

Sample scenarios:

Customer is repeatedly asked to provide the same information: Customer downloads an ebook and needs to fill in their email address, company name, and company role. Next time they download another asset on the site, they’re asked for the same info because the site does not recognize them.

Data comes in varied formats from different sources: The same person might use their nickname on one channel and their full name on another. Without unifying the data, you’ll end up with two customer profiles that share some of the same details.

Data is incomplete when it has missing values. Sometimes, this isn’t within your control; the customer might neglect or decline to provide certain information, or you might experience a technical glitch like data import issues. But you can inadvertently cause incomplete data collection by not clearly defining the details you need.

Sample scenarios:

Setting vague parameters for a form: You can wind up with incomplete data if you have a single field labeled "address," as opposed to discrete fields with specified data types or permitted values for the street name, house/unit number, city, state, country, and zip code.

Not identifying which data is required: You might not mark the zip code as a required property for completing checkout, and the customer might forget to provide that detail.

Not defining what data you need to meet your business goals: Like the analysts in the Adobe ad, you might fail to collect certain user traits or contextual information for data events.

As we’ve seen, data quality problems tend to stem from the way you gather information in the first place. Here’s how you can improve your data collection process to enhance the quality of your customer information:

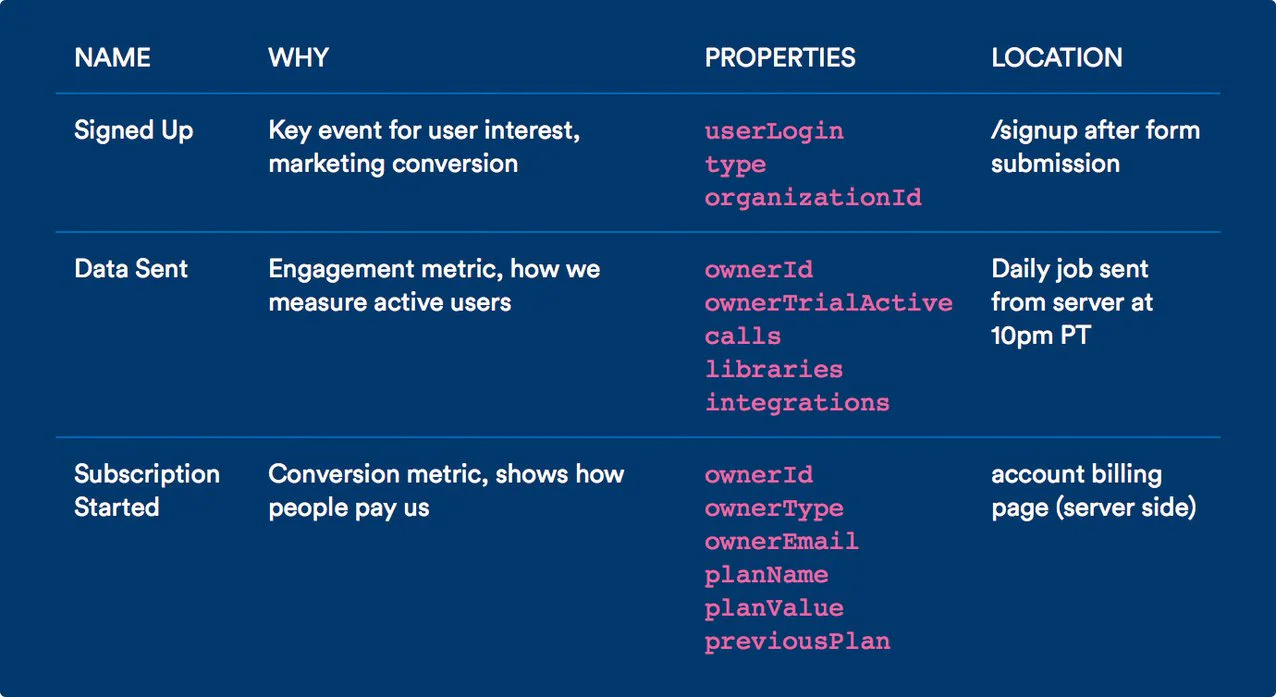

A data tracking plan lays out your organization's standards for tracking data. For the plan to be useful, all teams in your organization need to refer to it as a common source of truth.

Your data tracking plan can come in the form of a spreadsheet that identifies:

Events (i.e., user actions) that you will track, along with a description of each event and your reason for tracking it

Properties to track under each event, including which ones are required and which are optional

Data types and permitted values for properties

Where and when each event is tracked

Where events should be located in your code base

Basic components of a data tracking plan.

Once your tracking plan is complete, you can upload it to your customer data platform (CDP) and apply it to your sources.

If you don’t want to create a plan from scratch or need inspiration on how to build one, check out this tracking plan template, which suggests plans for various verticals.

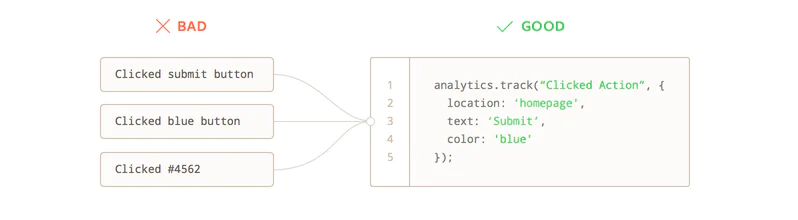

We recommend these best practices for naming your data events and properties:

Choose standards for capitalization: Ex: Title Case for event names and snake_case for property names.

Pick an event name structure: We recommend using an Object + Action structure. Ex: Product Added.

Don’t create event names dynamically: Avoid including dynamic values, such as dates, in event names. Ex: Product Added (11-12-2021).

Don’t create events to track properties: Check if a value you’re adding to an event name could be a property or property value instead. Ex: Instead of the event name Under Armour Shoes Added, you could use properties, such as "category": "Shoes" and "brand":"Under Armour".

Don’t create property keys dynamically: Vague property names make it difficult to pinpoint which actions and values you’re tracking. For example, in the key-value pairs "category_1":"true" and "category_2":"false", you wouldn’t be able to tell at a glance exactly which product categories they refer to.

Here’s what these data naming best practices would look like in a script:
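As a minimal sketch of these conventions in Python: the `track` function is a hypothetical stand-in for an analytics SDK call (it only builds a payload), and the two regular expressions are assumed checks that cover capitalization only, so they cannot tell whether a value belongs in a property instead of the event name.

```python
import re

# Assumed convention checks; they verify casing only.
EVENT_NAME = re.compile(r"^[A-Z][a-z]+( [A-Z][a-z]+)+$")  # Title Case, two or more words
PROPERTY_KEY = re.compile(r"^[a-z]+(_[a-z0-9]+)*$")       # snake_case


def track(event: str, properties: dict) -> dict:
    """Hypothetical stand-in for an analytics SDK call; it only builds the payload."""
    return {"event": event, "properties": properties}


# Object + Action event name in Title Case, with snake_case property keys.
# Brand and category are properties rather than part of the event name,
# and nothing dynamic (such as a date) appears in the event name or keys.
payload = track(
    "Product Added",
    {
        "category": "Shoes",
        "brand": "Under Armour",
        "product_id": "sku-123",   # illustrative value
        "added_at": "2021-11-12",  # the date is a property value, not part of the name
    },
)

print(bool(EVENT_NAME.match(payload["event"])))                    # True
print(all(PROPERTY_KEY.match(key) for key in payload["properties"]))  # True
print(bool(EVENT_NAME.match("Product Added (11-12-2021)")))        # False
```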

Examine all customer interactions where you collect data. Spot and fix errors in fields that:

Don’t adhere to the formats specified in your tracking plan

Don’t set parameters for valid data inputs, if such are needed

Ask the wrong questions

Ask vague questions

Protocols helps businesses automate several workflows and processes that help maintain data quality at scale. First, Protocols helps organizations adhere to their predefined tracking plan, proactively blocking bad data and transforming any events that don’t adhere to their standardized naming conventions.

Protocols also offers automatic data validation, so teams aren’t bogged down by manually testing their tracking code.

Thousands of businesses have found success with Protocols, like Typeform, which was able to reduce the number of their tracked events by 75%.

Learn more about how Protocols can help you protect your data integrity.

If data isn’t properly cleaned, it can’t be analyzed accurately. Data cleaning ensures that data coming in from multiple sources, in multiple formats, is properly formatted, transformed, and integrated. That includes flagging duplicate entries or missing values, standardizing naming conventions, and more. When data isn’t cleaned, it opens the door to inaccurate or inconsistent reporting and misguided insights.

Data cleaning is the process of correcting inconsistencies and inaccuracies in data sets to ensure that data is correct, complete, and up to date. Data transformation is the process of aggregating or reformatting data to ensure it's compatible with other data sets and ready for analysis.

The best data cleaning techniques will depend on several factors, from the types of data you’re working with to your overall objectives. But a few include:

Data Standardization: Transforming data to adhere to a consistent format (e.g., “USA” vs. “United States”).

Identify & Remove Duplicate Data: Use algorithms to identify and remove duplicate records or entries in the data set.

Automated Data Cleaning Tools: Use data cleaning software or libraries to automate and streamline many data cleaning tasks.
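The first two techniques can be combined in a short sketch. The alias table, the helper names, and the choice of `email` plus `country` as the duplicate key are all assumptions for illustration; real data usually needs a larger lookup and a more careful definition of what counts as a duplicate.

```python
# Hypothetical lookup of known variants; extend it for your own data.
COUNTRY_ALIASES = {
    "usa": "United States",
    "us": "United States",
    "u.s.a.": "United States",
    "united states": "United States",
}


def standardize_country(raw: str) -> str:
    """Map known country variants to one canonical spelling."""
    cleaned = raw.strip()
    return COUNTRY_ALIASES.get(cleaned.lower(), cleaned)


def deduplicate(records: list[dict], key_fields: tuple[str, ...]) -> list[dict]:
    """Keep the first record for each combination of key fields."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(record[field] for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


records = [
    {"email": "sam@example.com", "country": "USA"},
    {"email": "sam@example.com", "country": "United States"},
    {"email": "lee@example.com", "country": "Canada"},
]
standardized = [{**r, "country": standardize_country(r["country"])} for r in records]
cleaned = deduplicate(standardized, key_fields=("email", "country"))
print(len(cleaned))  # 2
```

Standardizing before deduplicating matters here: the first two records only become recognizable as duplicates once “USA” and “United States” share one spelling.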

Data cleaning is focused on identifying and fixing issues within raw data to ensure its quality and accuracy. Data validation is focused on confirming the data meets predefined criteria and standards.

Sometimes people treat “data wrangling” and “data cleaning” as synonyms, but they can refer to different things. Data cleaning is an aspect of data wrangling, which is a more overarching process that includes data collection, transformation, integration, and enrichment.