4 Data Integration Techniques with Pros and Cons

Discover the top data integration techniques, best practices and methods for seamless data integration.


Data integration can quickly become complex as you add more sources, destinations, and data types. Too often, data ends up in silos that are difficult to break down, and the flow of data becomes overly reliant on manual intervention from engineers or analysts.

The best way to approach data integration will depend on your specific needs, bandwidth, and preferences. Below are the top four techniques to consider when deciding how to handle data integration at scale.

True to its name, application-based data integration is when data from different tools and systems is integrated at the application layer. APIs (application programming interfaces) play a key role in facilitating the communication and data transfer between these different software systems and apps.

Application-based data integration offers a high degree of precision and customization, letting businesses tailor how data is integrated between systems (e.g., controlling data flows and transformations). This method can also leverage each application’s existing security measures to protect data. It’s also scalable: businesses can easily swap out systems or add new ones.
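As a rough illustration of the pull, transform, and push pattern described above, here is a minimal Python sketch. The functions `fetch_crm_contacts`, `to_email_tool_format`, and `sync`, along with the field names, are hypothetical. In a real integration, the fetch and push steps would be HTTP calls to each application’s API; here they are in-memory stand-ins so the example runs offline.

```python
from typing import Callable, Iterable


def fetch_crm_contacts() -> list[dict]:
    """Stand-in for a GET request to a source application's API (hypothetical data)."""
    return [
        {"id": 1, "Email": "ADA@EXAMPLE.COM", "plan": "pro"},
        {"id": 2, "Email": "grace@example.com", "plan": "free"},
    ]


def to_email_tool_format(contact: dict) -> dict:
    """Transformation step: rename fields and normalize values for the destination."""
    return {"external_id": str(contact["id"]), "email": contact["Email"].lower()}


def sync(
    fetch: Callable[[], Iterable[dict]],
    transform: Callable[[dict], dict],
    push: Callable[[dict], None],
    keep: Callable[[dict], bool] = lambda record: True,
) -> int:
    """Pull records from one app, filter and transform them, and push them to another.

    Returns the number of records sent.
    """
    sent = 0
    for record in fetch():
        if keep(record):  # control which data flows to the destination
            push(transform(record))
            sent += 1
    return sent


# Stand-in for the destination application's API: collect pushed records in a list.
destination: list[dict] = []
count = sync(
    fetch_crm_contacts,
    to_email_tool_format,
    destination.append,
    keep=lambda contact: contact["plan"] == "pro",  # only sync paying customers
)
print(count, destination)
```

Because the source and destination are just functions passed to `sync`, swapping one system for another means supplying a different fetch or push function, which reflects the flexibility described above.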

APIs have come a long way over the years, but they aren’t as sophisticated as some alternatives. If you’re designing your own API, expect your teams to put significant work into deployment and integration. These solutions aren’t set-and-forget, either: ongoing maintenance is required to keep results consistent, especially as the source systems change over time.

Data virtualization gives enterprises a virtual, unified view of data held in separate stores, such as data warehouses, without transferring the data out of those stores.

Because data virtualization doesn’t physically move data out of its source systems, you can see and work with that data in real time. Avoiding a copy also makes it more cost-effective than creating a separate consolidated store. Data virtualization can also be appealing for security reasons: it typically offers centralized access and security features (ensuring only authorized users can access specific data sources or virtualized views of data).
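A minimal sketch of the idea using Python’s built-in `sqlite3` module: two attached in-memory databases stand in for separate source systems, and a view exposes a combined result computed at query time without copying any rows into a new store. This is a simplification under stated assumptions; dedicated virtualization platforms federate queries across different engines and add access controls, which this example does not attempt. The table and view names are hypothetical.

```python
import sqlite3

# One connection, two separate in-memory databases standing in for two source
# systems (assumption: real platforms federate across different engines).
conn = sqlite3.connect(":memory:")  # "main": hypothetical CRM store
conn.execute("ATTACH DATABASE ':memory:' AS billing")  # hypothetical billing store

conn.execute("CREATE TABLE main.customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO main.customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
conn.executemany(
    "INSERT INTO billing.invoices VALUES (?, ?)", [(1, 120.0), (1, 30.0), (2, 75.0)]
)

# The virtual layer: a view stores only the query, never a copy of the rows.
# TEMP is required because SQLite lets only temporary views reference
# objects in other attached databases.
conn.execute(
    """
    CREATE TEMP VIEW customer_revenue AS
    SELECT c.name AS name, SUM(i.amount) AS revenue
    FROM main.customers AS c
    JOIN billing.invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
    """
)

query = "SELECT name, revenue FROM customer_revenue ORDER BY name"
before = conn.execute(query).fetchall()

# A new row in a source shows up through the view at query time; nothing is re-copied.
conn.execute("INSERT INTO billing.invoices VALUES (2, 25.0)")
after = conn.execute(query).fetchall()

print(before)  # [('Ada', 150.0), ('Grace', 75.0)]
print(after)   # [('Ada', 150.0), ('Grace', 100.0)]
```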

Some downsides to using data virtualization include a complex implementation process and ongoing maintenance. If it isn’t set up correctly from the start, performance issues may derail any efforts to be more productive. Ensuring compatibility with all of the external sources you may want to use is also challenging.

Middleware sits between applications and systems and translates data between them. It is a top choice for accessing information in legacy systems.

Middleware is highly scalable and offers fast-growing companies a flexible solution with a centralized data management approach. Once integrated, it transfers data automatically and consistently, so you can count on the results, even when connecting to legacy systems.
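To make the translation role concrete, here is a hedged Python sketch of middleware that parses a fixed-width legacy record into JSON and delivers the same payload to several downstream systems. The record layout, the `Customer` fields, and the subscriber lists are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical legacy layout: (field, start, end) character offsets in a fixed-width record.
LEGACY_LAYOUT = [("customer_id", 0, 6), ("name", 6, 16), ("balance_cents", 16, 24)]


@dataclass
class Customer:
    customer_id: int
    name: str
    balance: float  # in currency units, converted from cents


def parse_legacy(line: str) -> Customer:
    """Translate one fixed-width legacy record into a structured object."""
    fields = {name: line[start:end].strip() for name, start, end in LEGACY_LAYOUT}
    return Customer(
        customer_id=int(fields["customer_id"]),
        name=fields["name"],
        balance=int(fields["balance_cents"]) / 100,
    )


def run_middleware(lines, subscribers):
    """Translate each record once, then deliver the same JSON payload to every subscriber."""
    for line in lines:
        payload = json.dumps(asdict(parse_legacy(line)))
        for deliver in subscribers:
            deliver(payload)


# Two hypothetical downstream systems that accept JSON.
crm_inbox: list[str] = []
warehouse_inbox: list[str] = []

legacy_record = "000042" + "Ada".ljust(10) + "00012550"
run_middleware([legacy_record], [crm_inbox.append, warehouse_inbox.append])
print(crm_inbox[0])  # {"customer_id": 42, "name": "Ada", "balance": 125.5}
```

Centralizing the translation in one place means each downstream system receives consistent data without needing to understand the legacy format itself.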

Middleware can be time-consuming for companies with limited internal resources, because it requires a knowledgeable developer to deploy and maintain it. Its limited functionality and learning curve may also be a turn-off for agile businesses.

Change Data Capture (CDC) tracks changes made to a database, then processes and replicates them in other systems to keep those systems in sync.

CDC offers companies a low-latency way to maintain data consistency between different systems. Because CDC replicates only the changes that occur in the source data, it minimizes data transfer volumes and reduces network bandwidth usage.
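The sketch below shows the core of log-based CDC under simplifying assumptions. The source exposes an ordered change log (production CDC tools typically read the database’s own transaction log, such as the PostgreSQL write-ahead log or the MySQL binlog), and the consumer keeps a checkpoint so each run applies only the events it has not yet seen. `ChangeEvent` and `apply_changes` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    seq: int             # position in the source's ordered change log
    op: str              # "insert", "update", or "delete"
    key: int             # primary key of the changed row
    row: Optional[dict]  # new row image; None for deletes


def apply_changes(replica: dict, log: list, checkpoint: int) -> int:
    """Apply only events newer than the checkpoint and return the new checkpoint.

    A real consumer would seek directly to the checkpoint instead of scanning.
    """
    for event in log:
        if event.seq <= checkpoint:
            continue  # already replicated on an earlier run
        if event.op == "delete":
            replica.pop(event.key, None)
        else:  # insert or update: upsert the new row image
            replica[event.key] = event.row
        checkpoint = event.seq
    return checkpoint


log = [
    ChangeEvent(1, "insert", 1, {"email": "ada@example.com"}),
    ChangeEvent(2, "insert", 2, {"email": "grace@example.com"}),
]
replica: dict = {}
checkpoint = apply_changes(replica, log, checkpoint=0)

# Later, two more changes land in the source; only those two are applied.
log += [
    ChangeEvent(3, "update", 1, {"email": "ada@newmail.example"}),
    ChangeEvent(4, "delete", 2, None),
]
checkpoint = apply_changes(replica, log, checkpoint)
print(checkpoint, replica)  # 4 {1: {'email': 'ada@newmail.example'}}
```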

Real-time processing requires real-time monitoring and tracking to ensure that every change is processed efficiently and completely. As your data needs grow and become more complex, your CDC solution will need to become more complex too. Monitoring the entire system infrastructure is often required to make sure no changes are missed or processed incorrectly.
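One simple form of this monitoring is checking the sequence numbers of processed changes for gaps and measuring how far the replica lags the source. This Python sketch assumes each change carries a sequence number that increases by exactly one per change; `check_replication` is a hypothetical helper, not part of any CDC product.

```python
def check_replication(processed_seqs: list[int], source_latest: int) -> dict:
    """Report missing sequence ranges and how far the replica trails the source.

    Assumes the source assigns consecutive sequence numbers to its changes.
    """
    ordered = sorted(set(processed_seqs))  # tolerate out-of-order or repeated delivery
    gaps = [(a + 1, b - 1) for a, b in zip(ordered, ordered[1:]) if b > a + 1]
    lag = source_latest - ordered[-1] if ordered else source_latest
    return {"gaps": gaps, "lag": lag}


report = check_replication([1, 2, 3, 6, 7, 9], source_latest=12)
print(report)  # {'gaps': [(4, 5), (8, 8)], 'lag': 3}
```

Any non-empty `gaps` list points to changes that were missed and need to be replayed, while a growing `lag` suggests the pipeline is falling behind the source.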

With so many data integration tools on the market, how do you decide between them? While it will ultimately come down to the needs and bandwidth of your business, there are a few important factors to consider, such as future growth, compatibility with the existing tools and systems in your tech stack, and data security.

All companies anticipate at least some growth, but the speed and magnitude of that growth are unique to every business. Will you be able to integrate data when faced with sudden spikes in volume?

Look for solutions that have a track record of meeting business needs in times of rapid growth or seasonal influxes (e.g., an e-commerce store during holiday periods).

Most businesses aim to access all of the data in their systems, including legacy tools. Unifying data only works if the solution is compatible with your existing data sources, so this should be a high priority when choosing a vendor.

Less comprehensive data integration solutions risk leaving some types of valuable data behind.

Every time data is collected, stored, or shared, there’s a risk of it being compromised. The data integration process involves many moving pieces, each posing a potential threat to your data security and privacy.

Vendors should have a well-established plan for risk mitigation at every step of the integration process and ongoing safeguards as new data is collected.

Segment uses a single API to collect and orchestrate the flow of data. With over 450 pre-built connectors to various tools and storage systems, businesses can seamlessly connect their tech stack and integrate their data at scale.

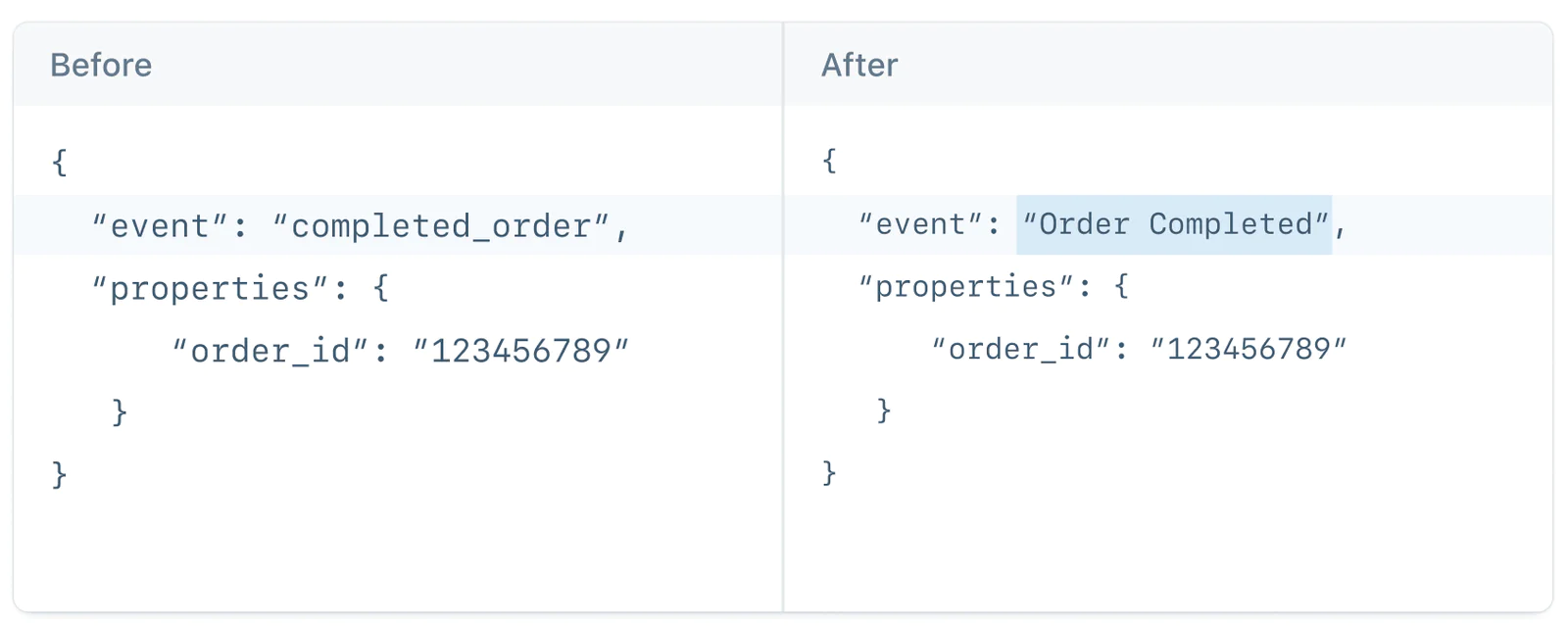

Segment offers libraries, SDKs, and the ability to create custom sources and destinations to collect data from anywhere – whether it’s client-side or server-side. On top of that, Segment offers automated and scalable data governance with Protocols to ensure data remains accurate and adheres to your tracking plan. With features like Transformations, you can also change or correct data as it flows from its source to target destination.
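The general idea behind validating events against a tracking plan and correcting them in flight can be sketched as follows. This is not Segment’s API or how Protocols and Transformations are implemented; the tracking plan format, event names, and functions are hypothetical and only illustrate the concept.

```python
# Hypothetical tracking plan: event name -> required properties and their expected types.
TRACKING_PLAN = {
    "Order Completed": {"order_id": str, "revenue": float},
    "Signed Up": {"plan": str},
}


def transform(event: dict) -> dict:
    """Hypothetical in-flight fix: rename a legacy event name to the planned one."""
    if event.get("event") == "order_completed":
        return {**event, "event": "Order Completed"}
    return event


def violations(event: dict) -> list[str]:
    """List every way an event departs from the tracking plan (empty list = valid)."""
    spec = TRACKING_PLAN.get(event.get("event"))
    if spec is None:
        return [f"unplanned event: {event.get('event')!r}"]
    props = event.get("properties", {})
    problems = []
    for name, expected_type in spec.items():
        if name not in props:
            problems.append(f"missing property: {name}")
        elif not isinstance(props[name], expected_type):
            problems.append(f"{name} should be {expected_type.__name__}")
    return problems


def route(events: list[dict]) -> tuple[list[dict], list[tuple]]:
    """Transform each event, then deliver valid ones and block the rest."""
    delivered, blocked = [], []
    for raw in events:
        event = transform(raw)
        problems = violations(event)
        if problems:
            blocked.append((event.get("event"), problems))
        else:
            delivered.append(event)
    return delivered, blocked


delivered, blocked = route([
    {"event": "order_completed", "properties": {"order_id": "A1", "revenue": 19.99}},
    {"event": "Signed Up", "properties": {}},
    {"event": "Page Viewd", "properties": {}},
])
print(len(delivered), blocked)
```

Running the transformation before validation lets a known, fixable naming mistake pass through corrected, while events that genuinely violate the plan are held back instead of reaching a destination.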

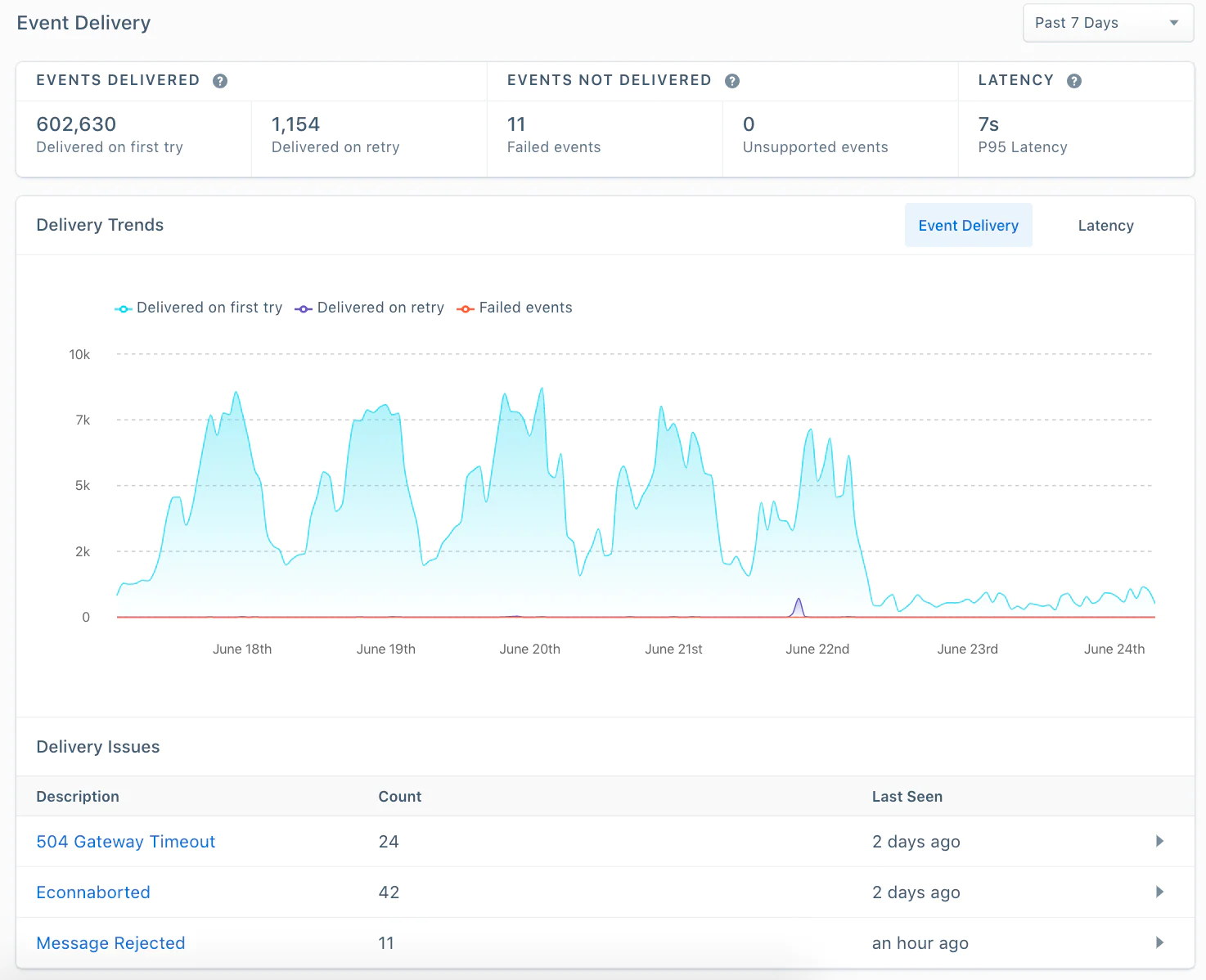

And with Event Delivery, you can identify any errors that may occur when delivering your data to its intended destination.


A few challenges associated with data integration include integrating multiple different types of data (e.g., structured, unstructured, semi-structured), real-time data integration, compatibility between different tools and systems, handling spikes in data volume, and ensuring data security and integrity at scale.

Data can’t be accurate if it’s incomplete. Integrating data is essential to have a clear and holistic picture of business performance and the customer experience. Certain data integration techniques also include error handling, like flagging missing or duplicate data to avoid inconsistencies or inaccuracies.
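As a minimal sketch of that kind of error handling, the Python function below flags duplicate keys and records with missing required fields. The function name, the record shape, and the choice to treat empty strings as missing are assumptions for illustration.

```python
from collections import Counter


def flag_records(records: list[dict], key: str, required: list[str]) -> dict:
    """Flag duplicate keys and records whose required fields are missing or empty."""
    counts = Counter(record.get(key) for record in records)
    duplicates = sorted(k for k, n in counts.items() if k is not None and n > 1)
    missing = []
    for index, record in enumerate(records):
        absent = [field for field in required if record.get(field) in (None, "")]
        if absent:
            missing.append((index, absent))
    return {"duplicates": duplicates, "missing": missing}


records = [
    {"id": "u1", "email": "ada@example.com"},
    {"id": "u2", "email": ""},
    {"id": "u1", "email": "ada@example.com"},
    {"id": "u3"},
]
report = flag_records(records, key="id", required=["email"])
print(report)  # {'duplicates': ['u1'], 'missing': [(1, ['email']), (3, ['email'])]}
```

Flagging rather than silently dropping records keeps the decision about how to resolve each problem with whoever owns the data.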

Your unique business needs will determine which types of data integration you use. Factors to weigh when choosing a new system include the following:

Type of data

Volume of data

Source characteristics and requirements

What type of connectors you’ll deploy

How you want data to appear on dashboards or reporting tools

The number and variety of different data sources

The time, money, and resources you’re able to invest

Additional considerations include industry best practices and any laws governing the type of data you will be integrating. You should also decide whether you want automation, AI, or machine learning features.

Segment uses a single API to collect, transform, and orchestrate the flow of data between sources and destinations. It also offers scalable data governance with Protocols, which is able to block bad data before it reaches a target destination, and even transform data mid-flow to ensure data accuracy at scale.
