The Impact of Machine Learning on Predictive Analytics

Understand popular ML models used for predictive analytics.

Imagine you have a massive dataset on your customers' interactions with your brand: how they navigate your website, which products they view, what they purchase, how often they engage with your emails, whether they follow you on Instagram or contact customer support. This wealth of data holds valuable insights that can be used to make business decisions and allow you to provide your customers with the best products or services.

This is where predictive analytics and machine learning enter the scene. Predictive analytics is a subdivision of advanced analytics that uses historical data, machine learning techniques, and statistical algorithms to forecast future results. It involves analyzing patterns in data to determine the likelihood of future outcomes, enabling businesses to make proactive, data-driven decisions.

Several industries, such as finance, healthcare, retail, and marketing, harness predictive analytics to gain insights and make informed decisions.

For instance, banks use predictive analytics to assess credit risk and detect fraud, while retailers employ it to forecast demand and optimize pricing strategies. Predictive analytics involves collecting and preparing relevant data, selecting appropriate machine learning models, training the models on historical data, and evaluating their performance before deploying them to predict new data.

Let’s detail how machine learning and predictive analytics work and how to use Predictions in Segment to harness these techniques with your data.

Machine learning plays an essential role in enhancing the efficacy and speed of predictive analytics. Here are some ways machine learning is used:

Feature Selection: Feature selection identifies the most relevant variables or features from a dataset that contribute significantly to the prediction task. Machine learning algorithms can automatically select the best features, reducing dimensionality and improving model performance.

Model Training: Machine learning models are trained on historical data, from which they learn the patterns and relationships between input features and target variables. During training, the model adjusts its internal parameters to reduce the difference between predicted and actual outcomes, so that it can make accurate predictions on new, unseen data.

Continuous Learning: Machine learning models can be designed to learn and adapt continuously as new data becomes available. This allows predictive analytics systems to stay up-to-date and improve their accuracy over time. Online learning or incremental learning techniques enable models to update their parameters in real time without retraining from scratch.

Anomaly Detection: Machine learning algorithms can detect anomalies or unusual patterns in data that deviate from expected behavior. Anomaly detection is valuable in predictive analytics for identifying rare events, such as fraud or equipment failures, allowing businesses to take proactive measures.
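As a rough illustration of the anomaly detection idea, here is a minimal sketch that flags values whose z-score (distance from the mean, in standard deviations) exceeds a threshold. The `find_anomalies` function, the 2.5 threshold, and the transaction amounts are all hypothetical; production systems typically use more robust methods, since a single extreme value also inflates the mean and standard deviation it is measured against.

```python
from statistics import mean, stdev

def find_anomalies(values, threshold=2.5):
    """Return (index, value) pairs whose absolute z-score exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical daily transaction amounts with one suspicious spike.
amounts = [52.0, 48.5, 50.1, 49.9, 51.3, 47.8, 50.6, 49.2, 50.9, 48.7, 51.1, 950.0]
print(find_anomalies(amounts))
```

Only the last transaction is flagged here, because it sits far outside the range of the other values.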

Machine learning isn’t a single technique. It is a collection of statistical techniques that allow for supervised or unsupervised learning from a dataset. Choosing a suitable model is therefore critical to the quality of the eventual predictions. Here are a few factors to consider when choosing your machine learning model:

Size of dataset. The size of the available dataset is a crucial factor in model selection. Some models, such as deep neural networks, need a large amount of labeled training data to achieve optimal performance. If the dataset is small, simpler models like decision trees or logistic regression are more appropriate to avoid overfitting.

Type of dataset. The type of dataset, whether numerical, categorical, or textual, affects the choice of machine learning model. For example, linear regression works well with numerical data, while decision trees can handle numerical and categorical features. Natural language processing tasks may require specialized models like recurrent neural networks (RNNs) or transformers.

Expected output. The predictive model's expected output also influences the model selection. If the goal is to predict a continuous numerical value, regression models like linear regression or support vector regression (SVR) are suitable. For binary classification problems, logistic regression or decision trees can be used. Multi-class classification tasks may benefit from models like random forests or neural networks.

Complexity of prediction. The complexity of the prediction task influences the choice of machine learning model. Simpler models, such as linear regression, are suitable for straightforward relationships between variables, while complex models like neural networks can handle intricate, nonlinear patterns in data.

Interoperability. Interoperability refers to a machine learning model's ability to integrate smoothly with existing systems and workflows. When selecting a model, consider its compatibility with the organization's existing programming languages, libraries, and platforms.

Exploratory data analysis helps you understand your dataset well enough to weigh these factors and choose a suitable model.

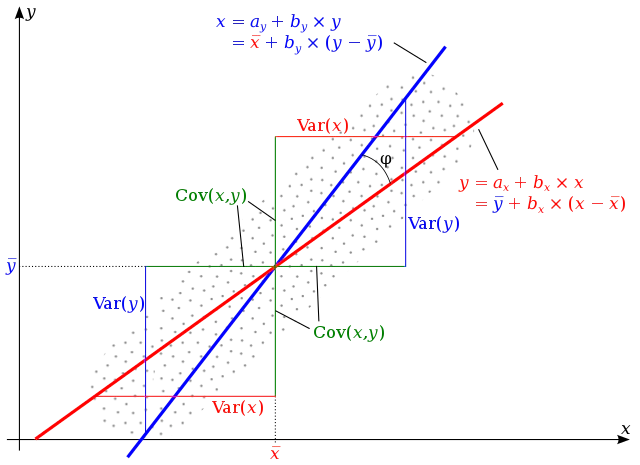

Linear regression is a straightforward machine learning model that assumes a linear relationship between input features and the target variable. It aims to find the best-fitting line that minimizes the sum of squared differences between the predicted and actual values. The equation for linear regression is:

y = β₀ + β₁x₁ + β₂x₂ + ... + βₙxₙ

where y is the target variable, x₁, x₂, ..., xₙ are the input features, β₀ is the intercept, and β₁, ..., βₙ are the coefficients that represent the weights assigned to each feature. The coefficients are learned during training, either in closed form with ordinary least squares or iteratively with optimization techniques like gradient descent. Linear regression is suitable for predicting continuous numerical values when the data has a clear linear trend.

(Source: Wikimedia)
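To make the linear regression equation concrete, here is a minimal sketch that fits y = β₀ + β₁x with a single feature using batch gradient descent on the mean squared error. The function name, learning rate, epoch count, and toy data are assumptions chosen for illustration; in practice you would normally rely on a library implementation.

```python
def fit_linear_regression(xs, ys, learning_rate=0.01, epochs=5000):
    """Fit y = b0 + b1 * x by batch gradient descent on mean squared error."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example under the current coefficients.
        errors = [(b0 + b1 * x) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to b0 and b1.
        grad_b0 = 2 * sum(errors) / n
        grad_b1 = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        b0 -= learning_rate * grad_b0
        b1 -= learning_rate * grad_b1
    return b0, b1

# Toy data generated from y = 3 + 2x, so the fit should recover those values.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [5.0, 7.0, 9.0, 11.0, 13.0]
b0, b1 = fit_linear_regression(xs, ys)
print(round(b0, 3), round(b1, 3))
```

Each pass nudges the coefficients in the direction that reduces the squared error, which is the "best-fitting line" objective described above.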

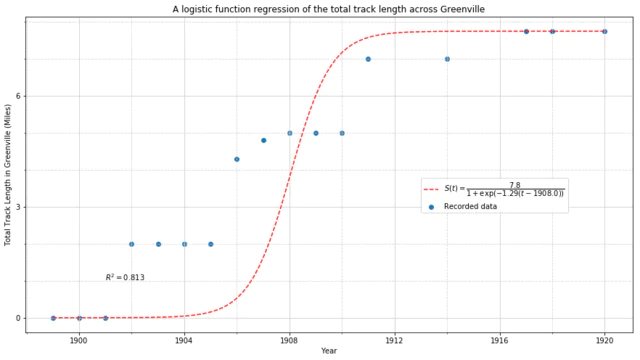

Logistic regression is used for binary classification problems where the target variable has two possible outcomes. It estimates the probability of an instance belonging to a particular class based on the input features. The logistic regression model applies the logistic function (sigmoid) to the weighted sum of input features:

P(y=1 | x) = 1 / (1 + e^-(β₀ + β₁x₁ + β₂x₂ + ... + βₙxₙ))

where P(y=1 | x) is the probability of the instance belonging to class 1 given the input features x, and β₀, β₁, ..., βₙ are the coefficients learned during training. The decision boundary is determined by a threshold probability (usually 0.5), above which an instance is classified as class 1 and below which it is classified as class 0.

(Source: Wikimedia)
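The logistic regression formula and its decision threshold can be sketched in a few lines. The coefficients below are hypothetical stand-ins for values that would normally be learned during training, and treating a probability exactly equal to the threshold as class 1 is a choice made for this example.

```python
import math

def sigmoid(z):
    """Logistic function, written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(features, coefficients, intercept):
    """P(y=1 | x): sigmoid of the intercept plus the weighted sum of the features."""
    z = intercept + sum(b * x for b, x in zip(coefficients, features))
    return sigmoid(z)

def classify(features, coefficients, intercept, threshold=0.5):
    """Assign class 1 when the predicted probability reaches the threshold."""
    return 1 if predict_proba(features, coefficients, intercept) >= threshold else 0

# Hypothetical model: two features (emails opened, support tickets filed).
coefficients = [0.8, -1.2]
intercept = -1.0

print(predict_proba([3.0, 0.0], coefficients, intercept))
print(classify([3.0, 0.0], coefficients, intercept))
print(classify([0.0, 2.0], coefficients, intercept))
```

Lowering or raising `threshold` trades false positives against false negatives without retraining the model.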

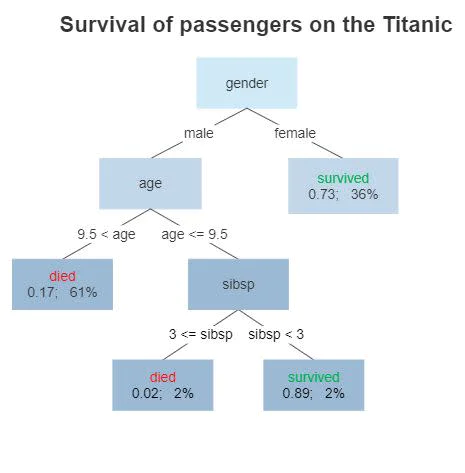

Decision trees are tree-structured models that make predictions by recursively splitting the data based on feature values. At each internal node of the tree, a feature is selected that best splits the data into subsets based on a specific criterion, such as Gini impurity or information gain.

The splitting process continues until a stopping condition is met, such as reaching a maximum depth or a minimum number of instances in a leaf node. During prediction, an instance traverses the tree from the root to a leaf node, following the path determined by its feature values, and the majority class (for classification) or the average value (for regression) in the leaf node is assigned as the prediction.

(Source: Wikimedia)
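To show how a single split is chosen, here is a minimal sketch that computes Gini impurity and searches one numeric feature for the threshold with the lowest weighted impurity. The function names and the toy data are assumptions; a full decision tree would repeat this search over every feature and then recurse into each subset.

```python
from collections import Counter

def gini_impurity(labels):
    """1 minus the sum of squared class proportions; 0 means a pure node."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(values, labels):
    """Return (threshold, weighted_gini) for the best 'value <= threshold' split."""
    best = (None, float("inf"))
    n = len(values)
    unique = sorted(set(values))
    # Candidate thresholds are midpoints between consecutive distinct values.
    for lo, hi in zip(unique, unique[1:]):
        threshold = (lo + hi) / 2
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        weighted = (len(left) * gini_impurity(left)
                    + len(right) * gini_impurity(right)) / n
        if weighted < best[1]:
            best = (threshold, weighted)
    return best

# Hypothetical data: site visits per user and whether the user purchased.
visits = [1, 2, 3, 8, 9, 10]
purchased = [0, 0, 0, 1, 1, 1]
print(best_split(visits, purchased))
```

In this toy data a threshold of 5.5 visits separates buyers from non-buyers perfectly, so both resulting subsets have an impurity of zero.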

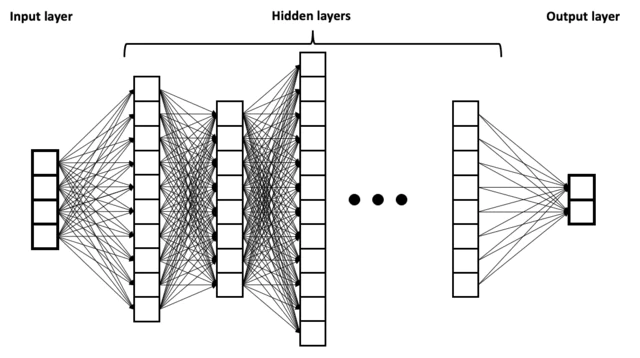

Neural networks are loosely inspired by the structure and function of the human brain. They consist of interconnected nodes (neurons) organized in layers. Each neuron receives inputs from the previous layer, applies a weight to each input, and computes a weighted sum. That sum is then passed through an activation function, like sigmoid or ReLU, to introduce nonlinearity. The output of one layer serves as the input to the next layer, and this process continues until the final output layer is reached.

During training, neural network weights are adjusted using backpropagation and optimization algorithms like gradient descent. The goal is to minimize a loss function that measures the difference between the predicted and actual outputs. Neural networks can learn complex, nonlinear relationships in the data and handle a wide range of predictive analytics tasks. However, they require a large amount of labeled training data and can be computationally expensive to train. Neural networks underpin the current advances in artificial intelligence.

(Source: Wikimedia)
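The layer-by-layer computation described for neural networks can be sketched as a forward pass through a tiny network with one hidden layer. The weights and biases here are hand-picked, hypothetical values rather than trained ones, and the bias terms are a common addition not spelled out in the description above; training with backpropagation is out of scope for this sketch.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One fully connected layer; weights[j] holds the input weights of neuron j."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hypothetical parameters: 2 inputs -> 2 hidden neurons (ReLU) -> 1 output (sigmoid).
HIDDEN_W = [[1.0, -1.0], [0.5, 0.5]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = dense(inputs, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)[0]

print(forward([2.0, 1.0]))
```

The output of the hidden layer becomes the input of the output layer, and the final sigmoid squashes the result into a value between 0 and 1 that can be read as a probability.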

We’ve seen above that machine learning challenges come from both the data and the model. Let’s now explore some of the issues you’ll need to overcome when using machine learning for predictive analytics.

Data quality is fundamental to the success of machine learning-based predictive analytics. Poor data quality, including missing values, outliers, or inconsistencies, can lead to inaccurate predictions and skewed insights. Data completeness, accuracy, and relevance are essential for building reliable predictive models.

To overcome data quality challenges, engineers can:

Perform thorough data preprocessing, including data cleaning, normalization, and handling missing values.

Implement data validation and quality checks to pinpoint and address data issues early in the pipeline.
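As one possible shape for these preprocessing steps, the sketch below fills missing values with the column mean and then rescales the column to the 0 to 1 range. Mean imputation and min-max scaling are just two common options, and the function names and sample data are hypothetical.

```python
def impute_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = sum(observed) / len(observed)
    return [fill if v is None else v for v in values]

def min_max_normalize(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column carries no information
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical order totals with two missing entries.
order_totals = [20.0, None, 40.0, 60.0, None]
cleaned = impute_missing(order_totals)
scaled = min_max_normalize(cleaned)
print(cleaned)
print(scaled)
```

Raising an error on a fully empty column is a simple example of a validation check that surfaces a data issue early instead of letting it reach the model.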

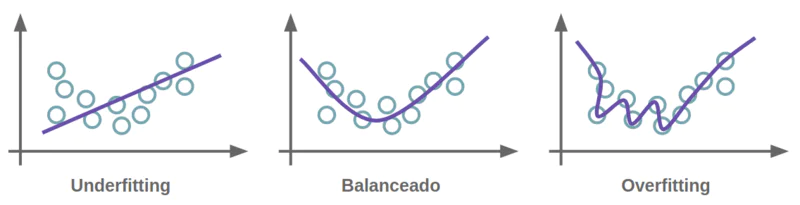

Overfitting occurs when a model learns the noise or random fluctuations in the training data, resulting in poor generalization on new, unseen data. Conversely, underfitting happens when a model is too simple to grasp the underlying patterns in the data. Both overfitting and underfitting can result in suboptimal predictive performance.

To mitigate overfitting and underfitting, engineers can:

Use regularization techniques, such as L1 (Lasso) or L2 (Ridge) regularization, to control model complexity and prevent overfitting.

Employ cross-validation to assess model performance on unseen data and select the best model hyperparameters.

(Source: Wikimedia)
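Cross-validation, mentioned above as a way to assess performance on unseen data, boils down to repeatedly holding out a different slice of the data. Here is a minimal sketch of k-fold splitting; the `k_fold_indices` helper is hypothetical, and it assumes the rows have already been shuffled if their order is meaningful.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    # Spread any remainder across the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

for train, validation in k_fold_indices(10, 3):
    print("train:", train, "validation:", validation)
```

You would train the model on each `train` slice, score it on the matching `validation` slice, and average the scores. A large gap between training and validation scores is a typical sign of overfitting.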

Complex models, such as deep neural networks, can capture intricate patterns in data but may be difficult to interpret and explain. The lack of interpretability can be challenging in industries where transparency and accountability are crucial, such as healthcare or finance. Striking a balance between model complexity and interpretability is essential for building trust and understanding the reasoning behind predictions.

To address model complexity and interpretability, engineers can:

Use interpretable models, such as decision trees or linear models, when interpretability is a priority.

Apply techniques like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-Agnostic Explanations) to explain individual predictions of complex models.

Training and deploying machine learning models for predictive analytics can be computationally intensive, especially when dealing with large datasets or complex models. Adequate computational resources, such as powerful processors, GPUs, and distributed computing frameworks, are necessary to handle the computational demands of machine learning algorithms.

To manage computational resources effectively, engineers can:

Utilize cloud computing platforms or distributed computing frameworks like Apache Spark to scale computations across multiple machines.

Optimize algorithms and implement efficient data processing techniques to reduce computational overhead.

Choosing appropriate evaluation metrics is critical for assessing predictive models' performance. Different metrics, such as accuracy, precision, recall, F1-score, or area under the ROC curve (AUC), may be suitable depending on the problem and business objectives. It's important to select metrics that are in line with the specific goals of the predictive analytics project and provide meaningful insights.

To select and interpret evaluation metrics effectively, engineers can:

Understand the business context and choose metrics that reflect the desired outcomes and costs associated with different types of errors.

Use a combination of metrics to understand model performance comprehensively, considering overall accuracy and class-specific performance measures.
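To see why a combination of metrics matters, the sketch below computes accuracy, precision, recall, and F1-score for binary labels. The function name and both sets of predictions are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen for this example.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# A model that always predicts "no churn" on data where churn is rare.
rare_true = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
always_negative = [0] * 10
print(classification_metrics(rare_true, always_negative))

# A model that makes one false positive and one false negative.
balanced_true = [1, 1, 1, 0, 0, 0, 0, 0]
balanced_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(classification_metrics(balanced_true, balanced_pred))
```

The first model reaches 80% accuracy while finding none of the churners, which is exactly the kind of blind spot that recall and F1 expose.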

By addressing these common challenges, engineers can develop robust and reliable machine learning models for predictive analytics, ensuring data quality, mitigating overfitting and underfitting, balancing model complexity and interpretability, managing computational resources effectively, and selecting appropriate evaluation metrics.

Segment uses Predictions to anticipate the likelihood of a specific event occurring by leveraging machine learning algorithms to analyze historical data tracked within Segment. This allows businesses to predict the probability of any event they track, such as a user making a purchase, subscribing to a service, or churning.

Here's how Segment uses Predictions:

Data Collection: Segment collects and integrates customer data from several sources, such as websites, mobile apps, and CRM systems, providing a comprehensive view of user behavior and interactions.

Event Tracking: Businesses can track specific user actions and events within Segment, such as product views, purchases, or feature usage. This historical event data serves as the foundation for generating predictions.

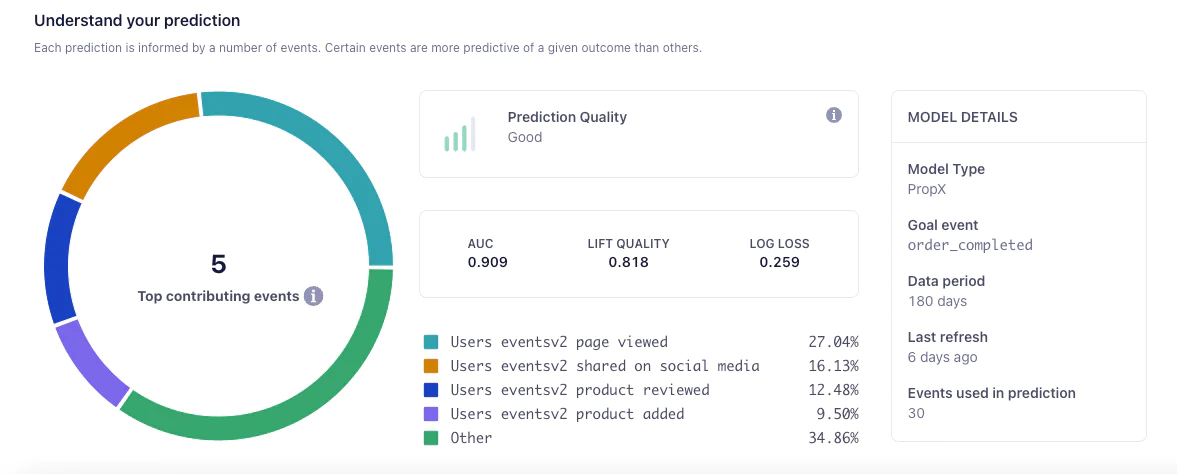

Machine Learning Model: Segment employs a binary classification model using decision trees to create predictions. The model learns relationships and patterns from the historical data to predict the likelihood of a specific event occurring for each user.

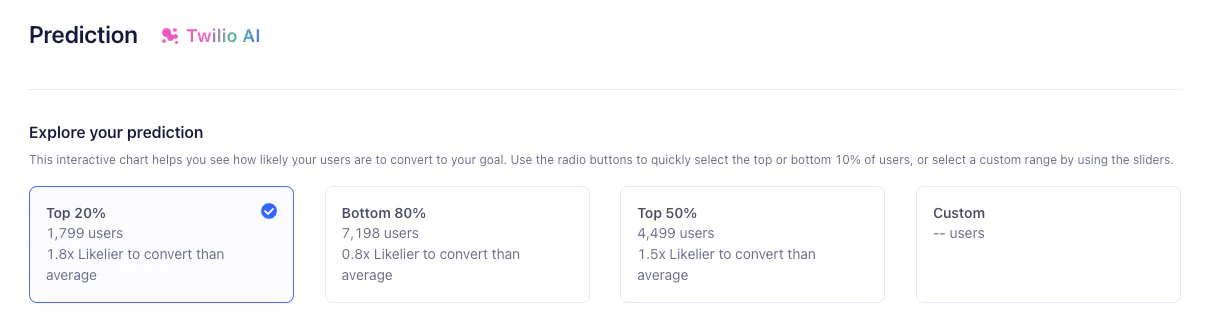

Predictive Scores: Once the model is trained, Segment assigns each user a predictive score, representing their likelihood of performing the target event. The scores are stored as percentile cohorts on user profiles, with higher scores indicating a higher probability of the event occurring.
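The idea of storing scores as percentile cohorts can be illustrated with a short sketch. This is not Segment's implementation: the function names, user IDs, and scores are hypothetical, and the percentile definition used here (the share of users scoring at or below a given user) is one of several reasonable choices.

```python
from bisect import bisect_right

def percentile_ranks(scores):
    """Map each user ID to the percentage of users scoring at or below them."""
    ordered = sorted(scores.values())
    n = len(ordered)
    return {uid: 100.0 * bisect_right(ordered, s) / n for uid, s in scores.items()}

def top_cohort(scores, top_percent=20):
    """Return the user IDs whose percentile rank falls in the top `top_percent`."""
    ranks = percentile_ranks(scores)
    return sorted(uid for uid, rank in ranks.items() if rank > 100 - top_percent)

# Hypothetical purchase-likelihood scores for five users.
scores = {"u1": 0.05, "u2": 0.10, "u3": 0.40, "u4": 0.75, "u5": 0.92}
print(percentile_ranks(scores))
print(top_cohort(scores, top_percent=40))
```

Working with percentiles rather than raw scores makes it straightforward to build audiences such as "the 20% of users most likely to purchase."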

The "Understand your prediction" tab in Segment provides valuable insights into the performance and effectiveness of the predictive model:

AUC (Area Under the ROC Curve): AUC measures the model's ability to distinguish between users who are likely to perform the target event and those who are not. AUC ranges from 0 to 1, where 0.5 corresponds to random guessing; a higher value indicates better predictive performance.

Lift Quality: Lift Quality compares the results obtained with and without the predictive model. It quantifies the improvement in targeting and effectiveness achieved using the predictive model.

Top Contributing Events: This section highlights the events and associated weights that significantly impact the prediction. It helps businesses understand the key factors influencing the likelihood of the target event occurring.
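For intuition about the first two measures, here is a minimal sketch of AUC and a generic lift calculation. AUC is computed as the probability that a randomly chosen positive user is scored above a randomly chosen negative one. The lift function reflects a common textbook definition and is an assumption, since the exact formula behind Segment's Lift Quality is not described here; the labels and scores are hypothetical.

```python
def roc_auc(y_true, scores):
    """Share of (positive, negative) pairs ranked correctly; ties count as half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def lift_at(y_true, scores, top_fraction=0.2):
    """Event rate among the top-scored users divided by the overall event rate."""
    base_rate = sum(y_true) / len(y_true)
    if base_rate == 0:
        raise ValueError("lift is undefined when no user performed the event")
    ranked = sorted(zip(scores, y_true), reverse=True)
    k = max(1, round(len(ranked) * top_fraction))
    top_rate = sum(label for _, label in ranked[:k]) / k
    return top_rate / base_rate

# Hypothetical outcomes (1 = performed the event) and model scores for ten users.
y_true = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
print(roc_auc(y_true, scores))
print(lift_at(y_true, scores, top_fraction=0.2))
```

A lift above 1 means that targeting the top-scored users reaches more converters than targeting the same number of users at random.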

Data science teams can put Predictions to work in three ways. Firstly, model improvement. Data scientists can extract Segment's predictive scores and incorporate them into their proprietary machine learning models. By combining Segment's predictions with additional data sources and algorithms, businesses can enhance the accuracy and performance of their predictive models.

Secondly, testing experiences. Data teams can validate and strengthen their existing machine learning models by comparing them against Segment's out-of-the-box models. This allows for benchmarking and identifying areas for improvement in their proprietary models.

Lastly, time savings. Segment's pre-built predictive models can save data science teams time and effort in building their own models from scratch. This allows them to focus on other important tasks, such as inventory management or fraud detection, while leveraging predictive analytics' benefits.

By utilizing Segment Predictions, businesses can gain actionable insights into user behavior, anticipate future events, and make data-driven decisions. They can create targeted audiences, optimize marketing campaigns, personalize user experiences, and proactively engage with customers based on their predicted likelihood of performing desired actions. Predictions enable businesses to improve customer acquisition, retention, and lifetime value by leveraging the power of machine learning and predictive analytics within the Segment platform.

Popular techniques for predictive analytics include:

Regression analysis: Linear and logistic regression are widely used for predicting continuous values or binary outcomes. These techniques model the relationship between input features and the target variable.

Decision trees and random forests: Decision trees recursively divide the data based on feature values to make predictions. Random forests combine multiple decision trees to improve accuracy and reduce overfitting.

Neural networks and deep learning: Neural networks, particularly deep learning architectures like convolutional neural networks (CNNs) and recurrent neural networks (RNNs), can learn complex patterns and are well suited to tasks such as image recognition and sequence prediction.

An example of predictive analytics in the retail industry is customer churn prediction. Through historical customer data analysis, such as purchase history, engagement patterns, and demographic information, a retailer can build a predictive model to pinpoint customers at a high risk of churning (i.e., discontinuing their relationship with the company).

The predictive model considers various factors contributing to churn, such as reduced purchase frequency, decreased login activity, or lack of response to promotional offers. Training the model on labeled data (customers who have churned and those who have not) teaches it the patterns and relationships that indicate a higher likelihood of churn.

Once the model is trained, data scientists can apply it to the retailer's current customer base to predict each customer's probability of churn. Customers identified as high-risk can then be targeted with personalized retention strategies, such as special offers, targeted communications, or incentives, to prevent them from leaving.

By proactively identifying and addressing potential churn, the retailer can take steps to retain valuable customers, improve customer satisfaction, and ultimately increase customer lifetime value.

Segment can help with predictive analytics in several ways:

Data collection and integration: Segment centralizes and unifies customer data from several sources, providing a comprehensive foundation for predictive analytics.

Event tracking and prediction: Segment's Predictions feature analyzes historical event data to anticipate the likelihood of future events, enabling proactive decision-making.

Audience segmentation: Segment allows businesses to create meaningful customer segments for targeted predictive modeling.

By leveraging Segment's data collection, integration, and predictive analytics features, businesses can gain valuable insights, anticipate trends, and make data-driven decisions to optimize customer experiences, improve retention, and drive growth.
