Unify a customer's online and in-store purchases downstream with Segment Reverse ETL

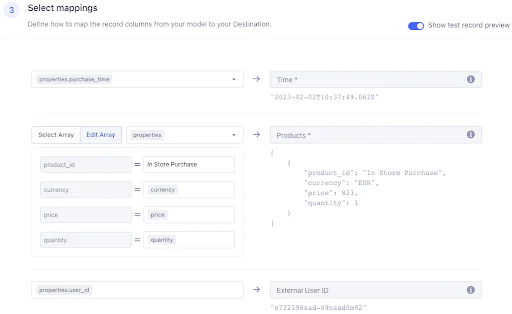

Segment makes it easy to track the purchases a customer makes on your website. But online is not the only place customers shop and interact with your brand: it's very likely that customers who shop online will also make purchases in your store. In this recipe, we'll make sure that online and in-store purchases are both attributed to the same customer. This matching creates a seamless brand experience for your customers and lets you further personalize your marketing content.

Made by Kalyan Kola Cahill

What do you need?

-

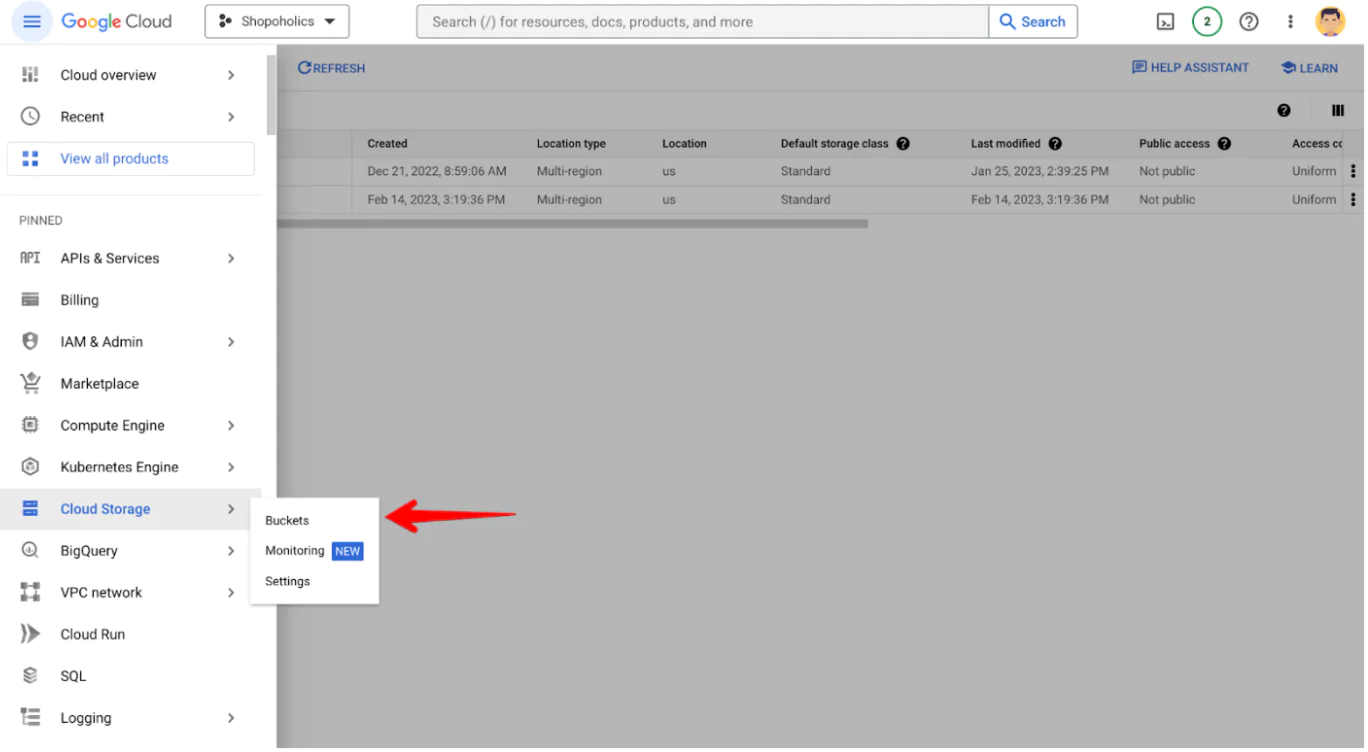

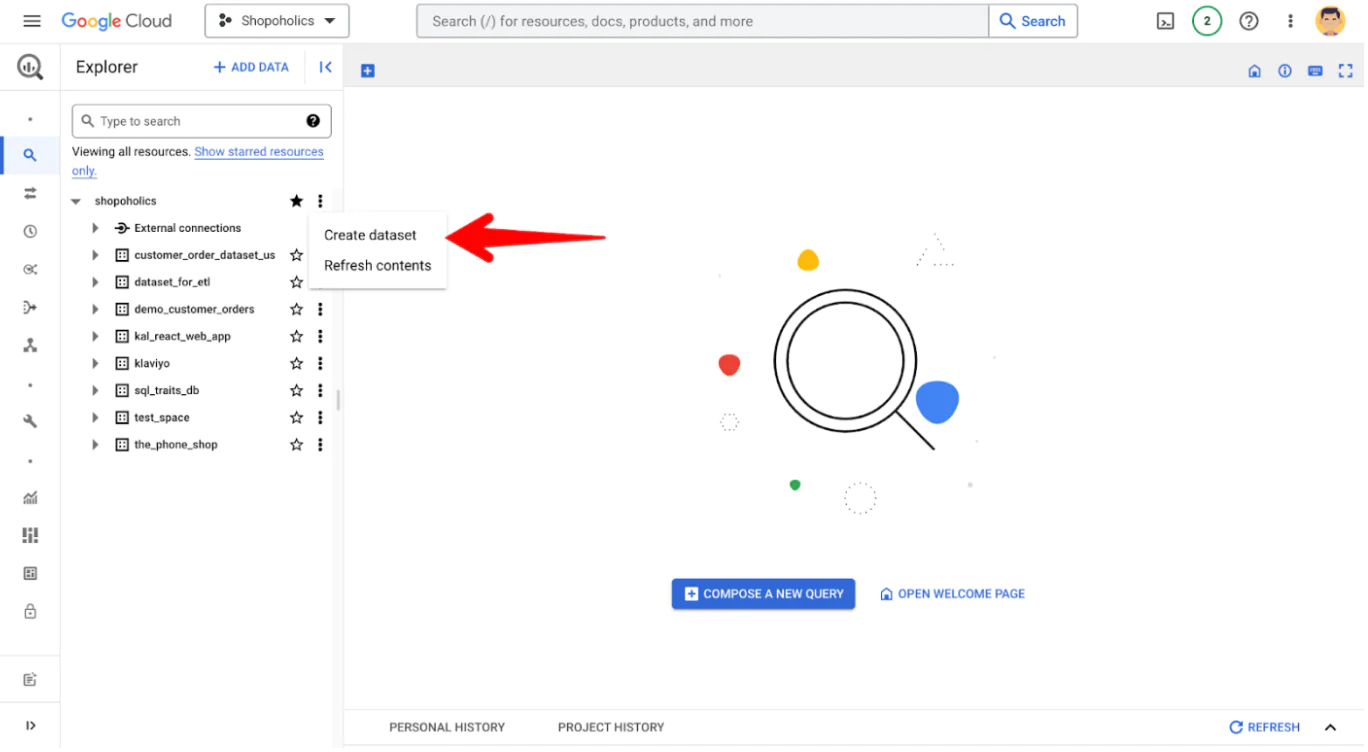

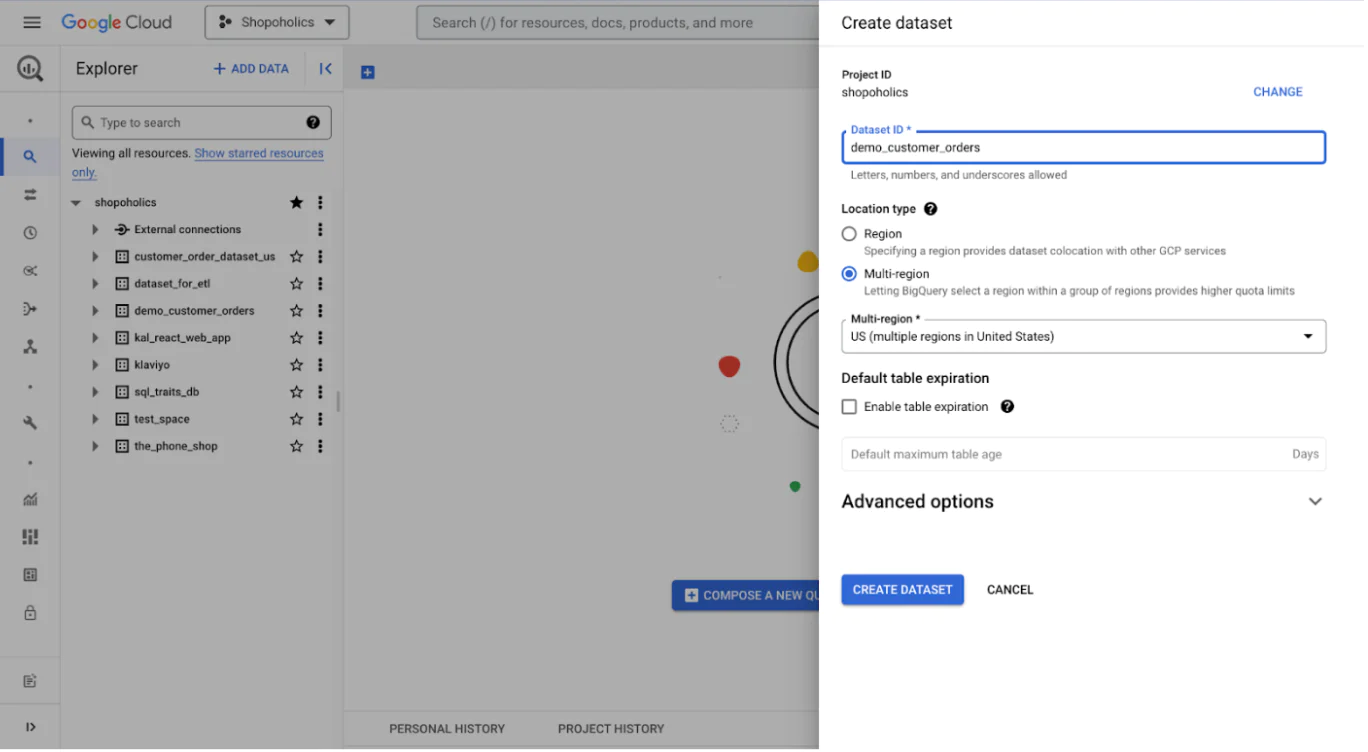

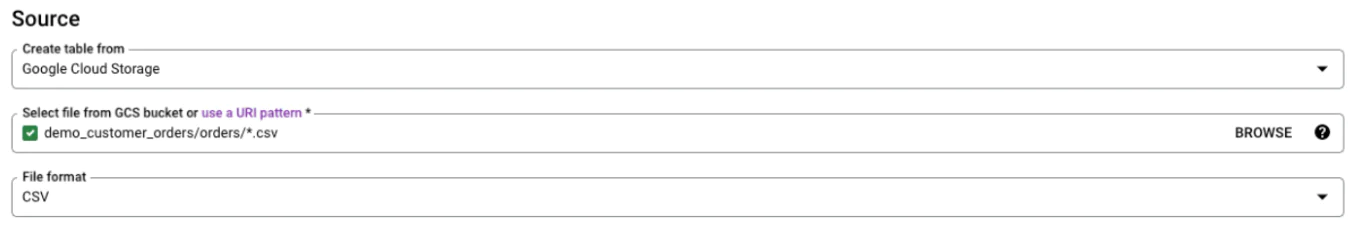

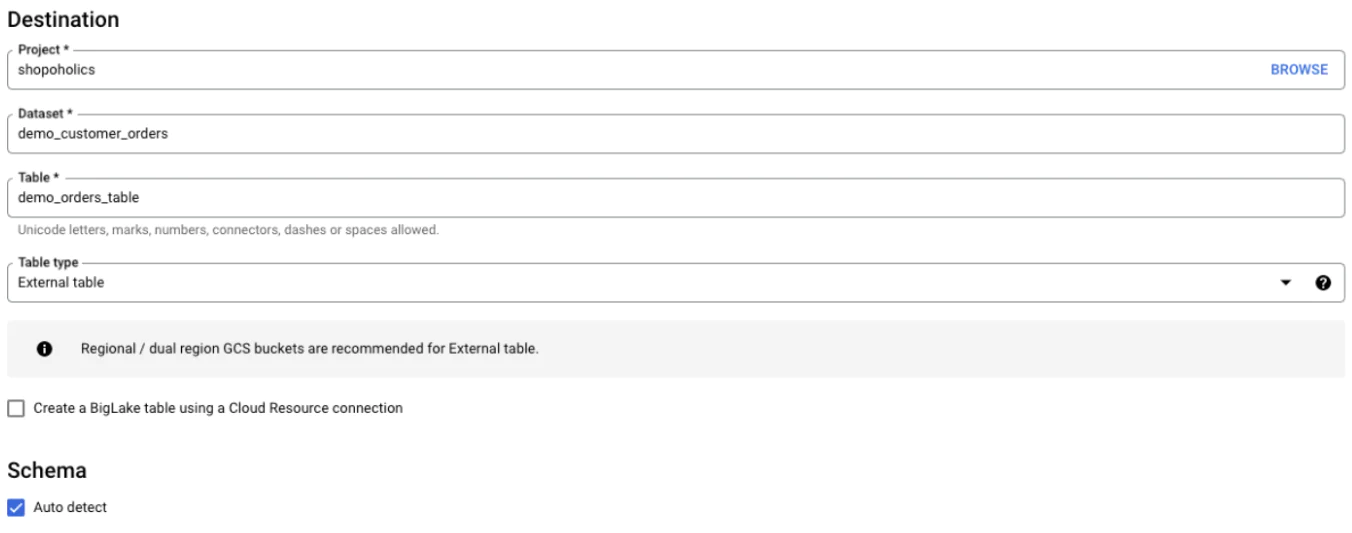

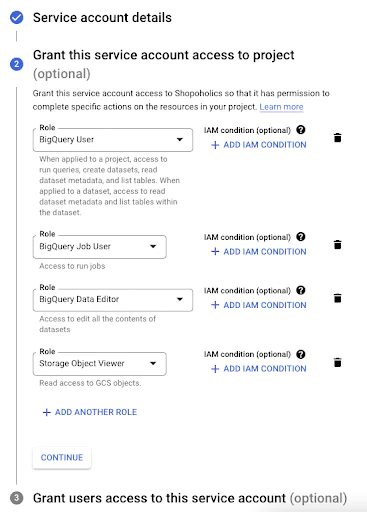

BigQuery

-

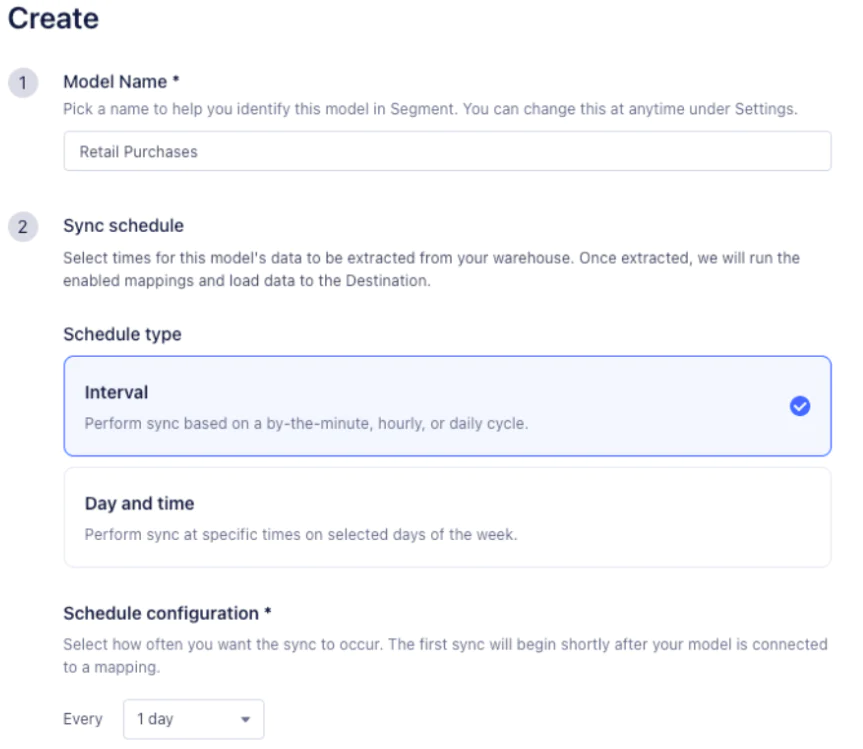

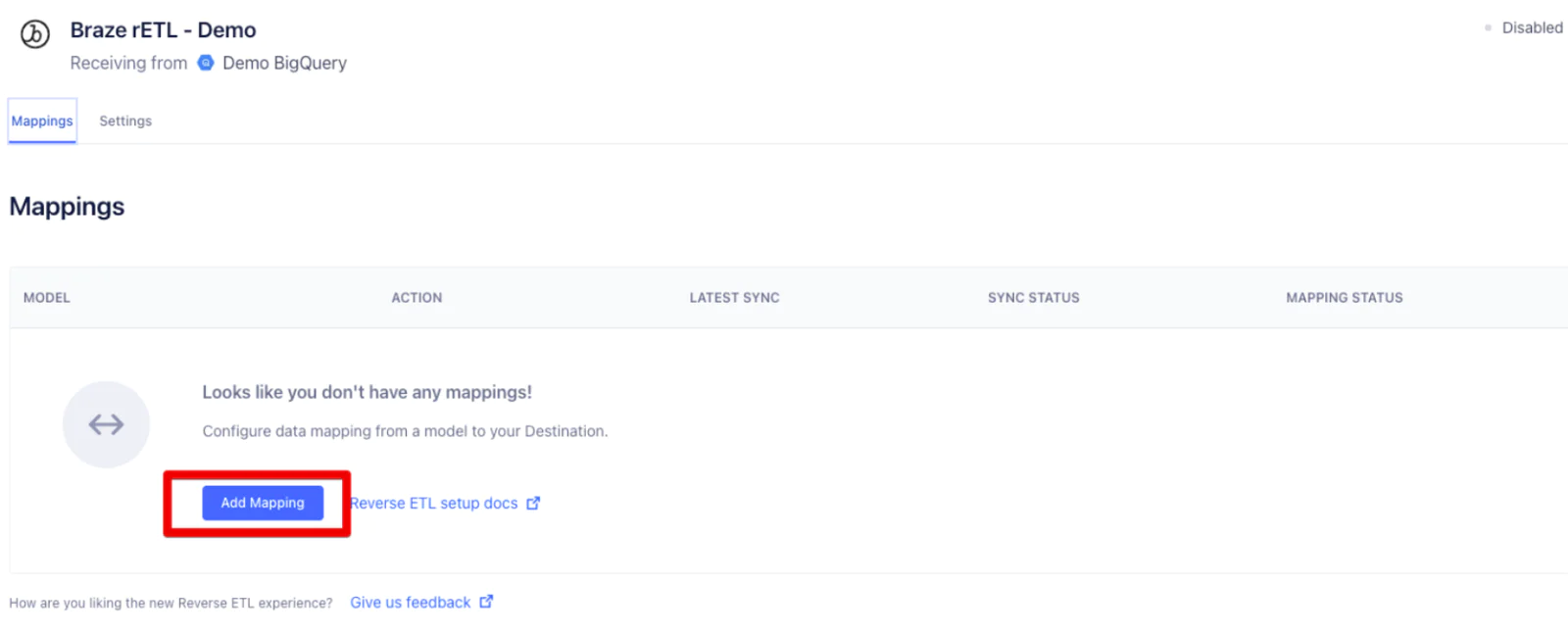

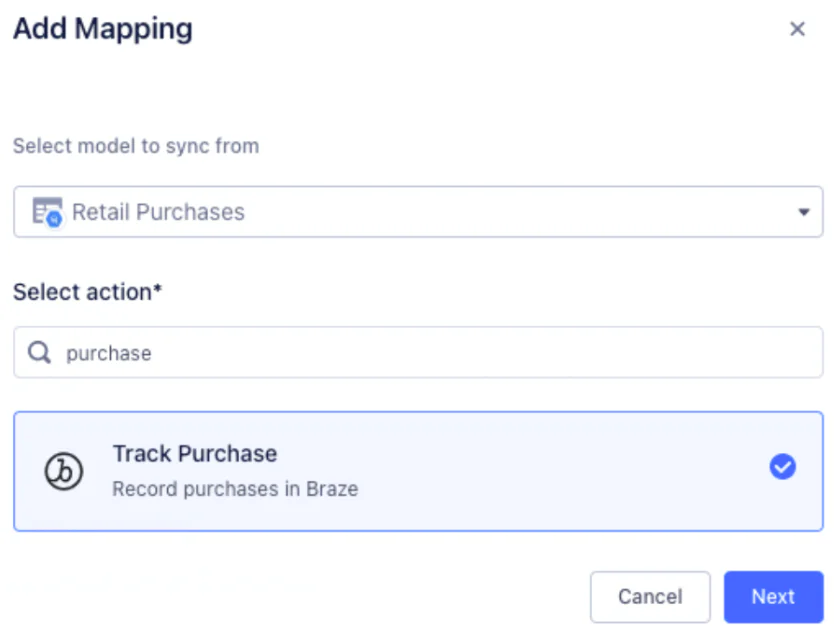

Segment Reverse ETL

-

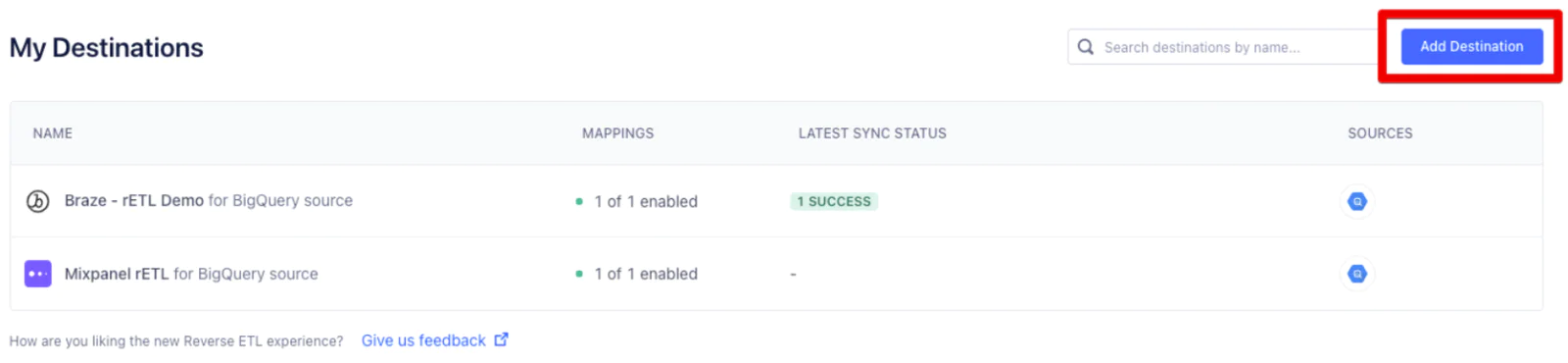

An analytics tool - we use Braze or Mixpanel, but YMMV
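
To make the goal concrete, here is a minimal Python sketch of the matching idea from the introduction: in-store purchases are attributed to an existing online customer. It is an illustration under stated assumptions, not the recipe's actual implementation. The match key (email), the `Purchase` type, and the `attribute_in_store_purchases` helper are all hypothetical; in practice this join would more likely live in a warehouse query that Reverse ETL reads from. The output shape borrows Segment's `userId` field and the `Order Completed` event name from its ecommerce spec.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Purchase:
    """An in-store purchase row (hypothetical shape)."""
    order_id: str
    email: str   # assumed match key; a phone number or loyalty ID could serve instead
    total: float


def normalize_email(email: str) -> str:
    """Trim whitespace and lowercase so trivially different emails still match."""
    return email.strip().lower()


def attribute_in_store_purchases(
    online_users: dict[str, str],
    in_store: list[Purchase],
) -> tuple[list[dict], list[Purchase]]:
    """Attach an online user ID to each in-store purchase where the email matches.

    online_users maps email -> user ID known from website tracking.
    Returns (matched event payloads, purchases with no known online customer).
    """
    index = {normalize_email(email): uid for email, uid in online_users.items()}
    matched: list[dict] = []
    unmatched: list[Purchase] = []
    for purchase in in_store:
        user_id = index.get(normalize_email(purchase.email))
        if user_id is None:
            unmatched.append(purchase)
            continue
        matched.append({
            "userId": user_id,
            "event": "Order Completed",
            "properties": {
                "order_id": purchase.order_id,
                "total": purchase.total,
                "channel": "in-store",  # hypothetical property to tell channels apart
            },
        })
    return matched, unmatched
```

Purchases that cannot be matched are returned separately rather than dropped, so they can be reviewed or matched later on a different key.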

Easily personalize customer experiences with first-party data

With a huge integration catalog and plenty of no-code features, Segment gives your teams easy-to-maintain capabilities with minimal engineering effort. Great data doesn't have to be hard work!