Data Lake Tools & Solutions to Unlock Insights

Learn about the most popular data lake tools and solutions, what they’re used for, and how to choose the best for your needs.

Data lakes are useful storage sites for companies of all sizes and industries. They're highly versatile, holding both structured and unstructured data. However, companies need additional solutions to keep data lake assets organized, optimized, and secure.

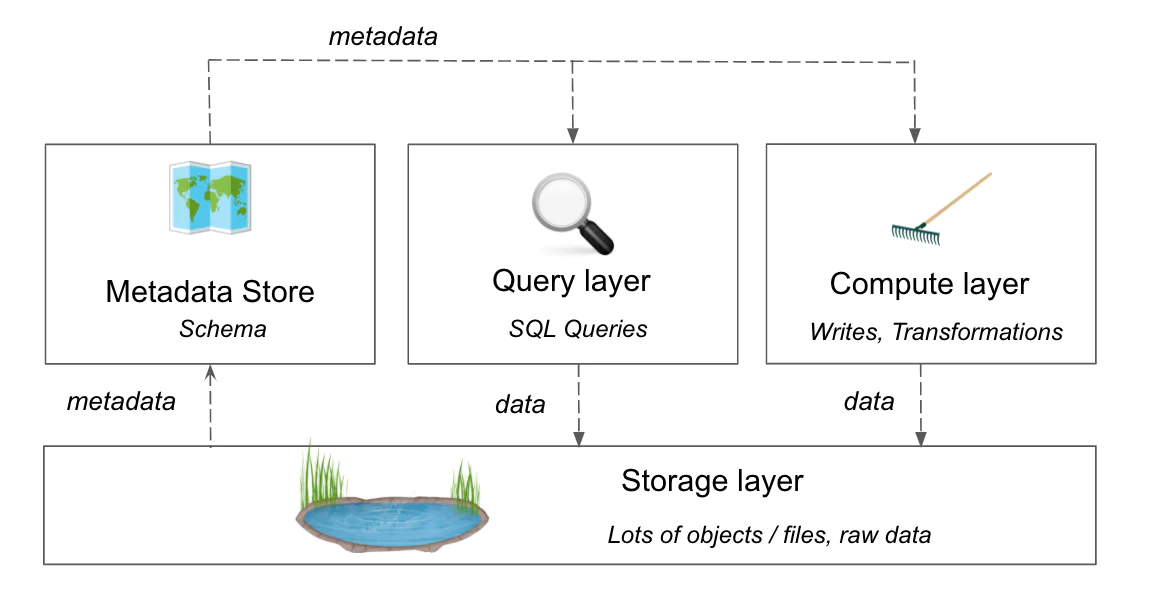

A data lake is a central repository for storing different types of data from various sources. The term "data lake solutions" refers to the different types of data lakes available (e.g., on-prem, cloud, hybrid) and the vendors that offer them. It can also refer to the components that together make up a data lake's architecture (e.g., the metadata layer, the query layer).

Below, we take a closer look at four different solutions that play an instrumental part in constructing and scaling a data lake.

Two popular data lake options are Amazon S3 (part of Amazon Web Services) and Azure Data Lake Storage (ADLS) Gen2, which serve as the storage layer within a data lake. Segment is compatible with both and can send consolidated data to them in an optimized format that reduces processing times.

Let’s learn more about the different tools used at each layer in a data lake. (As a reminder, this isn’t an exhaustive list, but a compilation of popular solutions.)

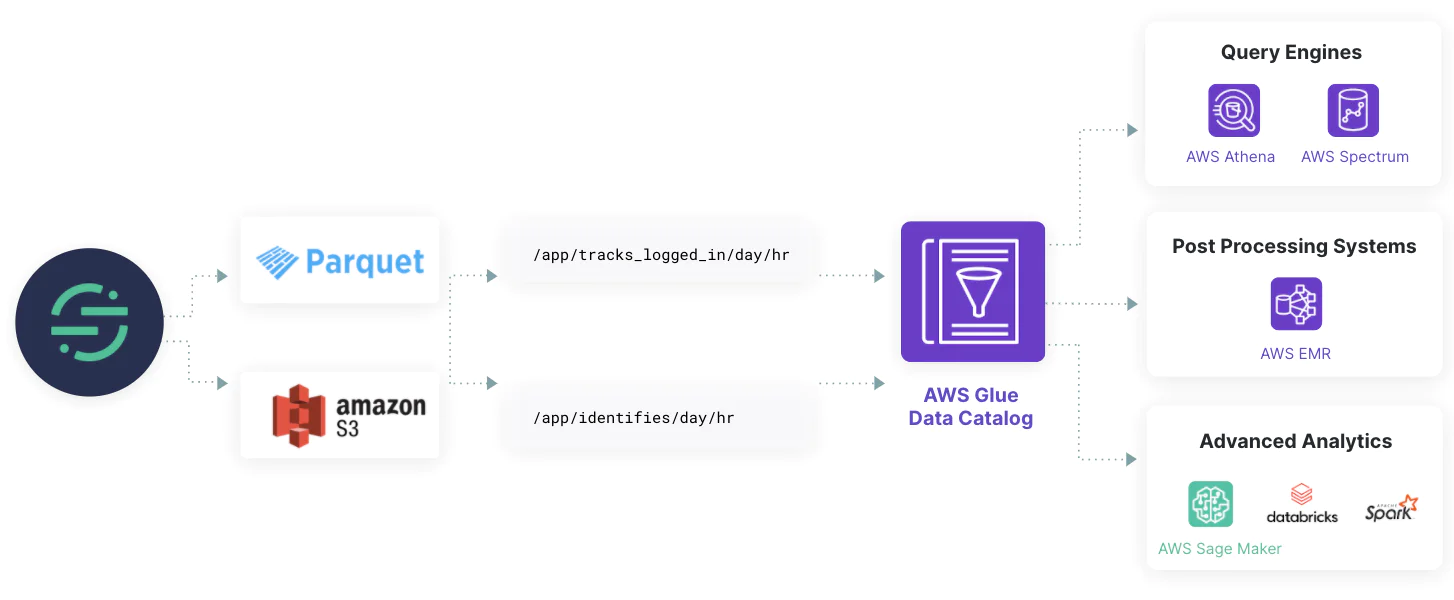

The AWS Glue Data Catalog is a central store for all the metadata in your data lake (e.g., different data types, formats, locations, etc.).

When a data event arrives, it is assigned a schema, and that schema is recorded as tables and columns in the AWS Glue Data Catalog. Once the data is cataloged, analytics applications can locate and query it more easily and quickly.
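
To make the idea of a metadata catalog more concrete, here is a minimal in-memory sketch in Python. It is hypothetical and is not the AWS Glue API: the `Catalog` and `Table` classes, the `infer_type` rules, and the example S3 location are all assumptions made for illustration. It shows how each incoming event's fields are recorded as typed columns, so that tools can look up a table's schema and location without scanning the raw files.

```python
from dataclasses import dataclass, field


def infer_type(value):
    """Map a Python value to a simplified, Hive-style type name (illustrative only)."""
    # bool is checked before int because bool is a subclass of int in Python
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    return "string"


@dataclass
class Table:
    name: str
    location: str  # where the underlying files live, e.g. an S3 prefix
    columns: dict = field(default_factory=dict)  # column name -> type name


class Catalog:
    """A toy in-memory metadata catalog; hypothetical, not the AWS Glue API."""

    def __init__(self):
        self.tables = {}

    def register_event(self, table_name, location, event):
        # Create the table entry the first time an event for it arrives
        if table_name not in self.tables:
            self.tables[table_name] = Table(table_name, location)
        table = self.tables[table_name]
        for column, value in event.items():
            # The first type recorded for a column is kept for later events
            table.columns.setdefault(column, infer_type(value))
        return table

    def find(self, table_name):
        # Analytics tools look up metadata here instead of scanning raw files
        return self.tables.get(table_name)


catalog = Catalog()
catalog.register_event(
    "pages",
    "s3://example-bucket/pages/",
    {"user_id": "u1", "duration": 12, "is_mobile": True},
)
catalog.register_event(
    "pages", "s3://example-bucket/pages/", {"duration": "n/a", "referrer": "ads"}
)
print(catalog.find("pages").columns)
# {'user_id': 'string', 'duration': 'bigint', 'is_mobile': 'boolean', 'referrer': 'string'}
```

Note that the second event adds a new `referrer` column but does not change the type already recorded for `duration`; a real catalog has its own, more detailed rules for schema changes.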

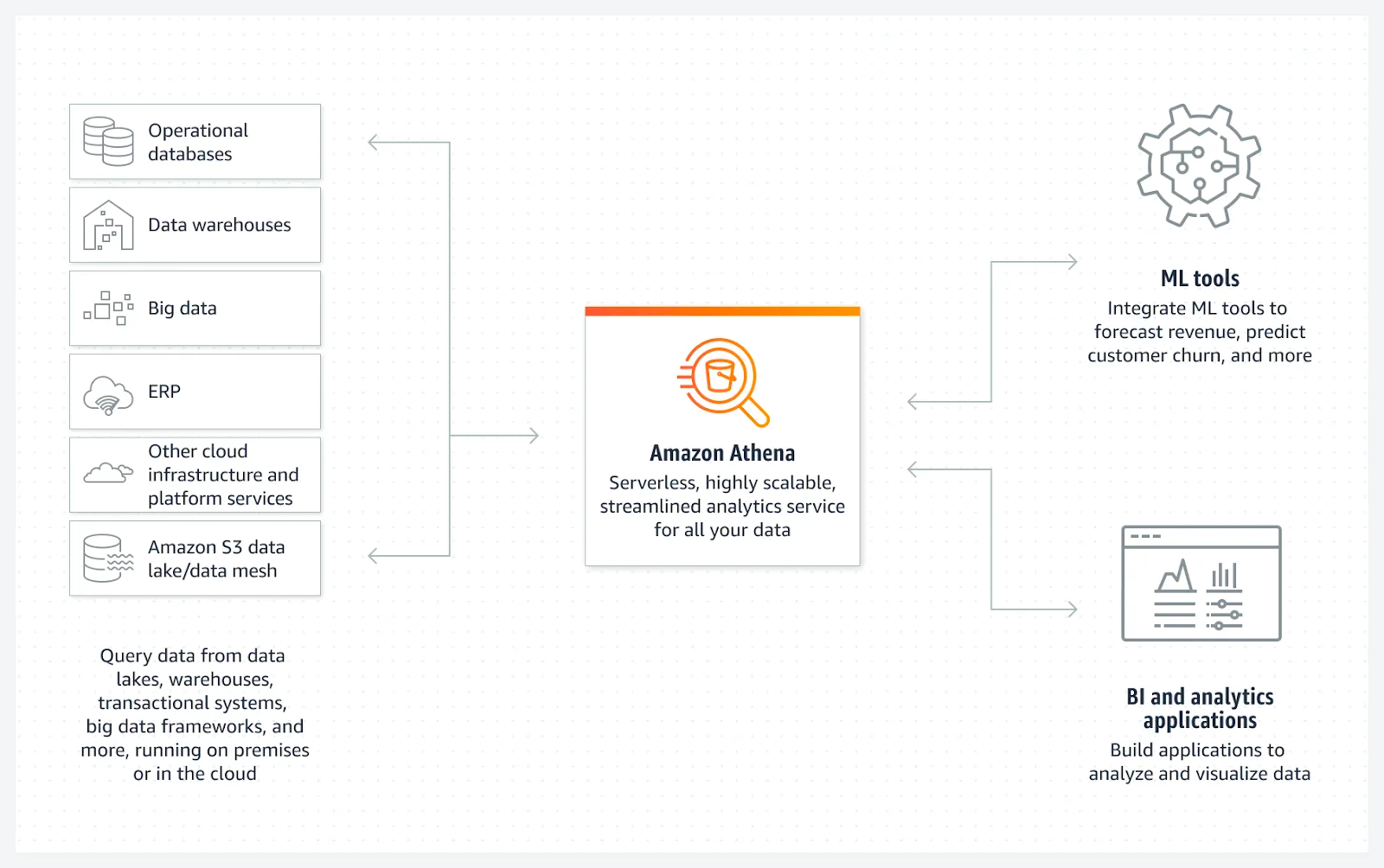

Amazon Athena provides a SQL interface for querying data stored in your data lake (in Amazon S3). Athena is serverless, and customers pay only for the queries they run, based on the amount of data each query scans.

Athena lets you query data stored in common file formats like CSV, TSV (Tab-Separated Values), and Parquet, so you can filter, aggregate, and analyze the data within these files.
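
As an illustration of the kind of filter-and-aggregate SQL you might run over a CSV file, the sketch below uses Python's built-in `sqlite3` module as a local stand-in. This is not Athena: in Athena the table would be defined over files in S3 rather than loaded into a database, and the sample data, table name, and column names here are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical CSV content standing in for a file stored in the data lake
CSV_DATA = """event,country,revenue
purchase,US,20.0
purchase,DE,15.5
page_view,US,0
purchase,US,9.5
"""


def load_csv(conn, text):
    # Parse the CSV text and load each row into a local table
    rows = list(csv.DictReader(io.StringIO(text)))
    conn.execute("CREATE TABLE events (event TEXT, country TEXT, revenue REAL)")
    conn.executemany("INSERT INTO events VALUES (:event, :country, :revenue)", rows)


def revenue_by_country(conn):
    # Filter to purchases, then aggregate revenue per country
    return conn.execute(
        """
        SELECT country, SUM(revenue) AS total
        FROM events
        WHERE event = 'purchase'
        GROUP BY country
        ORDER BY total DESC
        """
    ).fetchall()


conn = sqlite3.connect(":memory:")
load_csv(conn, CSV_DATA)
print(revenue_by_country(conn))  # [('US', 29.5), ('DE', 15.5)]
```

The SQL itself is standard enough that a similar query would read much the same in Athena; only the way the table is defined and where the data lives differ.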

Other potential solutions for the query layer in a data lake include Google BigQuery and Apache Hive.

Elastic MapReduce (EMR) is a cloud-based big data processing service provided by AWS. It simplifies the processing of large datasets with the help of frameworks like Apache Hadoop and Apache Spark.

EMR makes it easier for businesses to perform data transformation, analysis, and machine learning by providing a managed and scalable environment for running distributed data processing applications.
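
Frameworks like Hadoop and Spark distribute work across a cluster using patterns such as MapReduce. The single-machine Python sketch below shows only the shape of that pattern (map, shuffle, reduce) on a few hypothetical log lines; it does not use EMR, Hadoop, or Spark APIs, and the log format is an assumption.

```python
from collections import defaultdict
from itertools import chain


def map_phase(record):
    # Emit (event_type, 1) for a log line like "u1 page_view" (hypothetical format)
    _, event_type = record.split()
    return [(event_type, 1)]


def shuffle(pairs):
    # Group values by key, as the framework does between map and reduce
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Combine all values for one key into a single result
    return key, sum(values)


def run_job(records):
    mapped = chain.from_iterable(map_phase(r) for r in records)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


logs = ["u1 page_view", "u2 purchase", "u1 page_view", "u3 signup"]
print(run_job(logs))  # {'page_view': 2, 'purchase': 1, 'signup': 1}
```

On a cluster, the map and reduce steps would run in parallel on different machines, and the shuffle would move data between them over the network.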

The partnership between Segment and Databricks lets data flow in both directions between the two tools for advanced analytics and AI insights. Use Segment and Databricks together to collect and activate data from inside your data lake. Some of the benefits include:

Activate data from the Databricks Lakehouse in over 450 Segment-supported destinations (as well as any custom integrations).

Build powerful AI models on enriched, golden customer profiles (with fewer tools and simpler data orchestration).

Segment and Databricks partnership

Choosing the right data lake solution(s) will depend on your business and intended use cases. However, there are a few overarching pieces of advice worth following, from considering how well it will integrate within your existing data architecture to data security.

Data lakes are part of a larger ecosystem of tools which range from analytics to data processing and machine learning. When choosing a data lake, it’s important to think through how well it will integrate with the existing tools in your tech stack.

Data lakes often support various file formats, such as CSV, JSON, and Parquet. However, the file format affects how easily and efficiently data can be processed and queried. For example, a data lake optimized for columnar storage would favor Parquet, a columnar format, for better performance.
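
Why does a columnar format help? The simplified Python model below counts how many stored values must be read to total a single column, first with a row-oriented layout and then with a column-oriented one. It is only a conceptual sketch with hypothetical data; real Parquet files add row groups, encoding, and compression on top of the basic columnar idea.

```python
# Hypothetical sample records
rows = [
    {"user_id": "u1", "country": "US", "revenue": 20.0},
    {"user_id": "u2", "country": "DE", "revenue": 15.5},
    {"user_id": "u3", "country": "US", "revenue": 9.5},
]


def to_columnar(records):
    """Pivot row-oriented records into one list of values per column."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


def sum_row_layout(records, column):
    # Row layout: each whole record is read to reach one field
    total, values_read = 0.0, 0
    for record in records:
        values_read += len(record)
        total += record[column]
    return total, values_read


def sum_columnar_layout(columns, column):
    # Columnar layout: only the requested column's values are read
    values = columns[column]
    return sum(values), len(values)


print(sum_row_layout(rows, "revenue"))                    # (45.0, 9)
print(sum_columnar_layout(to_columnar(rows), "revenue"))  # (45.0, 3)
```

Both layouts give the same answer, but the columnar one reads a third of the values here; the gap grows with the number of columns a table has and a query ignores.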

Look for data lake vendors that understand the current state of cybersecurity and what’s required for data in your particular industry. A few things to consider when it comes to data safety include:

Data encryption

Risk monitoring

Data lake solutions rarely have a single upfront cost; their prices usually fluctuate based on each customer’s unique needs. The final cost will depend on your data volume, storage needs, features, integrations, and setup or training fees.

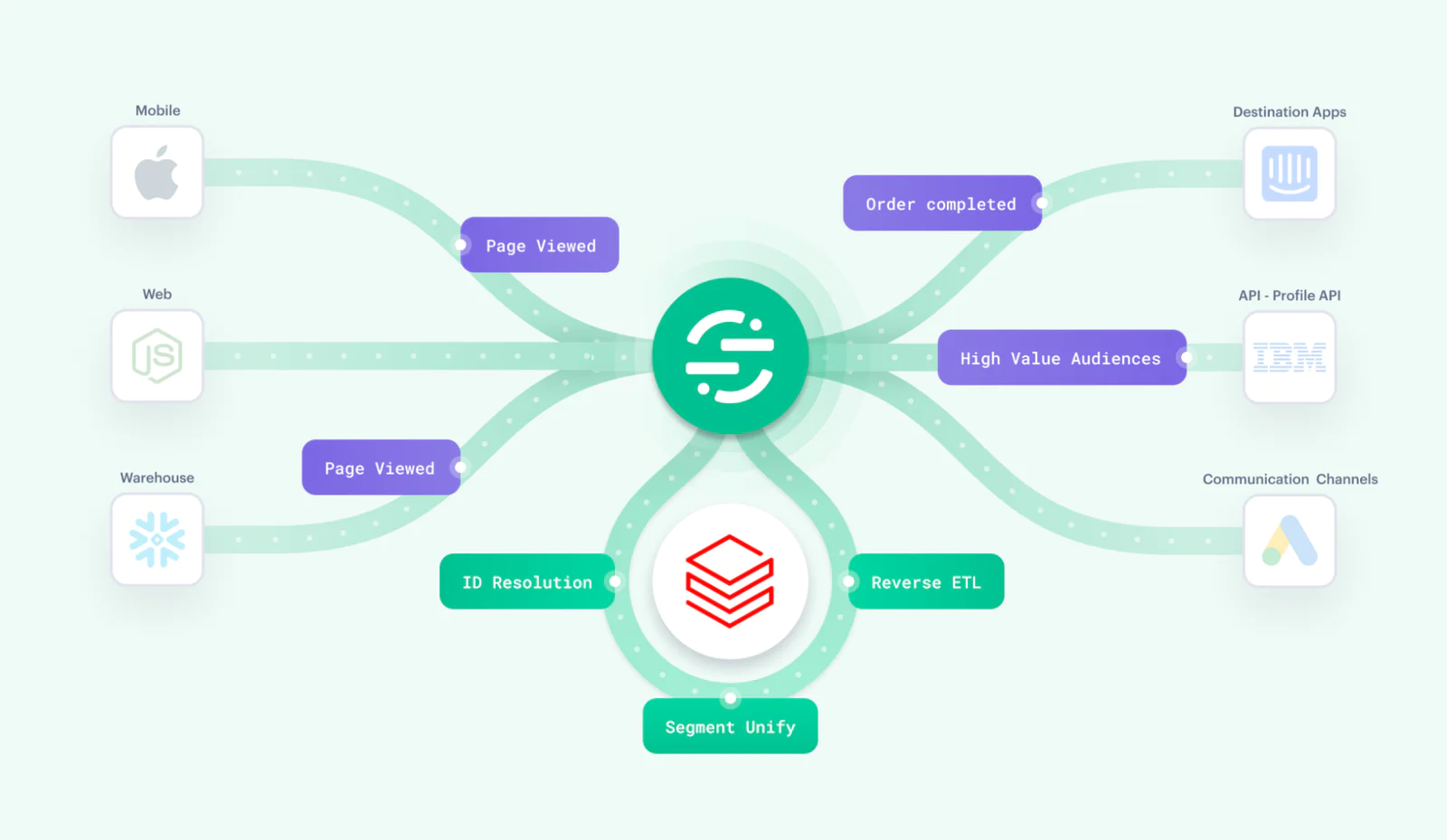

Segment's out-of-the-box data lake balances efficiency, cost, and performance. Engineers get the most out of existing data while positioning new data for future analytics and machine learning capabilities. Here are a few benefits of using Segment Data Lakes.

Data lakes are incredibly useful, but creating your own from the ground up is a considerable task – one that can take months on end. By using Segment’s baseline data architecture, engineers skip costly steps and have an easier time maintaining the data lake down the road.

Segment also applies a standard schema so it's easier (and quicker) to query raw data. (Segment's documentation describes this schema and how Segment partitions data for S3 and ADLS.)
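
Partitioning typically means organizing files under key=value prefixes (a Hive-style layout) so that query engines can skip partitions a query doesn't need. The Python sketch below builds such a path from an event's source, type, and timestamp, then prunes a list of paths by day. The path layout, bucket name, and source ID are hypothetical examples, not Segment's documented layout; check Segment's documentation for the exact structure.

```python
from datetime import datetime, timezone


def partition_path(bucket, source_id, event_type, received_at):
    """Build a Hive-style (key=value) S3 prefix for an event.

    The layout is a hypothetical example, not Segment's documented path.
    """
    ts = received_at.astimezone(timezone.utc)
    return (
        f"s3://{bucket}/data/{source_id}/"
        f"segment_type={event_type}/"
        f"day={ts:%Y-%m-%d}/hr={ts:%H}/"
    )


def prune(paths, day):
    # A query filtered on one day only needs files under matching partitions
    return [p for p in paths if f"/day={day}/" in p]


paths = [
    partition_path("example-bucket", "src_123", "track",
                   datetime(2024, 3, 5, 14, 30, tzinfo=timezone.utc)),
    partition_path("example-bucket", "src_123", "track",
                   datetime(2024, 3, 6, 9, 0, tzinfo=timezone.utc)),
]
print(prune(paths, "2024-03-05"))
# ['s3://example-bucket/data/src_123/segment_type=track/day=2024-03-05/hr=14/']
```

Because the partition keys are encoded in the path, an engine can decide which files to read before opening any of them.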

Cost will always be a significant consideration for businesses. Because data lakes run on affordable data stores like Amazon S3, they are much cheaper to build, use, and maintain. Take Rokfin, which reduced its data storage costs by 60% when it migrated from a data warehouse to Segment Data Lakes.

In the past, cost concerns and architecture constraints may have forced businesses to keep only subsets of their data or risk higher storage fees and suboptimal compute performance. But with Segment Data Lakes, all historical and current behavioral data can be stored in a cost-effective and efficient way. Teams can also use Segment Replay to re-process and re-ingest historical data they'd previously unloaded, to rebuild a behavioral dataset in S3.

Segment supports Data Lakes hosted on Amazon Web Services (AWS) or Microsoft Azure, with the ability to send clean, consolidated customer data in an optimized format for better processing performance and analytics.

Segment has several security features and certifications to protect customer data, such as:

Data encryption at rest and in transit

The ability to automatically detect and classify data based on risk level

Certification to ISO 27001, ISO 27017, and ISO 27018

You can send data from any event source, such as website libraries, servers, mobile apps, and event cloud sources.

Once Segment sets a data type for a column, it attempts to cast all subsequent data for that column into that data type. If incoming data doesn't match the data type, Segment tries to cast the column to the target data type.
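
The Python sketch below illustrates the per-value side of this idea: once a column has an established type, each incoming value is cast to it where possible. The type names, the rule that fractional numbers are rejected for `bigint`, and the choice to return `None` for values that can't be cast are all hypothetical assumptions for illustration, not Segment's actual implementation.

```python
from decimal import Decimal, InvalidOperation


def cast_value(value, target_type):
    """Try to cast value to a column's established type.

    Returns None when the value cannot be cast. The rules here are
    hypothetical and only illustrate the idea.
    """
    try:
        if target_type == "string":
            return str(value)
        if target_type == "decimal":
            return Decimal(str(value))
        if target_type == "bigint":
            number = Decimal(str(value))
            # Reject values that would lose their fractional part
            if number != number.to_integral_value():
                return None
            return int(number)
        if target_type == "boolean":
            if isinstance(value, bool):
                return value
            text = str(value).strip().lower()
            if text in ("true", "false"):
                return text == "true"
            return None
    except (InvalidOperation, ValueError, OverflowError):
        return None
    raise ValueError(f"unsupported target type: {target_type}")


def cast_column(values, target_type):
    # Every incoming value for the column is cast to the same target type
    return [cast_value(v, target_type) for v in values]


print(cast_column(["42", 7, 3.0, "3.7", "abc"], "bigint"))
# [42, 7, 3, None, None]
```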
