What is Data Validation?

Learn about the importance of validating data and how to do it.

Data validation is the process of ensuring data is accurate, consistent, and meets certain criteria or quality standards. The goal of data validation is to prevent errors or inconsistencies between datasets, which can cause issues like inaccurate reporting and poor decision-making.

One example of data validation you’ve likely already encountered is in spreadsheets like Microsoft Excel or Google Sheets. You can set data validation rules to ensure values entered into a row or column are consistent, for example by having users choose from a dropdown list or by constraining how values are entered (e.g., they must be formatted as a date or time, or must be whole numbers).

Below, we dive into how to validate data at scale, across all systems and databases.

At its core, data validation applies certain rules and standards to how data is collected, formatted, and stored to ensure it remains reliable.

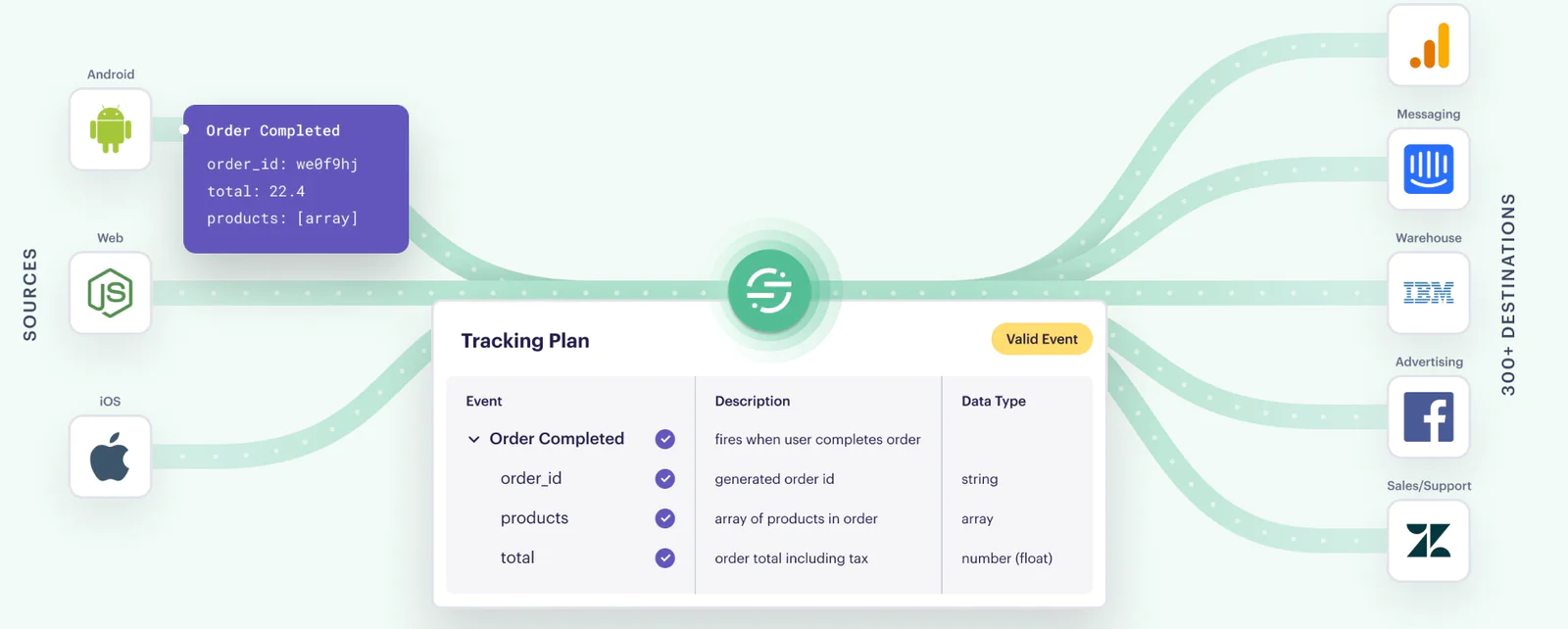

To get started with data validation, you first need to create internal alignment on what data you’re collecting, why you’re collecting it, and who will be using it. In other words, you need a data tracking plan. This tracking plan sets the naming conventions for your data, because even a subtle difference, such as “Page_Viewed” vs. “page_viewed”, can cause the same action to be recorded as two separate events and throw off reporting.
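To illustrate why capitalization matters, here is a minimal Python sketch that checks incoming event names against a tracking plan. The event names in `TRACKING_PLAN_EVENTS` and the function `check_event_name` are hypothetical and only meant to show the idea; they are not part of any particular tool.

```python
# Hypothetical tracking plan: the set of approved event names.
TRACKING_PLAN_EVENTS = {"page_viewed", "user_signed_up", "order_completed"}


def check_event_name(name: str) -> str:
    """Classify an event name as 'ok', 'case_mismatch', or 'unknown'."""
    if name in TRACKING_PLAN_EVENTS:
        return "ok"
    # Compare case-insensitively to catch names that differ only in capitalization.
    lowered = {approved.lower() for approved in TRACKING_PLAN_EVENTS}
    if name.lower() in lowered:
        return "case_mismatch"
    return "unknown"
```

Separating a case mismatch from an unknown event makes it easier to tell a naming slip apart from an event that was never planned.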

Data validation rules often check for things like proper formatting, valid ranges, and correct data types. Here are a few other characteristics to consider:

Completeness: All required data fields are filled and no essential information is missing.

Consistency: Data is consistent both within a dataset and across different datasets. For example, if a system uses the term "US Dollars" in one place, it should not use "USD" in another.

Format: Data adheres to a specified format. This may include date formats, phone numbers, email addresses, etc.

Range checks: Numeric data should fall within acceptable ranges. For example, a person's age cannot be negative.

Unique constraints: Certain fields, such as a unique identifier or primary key, contain distinct values. This helps prevent duplicate records.

Cross-field validation: Checking the relationship between different fields to ensure that they make logical sense together. For example, verifying that a start date comes before an end date.
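The checks above can be combined into a single validation function. The following is a rough sketch, assuming a hypothetical record with the fields `user_id`, `email`, `age`, `start_date`, and `end_date`. The email pattern is deliberately simple and the age limit of 120 is an arbitrary assumption.

```python
import re
from datetime import date

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
# Hypothetical required fields for this example.
REQUIRED_FIELDS = ("user_id", "email", "age", "start_date", "end_date")


def validate_record(record: dict, seen_ids: set) -> list[str]:
    """Return a list of validation errors (empty if the record passes)."""
    errors = []

    # Completeness: every required field must be present and non-empty.
    missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if missing:
        errors.append("missing fields: " + ", ".join(missing))
        return errors  # the remaining checks need these fields

    # Format: the email must match the expected pattern.
    if not EMAIL_RE.match(record["email"]):
        errors.append("email is not in a valid format")

    # Range: age must be a whole number from 0 to 120 (assumed limit).
    age = record["age"]
    if isinstance(age, bool) or not isinstance(age, int) or not 0 <= age <= 120:
        errors.append("age must be a whole number between 0 and 120")

    # Uniqueness: the identifier must not have been seen before.
    if record["user_id"] in seen_ids:
        errors.append("duplicate user_id")
    else:
        seen_ids.add(record["user_id"])

    # Cross-field: the start date must come before the end date.
    if record["start_date"] >= record["end_date"]:
        errors.append("start_date must be before end_date")

    return errors
```

Returning every error at once, rather than stopping at the first, gives whoever fixes the record a complete picture in one pass.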

Data validation comes with its own set of challenges, from handling the growing volume and velocity of data, to ensuring consistency and alignment across a disconnected tech stack. Here are a few common data validation challenges to be aware of:

Human error. Mistakes may be inevitable (to a certain degree), but when you’re dependent on manual data entry, or each team is working with their own naming conventions, you’re inviting mishaps and misreporting. Data validation rules can help remedy this, from introducing dropdown lists in spreadsheets to flagging any data that doesn’t adhere to your company’s tracking plan.

Incomplete data. Missing fields or records can degrade the quality of your data. Clearly communicate to your team which data events you intend to track and which fields are required, and run QA checks to ensure data is complete.

Changing data requirements. Data validation rules may need to be updated as business requirements change. Be sure to regularly review and update validation rules to accommodate evolving business needs.

Cross-system integration. Validating data consistency across multiple systems or databases can be complex. Data validation rules can help ensure accuracy as you integrate data from different systems, for example by deduplicating entries so the same event isn’t counted multiple times. For example, Typeform was able to reduce the number of events they were tracking by 75% after automating data validation with Segment Protocols.

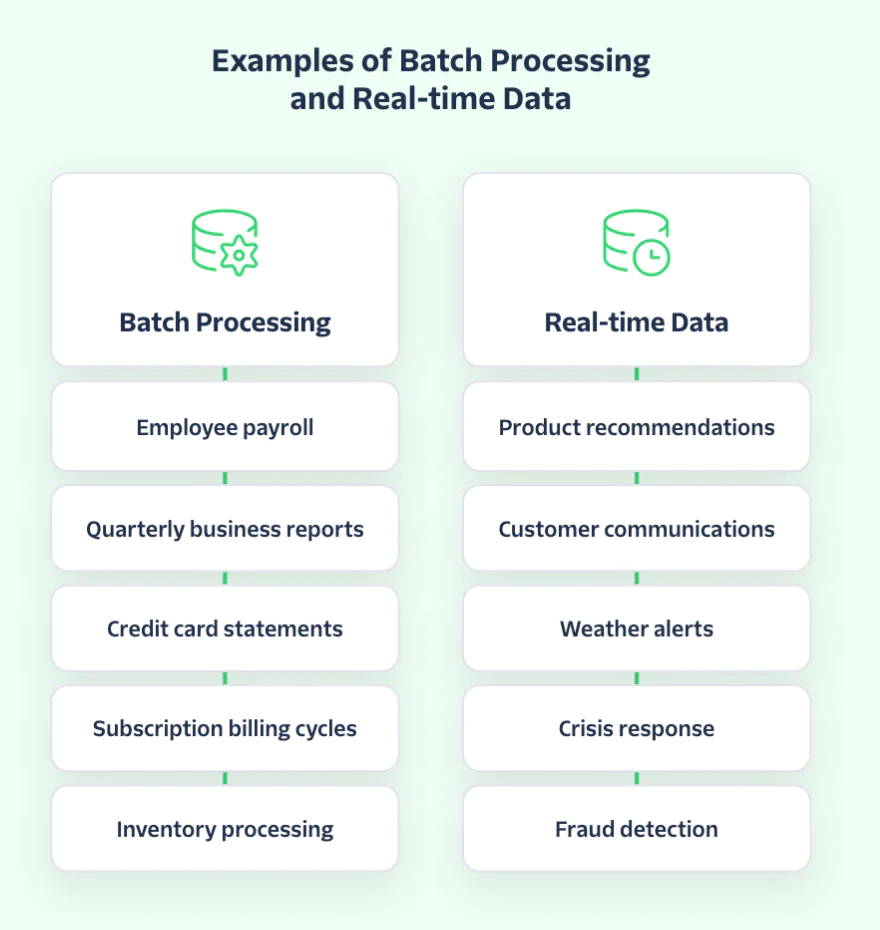

Data volume. As we mentioned above, validating large volumes of data can be resource-intensive. Consider distributed data processing, and decide which data should be handled via batch processing vs. real-time streaming.
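The deduplication step mentioned under cross-system integration can be sketched in a few lines of Python. This example assumes each event carries a unique identifier in a field called `message_id`; that field name is an assumption for illustration, so use whatever identifier your systems actually share.

```python
def deduplicate(events: list[dict], key: str = "message_id") -> list[dict]:
    """Keep the first occurrence of each event, based on its identifier."""
    seen = set()
    unique = []
    for event in events:
        event_id = event[key]
        if event_id in seen:
            continue  # already counted once, skip the repeat
        seen.add(event_id)
        unique.append(event)
    return unique
```

For a real-time stream, the `seen` set would need to live in shared storage and expire old identifiers, which this in-memory sketch does not attempt.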

Segment Protocols helps automate data validation (and several other processes related to data governance) at scale. First, Segment helps align internal teams around a single tracking plan, and can automatically block any event or property that doesn’t adhere to it.

You’ll be notified of violations immediately, and be able to view details about each violation in Segment (for instance, whether required properties were missing or property values had invalid types). Violations are also aggregated so you can quickly pinpoint any trends or root causes of these inconsistencies.
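To show why aggregation helps, here is a small Python sketch that counts violations by event and type. The record shape (`event` and `type` keys) and the sample values are hypothetical; this is not Segment's data format or API.

```python
from collections import Counter


def summarize_violations(violations: list[dict]) -> Counter:
    """Count violations per (event name, violation type) pair."""
    return Counter((v["event"], v["type"]) for v in violations)


# Hypothetical violation records, for illustration only.
sample = [
    {"event": "order_completed", "type": "missing_required_property"},
    {"event": "order_completed", "type": "missing_required_property"},
    {"event": "page_viewed", "type": "invalid_value_type"},
]

# The most frequent pair points at where to look first.
top_pair, count = summarize_violations(sample).most_common(1)[0]
print(top_pair, count)
```

A single noisy event and violation type often traces back to one source, such as a release that dropped a required property.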

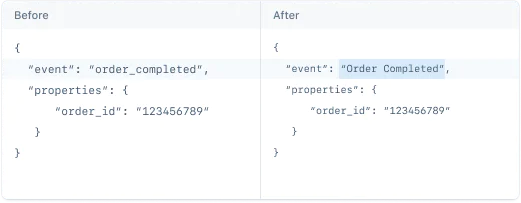

And with Transformations, businesses are able to correct bad data as it flows through Segment (or customize data for specific destinations). This can be used to rename an event that doesn’t match your naming conventions (e.g., User_SignedUp to user_signup), update a property value (e.g., changing “currency” to “USD”), create a new property value, and more.
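The kind of correction described above can be sketched in plain Python. This is only an illustration of the idea, not how Transformations is configured in Segment. The event shape, the `EVENT_RENAMES` mapping, and the rule that normalizes a `currency` value of "US Dollars" to "USD" are all assumptions.

```python
import copy

# Hypothetical mapping from non-conforming event names to approved ones.
EVENT_RENAMES = {"User_SignedUp": "user_signup"}


def transform(event: dict) -> dict:
    """Return a corrected copy of the event, leaving the original untouched."""
    fixed = copy.deepcopy(event)

    # Rename the event if it doesn't match the naming convention.
    fixed["event"] = EVENT_RENAMES.get(fixed["event"], fixed["event"])

    # Update a property value (assumed rule: normalize the currency label).
    props = fixed.get("properties", {})
    if props.get("currency") == "US Dollars":
        props["currency"] = "USD"

    return fixed
```

Working on a copy keeps the raw event available for auditing what was changed.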

Data validation ensures that data remains accurate, consistent, and complete at scale. It’s an essential component of having trust in your data and analytics.

Let's use the example of an online registration form where users provide their age. In this scenario, we might set data validation rules to ensure the entered age is a positive integer that falls within a reasonable range (e.g., isn’t implausibly high). You can also institute cross-field validation – for instance, if a birthdate is provided, you can calculate the person’s age to ensure it matches what they provided.
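Here is a minimal Python sketch of that registration-form check. The function names and the upper limit of 120 are assumptions chosen for illustration.

```python
from datetime import date
from typing import Optional


def age_on(birthdate: date, today: date) -> int:
    """Compute age in whole years as of `today`."""
    years = today.year - birthdate.year
    # Subtract one year if this year's birthday hasn't happened yet.
    if (today.month, today.day) < (birthdate.month, birthdate.day):
        years -= 1
    return years


def validate_age(
    age: object,
    birthdate: Optional[date] = None,
    today: Optional[date] = None,
    max_age: int = 120,  # assumed upper bound
) -> list[str]:
    """Return a list of problems with the submitted age (empty if valid)."""
    today = today or date.today()
    if isinstance(age, bool) or not isinstance(age, int):
        return ["age must be a whole number"]

    errors = []
    # Range check: a positive integer no greater than max_age.
    if not 0 < age <= max_age:
        errors.append(f"age must be between 1 and {max_age}")
    # Cross-field check: the age must agree with the birthdate, if given.
    if birthdate is not None and age_on(birthdate, today) != age:
        errors.append("age does not match birthdate")
    return errors
```

Passing `today` explicitly makes the check repeatable in tests, since the result otherwise depends on the current date.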

Protocols automates data validation at scale by enforcing a company’s tracking plan and blocking or flagging any non-conforming events for review.