11 Essential Data Validation Techniques

We walk through 11 indispensable data validation techniques for ensuring accuracy, reliability, and integrity in your datasets.

We walk through 11 indispensable data validation techniques for ensuring accuracy, reliability, and integrity in your datasets.

Data validation is the process of ensuring data meets standards to be consistent and accurate. It helps ensure the large volumes of data captured and created by an organization can be used effectively.

Why is this essential for businesses to practice? Without consistent data, it’s nearly impossible to have correct reporting or use data for enhanced analytics and insights. More than that, data validation can catch software glitches, errors in data collection and handling, or other company-wide quality issues that need to be addressed.

Both engineers and analysts play a role in validating data, although these roles can differ. For example, data engineers may be more likely to build and maintain the data pipeline and create the tools for data transfer and analysis workflows. Analysts, on the other hand, focus on the analysis, interpretation, and insights gained from data, as well as figuring out how to translate business problems into data queries. In most organizations, their duties may overlap somewhat, but their work is most geared toward the data validation techniques mentioned here.

Below are some common data validation techniques that engineers may use in their day to day.

A data type is the kind of information contained in a data field. An example of data type validation would be checking whether a numerical data field contains a number and rejecting other characters, such as uppercase letters or special symbols.

A range is the high and low end of a value and the values that fall between, for example, the range of 1-10. In range validation, only valid values within the range are accepted. For data with a specified latitude range of -20 to 40 degrees, range validation will help catch a value of -25 degrees.

Ensuring a predefined format is part of format validation. An example of this would be the date format of YYYY-MM-DD compared to MM-DD-YYYY. This may be relevant when dealing with data from different countries, where date format convention varies. Validation flags data that doesn’t fit the format to ensure it can be fixed.

A presence check simply checks that a field isn’t empty. If a data set is missing the title of a name, it may not be important. If it’s missing the last name, however, this may become an issue. Presence check ensures that the last name field has data for the entire usable dataset.

Pattern matching is very beneficial, especially when dealing with large volumes of data that may include some manual data entry. It checks against a set pattern to be sure all data in the set follows that pattern. An example includes an email format, which has some text before the @, as well as a domain and a .com, .org, or other. Any data that doesn’t fit this pattern, such as an email missing an @, will be flagged as invalid.

When two or more fields are checked and compared in relation to each other, this is cross-field validation. An example would be taking the number of concertgoers with VIP tickets, premium seats, and general admission and adding them together to compare with the total number of tickets sold. This can alert engineers to problems with ticket counts or an error in the total available seats in a stadium.

Now, let’s look at common data validation techniques for analysts.

A uniqueness check works just like it sounds. This validation looks to see that all data values are truly unique, such as the Social Security Number of a list of employees or the order number for a list of store orders to ship out. If any two fields have the same data, it’s a sign that something is wrong.

Data profiling is more involved than other types of data validation and seeks to understand three data characteristics at once:

Structure, or data organization and format

Content errors and inconsistencies

Relationship between parts of the data, such as data between two tables within a dataset

Data profiling helps ensure the data is in the correct form for data analysis and business insights.

In statistical validation, the data is evaluated by the conclusions one can draw from it. Specifically, it reveals if a scientific study can be replicated based on the data alone. If the data conclusions have merit and match research objectives, then they pass statistical validation, and the method of using the data can be used in similar future studies.

Companies often have proprietary workflows and ways they use data. These methods are unique to the business and meet their internal policies and values. When data is checked to be accurate and consistent according to these internal policies, it’s considered to be a form of business rule validation. It makes it much easier to automate data audits and check for compliance with industry best practices and data laws.

With external data validation, only data that meets preset rules can be entered into the dataset or processed. An example would be a database software that only accepts numerical data in a particular cell. It prevents non-numerical data from being included in the dataset and can prevent manual data entry errors or the importing of data that doesn’t meet the criteria.

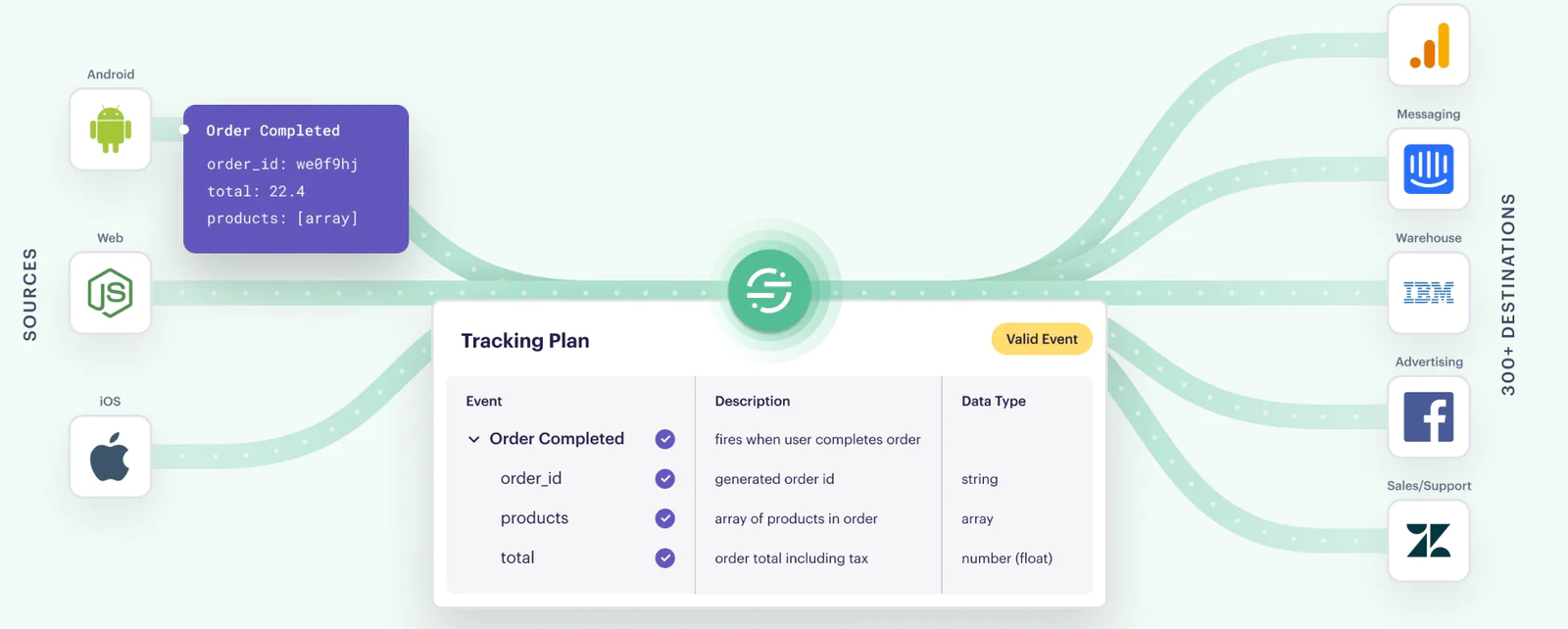

There are almost a dozen ways to check for data accuracy, consistency, and relevance, and they can be done manually to some degree. However, this is costly, uses too many human resources, and becomes very difficult to scale in this age of big data. Segment Protocols allows organizations to automate more of the data validation process by helping teams set up data governance guidelines at scale.

Review event violations at regular intervals, such as every hour, 24 hours, and seven days. Events can be viewed by type, such as missing required properties, invalid property value data types, or values not meeting conditional filtering. After these violations have been identified, they can be resolved; further action may be taken to address the issue so it doesn't happen again.

Organizations can use Schema to determine which data events should be accepted or blocked and even omit properties and traits. Any events not included in your custom Tracking Plan can be permanently dropped, while any invalid values and properties can be blocked altogether and discarded from the dataset. This saves time when combining data that isn't necessary or useful and speeds up the validation process.

Segment’s Transformations technology lets organizations fix bad data by changing it as it flows down the data stream. An example would be renaming a data field or changing a property value. It cannot fix past data, but it does allow organizations to run older data back through as new to transform it into useful data.

What’s normal for one business may be unexpected for another. That’s where anomaly detection becomes very useful, as it lets organizations set up rules for what would be an anomaly in their data. This could be a sudden drop in business outside of a typical slow time or a new increase in violations as defined by the Segment Tracking Plan.

Connect with a Segment expert who can share more about what Segment can do for you.

We'll get back to you shortly. For now, you can create your workspace by clicking below.

Data can be checked for accuracy through the process of validation. This can be done manually, with a human going through lines of data to catch errors, or done through automated means. With automated data validation, large volumes of data are checked against rules to ensure all datasets meet the requirements to be processed correctly. Often, only one piece of data needs to be flagged for inaccuracy to bring attention to the entire dataset.

One way organizations can validate data is by checking for violations in the formatting of fields. For example, any data that doesn't have the name field filled in or properly formatted would show up as a violation in an automated data validation tool and trigger the need for repair. Once fixed, this data is validated and ready to be used in the context of the larger dataset.

Yes, data validation can be automated to help ensure data accuracy and manage large volumes of data. Segment Protocols is one such data validation solution. Its features help organizations validate the accuracy and consistency of data at scale and let teams track data through a unified data tracking plan.

Enter your email below and we’ll send lessons directly to you so you can learn at your own pace.