Be specific about casing

When you're documenting each of these events and the properties associated with them, you also want to be crystal clear about casing. This might seem nitpicky, but it matters in the long run: to most analytics tools, "Account Created" and "account_created" are two different events.

Here are the five most common options:

- all lowercase: account created
- snake_case: account_created
- Proper Case: Account Created
- camelCase: accountCreated
- Sentence case: Account created

We like Proper Case for events and snake_case for properties.
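If you want the convention to be mechanical rather than something everyone has to remember, a pair of small helpers can normalize names before they're sent. This is a minimal sketch: `splitWords`, `toProperCase`, and `toSnakeCase` are hypothetical helpers (not part of analytics.js). They assume names are made of plain words separated by spaces, underscores, hyphens, or camelCase boundaries.

```javascript
// Hypothetical helpers (not part of analytics.js): split a name into
// lowercase words, then rejoin them in the chosen casing.
function splitWords(name) {
  return name
    .replace(/([a-z0-9])([A-Z])/g, '$1 $2') // break camelCase boundaries
    .split(/[\s_-]+/)                       // break on spaces, underscores, hyphens
    .filter(Boolean)
    .map((word) => word.toLowerCase());
}

// Proper Case for event names: "account_created" -> "Account Created"
function toProperCase(name) {
  return splitWords(name)
    .map((word) => word.charAt(0).toUpperCase() + word.slice(1))
    .join(' ');
}

// snake_case for property names: "artistName" -> "artist_name"
function toSnakeCase(name) {
  return splitWords(name).join('_');
}

console.log(toProperCase('account_created')); // Account Created
console.log(toSnakeCase('artistName'));       // artist_name
```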

Put it all together

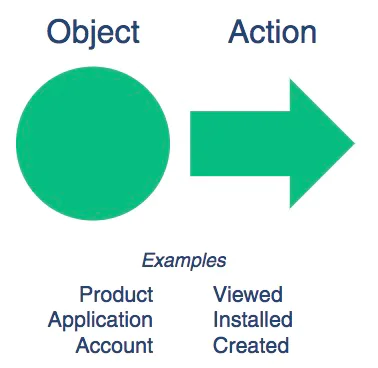

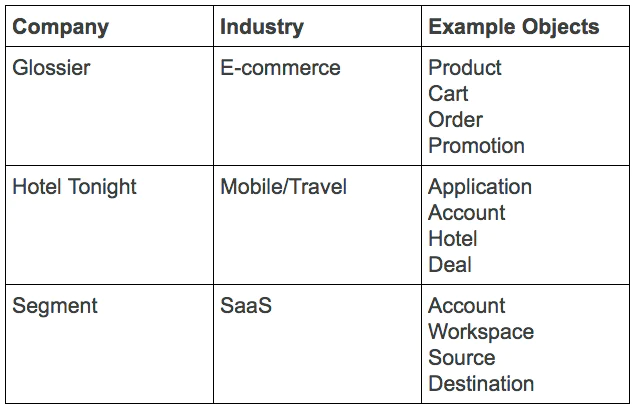

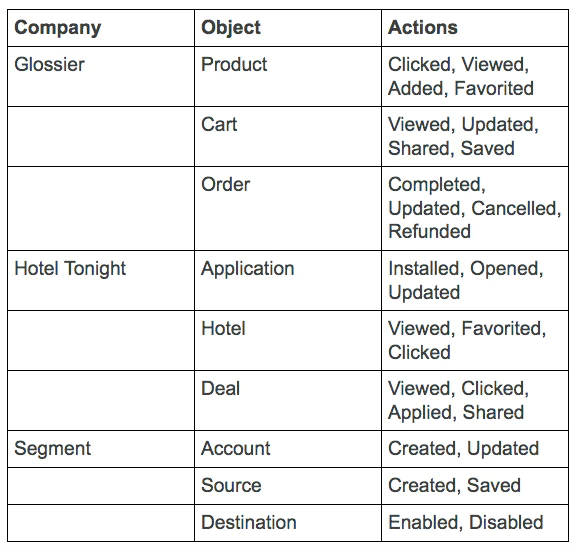

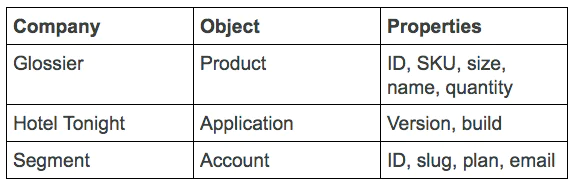

Putting this all together, it's really easy to construct your events.

Object Action: Song Played

Properties:

- name
- artist_name
- album
- label
- genre

Document these events in your tracking plan and make sure to implement them in code with the same formatting.

In code, it would look something like this:

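```javascript
// Event name in Proper Case, followed by a properties object with snake_case keys
analytics.track('Song Played', {
  name: 'What Do You Mean',
  artist_name: 'Justin Bieber',
  album: 'Purpose',
  label: 'Def Jam - School Boy',
  genre: 'Dance Pop'
});
```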
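Keeping the code and the tracking plan formatted the same way is easier if the plan also lives somewhere your code can check calls against it. Here's a rough sketch that assumes a hypothetical `trackingPlan` object and `validateEvent` function; neither is a Segment API, and the plan only lists the Song Played example.

```javascript
// Hypothetical tracking plan: each event name (Proper Case) maps to the
// property names (snake_case) it is allowed to carry.
const trackingPlan = {
  'Song Played': ['name', 'artist_name', 'album', 'label', 'genre'],
};

// Returns a list of problems; an empty list means the call matches the plan.
function validateEvent(event, properties = {}) {
  if (!Object.prototype.hasOwnProperty.call(trackingPlan, event)) {
    return [`Unknown event: "${event}"`];
  }
  const allowed = trackingPlan[event];
  return Object.keys(properties)
    .filter((key) => !allowed.includes(key))
    .map((key) => `Unexpected property on "${event}": "${key}"`);
}

// "artistName" is camelCase, so it doesn't match the plan's "artist_name".
const problems = validateEvent('Song Played', {
  name: 'What Do You Mean',
  artistName: 'Justin Bieber',
});
console.log(problems.length); // 1
```

One option is to run a check like this in tests, or in a thin wrapper around `analytics.track` during development, so that the plan and the code can't quietly drift apart.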

Other options

Of course, the object-action framework isn't the only way to do this. You can put either the object or the action first, use any type of casing, and write actions in the present or past tense.

The only thing that really matters is that you keep it consistent!
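Whatever convention you pick, a quick audit can tell you whether your existing event names actually follow it. The sketch below is an illustration, not a complete linter: `detectConvention` and `auditEventNames` are hypothetical, the patterns only cover the five casing options listed earlier, and single-word names come back as `unknown` because one word can't reveal a convention.

```javascript
// Hypothetical audit: each pattern describes a multi-word name in one of
// the five casing conventions. The first matching pattern wins.
const conventions = [
  ['all lowercase', /^[a-z0-9]+( [a-z0-9]+)+$/],
  ['snake_case', /^[a-z0-9]+(_[a-z0-9]+)+$/],
  ['camelCase', /^[a-z][a-z0-9]*([A-Z][a-z0-9]*)+$/],
  ['Proper Case', /^[A-Z][a-z0-9]*( [A-Z][a-z0-9]*)+$/],
  ['Sentence case', /^[A-Z][a-z0-9]*( [a-z0-9]+)+$/],
];

function detectConvention(name) {
  const match = conventions.find(([, pattern]) => pattern.test(name));
  return match ? match[0] : 'unknown';
}

// Group event names by convention; more than one group means inconsistency.
function auditEventNames(names) {
  const groups = {};
  for (const name of names) {
    const convention = detectConvention(name);
    (groups[convention] = groups[convention] || []).push(name);
  }
  return groups;
}

// Two groups here: 'Proper Case' (two names) and 'snake_case' (one name).
console.log(auditEventNames(['Song Played', 'Account Created', 'account_deleted']));
```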

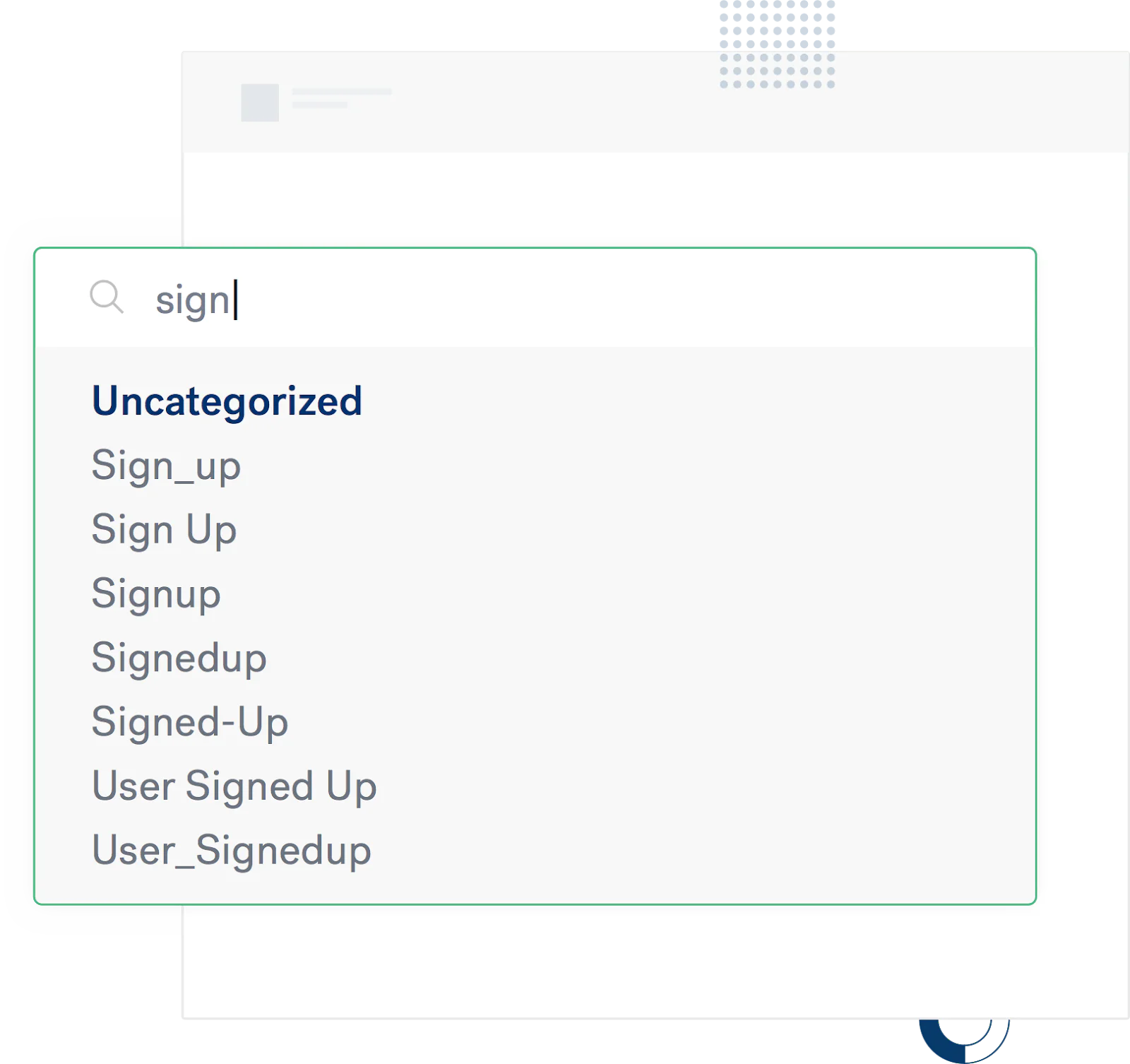

One other practice to avoid is dynamically creating new event names that contain unique values. Every unique value becomes its own event, so you'll never be able to make sense of your funnels. Plus, your bills for your analytics tools will get out of control.

For example, we sometimes see people put each user's email address into the event name for every sign-up. You're much better off sending values like that as properties.

Don't send sign-up events as `analytics.track('Sign Up - jake@segment.com');`

Instead, send them as `analytics.track('Sign Up', {email: 'jake@segment.com'});`
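Counting distinct event names shows why this matters. In this sketch, `makeRecorder` is a hypothetical stub standing in for `analytics.track` (it only records calls and sends nothing), and the email addresses are made up.

```javascript
// Hypothetical stub standing in for analytics.track: it records calls and sends nothing.
function makeRecorder() {
  const calls = [];
  return {
    track(event, properties = {}) {
      calls.push({ event, properties });
    },
    // Number of different event names that would show up in your tools.
    distinctEventNames() {
      return new Set(calls.map((call) => call.event)).size;
    },
  };
}

// Made-up sign-ups for illustration.
const emails = ['ann@example.com', 'bo@example.com', 'cy@example.com'];

const dynamicNames = makeRecorder();
const propertyBased = makeRecorder();
for (const email of emails) {
  dynamicNames.track(`Sign Up - ${email}`); // anti-pattern: unique value in the event name
  propertyBased.track('Sign Up', { email }); // unique value kept as a property
}

console.log(dynamicNames.distinctEventNames()); // 3
console.log(propertyBased.distinctEventNames()); // 1
```

Three sign-ups produce three different event names in the first case and a single event in the second. With real traffic, the first number keeps growing with every new user.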

Hopefully this deep dive into the object-action framework gave you some inspiration for keeping your data squeaky clean. We can't stress enough how much you'll thank yourself down the road when all of your events follow the same naming convention.

Have a different way you like to name events? Let us know on Twitter!