Data Extraction Guide

Demystifying the complex world of data extraction. Uncover methods, FAQs, and how Twilio Segment can elevate your data-driven strategies.

Data extraction is the process of collecting data from one or more sources and moving it to another environment for storage or analysis.

Companies collect data to make decisions that improve the effectiveness and efficiency of business activities. But all of that data is generated at different touchpoints. Customers may interact with your business online via your website, app, social media, or email. Brick-and-mortar shops may store sales and inventory data via point-of-sale systems or supply chain software. Factory floors and delivery fleets have IoT sensors that collect data like machine performance and vehicle speed.

All of those data records are mere facts that mean little to your business when they remain in the environments where they're generated. When you extract, process, and contextualize the data, you turn it into information. You can then query and analyze it to gain business insights that give you a competitive edge.

Much of the data businesses generate is never analyzed. With the right tools and systems, you can make that data accessible across your organization and put it to work.

Structured data is data that is presented in a standardized format, adhering to specified naming conventions and data models. Structured data includes numerical entries and data that can be organized in tabular form, so it’s typically ready to be queried, sorted, profiled, and analyzed.

Examples include your accounting books, inventory lists, and CRM records. Data stored in a relational database, such as the output of a typical ETL pipeline, is also structured.

Despite its apparent simplicity, structured data enables powerful applications. Supervised machine learning models, for instance, are typically trained on data with clear labels, categories, and hierarchies.

Examples: CRM entries, accounting records, directories
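To show what "ready to be queried, sorted, and analyzed" means in practice, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` table, its columns, and the sample rows are hypothetical; the point is that because every row follows the same schema, filtering and sorting take a single SQL query with no parsing step.

```python
import sqlite3

# Hypothetical "customers" table: every row follows the same schema,
# which is what makes structured data easy to query and sort.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers ("
    "id INTEGER PRIMARY KEY, name TEXT, region TEXT, lifetime_spend REAL)"
)
conn.executemany(
    "INSERT INTO customers (name, region, lifetime_spend) VALUES (?, ?, ?)",
    [
        ("Ada", "EMEA", 420.0),
        ("Grace", "AMER", 1310.5),
        ("Linus", "EMEA", 88.0),
    ],
)

# Filter and sort directly in SQL; no parsing or cleanup is needed first.
top_emea = conn.execute(
    "SELECT name, lifetime_spend FROM customers "
    "WHERE region = ? ORDER BY lifetime_spend DESC",
    ("EMEA",),
).fetchall()
print(top_emea)  # [('Ada', 420.0), ('Linus', 88.0)]
```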

Unstructured data doesn’t adhere to a specific data model. It’s stored in its native format – the format in which it was generated. It’s undefined and uncategorized. This means it doesn’t fit in rigid relational databases, so you’d store it in NoSQL databases or data lakes.

IDC predicts that by 2025, 90% of data generated globally will be unstructured. You generate lots of it every day – when you converse with customers via direct messages or social media, create product demo videos, or gather customer feedback on your products. AI- and ML-assisted analytics are often the most practical way to make sense of such data. For instance, call centers use sentiment analysis to assess a customer's emotions in a voice call or determine the nature of a written request.

Examples: audio recordings, IoT sensor data, emails

Halfway between structured and unstructured data is semi-structured data. This type of data can’t be stored in rigid rows and columns. However, it contains a semblance of structure through hierarchies based on metadata and tags.

Examples: image alt text, article headers, social media posts under a given hashtag
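JSON is a common carrier for semi-structured data. The sketch below uses two hypothetical social media posts: both carry descriptive keys (tags), but they don't share a fixed schema, so one has an `author` object and the other a `location`. The field names are assumptions for illustration only. The tags let you navigate the records, while optional fields need defensive access.

```python
import json

# Two hypothetical posts: self-describing keys, but no fixed schema.
raw = """
[
  {"id": 1, "text": "Loving the new app! #launchday",
   "hashtags": ["launchday"],
   "author": {"handle": "@sam", "followers": 1200}},
  {"id": 2, "text": "Checkout keeps failing #launchday #help",
   "hashtags": ["launchday", "help"], "location": "Berlin"}
]
"""
posts = json.loads(raw)

def posts_with_hashtag(posts, tag):
    """Return IDs of posts tagged with `tag`, tolerating a missing hashtags key."""
    return [p["id"] for p in posts if tag in p.get("hashtags", [])]

print(posts_with_hashtag(posts, "help"))       # [2]
print(posts_with_hashtag(posts, "launchday"))  # [1, 2]

# Nested, optional fields must be read defensively.
handles = [p.get("author", {}).get("handle") for p in posts]
print(handles)  # ['@sam', None]
```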

You get nuanced insights when you combine unstructured, structured, and semi-structured data. For instance, when you want to assess thousands of customer reviews, you use both quantitative data (numerical ratings, like one to five stars) and qualitative data (written feedback and social media comments, which you assess using natural language processing technology).
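Here is a rough sketch of combining the two signals from the review example. The reviews are hypothetical, and the tiny keyword lexicon is a deliberately naive stand-in for the natural language processing model a real team would use. The star ratings give an average score, while the text shows *which* problems lie behind it.

```python
from statistics import mean

# Hypothetical reviews: a structured star rating plus unstructured free text.
reviews = [
    {"stars": 5, "text": "Fast delivery, great quality"},
    {"stars": 4, "text": "Good product but the app is slow"},
    {"stars": 2, "text": "Broken on arrival, slow refund"},
]

# Naive keyword lexicon standing in for a real NLP sentiment model.
POSITIVE = {"fast", "great", "good"}
NEGATIVE = {"slow", "broken"}

def tokenize(text):
    """Lowercase, drop commas, and split on whitespace."""
    return text.lower().replace(",", " ").split()

def keyword_sentiment(text):
    """Score = number of positive words minus number of negative words."""
    words = tokenize(text)
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

avg_stars = mean(r["stars"] for r in reviews)              # quantitative signal
scores = [keyword_sentiment(r["text"]) for r in reviews]   # qualitative signal

# Count recurring complaint themes across all reviews.
complaints = {}
for r in reviews:
    for w in tokenize(r["text"]):
        if w in NEGATIVE:
            complaints[w] = complaints.get(w, 0) + 1

print(round(avg_stars, 2))  # 3.67
print(scores)               # [2, 0, -2]
print(complaints)           # {'slow': 2, 'broken': 1}
```

An average of 3.67 stars alone doesn't explain much; the text adds that slowness is the most frequent complaint.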

Data extraction can be done in a few ways, from full extraction to incremental batch and stream extraction. Let's take a closer look at these different methods.

Full extraction is a bulk transfer: you take all of your data and move it to a target system. Teams typically do full extractions to migrate from one storage environment to another, create a complete backup, or load records into a system for the first time.
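A minimal sketch of a full extraction between two SQLite databases, using only Python's standard library. It assumes the target table already exists with the same columns, and it replaces whatever the target held, mirroring a complete reload. The `orders` table and its rows are hypothetical.

```python
import sqlite3

def full_extract(source, target, table):
    """Copy every row of `table` from source to target (a full extraction).

    Assumes `table` is a trusted name that exists in both databases with
    identical columns. Existing target rows are replaced.
    """
    rows = source.execute(f"SELECT * FROM {table}").fetchall()
    target.execute(f"DELETE FROM {table}")
    if rows:
        placeholders = ", ".join(["?"] * len(rows[0]))
        target.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
    target.commit()
    return len(rows)

# Usage with two in-memory databases standing in for real systems.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)", [(1, "a", 20.0), (2, "b", 35.5)]
)
source.commit()

copied = full_extract(source, target, "orders")
print(copied)  # 2
```

Because it reads everything every time, this approach is simple but scales with the total dataset size, which is why the incremental methods below exist.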

Incremental batch extraction cuts large datasets into smaller chunks, making records easier to retrieve. It’s the difference between downloading one huge ZIP file (akin to full extraction) versus downloading batches of smaller files at a time (incremental batch extraction). The latter is more efficient – you can work with data that you’ve already retrieved while waiting for the rest of the dataset to be extracted.

Let’s say you want to know the potential lifetime value of five different customer segments. Incremental batch extraction allows you to extract spending data by segment and begin analyzing the first group while extracting the other batches.
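The segment example can be sketched with a Python generator that yields one batch per segment, so analysis of the first batch starts before later batches are pulled. The records and segment names are hypothetical, and filtering an in-memory list stands in for what would be a separate query per batch against the source system.

```python
# Hypothetical spending records: (customer_id, segment, amount).
SPEND = [
    (1, "new", 40.0), (2, "new", 60.0),
    (3, "loyal", 300.0), (4, "loyal", 500.0),
    (5, "lapsed", 15.0),
]

def extract_by_segment(records, segments):
    """Lazily yield (segment, amounts) one batch at a time.

    Each iteration stands in for a separate extraction query; nothing
    for later segments is retrieved until the caller asks for it.
    """
    for seg in segments:
        yield seg, [amount for _, s, amount in records if s == seg]

avg_spend = {}
for segment, amounts in extract_by_segment(SPEND, ["loyal", "new", "lapsed"]):
    # Analysis of this batch runs before the next batch is extracted.
    avg_spend[segment] = sum(amounts) / len(amounts) if amounts else 0.0
    print(segment, avg_spend[segment])
```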

With incremental stream extraction, you retrieve only the data that has been added or changed since your last extraction. This is useful for rule-based data collection. It uses less time, energy, and bandwidth than full extraction, as you only get the data you need.

Say you want to email a reward to customers who spend $50 or more on your eCommerce app this upcoming month. At the end of week one, you retrieve the email addresses of 10 customers who meet the criteria. The following week, an incremental stream extraction returns only the email addresses of customers who reached the $50 threshold during week two, rather than everyone who has qualified since the beginning of the month.
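The reward scenario can be sketched with a watermark: each run reads only purchases newer than the last processed day, updates running monthly totals, and returns customers who crossed $50 in that window. The purchase log, the day-of-month watermark, and the class name are hypothetical. Real sources might instead expose an `updated_at` column or change data capture, and the filter would be a query against the source rather than a list comprehension.

```python
from dataclasses import dataclass, field

THRESHOLD = 50.0  # monthly spend needed for the reward

@dataclass
class IncrementalExtractor:
    """Hypothetical extractor that remembers where the last run stopped."""
    watermark: int = 0                           # last day already processed
    totals: dict = field(default_factory=dict)   # running spend per email
    qualified: set = field(default_factory=set)  # emails already returned

    def run(self, purchases, up_to_day):
        # Incremental step: read only rows newer than the watermark.
        new_rows = [p for p in purchases if self.watermark < p[0] <= up_to_day]
        newly_qualified = []
        for _day, email, amount in new_rows:
            self.totals[email] = self.totals.get(email, 0.0) + amount
            if self.totals[email] >= THRESHOLD and email not in self.qualified:
                self.qualified.add(email)
                newly_qualified.append(email)
        self.watermark = up_to_day
        return newly_qualified

# Hypothetical purchase log: (day_of_month, email, amount).
purchases = [
    (2, "ana@example.com", 55.0),
    (5, "ben@example.com", 30.0),
    (9, "ben@example.com", 25.0),
    (11, "cy@example.com", 10.0),
    (12, "ana@example.com", 20.0),
]

extractor = IncrementalExtractor()
week1 = extractor.run(purchases, up_to_day=7)
week2 = extractor.run(purchases, up_to_day=14)
print(week1)  # ['ana@example.com']
print(week2)  # ['ben@example.com']
```

Ana's second purchase on day 12 is read in week two but doesn't reappear in the results, because she already qualified in week one.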

Data extraction is the first step in building personalized experiences for your customers. With the Segment Customer Data Platform (CDP), you can put disparate data together to gain a single customer view and build accurate audience segments. You can then build workflows for marketing, customer engagement, and product experiences based on these segments and real-time customer actions.

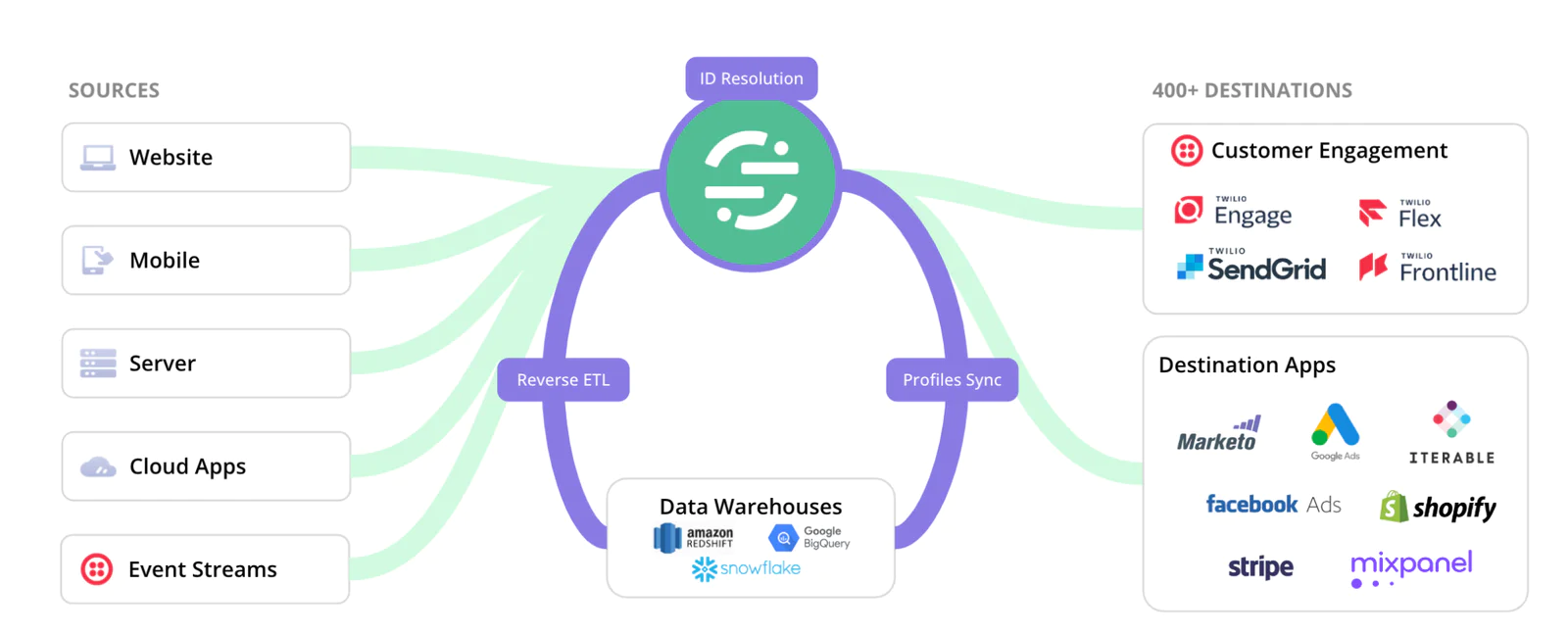

You can integrate and sync data in your CDP with your warehouse data using Segment’s Profiles Sync and Reverse ETL features. As a result, you break down data silos, reduce the need to create shadow profiles, and enrich your customer data with relevant traits from your warehouse.

By having all of your data in one place, you reduce data silos in your organization. You also get a broader view of your customers’ interactions with your business. Segment’s Protocols data cleaning tool also lets you set controls to ensure privacy and security. For instance, you can set parameters to anonymize data in compliance with relevant regulations.

Segment’s identity resolution feature analyzes the first-party data in your CDP to identify a customer's journey across different channels. It then stitches that data into a unified profile, giving you an aerial view of your customer’s journey across different touchpoints.
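To illustrate the general idea of stitching identifiers into profiles, here is a sketch that uses a union-find (disjoint-set) structure: any two identifiers seen on the same event are merged into one profile. This is a textbook technique chosen for illustration, not a description of how Segment's identity resolution works internally. The identifier prefixes and events are hypothetical.

```python
class UnionFind:
    """Disjoint-set structure for grouping identifiers that refer to one person."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra

# Hypothetical events; each channel knows only some of a person's identifiers.
events = [
    {"channel": "web", "ids": ["anon:123"]},
    {"channel": "web", "ids": ["anon:123", "email:kim@example.com"]},
    {"channel": "mobile", "ids": ["device:abc", "user:42"]},
    {"channel": "email", "ids": ["email:kim@example.com", "user:42"]},
    {"channel": "web", "ids": ["anon:999"]},
]

uf = UnionFind()
for event in events:
    first, *rest = event["ids"]
    uf.find(first)  # register events that carry a single identifier
    for other in rest:
        uf.union(first, other)  # identifiers on one event belong together

# Group events into unified profiles keyed by their root identifier.
profiles = {}
for event in events:
    root = uf.find(event["ids"][0])
    profiles.setdefault(root, []).append(event["channel"])

print(len(profiles))  # 2
```

The `email` event links the web visitor (`anon:123`) to the mobile user (`user:42`), so four events across three channels collapse into one profile, while `anon:999` stays separate.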

Segment’s Profiles Sync feature lets you send the unified profiles to your warehouse, eliminating the need to create shadow profiles. Your data scientists can enrich the dataset with hundreds or even thousands of traits. Send the enriched dataset back to the Segment CDP to create more detailed, accurate, and granular profiles and audience segments.

You don’t need to build and manage multiple reverse ETL pipelines to activate your warehouse data. Use Segment’s reverse ETL to send synced and enriched profiles back to the Segment CDP, which integrates with more than 450 downstream tools for immediate activation.

Structured data is the type of data that can be organized in rows and columns and adheres to a data model. Unstructured data can't be stored in a simple relational database and doesn't follow hierarchies, standard formats, or naming conventions.

Data extraction is the process of retrieving data from a source for the purpose of storing and/or processing it. Data mining is searching through massive datasets to identify patterns and relationships that are useful for analysis.

Reverse ETL extracts data from a data storage repository, such as a data warehouse, transforms it, and loads it into a destination tool or platform. ETL goes the opposite way: you extract data from a source (or sources), transform it, and load it into a data storage repository.
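The two directions can be contrasted in a short sketch. An in-memory SQLite database plays the warehouse, the source events are hypothetical, and `send` is a placeholder for whatever client a downstream tool provides. The payload shape and the `lifetime_spend` trait name are illustrative assumptions, not Segment's Reverse ETL API.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE orders (email TEXT, amount_cents INTEGER)")

def etl(source_events):
    """ETL: extract from a source, transform, load into the warehouse."""
    rows = [
        # Transform: normalize the email and convert dollars to cents.
        (e["email"].strip().lower(), round(e["amount"] * 100))
        for e in source_events
        if e.get("type") == "purchase"
    ]
    warehouse.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    warehouse.commit()

def reverse_etl(send):
    """Reverse ETL: extract from the warehouse, transform, load into a tool."""
    query = "SELECT email, SUM(amount_cents) FROM orders GROUP BY email ORDER BY email"
    for email, total_cents in warehouse.execute(query):
        # Transform: reshape the warehouse row into a destination payload.
        send({"email": email, "traits": {"lifetime_spend": total_cents / 100}})

etl([
    {"type": "purchase", "email": " Kim@Example.com", "amount": 19.99},
    {"type": "page_view", "email": "kim@example.com"},
    {"type": "purchase", "email": "kim@example.com", "amount": 5.01},
])
sent = []
reverse_etl(sent.append)  # a list stands in for a real destination client
print(sent)  # [{'email': 'kim@example.com', 'traits': {'lifetime_spend': 25.0}}]
```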

The Segment Customer Data Platform (CDP) does away with the need to build and manage separate ETL pipelines for every data source. Using APIs, Segment connects to your sources with a few clicks and lets you load the data into a centralized database.