What is Data Governance? Types, Examples & Best Practices

Data Governance 101: Understand what data governance entails, and best practices, to ensure data remains secure, private, accurate, and useful.

Data governance refers to the set of policies and best practices a business creates to dictate how its data should be collected, unified, stored, activated, and deleted. It essentially provides a set of instructions on how to standardize data collection, achieve data democratization, and regulate who internally can access sensitive or personally identifiable information (PII).

Businesses are under increased pressure to be data driven. Data is an asset, a competitive differentiator, and the North Star helping you navigate an unpredictable landscape. But when data isn’t managed properly, all those positive qualities can flip, turning data into a red herring, a liability, and a financial burden.

All this to say: to succeed at being data driven, businesses need data governance.

We’ve talked about what data governance helps protect against. Now, let’s dive deeper into its benefits.

Data silos are easy to create and incredibly annoying to fix. The average business uses well over a hundred tools across its tech stack (a typical mid-market company uses roughly 185 apps throughout its workflows). And while each team has access to the data collected in the tools it owns, that data can be difficult to share across the organization unless an engineer manually pulls and formats it.

An overview of some common sources of customer data

In one study of 400+ decision-makers across industries, 75% said they struggled with siloed data. When asked why their current data and analytics management wasn’t helping them keep up with customer expectations, respondents gave three main reasons:

A lack of integrations

Difficulty in setting up integrations

An inability to handle the volume of data they had to collect and unify

Data governance tackles these pain points ahead of time rather than reacting in the moment. It asks – and answers – important questions like: how are we setting up integrations, and who is the point person for this? Is this something we can automate at scale? How are we unifying data and where are we sending it? How are we ensuring every team is able to act on insights?

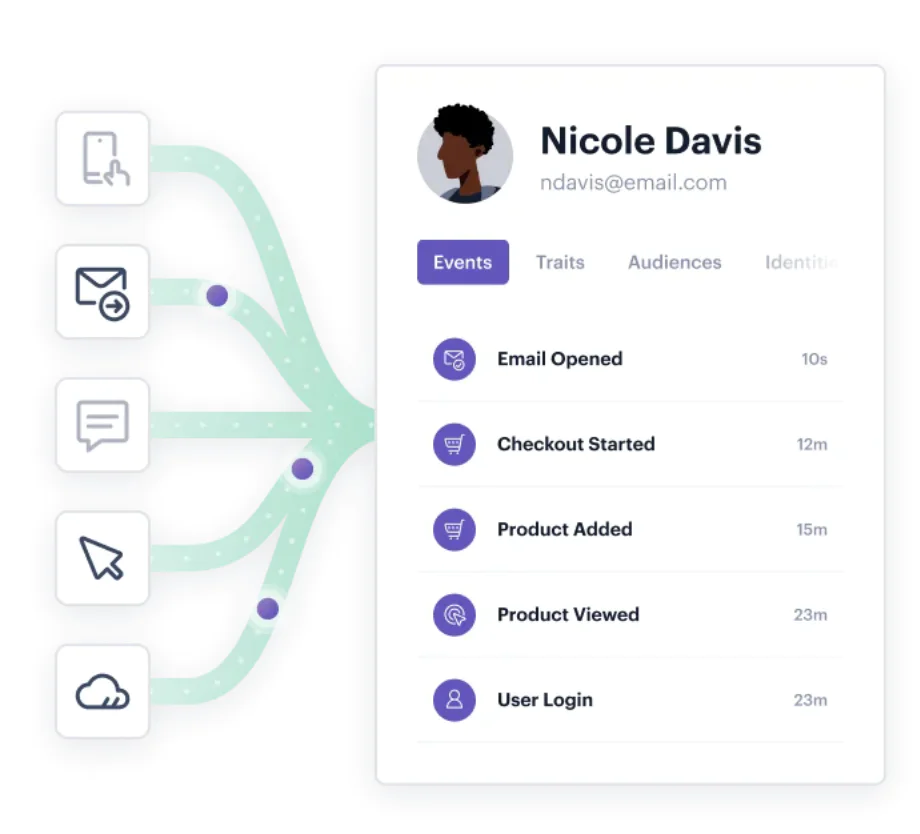

Eliminating data silos is a crucial step in establishing a single view of the customer. True to its name, this single view consolidates a customer’s behavioral history with your business (e.g., pages viewed, orders completed) along with key traits and identifiers (e.g., their email address, phone number, NPS ratings, etc.). This unified customer profile provides businesses with the ability to act on real-time data.
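To make the idea of a single customer view more concrete, here is a minimal Python sketch of a profile that folds incoming messages into one record. Several details are assumptions for illustration: the message shapes (`identify` calls carrying `traits`, `track` calls carrying an `event` name, loosely modeled on common tracking calls), the hypothetical `CustomerProfile` class, and the last-write-wins rule for traits. This is not a description of how Segment builds profiles internally.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class CustomerProfile:
    """Hypothetical unified profile: identifiers, traits, and behavioral history in one place."""

    user_id: str
    identifiers: Dict[str, str] = field(default_factory=dict)
    traits: Dict[str, Any] = field(default_factory=dict)
    events: List[Dict[str, Any]] = field(default_factory=list)

    def apply(self, message: Dict[str, Any]) -> None:
        """Fold one incoming message (assumed shape) into the profile."""
        if message["type"] == "identify":
            traits = message.get("traits", {})
            # Later trait values overwrite earlier ones (last-write-wins).
            self.traits.update(traits)
            # Promote contact details to identifiers so they can be used for matching.
            for key in ("email", "phone"):
                if key in traits:
                    self.identifiers[key] = traits[key]
        elif message["type"] == "track":
            # Keep behavioral history (pages viewed, orders completed, ...) in arrival order.
            self.events.append({"event": message["event"], "timestamp": message["timestamp"]})


profile = CustomerProfile(user_id="u-123")
profile.apply({"type": "identify", "traits": {"email": "ada@example.com", "nps": 9}})
profile.apply({"type": "track", "event": "Order Completed", "timestamp": "2024-01-05T10:00:00Z"})
print(profile.traits)  # {'email': 'ada@example.com', 'nps': 9}
```

Because every source writes into the same record, any team reading the profile sees the same traits and history. That shared record is what makes acting on real-time data possible.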

From a data governance point of view, achieving a single view of the customer means answering: how are we performing identity resolution? This is a complex question with a complex answer to match, but we’ll cover a few key components. First, how are you identifying customers? Common identifiers include email addresses, IP addresses, and login details. This is important information in its own right, but it is not enough to create a nuanced understanding of customers.

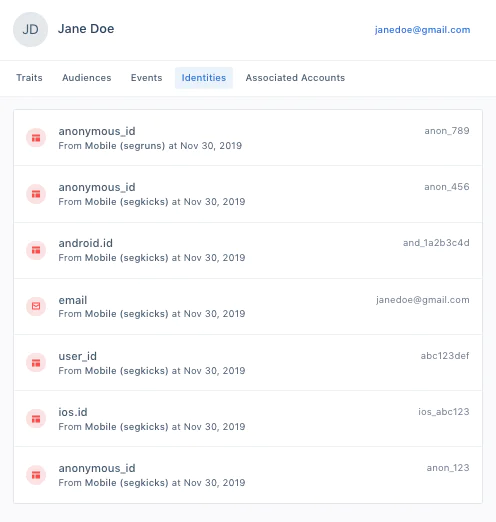

This is where external IDs come in: identifiers pulled from external sources. At Segment, we automatically include external identifiers like Android and iOS IDs, along with anonymous IDs that can be merged into a known profile. Customers can also customize external IDs to have complete control over what constitutes an identifier. (All of these identifiers are then stored in an identity graph.)

Identity resolution can link anonymous_ids to known users to avoid having multiple profiles for the same person.

Identity resolution generally follows one of two approaches: deterministic or probabilistic. Deterministic identity resolution is based on what you know with absolute certainty (e.g., first-party customer data). Probabilistic identity resolution is based on what you predict to be true (using a statistical model). At Segment, we use deterministic identity resolution to avoid any margin of error.
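One way to picture a deterministic identity graph is a union-find structure over exact identifier values. In this model, two identifiers are merged only when they arrive together in the same message, so no link is ever guessed. The sketch below is illustrative: the `IdentityGraph` class and the identifier type names (`anonymous_id`, `ios.id`, `user_id`, `email`) are assumptions chosen for the example, not Segment’s internal implementation.

```python
from typing import Dict, Set, Tuple

Identifier = Tuple[str, str]  # (type, value), e.g. ("anonymous_id", "anon-42")


class IdentityGraph:
    """Hypothetical deterministic identity graph: union-find over exact identifier values.

    Identifiers are merged only when they appear together in the same message,
    i.e. when the link is known for certain. No statistical guessing is involved.
    """

    def __init__(self) -> None:
        self._parent: Dict[Identifier, Identifier] = {}

    def _find(self, node: Identifier) -> Identifier:
        self._parent.setdefault(node, node)
        while self._parent[node] != node:
            # Path halving: point each visited node at its grandparent to keep chains short.
            self._parent[node] = self._parent[self._parent[node]]
            node = self._parent[node]
        return node

    def link(self, *identifiers: Identifier) -> None:
        """Record that every identifier seen in one message belongs to the same person."""
        roots = [self._find(i) for i in identifiers]
        for root in roots[1:]:
            self._parent[root] = roots[0]

    def same_person(self, a: Identifier, b: Identifier) -> bool:
        return self._find(a) == self._find(b)

    def profile_of(self, identifier: Identifier) -> Set[Identifier]:
        """All identifiers that resolve to the same person as `identifier`."""
        root = self._find(identifier)
        return {node for node in list(self._parent) if self._find(node) == root}


graph = IdentityGraph()
# An anonymous visitor uses the iOS app before logging in...
graph.link(("anonymous_id", "anon-42"), ("ios.id", "ios-7"))
# ...then logs in, so one message carries both the anonymous and the known identifiers.
graph.link(("anonymous_id", "anon-42"), ("user_id", "u-1"), ("email", "ada@example.com"))
print(graph.same_person(("ios.id", "ios-7"), ("email", "ada@example.com")))  # True
```

A probabilistic approach would instead merge records whose similarity score crosses some threshold. That can link more records, but it accepts the margin of error that the deterministic approach avoids.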

Want to learn more about identity resolution? Check out our comprehensive guide.

For data to be reliable it needs to be:

Complete (e.g., no missing values)

Consistent (e.g., reporting doesn’t vary between different tools)

Gathered from trustworthy sources (e.g., don’t buy customer data)

Unique (e.g., no double entries for the same event)
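Several of these properties can be checked mechanically. The following is a minimal sketch covering completeness, trusted sources, and uniqueness for a batch of events. Consistency across tools requires comparing reports between systems, so it is left out here. The field names (`messageId`, `event`, `userId`, `timestamp`, `source`), the required-field list, and the source allowlist are all hypothetical.

```python
from typing import Any, Dict, List, Set

REQUIRED_FIELDS = ("messageId", "event", "userId", "timestamp")  # hypothetical required fields
TRUSTED_SOURCES = {"web", "ios", "android", "server"}  # hypothetical first-party sources


def check_batch(events: List[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Report problems in a batch of events, grouped by the reliability property they break."""
    problems: Dict[str, List[str]] = {"complete": [], "trusted": [], "unique": []}
    seen: Set[str] = set()
    for event in events:
        message_id = event.get("messageId")
        label = message_id or "<no messageId>"
        # Complete: every required field is present and non-empty.
        missing = [f for f in REQUIRED_FIELDS if event.get(f) in (None, "")]
        if missing:
            problems["complete"].append(f"{label}: missing {', '.join(missing)}")
        # Trustworthy: only accept data from known first-party sources.
        if event.get("source") not in TRUSTED_SOURCES:
            problems["trusted"].append(f"{label}: untrusted source {event.get('source')!r}")
        # Unique: the same message ID must not be counted twice.
        if message_id:
            if message_id in seen:
                problems["unique"].append(f"{label}: duplicate")
            seen.add(message_id)
    return problems
```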

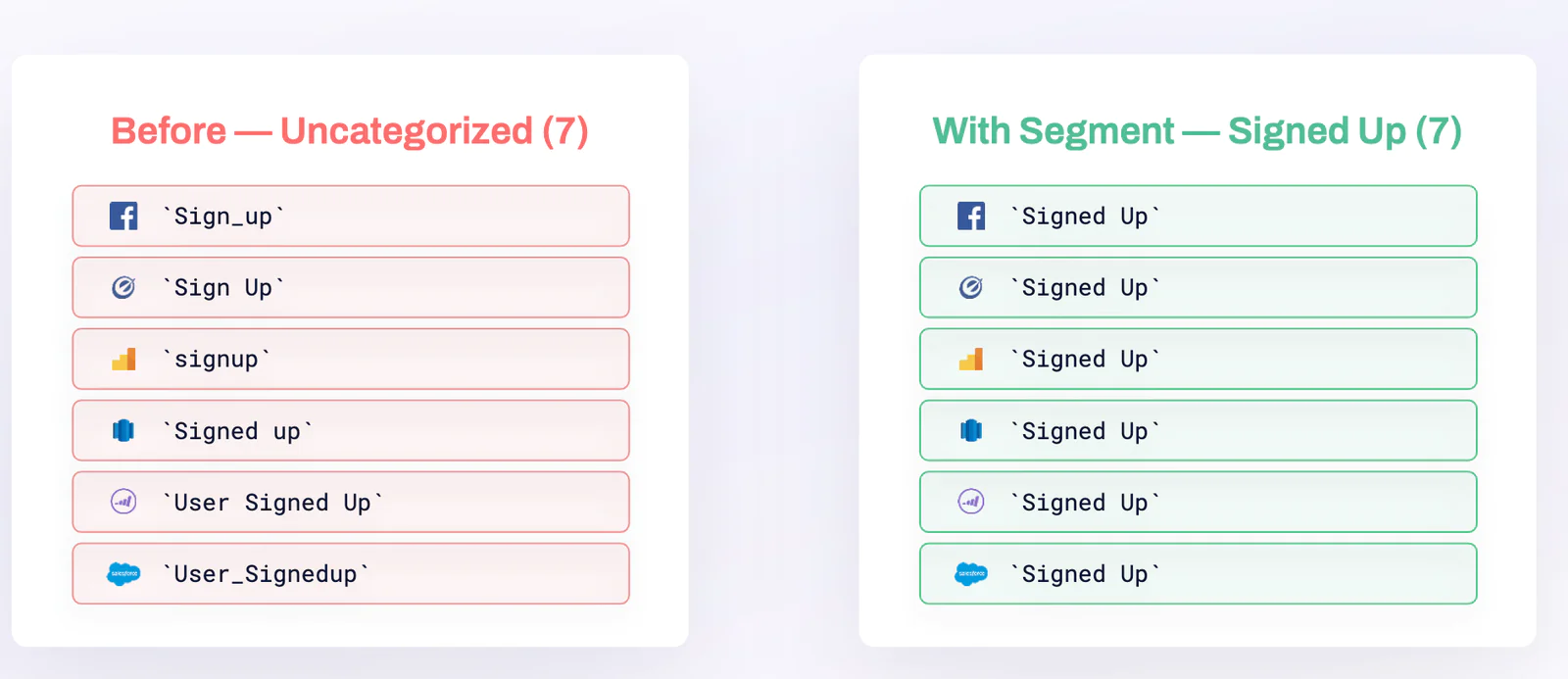

Data governance helps ensure these qualities are met, first by establishing a company-wide tracking plan to standardize naming conventions. This avoids miscounting the same event under different nomenclature, like “sign_up” versus “signed up” (which happens more often than you might think).

You can learn more about formalizing naming conventions and data collection best practices here.

The importance of standardized naming conventions can be seen above: without them, a single event can be tracked multiple times.
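The sketch below shows the miscounting problem and one way to correct it: normalize spelling variants and map them to a single approved name before counting. The alias table and the approved names (“Signed Up”, “Order Completed”) are hypothetical. In practice, the approved names would come from your own tracking plan.

```python
import re
from collections import Counter
from typing import Optional

# Hypothetical alias table a team might keep next to its tracking plan.
# Keys are normalized spellings; values are the single approved event name.
EVENT_ALIASES = {
    "signed up": "Signed Up",
    "sign up": "Signed Up",
    "signup": "Signed Up",
    "order completed": "Order Completed",
}


def canonical_name(raw: str) -> Optional[str]:
    """Map a raw event name to its approved name, or None if the plan doesn't know it."""
    # Treat spaces, underscores, and hyphens alike, and ignore case.
    key = re.sub(r"[\s_\-]+", " ", raw).strip().lower()
    return EVENT_ALIASES.get(key)


raw_events = ["sign_up", "signed up", "Signed Up", "Order Completed"]
print(len(Counter(raw_events)))                         # 4 "different" events
print(Counter(canonical_name(e) for e in raw_events))  # Counter({'Signed Up': 3, 'Order Completed': 1})
```

Returning `None` for unknown names, rather than guessing, surfaces events that should be added to the plan or fixed at the source.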

You also need to think through how you’ll build and maintain data pipelines to ensure clean data and low latency. To our earlier point, businesses rarely start out with 185 (or more) tools. Tools and apps are integrated or deprecated over time, which means you have to continuously ensure data quality as your tech stack evolves.

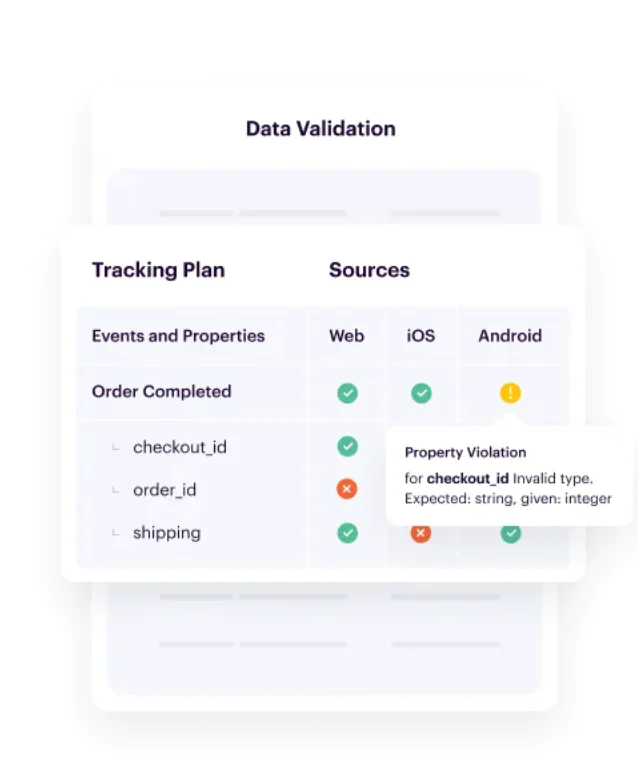

We recommend automating data validation, as it’s more scalable and less time-consuming than manually testing your tracking code. Automation lets you audit data in minutes and can block issues before bad data spreads throughout the organization.

With Segment Protocols, events that don’t adhere to a business’s tracking plan are automatically blocked and flagged for review.
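The general idea of automated validation can be sketched as a gate. Each event is checked against a tracking plan; valid events flow downstream, and invalid ones are held back with a reason attached. This is a hypothetical illustration, not how Protocols is implemented. The plan format (event name mapped to required properties and Python types) is an assumption. A production setup would more likely express the plan in a schema language such as JSON Schema.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical tracking plan: approved event names -> required properties and their types.
TRACKING_PLAN: Dict[str, Dict[str, type]] = {
    "Signed Up": {"plan": str},
    "Order Completed": {"order_id": str, "revenue": float},
}


def violations(event: Dict[str, Any]) -> List[str]:
    """Every way an event deviates from the tracking plan (an empty list means valid)."""
    name = event.get("event")
    if name not in TRACKING_PLAN:
        return [f"unplanned event {name!r}"]
    props = event.get("properties", {})
    found = []
    for prop, expected_type in TRACKING_PLAN[name].items():
        if prop not in props:
            found.append(f"{name}: missing property {prop!r}")
        elif not isinstance(props[prop], expected_type):
            found.append(f"{name}: {prop!r} should be {expected_type.__name__}")
    return found


def gate(events: List[Dict[str, Any]]) -> Tuple[list, list]:
    """Forward valid events downstream; hold back invalid ones, with reasons, for review."""
    forwarded, flagged = [], []
    for event in events:
        problems = violations(event)
        if problems:
            flagged.append((event, problems))
        else:
            forwarded.append(event)
    return forwarded, flagged
```

The type check is deliberately strict: a `revenue` sent as the string `"42"` is flagged rather than silently coerced, so the fix happens in the tracking code instead of in every downstream report.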

Every business needs a data privacy policy, for both legal and ethical reasons. From the GDPR to the CCPA and HIPAA, there’s been a growing sense of urgency around data privacy in recent years, from regulators and consumers alike. Data breaches and shady third-party data vendors have eroded consumer trust: one survey found that only 40% of consumers trusted businesses to keep their personal data secure and use it responsibly.

A privacy policy outlines how your business will collect customer data (and why), while clarifying how that data will be used. Companies also need to be prepared to handle data deletion requests when users submit them, and to consider where they store and process data if they do business internationally (as data localization laws are on the rise). You can take a look at Twilio Segment’s privacy policy here.
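Handling a deletion request means finding the user in every system that holds their data, not just one. The minimal sketch below shows one common pattern. It deletes the user’s records from each store, reports which stores actually held data (useful as an audit trail), and suppresses the ID so new events for that user are dropped. The `InMemoryStore` class, the function names, and the `userId` field are hypothetical stand-ins for real warehouses, CRMs, and tools.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable, Set


@dataclass
class InMemoryStore:
    """Stand-in for any system that holds customer records (warehouse, CRM, email tool...)."""

    name: str
    records: Dict[str, Dict[str, Any]] = field(default_factory=dict)  # user_id -> record

    def delete_user(self, user_id: str) -> bool:
        """Remove the user's record; return True if there was one to remove."""
        return self.records.pop(user_id, None) is not None


def handle_deletion_request(user_id: str, stores: Iterable[InMemoryStore], suppressed: Set[str]) -> Dict[str, bool]:
    """Delete a user from every store, then suppress future collection for that ID."""
    report = {store.name: store.delete_user(user_id) for store in stores}
    suppressed.add(user_id)
    return report


def accept(event: Dict[str, Any], suppressed: Set[str]) -> bool:
    """Drop incoming events from users who have asked to be deleted."""
    return event.get("userId") not in suppressed
```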

To protect personally identifiable information (PII), many businesses follow the principle of least privilege. This often means configuring privacy controls to selectively route customer data to specific destinations, while also automatically masking or blocking PII to limit access to certain individuals or departments.
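Here is a hedged sketch of least privilege applied to routing. Each destination gets an explicit allowlist of the PII fields it may receive, and every other PII field is masked before the data leaves. The field classification, destination names, and allowlists are hypothetical. Unknown destinations receive no PII by default.

```python
import hashlib
from typing import Any, Dict, Set

PII_FIELDS = {"email", "phone", "address"}  # hypothetical PII classification

# Hypothetical per-destination allowlists: which PII fields each tool may receive.
DESTINATION_PII_ACCESS: Dict[str, Set[str]] = {
    "email_tool": {"email"},
    "analytics": set(),
    "support_desk": {"email", "phone"},
}


def mask(value: str) -> str:
    """Replace a value with a truncated SHA-256 digest (stable, but not human-readable)."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def prepare_for(destination: str, traits: Dict[str, Any]) -> Dict[str, Any]:
    """Copy traits for one destination, masking any PII field it isn't cleared to see."""
    allowed = DESTINATION_PII_ACCESS.get(destination, set())  # unknown destination: no PII
    return {
        key: (value if key not in PII_FIELDS or key in allowed else mask(str(value)))
        for key, value in traits.items()
    }
```

Hashing is pseudonymization, not anonymization. Common values such as email addresses can be re-identified by hashing candidate values. When a destination truly has no need for a field, dropping the field entirely is the stricter option.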

Then, there’s the matter of how you’re protecting customer data from external threats like security breaches. As of now, the leading international standard is ISO/IEC 27001, which sets out requirements for an information security management system (ISMS) and against which organizations can be certified. SOC 2 is another framework for protecting customer data, built around the five Trust Services Criteria:

Security

Availability

Processing integrity

Confidentiality

Privacy

Here’s a look at how we handle security at Segment.

The collective cost of bad data each year has crossed into the trillions of dollars. For individual companies, bad data can come with an average annual price tag of $12.9 million.

We know that data shapes everything from product development to business strategies, AI & machine learning, and day-to-day decision making. And when that data is inaccurate or unreliable, the cost is clearly monumental.

But beyond monetary costs, building custom integrations, maintaining data pipelines, and cleaning data can be a hefty drain on time and resources. We’ve found that it takes an average of 100 engineering hours to connect a new marketing or analytics tool, and roughly 21 hours a month to maintain it. Having engineers and analysts act as data gatekeepers also slows down operations across the board: marketers have to wait for engineers to manually pull audience lists, which creates a gap between campaign idea and execution (and engineers are often pulled off their own projects to handle these requests).
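Those two averages compound quickly. Here is a back-of-the-envelope sketch, assuming each custom integration takes the full setup effort plus 21 hours of upkeep every month. That is a simplification, since real maintenance varies by tool. Under this assumption, the first year of a single integration costs 100 + 21 × 12 = 352 engineering hours.

```python
SETUP_HOURS = 100                  # average hours to connect one tool (figure from the text above)
MAINTENANCE_HOURS_PER_MONTH = 21   # average monthly upkeep per tool (figure from the text above)


def engineering_hours(tools: int, months: int) -> int:
    """Hours spent building and maintaining `tools` custom integrations over `months` months.

    Assumes every tool is set up once and maintained at the average rate every month.
    """
    return tools * (SETUP_HOURS + MAINTENANCE_HOURS_PER_MONTH * months)


print(engineering_hours(1, 12))   # 352
print(engineering_hours(10, 12))  # 3520
```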

Effective data governance makes sure every team has access to insights. A prime example of this is DigitalOcean. They used Twilio Engage to unify data and give their user acquisition team the ability to build highly personalized audiences in real time. Before, this process would take days: DigitalOcean’s analytics team had to pull data from their internal systems, manually build out audiences, and export them to marketing, and by that point those lists were already outdated. After making the switch, the user acquisition team was able to create audiences for campaigns 5x faster, and analysts no longer had to take on this manual work.

At Segment, we break down data governance into three overarching themes: Alignment, Validation, and Enforcement.

The first and foundational step in data governance is to align your entire organization around a standardized tracking plan. This should be tied to your overarching business goals, and the metrics you’ll be using to measure progress and performance. Tracking plans, in general, will outline:

What events to track

Where in your code base/app to track them

Why you’re tracking them (e.g., what business goal is this tied to?)
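A tracking plan can live in a spreadsheet. Keeping it as structured data, though, lets you check it automatically. The sketch below models the three elements listed above (what, where, why) plus the expected properties. It flags entries that would make the plan ambiguous: duplicate names, or events with no stated business goal or location. The `PlannedEvent` class, the example entries, and the checks are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PlannedEvent:
    name: str                        # what to track
    location: str                    # where in the code base/app it fires
    goal: str                        # why: the business goal or metric it supports
    properties: Tuple[str, ...] = ()


TRACKING_PLAN = [
    PlannedEvent("Signed Up", "web: signup form submit", "Grow activation rate", ("plan",)),
    PlannedEvent("Order Completed", "server: checkout service", "Track revenue", ("order_id", "revenue")),
]


def plan_problems(plan: List[PlannedEvent]) -> List[str]:
    """Flag entries that would make the plan ambiguous or hard to act on."""
    problems, seen = [], set()
    for entry in plan:
        if entry.name in seen:
            problems.append(f"duplicate event name {entry.name!r}")
        seen.add(entry.name)
        if not entry.goal.strip():
            problems.append(f"{entry.name!r} has no business goal")
        if not entry.location.strip():
            problems.append(f"{entry.name!r} has no tracking location")
    return problems
```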

A good rule of thumb to follow when creating a standardized tracking plan.

Keeping data clean means incorporating regular quality assurance (QA) checks: validating new tracking events before they reach production, and flagging any event that doesn’t adhere to your company’s tracking plan.
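A pre-production QA check can be as simple as comparing the event names fired during a staging or test run against the tracking plan. The sketch below is one hypothetical policy. Unplanned events block the release, because they would pollute production data. Planned events that never fired are only reported, because they may point to missing instrumentation or simply to a path the test run didn’t cover. The function names and this policy are assumptions.

```python
from typing import Dict, Iterable, List, Set


def qa_report(planned: Set[str], observed: Iterable[str]) -> Dict[str, List[str]]:
    """Compare event names fired during a staging/test run against the tracking plan."""
    fired = set(observed)
    return {
        # Events the plan doesn't know about would pollute production data.
        "unplanned": sorted(fired - planned),
        # Planned events that never fired may point to missing or broken instrumentation.
        "not_fired": sorted(planned - fired),
    }


def release_ok(report: Dict[str, List[str]]) -> bool:
    """Gate a release on unplanned events only; missing ones are surfaced for follow-up."""
    return not report["unplanned"]


report = qa_report({"Signed Up", "Order Completed"}, ["Signed Up", "sign_up", "Signed Up"])
print(report)              # {'unplanned': ['sign_up'], 'not_fired': ['Order Completed']}
print(release_ok(report))  # False
```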

True to its name, enforcement is concerned with how you put data governance into practice. Many businesses establish a “data council” to help enforce these policies (for example, by approving which events can be added to, removed from, or updated in the tracking plan).

But just as important as having the right documentation to enforce data governance is having the right stakeholders to oversee its implementation. We’ve seen two models for this: the wrangler and the champions. In the wrangler model, a single directly responsible individual (DRI) enforces data governance; in the champions model, multiple stakeholders are chosen from different teams throughout the organization. Both approaches have their own set of pros and cons, which we discuss here.

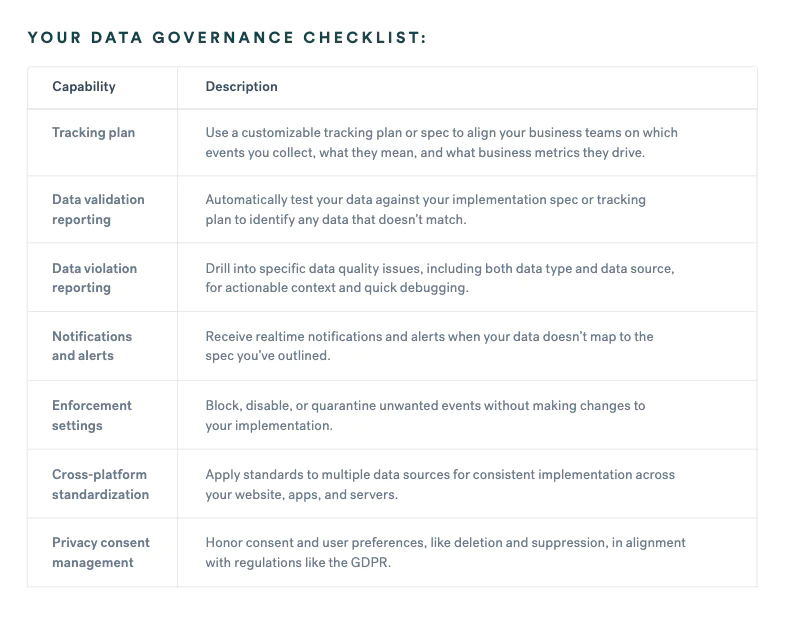

Here’s a quick checklist to highlight the main points of data governance.

Segment Protocols helps businesses protect data integrity at scale. With Protocols, businesses can align their teams around a single data dictionary and audit data in minutes with automatic data validation. Protocols flags data violations before they reach downstream tools and aggregates these issues to help you spot trends. With Transformations, you can change data as it flows through Segment’s CDP to make corrections or customizations for specific destinations.
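The two ideas in the paragraph above, aggregating violations and transforming data per destination, can be sketched in a few lines. This is a hypothetical illustration, not the Protocols or Transformations API. The `TRANSFORMS` rule format, the destination name `legacy_crm`, and the violation record shape are all assumptions. Counting (event, reason) pairs separates recurring problems from one-off noise. The transform returns a renamed copy for one destination and leaves the original event untouched for every other tool.

```python
from collections import Counter
from typing import Any, Dict, List, Tuple

# Hypothetical per-destination rules: rename events/properties for one tool only.
TRANSFORMS: Dict[str, Dict[str, Dict[str, str]]] = {
    "legacy_crm": {
        "events": {"Order Completed": "purchase"},
        "properties": {"revenue": "amount"},
    },
}


def top_violations(flagged: List[Dict[str, str]], n: int = 3) -> List[Tuple[Tuple[str, str], int]]:
    """Count (event, reason) pairs so recurring problems stand out from one-off noise."""
    return Counter((v["event"], v["reason"]) for v in flagged).most_common(n)


def transform_for(destination: str, event: Dict[str, Any]) -> Dict[str, Any]:
    """Return a per-destination copy of an event; other destinations keep the original."""
    rules = TRANSFORMS.get(destination)
    if rules is None:
        return event
    out = dict(event)
    out["event"] = rules["events"].get(event["event"], event["event"])
    out["properties"] = {
        rules["properties"].get(key, key): value
        for key, value in event.get("properties", {}).items()
    }
    return out
```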

Learn how Typeform used Protocols to enforce data governance and establish a single source of truth.

The key roles of data governance are to establish a formal policy and set of best practices around data collection, unification, storage, activation, and deletion. The goal of data governance is to protect data quality (at scale), achieve data democratization within an organization (to improve agility and allow teams to act autonomously on insights), and ensure compliance with data regulations and consumer privacy.

One example of data governance is standardizing naming conventions to ensure data events aren’t mislabeled or referred to in numerous different ways (e.g., signed_up vs. sign_up), which can skew reporting.

Data governance refers to the policies and standards a company creates around how its data is collected, stored, activated, and deleted. Data architecture is also concerned with how a business manages the data lifecycle, but gets more into the nitty-gritty of the technology, system requirements, and infrastructure needed to implement these plans and policies.