Navigating the Complexities of Data Lake Management

Explore the essentials of data lake management and learn about best practices, integration with Segment, and strategies for optimizing big data processing, storage, and security.

Data lakes store unprocessed data in its original format, whether it’s structured, unstructured, or semi-structured. That flexibility has made them increasingly important for businesses grappling with growing data volumes and varieties.

But flexibility alone isn’t enough. If a data lake isn’t properly managed, its promise of cost-effectiveness and advanced analytics turns into wasted potential.

Data lake management refers to the best practices and processes used to collect, store, and analyze the diverse datasets within a data lake.

The architecture of a data lake is often divided into four layers: storage, metadata, query, and compute. Data lake management is concerned with the efficiency of each layer and of the repository as a whole. Responsibilities include:

Creating and maintaining metadata logs

Partitioning data based on certain criteria for better query performance

Implementing access controls and other security measures

Creating guidelines for data retention and deletion

Instituting good data governance (e.g., running QA checks, identifying data stewards or key stakeholders)

Managing integrations with analytics and business intelligence tools

And much more!
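To make the partitioning item concrete, here is a minimal sketch, assuming a Hive-style layout (key=value directory names) partitioned by source and event date. The bucket path `s3://example-lake/events` and the `partition_path` helper are hypothetical; the right partition keys for your lake depend on how the data is actually queried.

```python
from datetime import datetime, timezone


def partition_path(base: str, source: str, event_time: datetime) -> str:
    """Build a Hive-style partition prefix (key=value directories) for one record."""
    # Convert to UTC so events from different time zones land in consistent partitions.
    # Note: a naive datetime is interpreted as local time by astimezone().
    ts = event_time.astimezone(timezone.utc)
    return (
        f"{base}/source={source}/"
        f"year={ts.year:04d}/month={ts.month:02d}/day={ts.day:02d}"
    )


# Usage: a web event from 7 March 2024 lands under .../day=07
print(partition_path("s3://example-lake/events", "web",
                     datetime(2024, 3, 7, 15, 30, tzinfo=timezone.utc)))
# s3://example-lake/events/source=web/year=2024/month=03/day=07
```

Putting the most frequently filtered columns (here, source and date) into the path is what lets query engines skip irrelevant data entirely.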

Managing a data lake isn’t without its challenges. Businesses often run into obstacles concerning query performance, ingesting different data types at scale, and preserving data quality. Let’s dive into these in more detail.

Data lakes are designed to be highly scalable, handling vast volumes of data. Their elastic, often cloud-based infrastructure allows companies to scale up and down as needed. And unlike traditional databases that require a strict schema, data lakes can hold many different types of data.

However, this scalability and flexibility can negatively impact performance if not handled carefully. For instance, not properly indexing and partitioning data in the lake can lead to slow query performance and delayed insights.
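As a rough illustration of why partitioning affects query speed, the sketch below shows partition pruning: given a date filter, only the partitions that can contain matching rows are selected, and the rest are skipped without being read. Query engines do this internally; the `prune_partitions` function and the example paths are hypothetical and assume a Hive-style year/month/day layout.

```python
from datetime import date


def prune_partitions(paths: list[str], start: date, end: date) -> list[str]:
    """Return only the partition paths whose date falls within [start, end]."""
    selected = []
    for path in paths:
        # Parse the key=value segments of a Hive-style path into a dict.
        keys = dict(seg.split("=", 1) for seg in path.split("/") if "=" in seg)
        day = date(int(keys["year"]), int(keys["month"]), int(keys["day"]))
        if start <= day <= end:
            selected.append(path)
    return selected


partitions = [
    "events/source=web/year=2024/month=02/day=28",
    "events/source=web/year=2024/month=03/day=01",
    "events/source=web/year=2024/month=03/day=02",
    "events/source=web/year=2024/month=03/day=03",
]
# Only the two partitions inside the filter need to be read.
print(prune_partitions(partitions, date(2024, 3, 1), date(2024, 3, 2)))
```

Without a partition layout like this, the same filter would force a scan of every file in the dataset.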

Poor metadata management can also make it difficult to understand the full context of data, leading to misinterpretation. And if unused or duplicate data keeps accumulating in the lake, storage costs mount, insights become muddled, and maintenance becomes more labor-intensive.
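One inexpensive way to find exact duplicates is to group files by a hash of their contents. This is a minimal sketch that assumes file contents are already loaded into a dictionary (a real job would stream objects from storage); it only catches byte-for-byte copies, not records that are logically duplicated with different formatting.

```python
import hashlib
from collections import defaultdict


def find_duplicates(files: dict[str, bytes]) -> list[list[str]]:
    """Group file names whose contents are byte-for-byte identical."""
    by_digest: dict[str, list[str]] = defaultdict(list)
    for name, content in files.items():
        # Identical contents always produce the same SHA-256 digest.
        by_digest[hashlib.sha256(content).hexdigest()].append(name)
    # Only digests shared by more than one file indicate duplicates.
    return [sorted(names) for names in by_digest.values() if len(names) > 1]


files = {
    "raw/2024-03-01/part-0.json": b'{"user_id": "u1"}\n',
    "raw/2024-03-01/part-0-copy.json": b'{"user_id": "u1"}\n',
    "raw/2024-03-02/part-0.json": b'{"user_id": "u2"}\n',
}
print(find_duplicates(files))
```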

Since data lakes can store raw, unprocessed data in any format, they often require complex data ingestion processes (e.g., ELT: extract, load, transform).

Organizations need to consider the bandwidth required to keep pace with this influx of data (e.g., which data should be processed in real time, and which in batches?).
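The real-time versus batch decision can be thought of as a routing rule applied at ingestion. The sketch below, built around a hypothetical `IngestRouter` class, forwards events of selected types immediately and buffers everything else into fixed-size batches. Which event types count as latency-sensitive, and the batch size, are assumptions you would set for your own pipeline.

```python
from dataclasses import dataclass, field


@dataclass
class IngestRouter:
    """Forward latency-sensitive events immediately; buffer the rest into batches."""
    realtime_types: set[str]
    batch_size: int
    realtime_out: list[dict] = field(default_factory=list, init=False)
    batches_out: list[list[dict]] = field(default_factory=list, init=False)
    _buffer: list[dict] = field(default_factory=list, init=False)

    def ingest(self, event: dict) -> None:
        if event.get("type") in self.realtime_types:
            # Real-time path: hand the event on right away.
            self.realtime_out.append(event)
        else:
            # Batch path: accumulate until the batch is full.
            self._buffer.append(event)
            if len(self._buffer) >= self.batch_size:
                self.flush()

    def flush(self) -> None:
        """Emit whatever is buffered as one batch (e.g., on a timer or at shutdown)."""
        if self._buffer:
            self.batches_out.append(self._buffer)
            self._buffer = []


router = IngestRouter(realtime_types={"checkout"}, batch_size=2)
for event in [{"type": "page"}, {"type": "checkout"}, {"type": "page"}, {"type": "page"}]:
    router.ingest(event)
router.flush()
print(router.realtime_out)  # the checkout event
print(router.batches_out)   # one full batch of two page events, then the remainder
```

In practice you would also flush on a timer so that a partially filled batch doesn’t wait indefinitely.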

On top of that, without the proper data quality controls in place, raw data can come with errors, inconsistencies, or missing values. Tracking data lineage can also be difficult with the schema-on-read approach typical of data lakes, where a schema is applied at the point of analysis rather than beforehand, at the point of ingestion, as in a schema-on-write approach.
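To illustrate schema-on-read, the sketch below keeps records as raw JSON lines and applies a schema only when the data is read. The `SCHEMA` dictionary and the `read_with_schema` function are hypothetical. Note that a malformed value (for example, a non-numeric `amount`) would only raise an error at read time, which is why quality problems can surface late with this approach.

```python
import json

# Hypothetical schema applied at read time: field name -> type to coerce into.
SCHEMA = {"user_id": str, "amount": float, "country": str}

# Raw records are stored exactly as they arrived; note the inconsistent shapes.
RAW_LINES = [
    '{"user_id": "u1", "amount": "19.99"}',
    '{"user_id": "u2", "amount": 5, "country": "DE", "extra": true}',
]


def read_with_schema(raw_lines: list[str], schema: dict[str, type]) -> list[dict]:
    """Apply a schema to raw JSON lines at query time (schema-on-read)."""
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for name, typ in schema.items():
            value = record.get(name)
            # Missing fields become None; present ones are coerced, which may
            # raise ValueError if the raw value cannot be converted.
            row[name] = typ(value) if value is not None else None
        rows.append(row)  # fields outside the schema (e.g. "extra") are ignored
    return rows


for row in read_with_schema(RAW_LINES, SCHEMA):
    print(row)
```

Because the raw lines are never rewritten, a different reader could apply a different schema to the same data.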

Organizations need to ensure that the data flowing into a lake is accurate and complete, even if transformations and analysis will be applied at a later date. Performing large-scale data validation at the time of ingestion can be a challenge for businesses, but it is essential.
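Here is a minimal sketch of validation at ingestion time, assuming a hypothetical set of required fields and types. Records that pass go into the lake, and records that fail are set aside in a quarantine list together with the reasons, so they can be inspected instead of silently polluting downstream analysis.

```python
# Hypothetical required fields and their expected Python types.
REQUIRED = {"event": str, "user_id": str, "timestamp": str}


def validate(record: dict) -> list[str]:
    """Return a list of problems with one record (empty if it is valid)."""
    errors = []
    for name, expected in REQUIRED.items():
        if record.get(name) is None:
            errors.append(f"missing {name}")
        elif not isinstance(record[name], expected):
            errors.append(f"{name} should be {expected.__name__}")
    return errors


def ingest(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split incoming records into accepted ones and quarantined ones with reasons."""
    accepted, quarantined = [], []
    for record in records:
        errors = validate(record)
        if errors:
            quarantined.append((record, errors))
        else:
            accepted.append(record)
    return accepted, quarantined


batch = [
    {"event": "Order Completed", "user_id": "u1", "timestamp": "2024-03-07T15:30:00Z"},
    {"event": "Order Completed", "user_id": 42},
]
accepted, quarantined = ingest(batch)
print(len(accepted), quarantined[0][1])
```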

We recommend automating parts of your data governance to validate data at scale, for example by automatically enforcing standardized naming conventions that are aligned with your company’s tracking plan.
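As a sketch of automated naming checks, the example below assumes a convention of Title Case "Object Action" event names (such as "Order Completed") and snake_case property keys, plus a small tracking plan listing the allowed events. The convention, the `TRACKING_PLAN` contents, and the `check_naming` helper are illustrative assumptions, not a specific product’s rules.

```python
import re

# Assumed conventions: Title Case "Object Action" event names, snake_case properties.
EVENT_NAME = re.compile(r"[A-Z][a-z0-9]*( [A-Z][a-z0-9]*)+")
PROPERTY_KEY = re.compile(r"[a-z][a-z0-9]*(_[a-z0-9]+)*")

# Hypothetical tracking plan: the event names the company has agreed to collect.
TRACKING_PLAN = {"Order Completed", "Product Viewed"}


def check_naming(event: dict, plan: set[str]) -> list[str]:
    """Return naming problems for one event (empty list means it conforms)."""
    problems = []
    name = event.get("event", "")
    if not EVENT_NAME.fullmatch(name):
        problems.append(f"event name {name!r} is not Title Case 'Object Action'")
    if name not in plan:
        problems.append(f"event {name!r} is not in the tracking plan")
    for key in event.get("properties", {}):
        if not PROPERTY_KEY.fullmatch(key):
            problems.append(f"property {key!r} is not snake_case")
    return problems


print(check_naming({"event": "Order Completed", "properties": {"order_id": "o1"}}, TRACKING_PLAN))
print(check_naming({"event": "order_completed", "properties": {"orderId": "o1"}}, TRACKING_PLAN))
```

Running a check like this on every incoming event, rather than auditing after the fact, is what lets validation keep up with data volume.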

Cybersecurity and regulatory compliance are significant challenges in data lake management. Attackers targeting a data lake might try to gain remote control of your software systems, or insert bad data into the lake (data poisoning) to degrade the performance of your AI models.

Regulations such as CCPA and GDPR also give users certain rights regarding their data. The GDPR, for example, allows users to request a copy of the personal data you’ve collected about them. To comply with their request, you need to locate all the files in your data lake that contain the user’s data.

When you’re collecting terabytes or petabytes of data, you need a data lake that enables you to perform these actions quickly. Otherwise, you’ll struggle to find the data and could even risk getting fined.
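One way to make access and deletion requests fast is to record, at write time, which files contain each user’s data, so that a request becomes a lookup rather than a scan of every file. This in-memory `UserFileIndex` class is a hypothetical sketch; a production index would need to be stored durably and kept in sync as files are compacted or deleted.

```python
from collections import defaultdict


class UserFileIndex:
    """Hypothetical index mapping each user ID to the lake files holding their data."""

    def __init__(self) -> None:
        self._files_by_user: defaultdict[str, set[str]] = defaultdict(set)

    def record_write(self, path: str, user_ids: list[str]) -> None:
        """Call once per file written during ingestion."""
        for user_id in user_ids:
            self._files_by_user[user_id].add(path)

    def files_for(self, user_id: str) -> list[str]:
        """Files to read (access request) or rewrite (deletion request) for a user."""
        # A dictionary lookup instead of scanning every file in the lake.
        return sorted(self._files_by_user.get(user_id, set()))

    def forget(self, user_id: str) -> None:
        """Drop the user's entry once their data has been deleted from those files."""
        self._files_by_user.pop(user_id, None)


index = UserFileIndex()
index.record_write("events/day=01/part-0.json", ["u1", "u2"])
index.record_write("events/day=02/part-0.json", ["u1"])
print(index.files_for("u1"))
```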

Keep these best practices in mind to ensure access to reliable data, remain compliant with data privacy laws, and keep your data lake secure.

Data governance encompasses policies and practices that control how a business uses data across its lifecycle. Before you get started with data lake implementation, you need to formalize data governance to protect data quality and minimize security or regulatory risks.

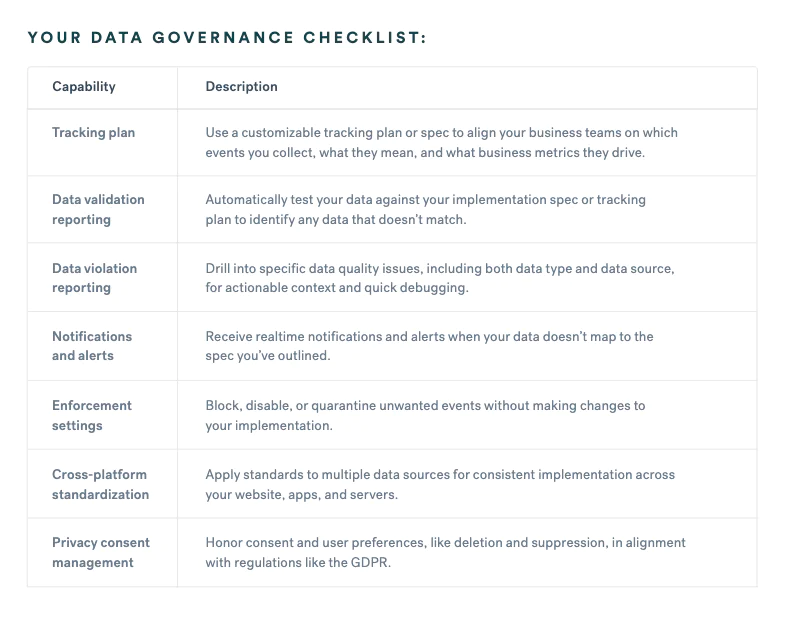

Use Segment’s data governance checklist to keep track of key steps as you move through the process.

You can deploy your data lake in the cloud or on-premises. Cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud let you get started with the setup process quickly, without large upfront investments in hardware and maintenance. However, as your data needs increase, so will your costs.

On-premises deployment is harder to scale because you’re responsible for purchasing additional hardware. Still, organizations in some industries (such as healthcare and financial services) gravitate toward an on-premises (or hybrid) data lake architecture so they can have greater control over security and costs.

A data catalog is an inventory of your data that makes your data lake searchable. With a catalog, users can easily find the data they need for decision-making, predictive and real-time analytics, and other use cases. And IT teams will have fewer requests to deal with.

Cataloging your data is also important for regulatory compliance because you’ll have clarity over what kind of personal data is in your data lake.

Some data catalogs also automate parts of data discovery. For example, when you look up a data asset, the catalog may suggest related assets that might interest you.
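To show the idea behind catalog search and related-asset suggestions, here is a minimal sketch in which each asset carries a set of tags, search matches names or tags, and relatedness is scored with Jaccard similarity (shared tags divided by all distinct tags of the two assets). The asset names and tags are hypothetical, and real catalogs may rely on other signals such as lineage or usage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    tags: frozenset[str]  # assumed to be lowercase


def search(catalog: list[Asset], term: str) -> list[str]:
    """Find assets whose name contains the term or whose tags include it."""
    term = term.lower()
    return [a.name for a in catalog if term in a.name.lower() or term in a.tags]


def related(catalog: list[Asset], name: str, limit: int = 3) -> list[str]:
    """Suggest other assets ranked by Jaccard similarity of their tag sets."""
    target = next(a for a in catalog if a.name == name)
    scored = []
    for other in catalog:
        if other.name == name:
            continue
        union = target.tags | other.tags
        score = len(target.tags & other.tags) / len(union) if union else 0.0
        if score > 0:
            scored.append((score, other.name))
    # Highest similarity first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [asset_name for _, asset_name in scored[:limit]]


catalog = [
    Asset("orders", frozenset({"sales", "customer", "pii"})),
    Asset("customers", frozenset({"customer", "pii", "crm"})),
    Asset("web_events", frozenset({"web", "customer"})),
    Asset("inventory", frozenset({"supply"})),
]
print(search(catalog, "PII"))  # assets tagged as containing personal data
print(related(catalog, "orders"))
```

A tag such as "pii" also supports the compliance use case: searching for it lists every asset known to hold personal data.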

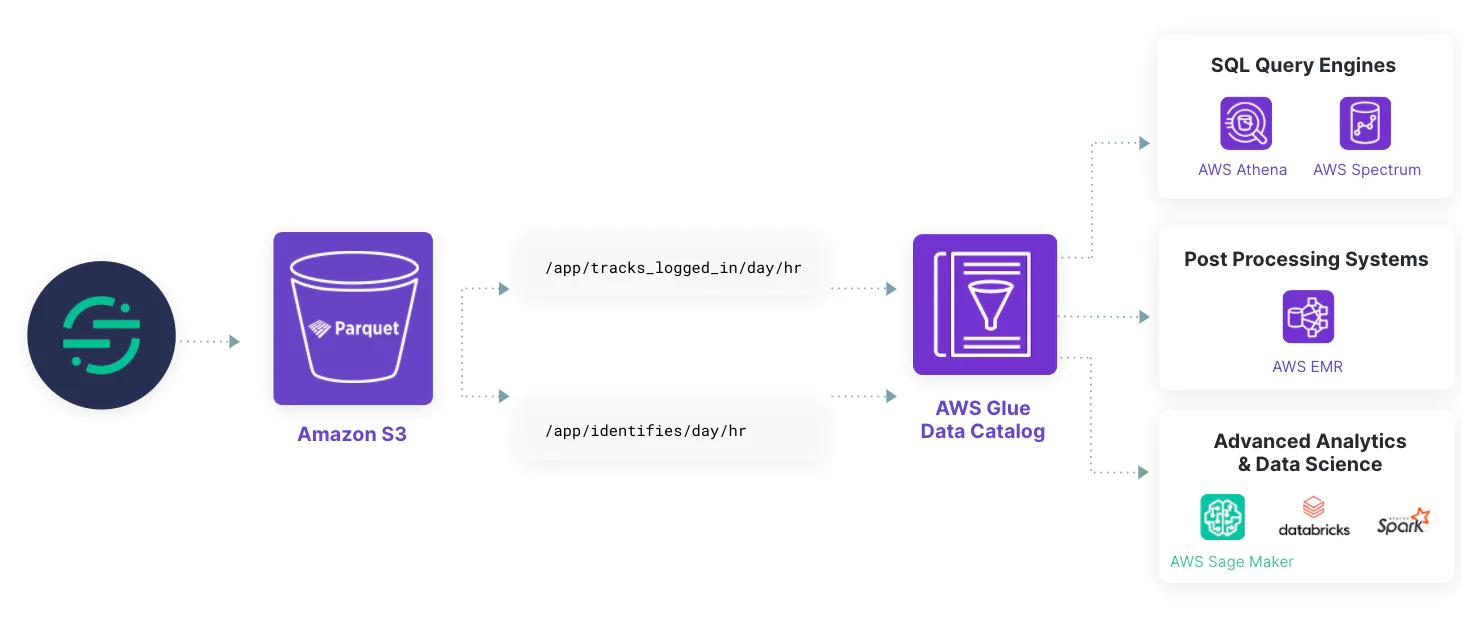

With Twilio Segment Data Lakes, you can send clean and compliant data from Segment’s customer data platform (CDP) to a cloud data store: Azure Data Lake Storage Gen2 (ADLS) or Amazon Simple Storage Service (S3). The data is forwarded in a format that supports lightweight processing for data science and data analytics.

Content monetization platform Rokfin implemented Twilio Segment Data Lakes to create a single source of truth for their customer data (in Amazon S3) and decrease data storage costs by 60%. As their lead infrastructure engineer said:

“Twilio Segment Data Lakes saves us time by cutting a step out of moving data to a warehouse. We can now query directly from Amazon S3. Twilio Segment also saves us money because we can now query what we want when we want without worrying about costs. In fact, we no longer need a data warehouse.”

Data lakes store raw data from multiple sources in its native format. They can store virtually unlimited volumes of data, which makes them well suited to organizations that deal with big data. Data lakes also store diverse types of data, from sensor logs to images to transactional data.

Twilio Segment Data Lakes integrates with Amazon S3 and ADLS. It stores the data in a read-optimized encoding format, which simplifies data management and makes the data easy to access.

Twilio Segment encrypts data in transit and at rest, and conducts regular penetration testing to identify potential vulnerabilities. The platform also automates compliance with data privacy regulations through real-time personal data detection and masking, handling of user deletion or suppression requests at scale, and more.