Introducing Twilio Segment Data Lakes

Today we’re excited to announce the launch of Segment Data Lakes, a new turnkey customer data lake that provides the data engineering foundation needed to power data science and advanced analytics use cases.


There’s a competitive advantage within reach for companies who have the foundation in place to develop rich customer insights and unlock the next level of personalized customer experiences.

Last year we shared a common technique for architecting this foundation: cultivating your data lake. Since then, we’ve spoken to many Data Engineers and Architects who began designing and building a data lake themselves. While companies like AWS, Azure, and Snowflake provide powerful components of production-ready data lakes, it can take anywhere from 3 months to 1 year to build one in-house.

In a world where customers expect relevant, personalized experiences and aren’t willing to wait for them, companies face tough trade-offs between quality and speed when building a production-ready customer data lake and delivering it to the business immediately.


With Segment Data Lakes, companies can reduce the cost and time it takes to unlock scaled analytics, machine learning, and AI insights with a well-architected data lake. Segment Data Lakes individually optimizes each layer of a high-performing, scalable customer data lake, giving you a powerful data architecture that can be deployed within minutes with little effort.

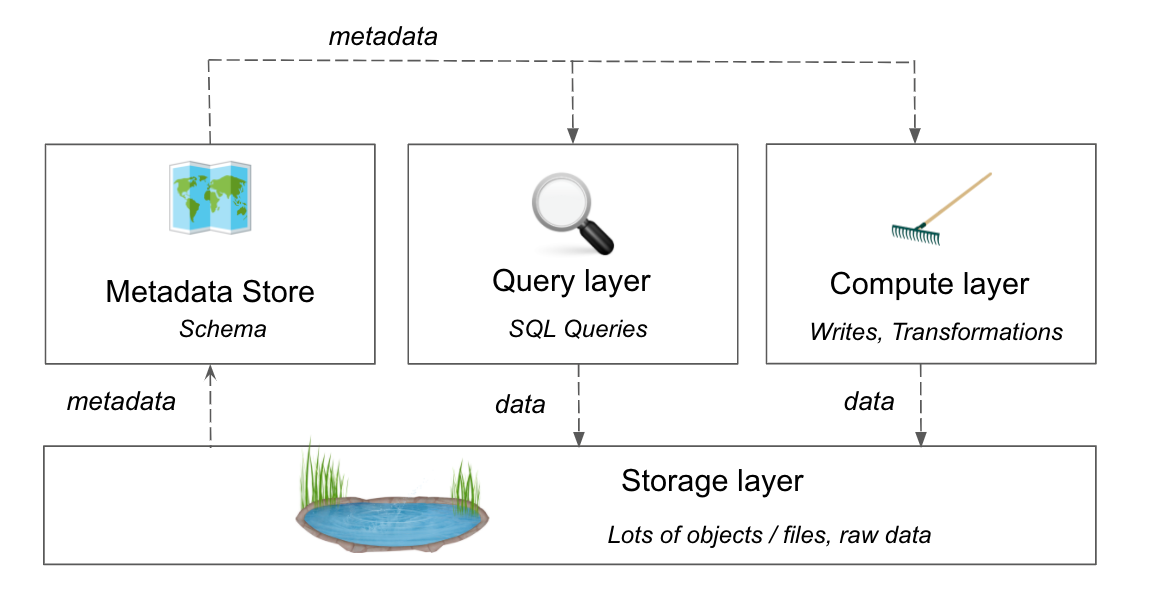

Segment Data Lakes builds a storage layer to hold optimized and schematized customer data in a scalable object store. This layer is joined with a metadata store to provide easy data discoverability and integration into a decoupled compute and query platform.

Support for Amazon Web Services (AWS) is available today, with support for Microsoft Azure and Google Cloud to follow.

Segment Data Lakes launches today on top of powerful AWS services that increase the performance and utility of your data architecture.

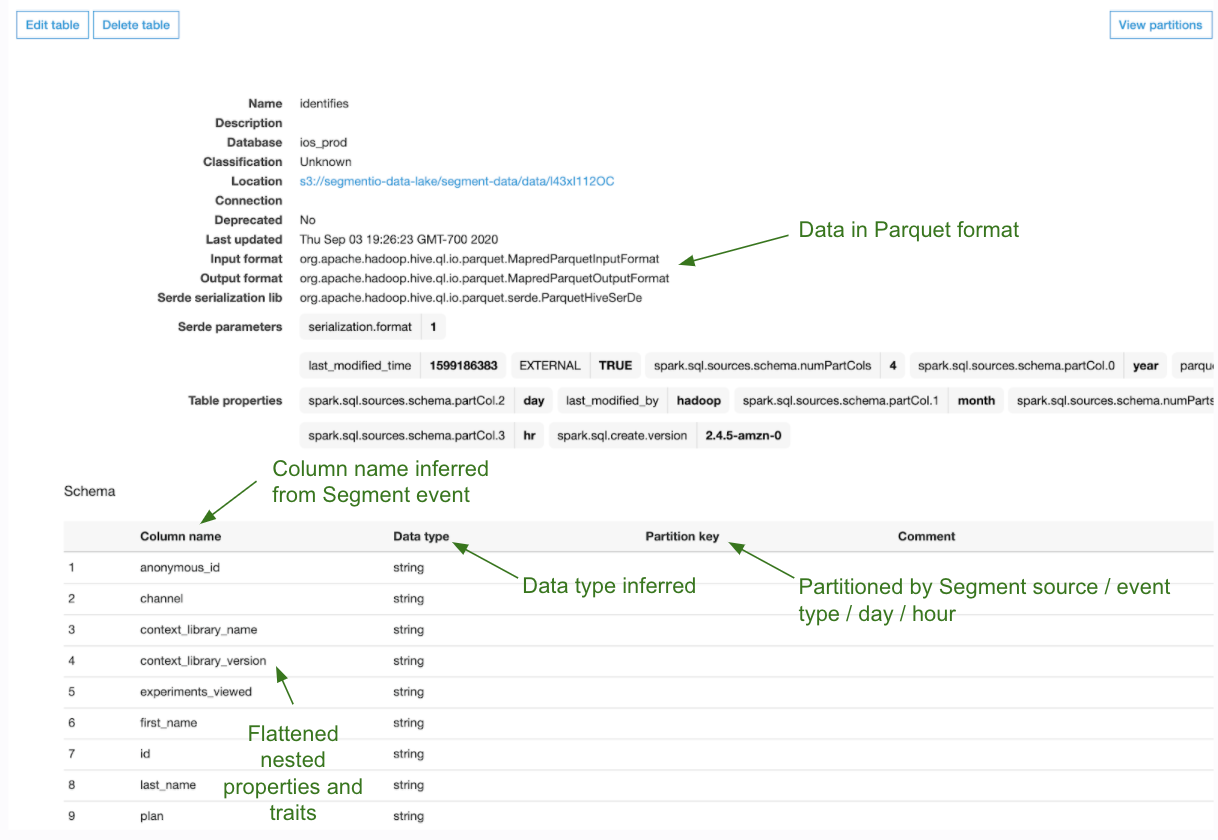

Segment data is stored in Amazon S3’s object store, which provides cheap storage for massive volumes of data. To make data storage not only economical but also performant to query, raw data is converted from JSON into compressed Apache Parquet. As a result, users can take advantage of a columnar data format optimized for faster and cheaper queries.
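To make the row-versus-column point concrete, here is a minimal, standard-library-only sketch of what a columnar layout buys a query. It is not Parquet (a real pipeline would write Parquet files with a dedicated library), and the event fields (`event`, `userId`, `timestamp`, `properties`) are assumptions chosen for illustration. It pivots newline-delimited JSON events into per-column lists, then answers a question by reading a single column.

```python
import json
from collections import Counter

# Hypothetical newline-delimited JSON events; field names are illustrative only.
RAW_EVENTS = """\
{"event": "Order Completed", "userId": "u1", "timestamp": "2020-05-12T09:15:00Z", "properties": {"total": 42.5}}
{"event": "Page Viewed", "userId": "u2", "timestamp": "2020-05-12T09:20:00Z", "properties": {"path": "/pricing"}}
{"event": "Order Completed", "userId": "u2", "timestamp": "2020-05-12T10:05:00Z", "properties": {"total": 19.0}}
"""


def rows_to_columns(ndjson_text):
    """Pivot row-oriented JSON records into a dict of column name -> list of values.

    Keys missing from a record become None, so every column has one entry per row.
    """
    records = [json.loads(line) for line in ndjson_text.splitlines() if line.strip()]
    names = []
    for record in records:
        for name in record:
            if name not in names:
                names.append(name)
    return {name: [record.get(name) for record in records] for name in names}


def count_events(columns):
    """Count events per name by reading only the "event" column, never the others."""
    return Counter(columns["event"])


columns = rows_to_columns(RAW_EVENTS)
print(count_events(columns))  # Counter({'Order Completed': 2, 'Page Viewed': 1})
```

Parquet applies the same idea on disk, storing each column's values together with per-column encoding and compression, which is why queries that touch only a few columns scan far less data.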

To eliminate the manual schema discovery and management work Engineers do today, Segment Data Lakes mimics one of the key benefits of a data warehouse: it breaks down what is typically unstructured data and creates a schema that is accessible through a metadata store, in this case the AWS Glue Data Catalog.
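Creating a schema from event data can be sketched roughly as follows. This is a minimal illustration, not Segment's implementation: it flattens nested JSON properties into underscore-joined, lowercased column names, maps Python value types to Hive/Glue-style type names (`boolean`, `bigint`, `double`, `string`), and records any new columns in a plain dict that stands in for the Glue Data Catalog. The naming convention, the type mapping, and the one-table-per-event-name layout are all assumptions.

```python
# Assumed mapping from JSON value types to Hive/Glue-style column types.
TYPE_NAMES = {bool: "boolean", int: "bigint", float: "double", str: "string"}


def flatten(record, prefix=""):
    """Flatten nested objects: {"properties": {"total": 1}} -> {"properties_total": 1}."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}".lower()
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}_"))
        else:
            flat[name] = value
    return flat


def infer_columns(record):
    """Return {column_name: type_name} for one event; unmapped types fall back to string."""
    return {
        name: TYPE_NAMES.get(type(value), "string")  # exact type, so bool never maps to bigint
        for name, value in flatten(record).items()
        if value is not None  # a null says nothing about the column's type
    }


def register_event(catalog, record):
    """Add any columns this event introduces to its table in the stand-in catalog.

    Existing columns are left untouched, so a table's schema only grows
    as new properties appear. Returns (table_name, newly_added_columns).
    """
    table_name = record["event"].lower().replace(" ", "_")
    table = catalog.setdefault(table_name, {})
    added = []
    for name, type_name in infer_columns(record).items():
        if name not in table:
            table[name] = type_name
            added.append(name)
    return table_name, added


catalog = {}  # hypothetical stand-in for the Glue Data Catalog
register_event(catalog, {"event": "Order Completed", "userId": "u1", "properties": {"total": 42.5}})
table, added = register_event(
    catalog, {"event": "Order Completed", "userId": "u2", "properties": {"total": 19.0, "coupon": "SPRING"}}
)
print(table, added)  # order_completed ['properties_coupon']
```

Only additive changes are handled here; a production system also has to decide what to do when a property's type changes between events.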

Every event is inspected to infer its schema, and the results are created as new tables and columns in the Glue Data Catalog. The data is then partitioned by day and hour, which significantly reduces the amount of data that needs to be scanned and returns query results even faster.
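Day-and-hour partitioning can be sketched as Hive-style `key=value` prefixes derived from each event's timestamp. A query engine that knows the partition columns can then skip every prefix outside the requested time range. The `day=`/`hr=` names and the `table/day=.../hr=.../` layout below are hypothetical, chosen only to illustrate the idea; the actual storage layout may differ.

```python
from datetime import datetime, timedelta, timezone


def partition_prefix(table, timestamp):
    """Map an ISO-8601 UTC timestamp to a hypothetical day/hour partition prefix."""
    ts = datetime.fromisoformat(timestamp.replace("Z", "+00:00")).astimezone(timezone.utc)
    return f"{table}/day={ts:%Y-%m-%d}/hr={ts:%H}/"


def prefixes_to_scan(table, start, end):
    """List the hourly partitions covering [start, end); all other partitions are pruned."""
    hour = start.replace(minute=0, second=0, microsecond=0)
    prefixes = []
    while hour < end:
        prefixes.append(f"{table}/day={hour:%Y-%m-%d}/hr={hour:%H}/")
        hour += timedelta(hours=1)
    return prefixes


print(partition_prefix("order_completed", "2020-05-12T09:15:00Z"))
# order_completed/day=2020-05-12/hr=09/

start = datetime(2020, 5, 12, 9, tzinfo=timezone.utc)
end = datetime(2020, 5, 12, 11, tzinfo=timezone.utc)
print(prefixes_to_scan("order_completed", start, end))
# ['order_completed/day=2020-05-12/hr=09/', 'order_completed/day=2020-05-12/hr=10/']
```

In this example a two-hour question reads 2 of the day's 24 hourly partitions, which is where the reduction in scanned data comes from.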

The metadata store makes the data easy to discover, so different tools can target subsets of your customer data. From there, it’s easy to plug in all the standard tools in the Data Science and Analytics toolkit.

For the first time, Data Scientists and Analysts can easily query data using engines like Amazon Athena, or load it directly into their Jupyter notebooks with no additional setup. Distributed frameworks like Apache Spark and Hadoop can be used to transform and model the data at scale. Finally, vendors like Databricks integrate with the AWS Glue Data Catalog, so it’s even easier to build on top of your customer data in S3.
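As a hedged illustration of the Athena path, the sketch below builds a SQL query that filters on partition columns and, optionally, submits it with boto3's Athena client (`start_query_execution`). The database name, table name, the `day`/`hr` partition column names, and the S3 results location are hypothetical placeholders; the tables and partitions in your catalog may be named differently, and the submission step requires boto3 and AWS credentials.

```python
from datetime import date


def build_hourly_count_query(database, table, day, hours):
    """Build an Athena (Presto/Trino SQL) query that counts events per hour.

    Filtering on the assumed "day" and "hr" partition columns lets Athena skip
    partitions outside the requested range instead of scanning the whole table.
    The day is validated as YYYY-MM-DD and hours are coerced to zero-padded ints,
    so only well-formed values reach the SQL text.
    """
    day = date.fromisoformat(day).isoformat()  # raises ValueError on malformed input
    hour_list = ", ".join(f"'{int(h):02d}'" for h in hours)
    return (
        f'SELECT "hr", COUNT(*) AS events FROM "{database}"."{table}" '
        f"WHERE \"day\" = '{day}' AND \"hr\" IN ({hour_list}) "
        'GROUP BY "hr" ORDER BY "hr"'
    )


def submit_query(sql, database, output_location):
    """Submit the query to Athena and return its execution ID (needs AWS credentials)."""
    import boto3  # imported lazily so the query builder works without boto3 installed

    client = boto3.client("athena")
    response = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    return response["QueryExecutionId"]


# Hypothetical names; replace with your own catalog database, table, and results bucket.
sql = build_hourly_count_query("segment_data_lake", "order_completed", "2020-05-12", [9, 10])
print(sql)
# submit_query(sql, "segment_data_lake", "s3://example-athena-results/")
```

Keeping query construction separate from submission makes the SQL easy to inspect or reuse in a notebook before any AWS call is made.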

As a result, you have a powerful, out-of-the-box customer data lake that serves as the data foundation for even the most advanced use cases on top of customer data.

For those seeking to take deeper advantage of the customer data they have, Segment Data Lakes provides the foundational architecture to deeply understand and predict customer behaviors and unlock the next level of personalization. As a result:

- Data teams can unlock richer customer insights with less effort
- Data Engineers can reduce time building and maintaining their data lake
- Companies can optimize data storage and compute costs
- Data Engineers can future-proof their architecture to build the fundamentals for machine learning investments

Data Analytics and Data Science teams can get more value out of customer data by using the complete dataset to derive richer customer insights than were previously possible, without extra Engineering effort.

For example, Rokfin’s Infrastructure Engineering team previously split storage of all customer data between a data warehouse and Amazon S3. Innovation began slowing down due to the SQL-only limitation when querying warehouse data. As a result, Rokfin began replicating warehouse data into S3 to combine both datasets and use a wider tool stack for querying the data.

However, this added a redundant step for the Engineering team and an extra dependency for downstream teams relying on this dataset.

Segment Data Lakes eliminated the work required to bridge the gap between these datasets and created an easy way to store all of Rokfin’s customer data, from behavioral interactions to payment data, in S3. Rokfin was finally able to combine these datasets into a much richer dataset than was possible before.

“Segment gave us access to more and better data in our data lake which opens up the ability to pull different types of customer insights we couldn’t previously access.” (Casey Kent, Lead Infrastructure Engineer)

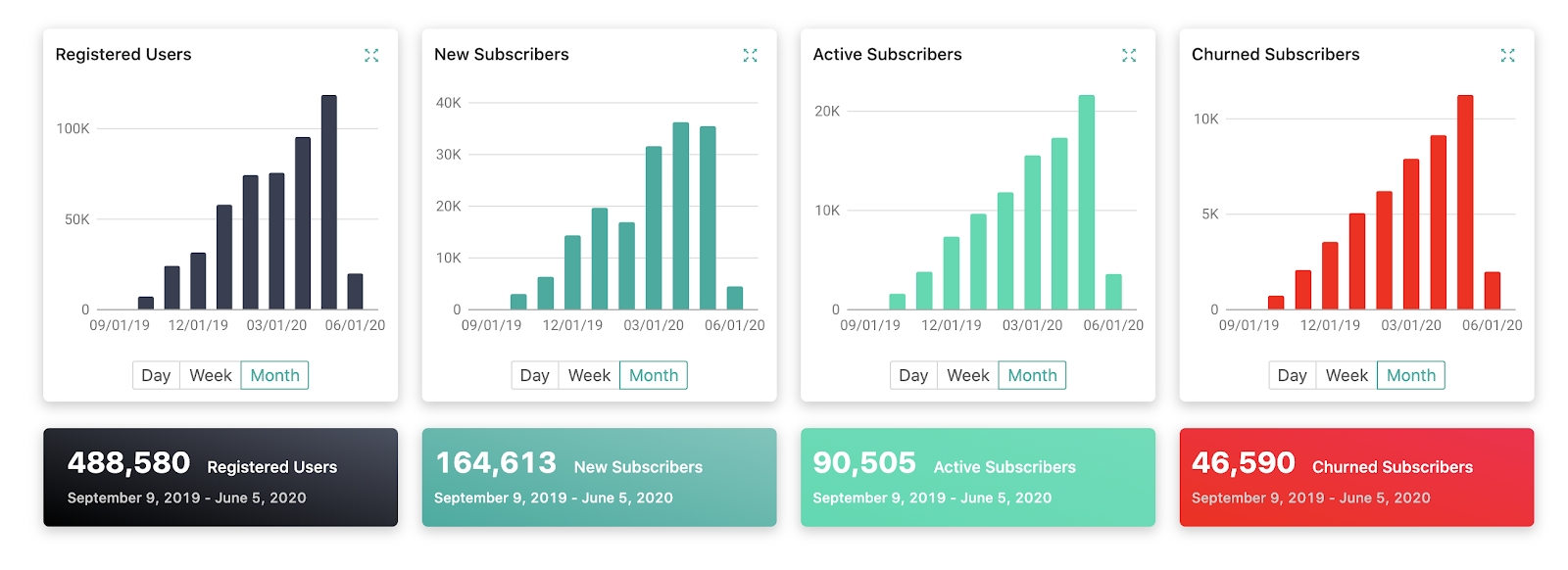

Internally, Rokfin built better product-level and key company dashboards. Externally, Rokfin productized the data into improved, powerful customer dashboards to arm content creators with deeper insights generated from a higher-depth data set to optimize their own publications and acquire more subscribers.

The richer insights increased dashboard engagement by 20% because they gave content creators more valuable information about what drives new subscriptions and retains subscribers, ultimately helping put more dollars into creators’ pockets for the content they produce.

Data Engineers can use Segment Data Lakes to provide an out-of-the-box foundation for their downstream consumers in Analytics and Data Science. At a time when many businesses are forced to make large investments of time and resources to design, build, and maintain a custom data lake internally, Segment Data Lakes takes this work away from Engineering teams.

This can significantly cut down on time spent on data ingestion, optimizing data warehouse configurations and queries, managing schema inference and evolution, or connecting to a data catalog so that the data is discoverable by downstream tools.

As a result, many Data Engineering teams are turning to Segment to help provide a baseline data lake architecture rather than needing to design, build and maintain it themselves.

“From a Data Engineering perspective, Segment Data Lakes enabled us to move a roadmap item forward by a full quarter.” (Anders Cassidy, Director of Data Engineering)

By relying on a cheap data store such as Amazon S3 instead of a data warehouse to store all customer data, companies can significantly reduce their data storage cost. By migrating from a data warehouse to Segment Data Lakes, Rokfin reduced its data storage cost by 60%.

These cost savings are opening doors for companies: they can use the storage cost reduction to cut spend across the business, or reinvest it in compute resources to run more EMR jobs and downstream queries and get more value from their data.

Data Engineering teams can now be confident that their data architecture is future-proofed, meeting not only today’s business needs but also increasingly complex future changes.

The fundamental prerequisite for machine learning, segmentation, and analysis is complete, accurate, and accessible data. Historically, data architecture constraints forced companies to keep only subsets of their data to reduce storage costs or optimize compute performance.

With Segment Data Lakes, all historical, current and future customer behavioral data can be easily stored without consequence to cost or resource contention. Additionally, Data teams who have already unloaded historical Segment data can re-build a complete, high-depth behavioral dataset in S3 using Segment replay.

With the foundational data set in place, data consumers now have the flexibility to plug in just about any compute layer they need, from Databricks, to Athena and EMR, to even a warehouse with external tables.

Segment Data Lakes provides the fundamentals for innovating businesses, regardless of where they are on the machine learning maturity journey. Whether a business is focused on advanced segmentation today or machine learning tomorrow, both require the same building blocks.

Segment Data Lakes is available to all Segment Business tier customers as part of the current plan. Get started today by checking out our technical documentation and set up guide.

If you’re not a Segment customer or not on the Business plan, contact us to learn more or sign up for our upcoming webinar.
