Hosting Fleet on AWS EKS

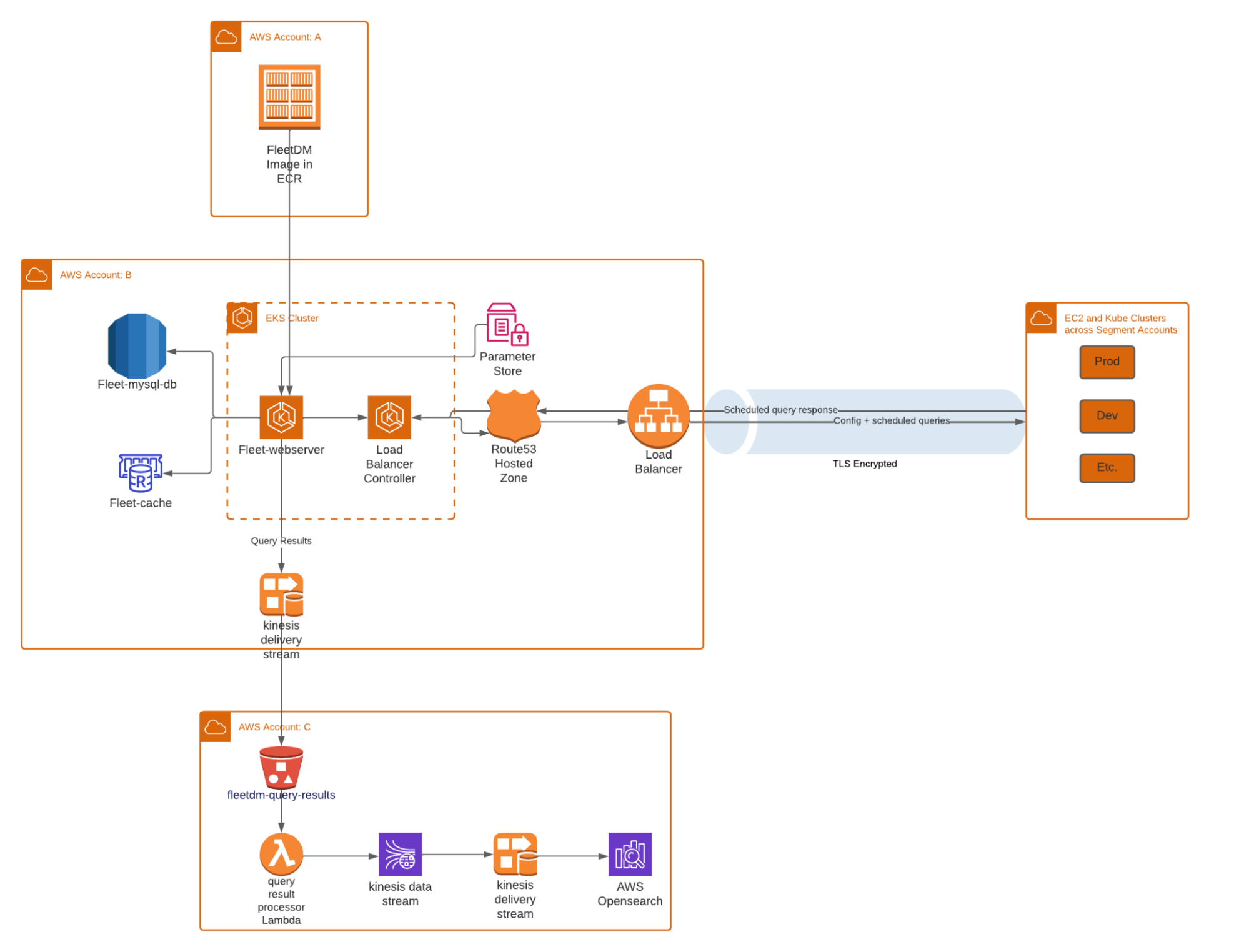

We detail how to host Fleet on an EKS cluster and send scheduled query logs to an AWS Opensource destination entirely created and managed as code.

Endpoint monitoring and visibility are essential building blocks for the success of any Detection & Response team. At Segment, our tools of choice for endpoint monitoring are Osquery paired with Fleet for orchestration.

Osquery exposes an operating system as a high-performance relational database that you can explore with SQL-based queries. It runs as a simple agent and supports macOS, Windows, and Linux. This is very powerful during a security investigation, when you need to quickly get data about a host's activity. It also lets security teams proactively run queries at a regular interval to monitor a host for malicious activity.

Fleet is the most commonly used open-source Osquery manager across Security and Compliance teams in the world. Once devices running Osquery are enrolled, Fleet lets us run queries through the Osquery agent across 100,000+ servers, containers, and laptops.

There are many ways of hosting Fleet in your environment. At Segment, we decided to host it entirely as code on an EKS cluster. Amazon Elastic Kubernetes Service (EKS) is a managed Amazon Web Services offering that makes it easy to run Kubernetes at scale. This post will show you how to host Fleet on an EKS cluster and send scheduled query logs to an AWS Opensource destination entirely created and managed as code.

Fleet has two major infrastructure dependencies: a MySQL database and a Redis cache. We set up both of these using Terraform. In this post I will be able to share some of those configurations, but not all. That's because at Segment our wonderful Tooling team has abstracted away a lot of commonly used infrastructure modules, so many standard defaults, such as the relevant Security Groups, are applied to our infrastructure automatically.

Note that the configurations below aren't copy-pastable. You will be able to reuse some components and settings as-is, but not all of them. For the purpose of this guide we are going to show a bare-bones configuration for each piece of infrastructure, which you can elaborate on with additional Terraform modules as you see fit for your environment.

Amazon Virtual Private Cloud (VPC) lets us build a virtual network in the AWS cloud. We can define our own network space and control how our network and the resources inside it interact with each other. Creating a VPC is an essential first step because every object we create in the subsequent steps will be a part of this VPC. Ideally we'd want to create a VPC with a public and a private subnet.
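As a rough illustration of that layout, here is a minimal Terraform sketch of a VPC with one public and one private subnet. The resource names, CIDR ranges, and availability zones are hypothetical placeholders, not values from Segment's setup.

```hcl
# Sketch only: names, CIDR blocks, and zones are assumptions.
resource "aws_vpc" "fleet" {
  cidr_block           = "10.0.0.0/16"
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Name = "fleet-vpc"
  }
}

# Public subnet: hosts internet-facing pieces such as the load balancer.
resource "aws_subnet" "public" {
  vpc_id                  = aws_vpc.fleet.id
  cidr_block              = "10.0.1.0/24"
  availability_zone       = "us-west-2a"
  map_public_ip_on_launch = true
}

# Private subnet: hosts the database, cache, and worker nodes.
resource "aws_subnet" "private" {
  vpc_id            = aws_vpc.fleet.id
  cidr_block        = "10.0.2.0/24"
  availability_zone = "us-west-2b"
}

# The internet gateway plus a default route is what makes the public subnet public.
resource "aws_internet_gateway" "fleet" {
  vpc_id = aws_vpc.fleet.id
}

resource "aws_route_table" "public" {
  vpc_id = aws_vpc.fleet.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.fleet.id
  }
}

resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}
```

In practice you would likely want at least two subnets of each kind in different availability zones, since RDS subnet groups, EKS, and Application Load Balancers all expect subnets in more than one zone.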

The standard approach to setting up these dependencies in the AWS ecosystem is to run the MySQL database in RDS and the Redis cluster in Amazon ElastiCache. Here is how you can create a basic MySQL DB with a few lines of code using Terraform:
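A minimal sketch of such a database, assuming AWS provider v4 or later. The identifiers, instance size, engine version, and input variables are all hypothetical and should be adapted to your environment.

```hcl
# Sketch only: every name and size below is an assumption.
variable "db_password" {
  type      = string
  sensitive = true # keeps the value out of plan output
}

variable "db_subnet_ids" {
  type = list(string) # private subnets in at least two availability zones
}

variable "db_security_group_ids" {
  type = list(string)
}

resource "aws_db_subnet_group" "fleet" {
  name       = "fleet-db"
  subnet_ids = var.db_subnet_ids
}

resource "aws_db_instance" "fleet" {
  identifier             = "fleet-mysql"
  engine                 = "mysql"
  engine_version         = "8.0"
  instance_class         = "db.t3.medium"
  allocated_storage      = 20
  db_name                = "fleet"
  username               = "fleet"
  password               = var.db_password
  db_subnet_group_name   = aws_db_subnet_group.fleet.name
  vpc_security_group_ids = var.db_security_group_ids
  storage_encrypted      = true

  # Acceptable for a throwaway sketch; keep final snapshots in production.
  skip_final_snapshot = true
}
```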

It's best practice to store the password in Parameter Store, KMS, or an equivalent secure credential storage mechanism rather than in the configuration itself.

Here is how you can set up a basic Redis cluster:
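A minimal single-node sketch, with hypothetical names, node size, and input variables. It deliberately configures no auth token.

```hcl
# Sketch only: names and node size are assumptions.
variable "redis_subnet_ids" {
  type = list(string)
}

variable "redis_security_group_ids" {
  type = list(string)
}

resource "aws_elasticache_subnet_group" "fleet" {
  name       = "fleet-redis"
  subnet_ids = var.redis_subnet_ids
}

resource "aws_elasticache_cluster" "fleet" {
  cluster_id         = "fleet-redis"
  engine             = "redis"
  node_type          = "cache.t3.micro"
  num_cache_nodes    = 1
  port               = 6379
  subnet_group_name  = aws_elasticache_subnet_group.fleet.name
  security_group_ids = var.redis_security_group_ids
}
```

Because no auth token is set here, access control rests on the security groups, so they should allow port 6379 only from the nodes that run Fleet.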

Please DO NOT set an authentication token when setting up your Redis cluster in AWS, or your app will not come up at all. At the time of writing, Fleet does not yet support Redis connections over TLS, while AWS supports an auth token setup only over TLS. So if you set up the auth token, the Fleet web app will be unable to connect to Redis when you later try to deploy it, and your app will never be up and running.

This is a lesson we learned the hard way: our web app would deploy and then go into a crash loop, with no logs or any other indication of why it was constantly crashing. It took us a long time to debug this error, and ultimately we found the root cause to be the Redis connection failure.

A large portion of the EKS Platform setup has been standardized and abstracted away by the Tooling team at Segment such that any engineer can set up an EKS cluster with just a few input variables. This setup involves creation of an EKS cluster, a Kube drainer, a few namespaces as well as the CI/CD integration setup. The entire setup would be out of scope for the purpose of this post but I can show you roughly what the configuration for the Cluster looks like:
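Segment's internal module is not reproduced here. As a rough stand-in, a bare cluster and node group in plain Terraform might look like the sketch below. The names, instance type, node counts, and input variables (including the pre-created IAM role ARNs) are hypothetical.

```hcl
# Sketch only: not Segment's module; all names and sizes are assumptions.
variable "cluster_role_arn" {
  type = string # IAM role assumed by the EKS control plane
}

variable "node_role_arn" {
  type = string # IAM role assumed by the worker nodes
}

variable "cluster_subnet_ids" {
  type = list(string) # subnets in at least two availability zones
}

resource "aws_eks_cluster" "fleet" {
  name     = "fleet"
  role_arn = var.cluster_role_arn

  vpc_config {
    subnet_ids = var.cluster_subnet_ids
  }
}

resource "aws_eks_node_group" "fleet" {
  cluster_name    = aws_eks_cluster.fleet.name
  node_group_name = "fleet-workers"
  node_role_arn   = var.node_role_arn
  subnet_ids      = var.cluster_subnet_ids
  instance_types  = ["t3.medium"]

  scaling_config {
    desired_size = 2
    min_size     = 1
    max_size     = 3
  }
}
```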

You can set up a simple cluster with eksctl using the following command and flags:

The Application Load Balancer (ALB) is how various endpoints connect to the Fleet web server. The ALB serves as the single point of contact for all the endpoints and distributes incoming application traffic across multiple nodes. Here is a rough example of how we have set it up at Segment:
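For readers without access to that configuration, the following is a generic sketch rather than Segment's actual setup. It assumes TLS terminates at the ALB, that Fleet listens on plain HTTP on port 8080 behind it, and that an ACM certificate already exists. All names and variables are hypothetical.

```hcl
# Sketch only: ports, names, and variables are assumptions.
variable "vpc_id" {
  type = string
}

variable "public_subnet_ids" {
  type = list(string) # at least two subnets in different zones
}

variable "alb_security_group_ids" {
  type = list(string)
}

variable "certificate_arn" {
  type = string # existing ACM certificate for the Fleet hostname
}

resource "aws_lb" "fleet" {
  name               = "fleet-alb"
  internal           = false
  load_balancer_type = "application"
  subnets            = var.public_subnet_ids
  security_groups    = var.alb_security_group_ids
}

resource "aws_lb_target_group" "fleet" {
  name     = "fleet-web"
  port     = 8080
  protocol = "HTTP"
  vpc_id   = var.vpc_id

  health_check {
    path = "/healthz" # Fleet's health check endpoint
  }
}

resource "aws_lb_listener" "https" {
  load_balancer_arn = aws_lb.fleet.arn
  port              = 443
  protocol          = "HTTPS"
  certificate_arn   = var.certificate_arn

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.fleet.arn
  }
}
```

How targets get registered depends on how the cluster is wired up: worker nodes can be attached as instance targets, or a Kubernetes ingress controller can manage the target group for you.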

If you want to host Fleet on a custom domain, you can register your domain and set up a Route53 Hosted Zone for it. Make sure to add a CNAME record that points your newly registered domain at the ALB created in the step above, so that traffic for the domain reaches the Fleet cluster.
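A minimal sketch of that record in Terraform. The hostname and both input variables are hypothetical placeholders.

```hcl
# Sketch only: the hostname and variables are assumptions.
variable "hosted_zone_id" {
  type = string # ID of the Route53 hosted zone for your domain
}

variable "alb_dns_name" {
  type = string # DNS name AWS assigned to the load balancer
}

resource "aws_route53_record" "fleet" {
  zone_id = var.hosted_zone_id
  name    = "fleet.example.com"
  type    = "CNAME"
  ttl     = 300
  records = [var.alb_dns_name]
}
```

A CNAME cannot be placed at the zone apex (for example `example.com` itself). If Fleet has to live there, a Route53 alias record pointing at the ALB is the usual alternative.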

At Segment we have a standardized central repo for all our Docker images. Here is an example of what the image can look like. In this example you can see that, along with Fleet and fleetctl, we are also installing a binary called Chamber. Chamber is a secret management tool built and open sourced by Segment. We use Chamber for secrets management during the app installation.

At Segment we deployed this image to the EKS Cluster using an internal tool. However, you can also deploy it with kubectl using a config file with the following command:

Here is what the config file can look like:

Once the cluster is up and running and you have successfully enrolled a few hosts in it, you can start thinking about where to forward your scheduled query results. It is standard practice to forward these results to your centralized logging ecosystem in order to analyze them further and set up alerting on top of them.

At the moment Fleet lets you enable log forwarding to any of the following destinations:

Filesystem (Default)

Firehose

Snowflake

Splunk

Kinesis

Lambda

PubSub

Kafka REST Proxy

Stdout

You can find instructions for how to enable this for all the destinations here.

This is what your app will roughly look like once it’s up and running!

This was a first attempt of its kind in many ways so it wouldn’t have been possible without the support of many engineers across teams inside Segment as well as the developers at Fleet. Big shout out to the devs at Fleet and individuals on the Segment Engineering teams including, but not limited to, Infrastructure Engineering, Cloud Security, and Tooling for supporting me in this adventure.

We hope you found this guide useful. If so, please share it with your peers and friends. If you'd like to join us and build or implement cool technologies, please check out the Twilio Careers page for open roles across our Security teams.
