What is Data Integration? Definition, Examples & Systems

Data integration combines critical data from multiple sources into a unified view. Learn how to leverage this for powerful insights, actionable discoveries, and efficient data management.


Data integration is the process of combining data that exists across an organization to create a unified view, which can then be leveraged for analytics and insights.

Often, data becomes scattered across the various tools and databases a business uses in its day-to-day operations. To further complicate things, these tools and databases are often owned by different departments (e.g., Sales owns the CRM, Customer Support uses a help desk, the Analytics team works with the data warehouse). On top of that, the data stored within these tools often varies in how it’s formatted (e.g., structured, semi-structured, unstructured).

It’s a setup that makes data silos inevitable, with each team only having a partial view into how the business functions. This is where data integration comes in.

Data integration consolidates this otherwise fragmented data to create a single source of truth. Integration can be done via ETL pipelines, which extract data from various sources, transform it into a standardized format, then load it into a central repository like a data warehouse or data lake. (You can also use ELT pipelines to transform data after you load it into a repository, depending on your business needs.)
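The extract, transform, load sequence described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the source records, field names, and the `customers` table are all hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import sqlite3

# Hypothetical source records: a CRM export and a help desk export
# that describe the same customers in different shapes.
crm_rows = [
    {"Email": "Ada@Example.com ", "plan": "pro"},
    {"Email": "grace@example.com", "plan": "free"},
]
helpdesk_rows = [
    {"customer_email": "ada@example.com", "open_tickets": "2"},
]


def extract():
    """Extract: pull raw records from each source (in-memory lists here)."""
    return crm_rows, helpdesk_rows


def transform(crm, helpdesk):
    """Transform: standardize emails and types, then merge records by email."""
    customers = {}
    for row in crm:
        email = row["Email"].strip().lower()
        customers[email] = {"email": email, "plan": row["plan"], "open_tickets": 0}
    for row in helpdesk:
        email = row["customer_email"].strip().lower()
        record = customers.setdefault(
            email, {"email": email, "plan": None, "open_tickets": 0}
        )
        record["open_tickets"] = int(row["open_tickets"])
    return sorted(customers.values(), key=lambda r: r["email"])


def load(records, conn):
    """Load: write the unified records into the central repository."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(email TEXT PRIMARY KEY, plan TEXT, open_tickets INTEGER)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO customers VALUES (:email, :plan, :open_tickets)",
        records,
    )
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(*extract()), warehouse)
unified = warehouse.execute(
    "SELECT email, plan, open_tickets FROM customers ORDER BY email"
).fetchall()
```

An ELT variant would load the raw rows first and run the standardization step inside the repository, typically as SQL.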

Other options for data integration include using APIs or middleware to communicate between different tools and systems, or using a standardized query language like SQL (Structured Query Language) to query multiple databases at once. (We’ll dive deeper into these different methods below.)


Data integration got its start with ETL (extract, transform, load) tools in the 1980s. These tools automated data transfers and made otherwise heterogeneous databases interoperable (that is, able to exchange information even when their data was structured differently, hence the need for transformation).

In 1991, the University of Minnesota designed one of the first data integration systems driven by structured metadata for the Integrated Public Use Microdata Series (IPUMS), which consolidated thousands of population databases and international census data and showed the promise of large-scale data integration.

Over time, data integration has become even more complex with growing data volume and velocity, increased use of the internet and cloud-based technologies, and the growing need for real-time data (via event streaming) along with batch processing. As a result, we’ve seen new technologies and methods develop, from using APIs to help communicate between different tools and sources, to leveraging data lakes for storage, or using data hubs to help manage, unify, and process data in a secure and compliant way (as just a few examples).

Below, we’ll get into more detail about these current methods for data integration.

Before we dive into specific tactics and terminology, we wanted to cover a few overarching categories for data integration:

Consolidation: When data is combined from different sources and transferred into a repository, like a data warehouse.

Propagation: When data is copied from its sources and written to a central repository. In this method, data continues to live in its original source, but a copy of it exists in a repository (where it's been combined with other data to form a unified view).

Federation: When data is combined from multiple different databases or sources into a single, virtual view. This method doesn’t actually extract or transfer data from its original sources; instead, it queries the data where it lives and pulls the results in from these disparate locations.
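To make federation more concrete, here is a small sketch in which two separate databases are queried through one virtual view without copying rows between them. It rests on several assumptions: two in-memory SQLite databases stand in for independently owned systems, and the table names, columns, and the `customer_overview` view are invented for illustration. Real federation usually spans different database engines over a network.

```python
import sqlite3

# "main" plays the role of a sales database; "support" is a second,
# separate database attached to the same connection.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS support")

conn.execute("CREATE TABLE main.accounts (email TEXT, plan TEXT)")
conn.execute("CREATE TABLE support.tickets (email TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO main.accounts VALUES (?, ?)",
    [
        ("ada@example.com", "pro"),
        ("grace@example.com", "free"),
        ("linus@example.com", "free"),
    ],
)
conn.executemany(
    "INSERT INTO support.tickets VALUES (?, ?)",
    [
        ("ada@example.com", "open"),
        ("ada@example.com", "closed"),
        ("grace@example.com", "open"),
    ],
)

# A TEMP view may reference tables in any attached database. It stores
# no data of its own; each query reads from the underlying sources.
conn.execute(
    """
    CREATE TEMP VIEW customer_overview AS
    SELECT a.email,
           a.plan,
           SUM(CASE WHEN t.status = 'open' THEN 1 ELSE 0 END) AS open_tickets
    FROM main.accounts AS a
    LEFT JOIN support.tickets AS t ON t.email = a.email
    GROUP BY a.email, a.plan
    """
)

overview = conn.execute(
    "SELECT email, plan, open_tickets FROM customer_overview ORDER BY email"
).fetchall()
```

Because the view is computed at query time, a ticket added to the support database shows up in the next read of `customer_overview` with no reload step.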

Common storage integration is when data is copied from various different sources and then sent to a central repository (usually a data warehouse). True to its name, this method combines data from different storage systems (e.g., on-premises servers and cloud-based apps) to ensure data is formatted and organized in the same way to form a unified view.

Middleware is software that allows different applications to communicate with one another (Microsoft referred to it as a “translation layer”).

With respect to data integration, middleware acts as the intermediary: able to connect to multiple data sources, transform data, and transfer it to a central repository. You can also use middleware to query data from multiple sources to create a unified view (with this option, data remains in its original source, and isn’t copied or moved to a central repository).

To explain application-based integration, let’s briefly cover APIs (application programming interfaces). An API lets two different tools or software components communicate and exchange data. For example, a weather app on your phone typically relies on an API to pull data on local temperatures and forecasts.

So with application-based integration, a business is essentially creating links between its various different apps to share, merge, or transform data. Application-based integration is concerned with how different tools and apps work together, allowing these different systems to share data and functionality.

Application-based integration has been helpful in connecting legacy, on-premises solutions with newer cloud-based applications so that they can work in tandem. This method also allows for real-time data sharing and updates, whereas some data integration methods are done with batch-processing, losing that real-time element as a result.

Uniform access integration is when data is left in its original source, but can be accessed (and uniformly presented) through a single interface – no matter the format that data is in. A few examples of interfaces that can be used for uniform access integration include ODBC (Open Database Connectivity), REST (Representational State Transfer), and GraphQL (a query language for APIs).

Choosing the right data integration strategy for your business will depend on a few factors. The first is scalability: will your data integration strategy continue to be the best choice as the business grows, updates its tech stack, and increases data volume?

Second, does your business depend on access to real-time data? While every business benefits from having the right insights at the right time, for some businesses a lag in data delivery could be disastrous (e.g., crisis response initiatives, financial forecasting and stock trading, healthcare, and more).

Other factors to consider are cost and time commitment (e.g., manually building and maintaining ETL pipelines will require more resources from your engineering team), security (i.e., how you protect data from both a legal and ethical standpoint), and data governance (i.e., how you guarantee the integrity of your data at scale).

Data integration establishes a single source of truth within an organization. Without this, businesses are often basing decisions, strategies, and even product development on incomplete (and, as a result, incorrect) data. Below, we’ve listed a few reasons why data integration is so important for businesses today.

Data democratization is when every team within an organization, regardless of their technical skill set, is able to access, leverage, and understand data – and breaking down data silos is a fundamental step to achieve this.

Data democratization removes bottlenecks that could otherwise crop up with fragmented data. In these scenarios, engineers and analysts often have to custom-build integrations and maintain pipelines to ensure the continuous flow of data and create an interconnected tech stack. Should marketers need an audience list to run a campaign, analysts often have to manually pull this data before exporting it (a process that loses the real-time component as a result, making personalization less effective).

With data democratization, every team within the organization has the ability to access data they know is accurate, saving hundreds of engineering hours in the process.

While it makes sense, operationally, for every team to own a specific part of the business, only having access to the data that’s collected in individual tools is a recipe for inconsistency. Something as simple as referring to an event by two different nomenclatures can wreak havoc on reporting (e.g., log_in vs. logged_in).

Data integration establishes a (sometimes automated) process for merging data records, normalizing data, removing duplicate entries, and aligning everyone around universally defined naming conventions. It also prevents data distrust, which can undermine decision making and collaboration.
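As a rough sketch of what normalizing names and removing duplicate entries can look like, the snippet below maps event-name variants to one canonical name and then drops repeated records. The mapping, the event fields, and the choice of de-duplication key are all assumptions made for this example rather than a prescribed convention.

```python
# Hypothetical naming convention: every known variant maps to one canonical name.
CANONICAL_EVENT_NAMES = {
    "log_in": "logged_in",
    "login": "logged_in",
    "logged_in": "logged_in",
}


def normalize_event(event):
    """Return a copy of the event with its name in canonical form."""
    name = event["event"].strip().lower().replace(" ", "_")
    return {**event, "event": CANONICAL_EVENT_NAMES.get(name, name)}


def deduplicate(events):
    """Keep the first occurrence of each (user, event, timestamp) combination."""
    seen = set()
    unique = []
    for event in events:
        key = (event["user_id"], event["event"], event["timestamp"])
        if key not in seen:
            seen.add(key)
            unique.append(event)
    return unique


raw_events = [
    {"user_id": "u1", "event": "log_in", "timestamp": "2024-01-01T09:00:00Z"},
    {"user_id": "u1", "event": "Logged In", "timestamp": "2024-01-01T09:00:00Z"},
    {"user_id": "u2", "event": "logged_in", "timestamp": "2024-01-01T09:05:00Z"},
]

# Normalize first, so that variants of the same event collapse into one record.
clean_events = deduplicate([normalize_event(e) for e in raw_events])
```

Normalizing before de-duplicating matters here: the first two raw events only look like duplicates once both are named `logged_in`.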

Real-time data has become essential for business performance. As we mentioned above, everything from product development to marketing campaigns and machine learning models depends on having accurate and timely insights. When we talk about real-time data, there are often two things being referred to. The first is employees’ ability to access and act on insights autonomously (reducing the reliance on engineers and analysts while removing bottlenecks). The second is actually capturing and processing that data in real time. With a data integration strategy, you’re solving for both of those things.

Now, let’s get into real-time data capture. This is done via event streaming, when data is processed and analyzed as it’s generated. Then there’s batch processing, when data is processed in sets (or batches) over a specific period of time (e.g., overnight) rather than in real time. Businesses can use both batch processing and event streaming to collect and integrate different types of data at different times. For example, someone sending a customer support ticket might be an event to capture in real time, whereas information pertaining to monthly billing cycles makes sense to be done in batches.
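The difference between the two modes can be sketched as follows. This is a simplified illustration under stated assumptions: a plain Python iterator stands in for a live event stream, a list stands in for a month of accumulated billing records, and the function and field names are hypothetical.

```python
from collections import defaultdict


def handle_stream(events, on_event):
    """Event streaming: act on each event as soon as it arrives."""
    for event in events:  # in production this would be an unbounded stream
        on_event(event)


def run_batch(records):
    """Batch processing: process an accumulated set in one scheduled run."""
    totals = defaultdict(float)
    for record in records:
        totals[record["customer"]] += record["amount"]
    return dict(totals)


# Support tickets are handled one at a time, as they come in.
alerts = []
support_events = iter(
    [
        {"type": "ticket_created", "customer": "ada"},
        {"type": "ticket_created", "customer": "grace"},
    ]
)
handle_stream(
    support_events,
    lambda event: alerts.append(f"notify support: {event['customer']}"),
)

# Billing records are collected over the month and totaled in one pass.
billing_records = [
    {"customer": "ada", "amount": 20.0},
    {"customer": "ada", "amount": 5.0},
    {"customer": "grace", "amount": 12.5},
]
monthly_totals = run_batch(billing_records)
```

The streaming handler never needs the full data set, which is what keeps latency low; the batch job sees everything at once, which suits aggregates like monthly totals.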

You can take a look at how Segment orchestrates event streaming and batch processing here.

When data is scattered across an organization, it doesn’t just create blind spots when it comes to overarching trends or the customer experience. It creates blind spots when it comes to security. When data is integrated, businesses can have complete control over which information is available to which user(s), by creating a single point of access.

A single point of access is when every person within an organization accesses data from one interface or centralized system. By establishing this, businesses are able to set role-based access controls to ensure people aren’t accidentally viewing personally identifiable information (PII). In fact, businesses can even automatically mask or block PII before it’s integrated into a larger data set, for added security.
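One way to picture role-based access combined with PII masking is the sketch below. Everything in it is illustrative: the role names, the set of PII fields, and the masking scheme are assumptions for this example, and a real deployment would rely on the access controls of its warehouse or data platform.

```python
import hashlib

# Hypothetical policy: which fields count as PII, and which roles may see them.
PII_FIELDS = {"email", "phone"}
ROLE_CAN_VIEW_PII = {"support_admin": True, "marketing": False}


def mask_value(value):
    """Replace a PII value with a short, stable token derived from a hash.

    An unsalted hash is not strong anonymization (common values can be
    guessed), so treat this as a placeholder for a proper masking service.
    """
    digest = hashlib.sha256(value.encode("utf-8")).hexdigest()
    return f"masked_{digest[:12]}"


def view_record(record, role):
    """Return the record as the given role is allowed to see it."""
    if ROLE_CAN_VIEW_PII.get(role, False):
        return dict(record)
    # Unknown roles fall through to the masked view by default.
    return {
        key: mask_value(str(value)) if key in PII_FIELDS else value
        for key, value in record.items()
    }


customer = {"email": "ada@example.com", "phone": "555-0100", "plan": "pro"}
admin_view = view_record(customer, "support_admin")
marketing_view = view_record(customer, "marketing")
```

Because the token is derived from the value itself, the same email always masks to the same token, so masked records can still be joined or counted without exposing the underlying address.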

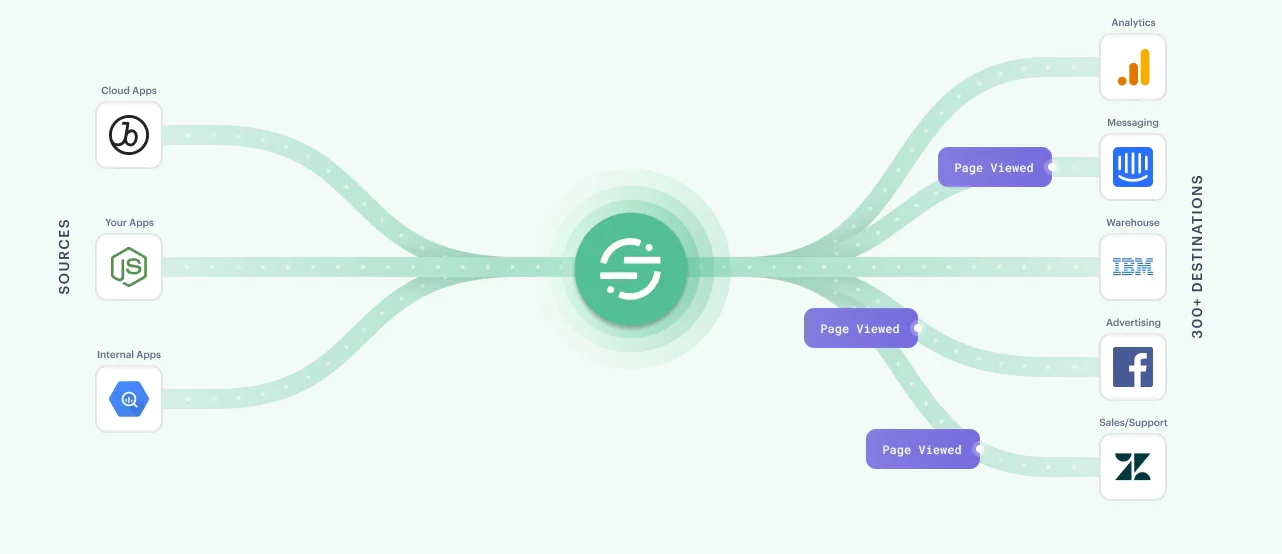

Twilio Segment’s customer data platform (CDP) helps businesses consolidate data at scale and ensure its cleanliness. With hundreds of pre-built integrations, engineers can connect tools and apps within their tech stack in a matter of minutes (and with replay, teams can even test new tools before committing to them). With Segment’s Source Debugger, you can also confirm in real time that API calls from your website, servers, or apps are arriving at your Segment Source (this is a great way to confirm that events are being fired successfully).

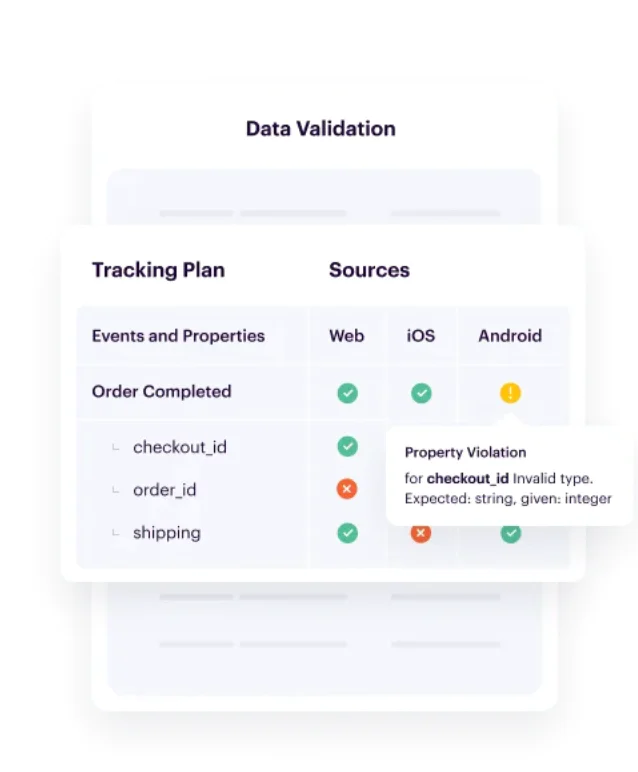

With Segment Protocols, you can also automate QA checks by aligning everyone within the organization around a single tracking plan (and immediately block bad data from being processed). And with the introduction of Segment Unify, businesses can merge complete histories of each customer into a single (deterministic) profile. Teams can then enrich these profiles with data from customer support, CRMs, and more in their data warehouse, and then activate this data in downstream tools with the help of reverse ETL.

Data integration can be a complex process with its own set of challenges. While the goal of data integration is to combine data, businesses are also tasked with ensuring this data is clean and accurate (e.g., de-duplicating entries, normalizing data). Companies also have to consider the scalability of their data integration strategy (e.g., if it's manual, whether they will have the time and resources to build and maintain point-to-point integrations as the business grows). Last but not least, businesses need to establish firm security protocols to ensure they’re protecting customer data and limiting access to PII.

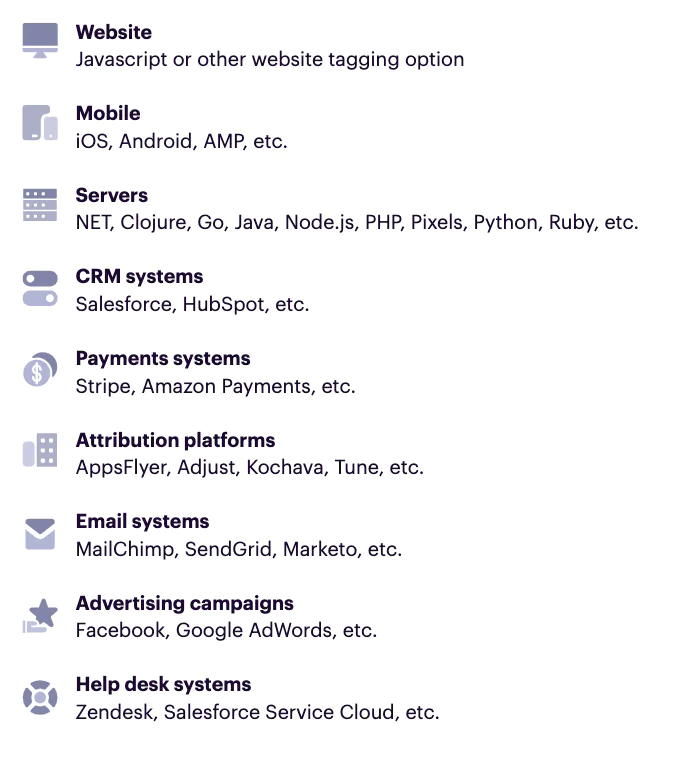

An example of data integration is combining data collected across a company’s email service provider, CRM, help desk, payment systems, and servers into a cloud-based data warehouse using ETL (extract, transform, load).

The purpose of data integration is to create a single, unified view within an organization. Without data integration, teams are often operating in silos, with access only to the data in their owned tools and systems. With a unified view of data, businesses are able to base decision making on complete and correct data, and ensure consistency in reporting across tools and applications.

ETL (extract, transform, load) is a method of data integration in which data is extracted from various different sources, temporarily copied to a “staging area” for transformation (e.g., cleaning and validating data, removing personally identifiable information, etc.), and then loaded into a system like a relational database or data warehouse.

Data integration is a larger term used to describe the various different methods for combining data from disparate sources and loading it into a central repository to create a single, unified view. Different types of data integration include:

Common storage integration

Uniform access integration

Middleware

Application-based integration

Manual integration

Data warehousing is a type of data integration, also known as common storage integration. With this method, data is copied from its original source, transformed, and then loaded into a central repository like a data warehouse. Data integration is a broader term to describe different methods, strategies, and processes for combining data across an organization (e.g., via middleware, APIs, manually building ETL pipelines and point-to-point integrations, data virtualization, etc.).