The right way to manage secrets with AWS

The way companies manage application secrets is critical. Even today, improper secrets management has resulted in an astonishing number of high-profile breaches.

Having internet-facing credentials is like leaving your house key under a doormat that millions of people walk over daily. Even if the secrets are hard to find, it’s a game of hide and seek that you will eventually lose.

At Segment we centrally and securely manage our secrets with AWS Parameter Store, lots of Terraform configuration, and chamber.

While tools like Vault, Credstash, and Confidant have gotten a lot of buzz recently, Parameter Store is consistently overlooked when discussing secrets management.

After using Parameter Store for a few months, we’ve come to a different conclusion: if you are running all of your infrastructure on AWS and you are not using Parameter Store to manage your secrets, then you are crazy! This post has all of the information you need to get running with Parameter Store in production.

Before diving into Parameter Store itself, it’s worth briefly discussing how service identity works within an AWS account.

At Segment, we run hundreds of services that communicate with one another, AWS APIs, and third-party APIs. The services we run have different needs and should only have access to systems that are strictly necessary. This is called the ‘principle of least privilege’.

As an example, our main webserver should never have access to read security audit logs. Without giving containers and services an identity, it's not possible to protect and restrict access to secrets with access control policies.

Our services identify themselves using IAM roles. From the AWS docs:

An IAM role … is an AWS identity with permission policies that determine what the identity can and cannot do in AWS.

IAM roles can be assumed by almost anything: AWS users, running programs, Lambda functions, or EC2 instances. In every case, the role describes what the user or service can and cannot do.

For example, our IAM roles for instances have write-only access to an S3 bucket for appending audit logs, but are prevented from reading or deleting those logs.
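As a rough sketch of what such a policy might look like (not Segment's actual policy), the Python below builds an IAM policy document that allows writing objects to an audit bucket and explicitly denies reading or deleting them. The bucket name is hypothetical. Because s3:PutObject can also overwrite an existing key, a real setup would likely pair this with bucket versioning or S3 Object Lock.

```python
import json

# Hypothetical bucket name, used only for illustration.
AUDIT_BUCKET = "example-audit-logs"


def audit_log_policy(bucket: str) -> dict:
    """IAM policy granting write-only access to objects in `bucket`."""
    objects = f"arn:aws:s3:::{bucket}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allow writing new audit log objects.
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": objects,
            },
            {
                # An explicit Deny overrides any Allow granted by other policies.
                "Effect": "Deny",
                "Action": [
                    "s3:GetObject",
                    "s3:GetObjectVersion",
                    "s3:DeleteObject",
                    "s3:DeleteObjectVersion",
                ],
                "Resource": objects,
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(audit_log_policy(AUDIT_BUCKET), indent=2))
```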

How do containers get their role securely?

A requirement of using ECS is that every instance in the cluster must run the EC2 Container Service Agent (ecs-agent). The agent itself runs as a container; it orchestrates the other containers and provides an API they can communicate with. The agent is the central nervous system that tracks which containers are scheduled on which instances, as well as which IAM role credentials should be provided to a given container.

In order to function properly, the ecs-agent runs an HTTP API that must be accessible to the other containers running in the cluster. The API itself is used for health checks and for injecting credentials into each container.

To make this API available inside a container on the host, an iptables rule is set on the host instance. This iptables rule forwards traffic destined for a magic IP address to the ecs-agent container.
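The magic address is 169.254.170.2, and the agent's credentials endpoint listens on local port 51679. The sketch below only prints NAT rules of the kind AWS documents for installing the agent manually; on ECS-optimized AMIs the agent's init process normally sets this up, so treat it as an illustration of the forwarding rather than something you need to run. The helper name is hypothetical.

```python
import shlex

# Link-local address containers use to reach the credentials endpoint,
# and the local port where ecs-agent serves it.
CREDENTIALS_IP = "169.254.170.2"
AGENT_PORT = 51679


def credential_forwarding_rules(ip: str = CREDENTIALS_IP, port: int = AGENT_PORT) -> list[list[str]]:
    """Return iptables argv lists that send port-80 traffic for `ip` to the agent."""
    return [
        # Packets arriving from containers are rewritten to the agent on localhost.
        # DNAT to 127.0.0.1 from PREROUTING also requires
        # net.ipv4.conf.all.route_localnet=1 on the host.
        ["iptables", "-t", "nat", "-A", "PREROUTING", "-p", "tcp", "-d", ip,
         "--dport", "80", "-j", "DNAT", "--to-destination", f"127.0.0.1:{port}"],
        # Packets generated on the host itself are redirected to the local port.
        ["iptables", "-t", "nat", "-A", "OUTPUT", "-p", "tcp", "-d", ip,
         "--dport", "80", "-j", "REDIRECT", "--to-ports", str(port)],
    ]


if __name__ == "__main__":
    for rule in credential_forwarding_rules():
        print(shlex.join(rule))
```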

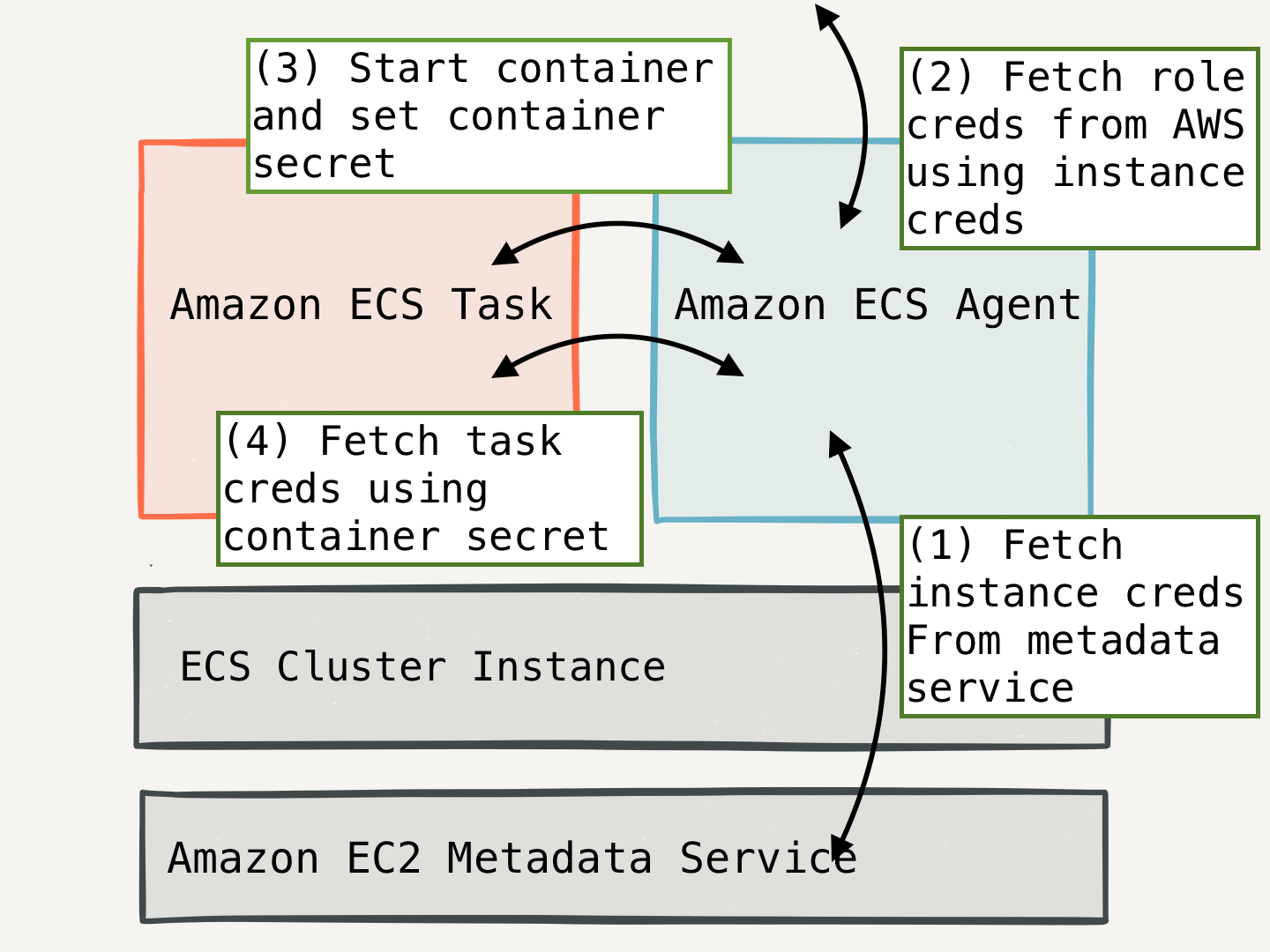

Before ecs-agent starts a container, it first fetches credentials for the container’s task role from the AWS credential service. Next, ecs-agent embeds the credentials’ key ID, a UUID, in the AWS_CONTAINER_CREDENTIALS_RELATIVE_URI environment variable, which it sets inside the container when the container starts.

Inside the container, this variable holds a relative URI that ends with that UUID.

Using this relative URI and UUID, containers fetch AWS credentials from the ecs-agent over HTTP.
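In practice, AWS SDKs such as boto3 perform this lookup automatically when AWS_CONTAINER_CREDENTIALS_RELATIVE_URI is set. To make the mechanism concrete, here is a minimal standard-library sketch. The helper names are hypothetical; the JSON field names (AccessKeyId, SecretAccessKey, Token, Expiration) are the ones the ECS credentials endpoint returns.

```python
import json
import os
import urllib.request
from typing import Mapping, Optional

# Fixed link-local host that the host's iptables rules route to ecs-agent.
ECS_CREDENTIALS_HOST = "http://169.254.170.2"


def credentials_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Join the fixed host with the per-container relative URI (which holds the UUID)."""
    env = os.environ if env is None else env
    return ECS_CREDENTIALS_HOST + env["AWS_CONTAINER_CREDENTIALS_RELATIVE_URI"]


def parse_credentials(body: bytes) -> dict:
    """Extract the temporary credentials an SDK needs to sign requests."""
    doc = json.loads(body)
    return {
        "access_key_id": doc["AccessKeyId"],
        "secret_access_key": doc["SecretAccessKey"],
        "session_token": doc["Token"],
        "expiration": doc["Expiration"],
    }


def fetch_task_credentials(timeout: float = 2.0) -> dict:
    """Only works inside an ECS task, where ecs-agent has set the variable."""
    with urllib.request.urlopen(credentials_url(), timeout=timeout) as resp:
        return parse_credentials(resp.read())
```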

One container cannot fetch another container’s credentials to impersonate it, because the UUID is too difficult to guess.

Additional security details

As heavy ECS users, we did find some security foot-guns associated with ECS task roles.

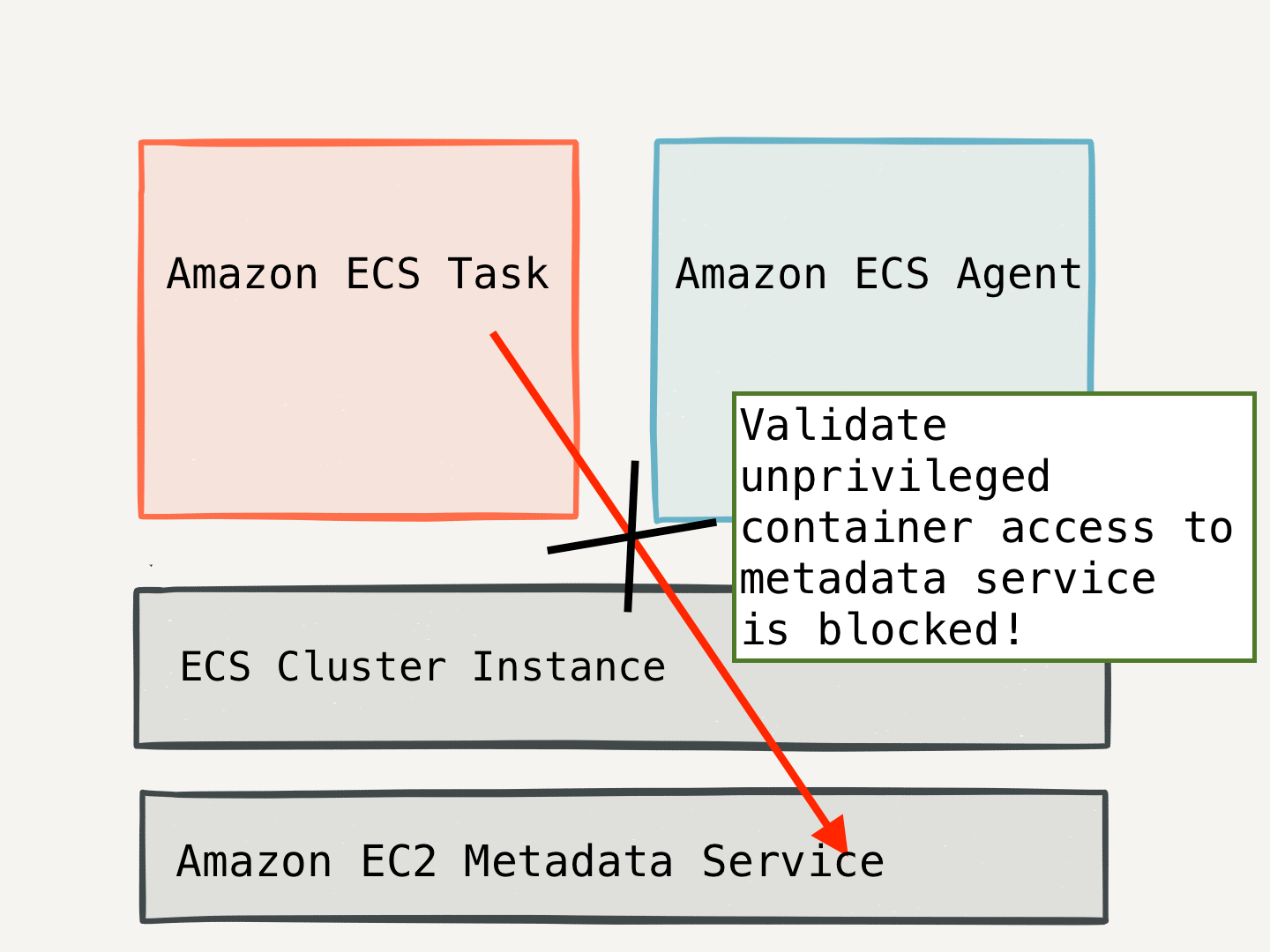

First, it’s very important to realize that any container that can access the EC2 metadata service can become any other task role on the system. If you aren’t careful, it’s easy to overlook that a given container can circumvent access control policies and gain access to unauthorized systems.

There are two ways a container can access the metadata service: a) by using host networking and b) via the Docker bridge.

When a container is run with --network='host', it is able to connect to the EC2 metadata service using its host’s network. Setting the ECS_ENABLE_TASK_IAM_ROLE_NETWORK_HOST variable to false in the ecs.config prevents containers from running with this privilege.

Additionally, it’s important to use iptables to block access to the metadata service IP address over the Docker bridge. The IAM task role documentation recommends a specific iptables rule for preventing this access.
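For reference, the rule in AWS's task role documentation inserts a DROP at the top of the FORWARD chain for traffic from any docker* interface to 169.254.169.254, the EC2 metadata address. The sketch below builds that rule and prints it by default. The helper names are hypothetical, applying the rule requires root, and it does not survive a reboot unless you persist it with your distribution's iptables save mechanism.

```python
import shlex
import subprocess

EC2_METADATA_IP = "169.254.169.254"


def block_metadata_rule(ip: str = EC2_METADATA_IP) -> list[str]:
    """iptables argv that drops container traffic to the metadata service."""
    return [
        "iptables",
        "--insert", "FORWARD", "1",      # position 1: evaluated before existing ACCEPTs
        "--in-interface", "docker+",     # "+" matches any interface named docker*
        "--destination", f"{ip}/32",
        "--jump", "DROP",
    ]


def apply_rule(rule: list[str], dry_run: bool = True) -> None:
    """Print the rule, or run it when dry_run is False."""
    if dry_run:
        print(shlex.join(rule))
    else:
        subprocess.run(rule, check=True)  # needs root on the host


if __name__ == "__main__":
    apply_rule(block_metadata_rule())
```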

The principle of least privilege is always important to keep in mind when building a security system. Setting ECS_DISABLE_PRIVILEGED to true in the host’s ecs.config can prevent privileged Docker containers from being run and causing other more nuanced security problems.
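Both settings live in the agent's ecs.config file (usually /etc/ecs/ecs.config), which holds one KEY=value pair per line. A minimal sketch of merging them into an existing file's contents might look like the following. ECS_ENABLE_TASK_IAM_ROLE is included on the assumption that task roles are in use, ECS_CLUSTER=example is a hypothetical placeholder, and the helper name is hypothetical too. The sketch drops comments, and the agent reads this file at startup, so it needs a restart to pick up changes.

```python
# Hardening settings from the text, plus ECS_ENABLE_TASK_IAM_ROLE (assumed in use).
HARDENING = {
    "ECS_ENABLE_TASK_IAM_ROLE": "true",
    "ECS_ENABLE_TASK_IAM_ROLE_NETWORK_HOST": "false",  # no task roles with host networking
    "ECS_DISABLE_PRIVILEGED": "true",                  # refuse privileged containers
}


def render_ecs_config(existing: str, overrides: dict[str, str]) -> str:
    """Merge KEY=value overrides into ecs.config text; comments are dropped."""
    settings: dict[str, str] = {}
    for raw in existing.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    settings.update(overrides)  # overrides replace any existing values
    return "".join(f"{key}={value}\n" for key, value in settings.items())


if __name__ == "__main__":
    print(render_ecs_config("ECS_CLUSTER=example\nECS_DISABLE_PRIVILEGED=false\n", HARDENING), end="")
```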

Now that we’ve established how containers get an identity and obtain their credentials, we come to the second part of the equation: Parameter Store.

Parameter Store is an AWS service that stores strings. It can store secret and non-secret data alike. Secrets in Parameter Store are stored as “secure strings” and encrypted with a customer-specific KMS key.
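As an illustration, writing a secure string with boto3's SSM client means calling put_parameter with Type="SecureString" and the KMS key to encrypt with. The /service/key naming, the helper name, and the KMS alias in the usage note are assumptions for this sketch, not necessarily Segment's conventions.

```python
def write_secret(ssm, service: str, key: str, value: str, kms_key_id: str) -> int:
    """Store `value` as a SecureString at /<service>/<key>; return its version.

    `ssm` is a boto3 SSM client, e.g. boto3.client("ssm").
    """
    response = ssm.put_parameter(
        Name=f"/{service}/{key}",   # assumed naming convention
        Value=value,
        Type="SecureString",        # encrypted at rest with the KMS key below
        KeyId=kms_key_id,           # key ID, key ARN, or alias
        Overwrite=True,             # an existing name gets a new version
    )
    return response["Version"]
```

For example, write_secret(boto3.client("ssm"), "loadbalancers", "tls_key", pem_text, "alias/parameter-store") would store a new version under a hypothetical KMS alias.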

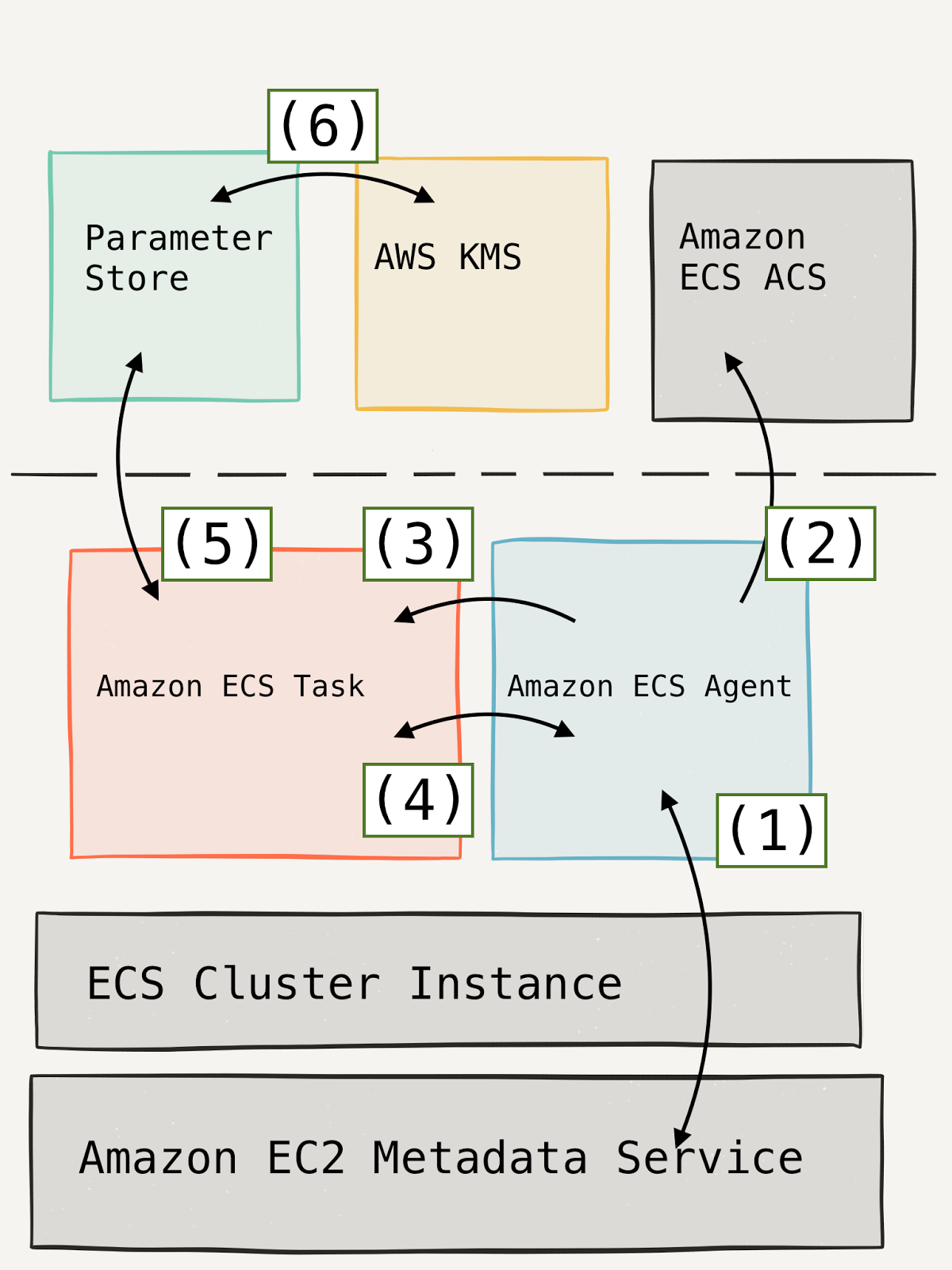

When a service requests secure strings from Parameter Store, a lot happens behind the scenes:

ecs-agent requests the host instance’s temporary credentials.

ecs-agent continuously generates temporary credentials for each ECS task role running on the instance, using an undocumented service called ECS ACS.

When ecs-agent starts each task, it sets a secret UUID in the environment of the container.

When the task needs its task role credentials, it requests them from the ecs-agent API and authenticates with the secret UUID.

The ECS task requests its secrets from Parameter Store using the task role credentials.

Parameter Store transparently decrypts these secure strings before returning them to the ECS task.
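From the task's side, the last few steps look like an ordinary SDK call: inside an ECS task, boto3's default credential chain fetches the task role credentials from ecs-agent on its own. A minimal sketch with a hypothetical helper name; it batches names because GetParameters accepts at most 10 names per request.

```python
def read_secrets(ssm, names: list[str]) -> dict[str, str]:
    """Fetch and decrypt parameters by name using a boto3 SSM client."""
    values: dict[str, str] = {}
    missing: list[str] = []
    for start in range(0, len(names), 10):  # GetParameters takes up to 10 names
        response = ssm.get_parameters(Names=names[start:start + 10], WithDecryption=True)
        for param in response["Parameters"]:
            values[param["Name"]] = param["Value"]
        # Names that don't exist are reported here instead of raising an error.
        missing.extend(response.get("InvalidParameters", []))
    if missing:
        raise KeyError(f"parameters not found: {missing}")
    return values
```

Called as read_secrets(boto3.client("ssm"), ["/nginx/tls_key"]), a role whose policy does not cover that name gets a botocore ClientError rather than the value.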

Using roles with Parameter Store is especially nice because it doesn’t require maintaining additional authentication tokens, which would only mean more headaches and more secrets to manage!

Parameter Store IAM Policies

Each role that accesses Parameter Store requires the ssm:GetParameters permission. “SSM” stands for “Simple Systems Manager”, the former name of AWS Systems Manager (the service Parameter Store belongs to), and is the prefix AWS uses for Parameter Store operations.

The ssm:GetParameters permission is the policy used to enforce access control and protect one service’s secrets from another. Segment gives all services an IAM role that grants access to secrets that match the format {{service_name}}/*.
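A minimal sketch of such a policy, built as a Python dict. The region and account ID in the test are placeholders, and the helper name is hypothetical. A hierarchical parameter named /nginx/tls_key has the ARN ...:parameter/nginx/tls_key (no doubled slash), so the resource pattern below matches every name under /nginx/.

```python
def parameter_read_policy(prefix: str, region: str, account_id: str) -> dict:
    """Allow ssm:GetParameters only on parameters under `prefix`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["ssm:GetParameters"],
                "Resource": f"arn:aws:ssm:{region}:{account_id}:parameter/{prefix}/*",
            }
        ],
    }
```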

In addition to the access control policies, Segment uses a dedicated AWS KMS key to encrypt secure strings within Parameter Store. Each IAM role is granted a small set of KMS permissions in order to decrypt the secrets it stores in Parameter Store.
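The post doesn't list the exact KMS permissions. Assuming kms:Decrypt is the one a reader of secure strings needs, a statement for it might look like the sketch below. The kms:ViaService condition, which only permits using the key through Parameter Store in the given region, is an optional hardening step added here for illustration, not something the original setup is known to use.

```python
def kms_decrypt_statement(key_arn: str, region: str) -> dict:
    """IAM statement letting a role decrypt secure strings encrypted with `key_arn`."""
    return {
        "Effect": "Allow",
        "Action": ["kms:Decrypt"],
        "Resource": key_arn,
        "Condition": {
            # Optional: only honor requests that SSM makes on the caller's behalf.
            "StringEquals": {"kms:ViaService": f"ssm.{region}.amazonaws.com"}
        },
    }
```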

Of course, creating all of these pieces of boilerplate by hand quickly becomes tiresome. Instead, we automate the creation and configuration of these roles via Terraform modules.

Segment has a small Terraform module that abstracts away the creation of a unique IAM role, load balancers, DNS records, autoscaling, and CloudWatch alarms. Our nginx load balancer, for example, is defined using this service module.

Under the hood, the task role given to each service has all of the IAM policies we previously listed, restricting access to Parameter Store by the value in the module’s name field. No additional configuration is required.

Additionally, developers have the option to override which secrets their service has access to by providing a “secret label”. This secret label will replace their service name in their IAM policy. If nginx were to need the same secrets as an HAProxy instance, the two services can share credentials by using the same secret label.
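Conceptually, the secret label just swaps which prefix ends up in the policy resource. A sketch with hypothetical helper names and placeholder region and account values:

```python
from typing import Optional


def secret_prefix(service_name: str, secret_label: Optional[str] = None) -> str:
    """Use the secret label when one is given, otherwise the service name."""
    return secret_label or service_name


def secrets_resource(service_name: str, secret_label: Optional[str] = None,
                     region: str = "us-east-1", account_id: str = "123456789012") -> str:
    """Parameter Store resource ARN pattern for a service (placeholder region/account)."""
    prefix = secret_prefix(service_name, secret_label)
    return f"arn:aws:ssm:{region}:{account_id}:parameter/{prefix}/*"


# nginx opts into HAProxy's secrets by sharing its label.
assert secrets_resource("nginx", secret_label="haproxy") == secrets_resource("haproxy")
```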

All Segment employees authenticate with AWS using aws-vault, which can securely store AWS credentials in the macOS keychain or in an encrypted file for Linux users.

Segment has several AWS accounts. Engineers can interact with each account by using the aws-vault command and executing commands locally with their AWS credentials populated in their environment.

This is great for AWS APIs, but the AWS CLI leaves a bit to be desired when it comes to interacting with Parameter Store. For that, we use Chamber.

Using Chamber with Parameter Store

Chamber is a CLI tool that we built internally to allow developers and code to communicate with Parameter Store in a consistent manner.

By allowing developers to use the same tools that run in production, we reduce the differences between code running in development and code running in staging and production.

Chamber works out of the box with aws-vault, and has only a few key subcommands:

exec - a command after loading secrets into the environment.

history - of changes to a secret in Parameter Store.

list - the names of all secrets in a secret namespace.

write - a secret to the Parameter Store.

Chamber leverages Parameter Store’s built-in search and history mechanisms to implement the list and history subcommands. All strings stored in Parameter Store are automatically versioned as well, so we gain a built-in audit trail.
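The version trail is exposed through the GetParameterHistory API. Here is a sketch of a history-style lookup with boto3 (the helper name is hypothetical, and this is not chamber's implementation). It passes WithDecryption=False so that auditing who changed what never decrypts the values, and it follows NextToken because results are paginated.

```python
def secret_history(ssm, name: str) -> list[dict]:
    """Return every version of parameter `name`, sorted by version number."""
    versions = []
    kwargs = {"Name": name, "WithDecryption": False}
    while True:
        response = ssm.get_parameter_history(**kwargs)
        for entry in response["Parameters"]:
            versions.append({
                "version": entry["Version"],
                "modified": entry["LastModifiedDate"],
                "user": entry.get("LastModifiedUser"),
            })
        token = response.get("NextToken")
        if not token:
            break
        kwargs["NextToken"] = token
    return sorted(versions, key=lambda v: v["version"])
```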

The subcommand used to fetch secrets from Parameter Store is exec, and developers run it through aws-vault.

For example, when a developer uses aws-vault to run chamber exec for the loadbalancers service with nginx as the command, chamber executes with the credentials and permissions of that employee in the development account and fetches the secrets associated with loadbalancers from Parameter Store. After chamber populates the environment, it runs the nginx server.
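Chamber's internals aren't described here, so the following is only a rough Python approximation of what an exec-style command does: load every parameter under a service's path, turn each name into an environment variable, and run the command with that environment. The naming rule (last path segment, upper-cased, dashes replaced with underscores) and the helper names are assumptions for the sketch. It uses get_parameters_by_path, which needs the ssm:GetParametersByPath permission in addition to the policy described earlier, and it replaces the current process with os.execvpe rather than forwarding signals to a child.

```python
import os


def env_name(parameter_name: str) -> str:
    """'/loadbalancers/tls-cert' -> 'TLS_CERT' (assumed naming rule)."""
    return parameter_name.rsplit("/", 1)[-1].upper().replace("-", "_")


def load_service_env(ssm, service: str) -> dict[str, str]:
    """Decrypt every parameter directly under /<service> into an env dict."""
    env: dict[str, str] = {}
    kwargs = {"Path": f"/{service}", "WithDecryption": True}
    while True:
        response = ssm.get_parameters_by_path(**kwargs)
        for param in response["Parameters"]:
            env[env_name(param["Name"])] = param["Value"]
        token = response.get("NextToken")
        if not token:
            return env
        kwargs["NextToken"] = token


def exec_with_secrets(ssm, service: str, argv: list[str]) -> None:
    """Replace this process with `argv`, adding the service's secrets to the environment."""
    env = dict(os.environ)
    env.update(load_service_env(ssm, service))
    os.execvpe(argv[0], argv, env)  # does not return on success
```

Usage would look like exec_with_secrets(boto3.client("ssm"), "loadbalancers", ["nginx"]).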

Running chamber in production

In order to populate secrets in production, chamber is packaged inside our Docker containers as a binary and set as the container’s entrypoint. Chamber passes signals through to the program it executes, so that the program can handle them gracefully.
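The signal-forwarding pattern can be sketched in a few lines of Python. This is a generic illustration of the technique, not chamber's code, and the helper name is hypothetical: the wrapper starts the program as a child process, relays SIGTERM, SIGINT, and SIGHUP to it (so a container stop request reaches the real program), and exits with the child's status.

```python
import signal
import subprocess
import sys

FORWARDED_SIGNALS = (signal.SIGTERM, signal.SIGINT, signal.SIGHUP)


def run_forwarding_signals(argv: list[str], env=None) -> int:
    """Run argv as a child, relay signals to it, and return a shell-style exit code."""
    child = subprocess.Popen(argv, env=env)

    def forward(signum, _frame):
        child.send_signal(signum)  # let the child decide how to shut down

    previous = {sig: signal.signal(sig, forward) for sig in FORWARDED_SIGNALS}
    try:
        code = child.wait()
    finally:
        # Restore whatever handlers were installed before.
        for sig, handler in previous.items():
            signal.signal(sig, handler)
    # A negative code means the child was killed by that signal; map it to 128+N.
    return 128 - code if code < 0 else code


if __name__ == "__main__":
    sys.exit(run_forwarding_signals(sys.argv[1:]))
```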

Making our main website chamber-ready required only a small diff.

Services that don’t run in Docker can also use chamber to populate the environment before rendering configuration files from templates, running daemons, and so on. We simply wrap their command with the chamber executable, and we’re off to the races.

As a last piece of our security story, we want to make sure that every action we described above is logged and audited. Fortunately for us, all access to the AWS Parameter Store is logged with CloudTrail.

This makes keeping a full audit trail for all parameters simple and inexpensive. It also makes building custom alerting and audit logging straightforward.

CloudTrail makes it possible to determine exactly which secrets are used, which in turn makes it possible to discover unused secrets or unauthorized access to secrets.
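As an example of that kind of analysis, the sketch below reads CloudTrail log records and maps each parameter name to the principals that successfully read it, then lists known parameters nobody read. It relies on the CloudTrail record fields eventSource, eventName, errorCode, userIdentity.arn, and requestParameters; the exact shape of requestParameters for SSM calls is an assumption worth checking against your own logs. The helper names are hypothetical.

```python
import gzip
import json
from collections import defaultdict

READ_EVENTS = {"GetParameter", "GetParameters"}


def load_cloudtrail_file(path: str) -> list[dict]:
    """CloudTrail delivers gzipped JSON files with a top-level "Records" list."""
    with gzip.open(path, "rt") as f:
        return json.load(f)["Records"]


def parameter_readers(records: list[dict]) -> dict[str, set[str]]:
    """Map parameter name -> set of principal ARNs that successfully read it."""
    readers: dict[str, set[str]] = defaultdict(set)
    for record in records:
        if record.get("eventSource") != "ssm.amazonaws.com":
            continue
        if record.get("eventName") not in READ_EVENTS:
            continue
        if record.get("errorCode"):
            continue  # failed or denied calls; worth alerting on separately
        params = record.get("requestParameters") or {}
        names = params.get("names") or ([params["name"]] if "name" in params else [])
        principal = record.get("userIdentity", {}).get("arn", "unknown")
        for name in names:
            readers[name].add(principal)
    return readers


def unused_parameters(all_names: list[str], readers: dict[str, set[str]]) -> list[str]:
    """Known parameters that no principal read in the analysed logs."""
    return sorted(set(all_names) - set(readers))
```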

AWS logs all Parameter Store access for free as CloudTrail management events. CloudTrail can deliver these events to S3, and most Security Information and Event Management (SIEM) solutions can be configured to watch and read data from S3.

By using Parameter Store and IAM, we were able to build a small tool that gives us all of the properties that matter most to us in a secrets management system, with none of the management overhead. In particular, we can:

Protect secrets at rest with strong encryption.

Enforce strong access control policies.

Create audit logs of authentication and access history.

Provide a great developer experience.

Best of all, these features are made possible with only a little configuration and zero service management.

Secrets management is very challenging to get right. Many products have been built to manage secrets, but none fit the use cases needed by Segment better than Parameter Store.
