Top 6 Big Data Tools for Success in 2023 (Open-Source)

Explore the top big data tools to accelerate business insights. Discover how these innovative solutions can transform your data analytics and profit margins.

The volume of data generated each day is enormous (roughly 328.77 million terabytes) and keeps growing. To scale alongside it, businesses need the right tools: fault-tolerant yet open and efficient systems that play well with other data tools and minimize the use of computing resources. These tools also need interfaces that developers (and, in some cases, non-technical analysts) can use. And, of course, they need to fit your budget.

To that end, here’s a list of open-source big data tools to consider adding to your big data tech stack.

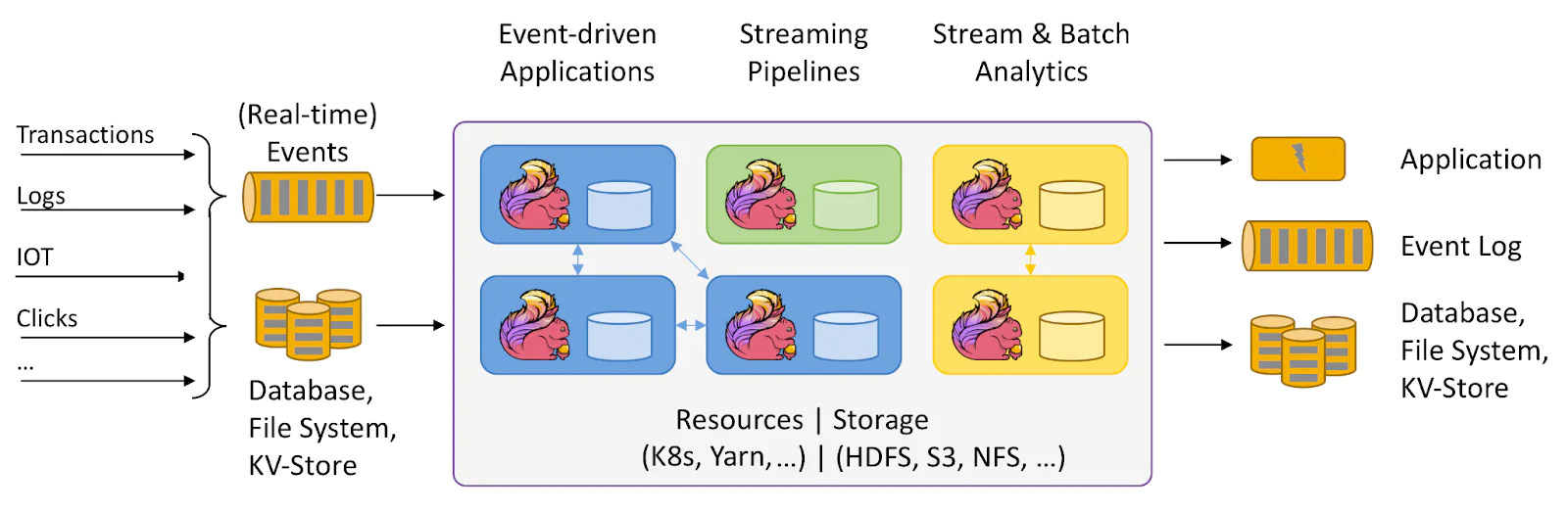

Flink is a data processing framework and distributed processing engine released by the Apache Software Foundation in 2011. It has gained popularity as a big data tool as it allows for stateful computation over bounded (finite) and unbounded (infinite) data streams.

Programs for Flink can be written in SQL, Python, Java, and Scala. Flink isn’t tied to one particular data storage system and instead uses connectors to data sources and data stores.

While Flink supports both batch and stream processing, it’s the latter that sets it apart from other big data tools. Flink allows for data transformation and computations as soon as a data event is captured – without disrupting the data stream.

Another key characteristic of Flink is fault tolerance through the use of checkpoints, which capture an application’s state and stream positions at given intervals (for example, every 10 minutes). In case of unexpected failures, you can reprocess events from a particular time instead of all events in the app’s history.

While checkpoints are managed by Flink, you control when to trigger savepoints – manually captured snapshots of an application’s state. This feature lets you keep business-critical applications available even while you’re running a migration, an upgrade, or an A/B test of different app versions.
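To make the checkpoint idea concrete, here's a minimal sketch in plain Python. It is not Flink's API: the `CountingJob` class, its method names, and the count-based checkpoint interval are all assumptions made for illustration (Flink checkpoints on a time interval). The point is only that a snapshot stores both the state and the stream position, so recovery replays events from the snapshot onward rather than from the beginning.

```python
class CountingJob:
    """Toy stateful job (hypothetical): counts events and tracks its stream position."""

    def __init__(self, checkpoint_interval):
        self.counts = {}   # application state
        self.offset = 0    # position in the input stream
        self.checkpoint_interval = checkpoint_interval
        self.checkpoint = {"counts": {}, "offset": 0}

    def process(self, events):
        # Resume from the current offset so each event is applied once.
        for event in events[self.offset:]:
            self.counts[event] = self.counts.get(event, 0) + 1
            self.offset += 1
            if self.offset % self.checkpoint_interval == 0:
                # Snapshot the state together with the stream position.
                self.checkpoint = {"counts": dict(self.counts), "offset": self.offset}

    def restore(self):
        # Roll back to the last snapshot after a failure.
        self.counts = dict(self.checkpoint["counts"])
        self.offset = self.checkpoint["offset"]


events = ["click", "view", "click", "buy", "click"]
job = CountingJob(checkpoint_interval=2)
job.process(events[:3])  # snapshot taken after event 2; event 3 is not yet snapshotted
job.restore()            # simulated failure: state rolls back to offset 2
job.process(events)      # only events from offset 2 onward are replayed
```

After recovery, the counts match what an uninterrupted run would have produced, even though the first two events were never reprocessed.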

Thanks to its high throughput capacity combined with very low latency, Flink is becoming a preferred engine for continuous analyses of large data volumes from multiple sources. Flink also supports advanced business logic, such as setting customized window logic – making it a versatile tool that you can adapt to different analytics scenarios.
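As a rough illustration of window logic, the sketch below groups events into fixed, non-overlapping (tumbling) windows and counts event types per window. It is plain Python rather than Flink code, and the function name, the `(timestamp, key)` event shape, and the sample data are assumptions for the example.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count keys per fixed, non-overlapping window of `window_seconds`."""
    windows = defaultdict(lambda: defaultdict(int))
    for timestamp, key in events:
        # Every timestamp maps to exactly one window start.
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}


# Hypothetical events: (seconds since start, event type)
events = [(1, "page_view"), (4, "purchase"), (12, "page_view"),
          (14, "page_view"), (21, "purchase")]
result = tumbling_window_counts(events, window_seconds=10)
```

Custom window logic in a real engine changes how events are assigned to windows (for example, overlapping or session-based windows); the aggregation step stays conceptually the same.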

E-commerce giant Alibaba uses Flink to gain real-time visibility into inventory, while French telco leader Bouygues Telecom uses it to monitor networks across the country and immediately detect outages. Other complex use cases include stock market and forex analysis, personalized content recommendations on video streaming apps, and fraud detection across global financial systems.

Flink's stream-processing capability speeds up the reaction time for critical recommendation and alert systems and could boost the large-scale adoption of exciting technologies like IoT and augmented reality.

Computer scientist Matei Zaharia began developing Spark at the University of California, Berkeley's AMPLab in 2009. The project was open-sourced in 2010 and later donated to the Apache Software Foundation, where it became a top-level project in 2014.

Like Flink, Spark is a distributed processing framework for fast, large-scale data analytics. There’s one key difference: Spark emulates stream processing by processing events in micro-batches, while Flink delivers real-time stream processing by triggering computations on event data as soon as it is received.
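The difference between the two models can be sketched in a few lines of plain Python. Neither function uses Spark or Flink; the names are hypothetical, and the batches here are cut by event count for simplicity, whereas a real micro-batch engine typically cuts them by time interval.

```python
def per_event(events, handle):
    """Trigger the computation as soon as each event arrives."""
    return [handle([event]) for event in events]


def micro_batches(events, batch_size, handle):
    """Buffer events and trigger the computation once per small batch."""
    return [handle(events[i:i + batch_size])
            for i in range(0, len(events), batch_size)]


amounts = [5, 7, 3, 10, 2]                # hypothetical purchase amounts
streamed = per_event(amounts, sum)        # one result per event
batched = micro_batches(amounts, 2, sum)  # one result per batch of up to 2 events
```

With per-event processing, a result is available for every event; with micro-batches, the first event in a batch waits until the batch closes, which is where the extra latency comes from.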

Spark's fault tolerance and efficiency stem from its core data structure, the Resilient Distributed Dataset (RDD). An RDD tracks the transformations applied to it in a lineage graph, so if part of the data is lost, the system can recompute it by replaying those transformations. Data records are distributed across multiple nodes that process them in parallel, which speeds up operations.
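Here's a minimal sketch of lineage-based recovery, assuming a toy single-machine dataset. The `LineageDataset` class and its methods are hypothetical and are not Spark's RDD API; the example only shows that recording how data was derived is enough to rebuild it after a loss.

```python
class LineageDataset:
    """Toy dataset (hypothetical) that records how it was derived."""

    def __init__(self, source, transformations=()):
        self.source = source                           # durable input data
        self.transformations = tuple(transformations)  # lineage, in order
        self._cache = None                             # computed result, may be lost

    def map(self, fn):
        # A transformation extends the lineage; nothing is computed yet.
        return LineageDataset(self.source, self.transformations + (fn,))

    def collect(self):
        if self._cache is None:
            data = list(self.source)
            for fn in self.transformations:
                data = [fn(x) for x in data]
            self._cache = data
        return self._cache

    def lose_cache(self):
        # Simulate losing the computed data, e.g. a node failure.
        self._cache = None


base = LineageDataset([1, 2, 3])
derived = base.map(lambda x: x * 2).map(lambda x: x + 1)
first = derived.collect()
derived.lose_cache()
recovered = derived.collect()  # rebuilt by replaying the recorded transformations
```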

Spark is a multi-language engine that works with Python, SQL, Scala, Java, and R. Use it as an SQL query engine for business intelligence reporting or for running exploratory data analysis on petabytes of data.

Plenty of use cases that apply to Flink can also be performed with Spark, especially if true real-time processing is not a critical requirement.

For instance, Spark can support:

- Dynamic, in-app shopping recommendations
- Targeted advertising during live-streamed sports events
- Natural language processing of large amounts of text

Spark integrates with tons of data and business tools. You can connect it to Segment to understand customer journeys, Kubernetes to run apps at scale, and MLflow to manage machine-learning models.

HPCC (High-Performance Computing Cluster) Systems is a data-intensive system platform built for high-speed, massive data engineering. It was developed over a span of 10 years at LexisNexis Risk Solutions before being released to the public in 2011.

Also known as DAS (Data Analytics Supercomputer), HPCC achieves parallel batch processing for big data applications via two cluster processing environments. It uses ECL (Enterprise Control Language), a highly efficient and powerful programming language that was purpose-built for data analytics by Seisint, a database service provider.

Much of HPCC Systems’ performance efficiency comes from the use of ECL, which reduces the time and IT resources needed to crunch data. When Seisint first implemented ECL on a data supercomputing platform, it took six minutes to answer a problem that had taken 28 days to solve with a SQL-based process on a conventional computer. LexisNexis acquired Seisint in 2004, including its technologies like ECL.

Businesses use HPCC Systems to glean continuous insights from massive amounts of data. It powers use cases like:

- A financial analytics platform that ingests data from multiple sources (such as payment channels, banks, and websites) and reconciles them in seconds to create customer profiles
- A system of IoT hardhats that continuously transmits data to a safety control center
- An AI-based solution that enables personalized omnichannel customer communications at a massive scale

HPCC helped lower the costs of big data analytics by reducing the time and computing resources needed to solve data problems. Thanks to HPCC's speed, more companies are able to make decisions based on up-to-date data. That's something we value at Segment – enabling businesses to make decisions based on what customers did minutes ago, not last week.

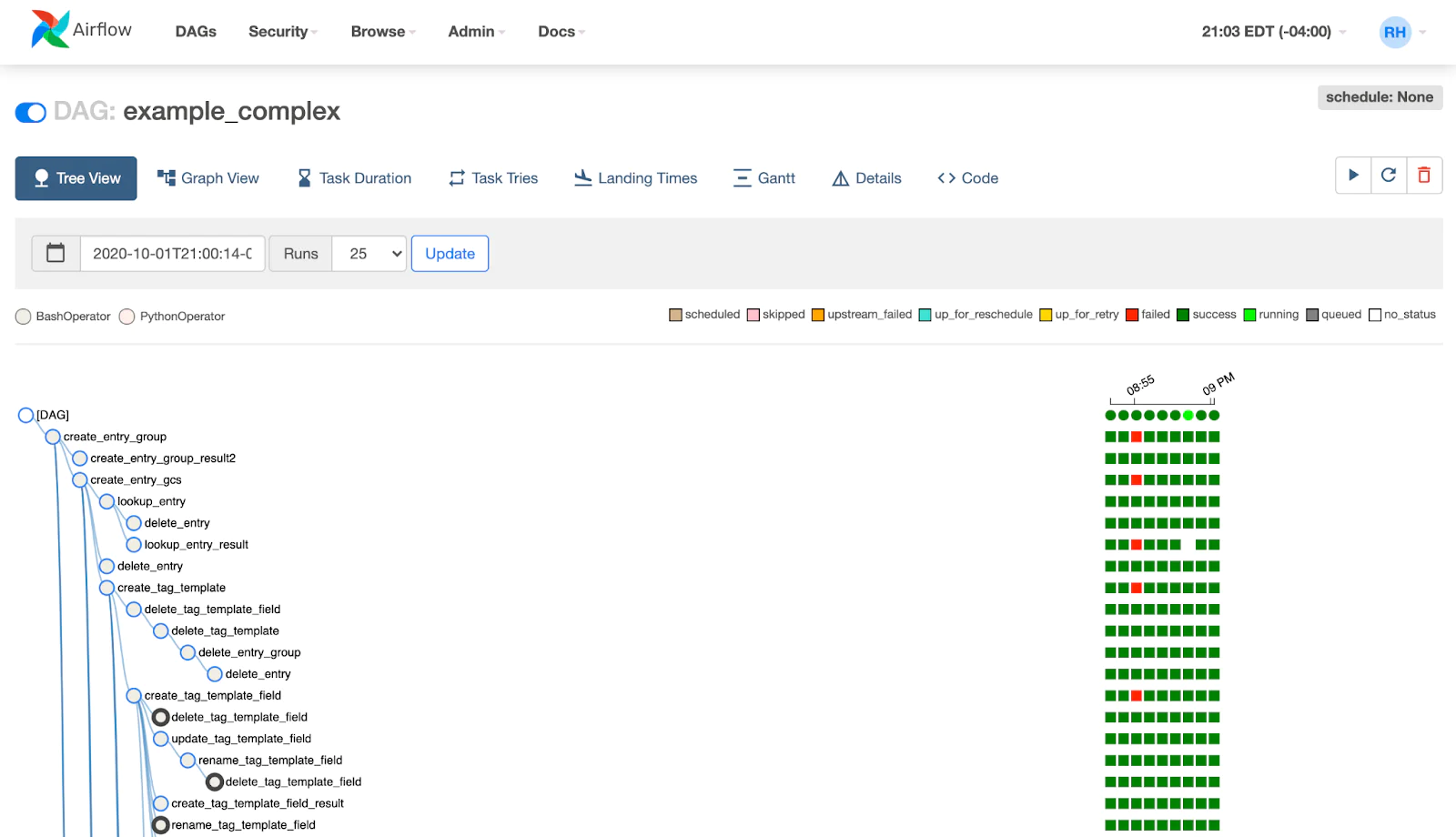

Airflow is a Python-based workflow engine that automates tasks in your data pipeline. It was developed at Airbnb in 2014 to author, orchestrate, schedule, and monitor batch-processing workflows. Airbnb open-sourced Airflow from the start, and the engine became an Apache project in 2016. Airflow has since become one of the more popular big data tools, with roughly 12 million downloads a month.

On Airflow, each workflow is organized as a directed acyclic graph (DAG), which consists of tasks. Each task is a single operation written as an individual piece of code and represents a node in the graph. You can manage, monitor, and troubleshoot DAGs through Airflow’s user-friendly built-in UI, which provides different views like lists, trees, graphs, and Gantt charts.
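The sketch below shows how a DAG determines a valid run order, using `graphlib.TopologicalSorter` from Python's standard library (Python 3.9+). It does not use Airflow itself, and the task names and dependency layout are assumptions chosen for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key is a task, each value is the set of
# tasks that must finish before it can start.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "enrich": {"extract"},
    "load": {"clean", "enrich"},
}

# A topological order runs every task after all of its upstream tasks.
order = list(TopologicalSorter(dependencies).static_order())
```

Because the graph is acyclic, such an order always exists. `clean` and `enrich` don't depend on each other, so a scheduler is free to run them in parallel.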

Airflow uses a modular architecture to achieve scalability. It's also extensible, providing plug-and-play operators for integration with cloud platforms like Azure and AWS, as well as third-party services like Tableau.

Airflow is popular among bigger companies, with 64% of Airflow users working at organizations with over 200 employees. More than half (54%) of Airflow users are data engineers.

Airflow only works for scheduled batch-processing workloads, though. For example:

- Auto-generated sales reports at the close of business for a retail chain
- Scheduled data backups
- Results analysis at the end of an A/B testing period

With its graphical approach, easy-to-use UI, and automated workflows, Airflow makes pipeline management less tedious and painful.

Drill is a query engine for large-scale datasets, including NoSQL databases. It was released in 2015 and added to Apache’s portfolio of top-level projects the following year.

The beauty of Drill is that it simplifies the process of querying data. Drill lets you query raw data where it is. You don’t need to transfer data to another platform, create formal schemas, or transform it – and this minimizes wait times, labeling errors, and incompatibility issues. A Drill query lets you join data from multiple data stores and analyze data from different sources in different formats.

To top it off, Drill was designed to handle heavy loads. It can scale to 10,000 or more servers and process trillions of records or petabytes of data in mere seconds.

Any data professional who uses SQL will likely find Drill user-friendly. Even when dealing with databases you haven’t used before, you can run complex queries with Drill and get the information you’re looking for.
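To illustrate the idea of joining raw data from different formats without first loading it into a shared schema, here's a small sketch in plain Python. It is not Drill and doesn't use SQL; the sample JSON and CSV payloads, field names, and the `total_spend_by_customer` function are all made up for the example.

```python
import csv
import io
import json

# Hypothetical raw data as it might sit in two different stores.
orders_json = (
    '[{"order_id": 1, "customer_id": "c1", "total": 40.0},'
    ' {"order_id": 2, "customer_id": "c2", "total": 15.5},'
    ' {"order_id": 3, "customer_id": "c1", "total": 9.5}]'
)
customers_csv = "customer_id,name\nc1,Ada\nc2,Grace\n"


def total_spend_by_customer(orders_raw, customers_raw):
    """Join JSON orders to CSV customers on customer_id and sum the totals."""
    orders = json.loads(orders_raw)
    names = {row["customer_id"]: row["name"]
             for row in csv.DictReader(io.StringIO(customers_raw))}
    totals = {}
    for order in orders:
        name = names[order["customer_id"]]
        totals[name] = totals.get(name, 0.0) + order["total"]
    return totals


spend = total_spend_by_customer(orders_json, customers_csv)
```

A query engine does the same kind of work declaratively: field names are discovered from the data as it is read, so no table definitions are needed up front.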

Drill is particularly useful for data teams that support business intelligence (BI) requirements across large organizations. Non-technical users with SQL knowledge can use Drill, so you can lessen the need for developers to code applications to extract data. Consider use cases like generating ad-hoc sales, marketing, and financial reports or analyzing operational data in a warehouse to identify bottlenecks, productivity trends, and anomalies.

There’s one thing you need to add to your data stack if you want to consistently make customer-facing and operational decisions based on data: a customer data platform (CDP).

A CDP like Twilio Segment gathers data from online and offline sources and consolidates it in a centralized database. Marketers, sales reps, customer support teams, and analysts can then leverage that data to identify customer segments and create unified, real-time customer profiles – the foundation for designing personalized marketing and engagement campaigns.

Below, we show how a CDP can help operationalize big data. (For a deeper look at Segment, check out our infrastructure page.)

IoT sensors, customer events (like pages viewed or products purchased), social media engagement, mobile app activity: there’s no shortage of sources when it comes to customer data. At Segment, we’ve made it a point to avoid blind spots in data collection. We’ve built libraries in twelve major languages, offer pre-built connectors, and created Source functions, which allow you to create custom sources and destinations with just twenty lines of JavaScript.

You can also use Destination Insert Functions to transform, enrich, or filter data before it lands in a downstream destination. This is a key feature for compliance, since it lets you perform data masking, encryption, and decryption, among other things.
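As an illustration of the kind of transformation described above, here's a sketch of masking sensitive properties before an event is forwarded. It is written in plain Python and is not Segment's Functions API; the `mask_event` name, the event shape, and the choice of SHA-256 hashing as the masking method are assumptions for the example.

```python
import hashlib


def mask_event(event, sensitive_fields=("email", "phone")):
    """Return a copy of the event with sensitive properties replaced by a SHA-256 digest."""
    masked = dict(event)
    properties = dict(event.get("properties", {}))
    for field in sensitive_fields:
        if field in properties:
            value = str(properties[field]).encode("utf-8")
            properties[field] = hashlib.sha256(value).hexdigest()
    masked["properties"] = properties
    return masked


# Hypothetical event payload
event = {"event": "Signed Up",
         "properties": {"email": "ada@example.com", "plan": "pro"}}
masked = mask_event(event)
```

The function returns a new event rather than mutating its input, so the original payload stays available to any destination that is allowed to see it.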

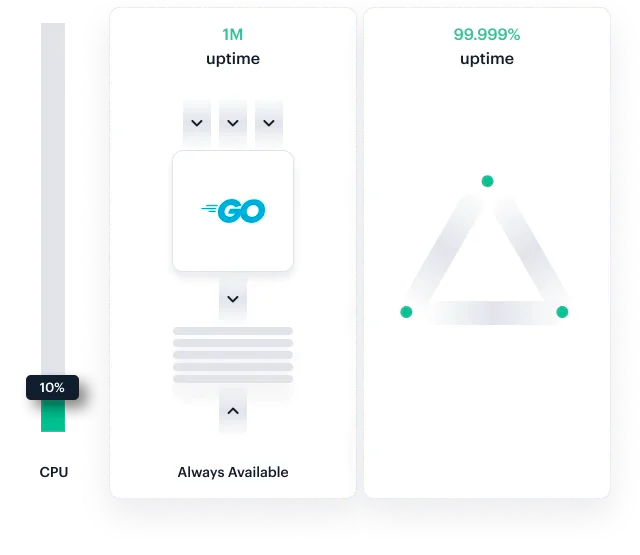

Overly complex data pipelines are difficult to manage and even harder to diagnose and fix when a part of the system breaks. Segment’s high-performance Go servers have a 30ms response time and 99.9999% availability, and can handle tens of thousands of requests concurrently.

With Event Delivery, you can make sure data is reaching its intended destination, and spot any issues that might have popped up with data delivery.

Learn more about how Segment built a reliable system for delivering billions of events each day.

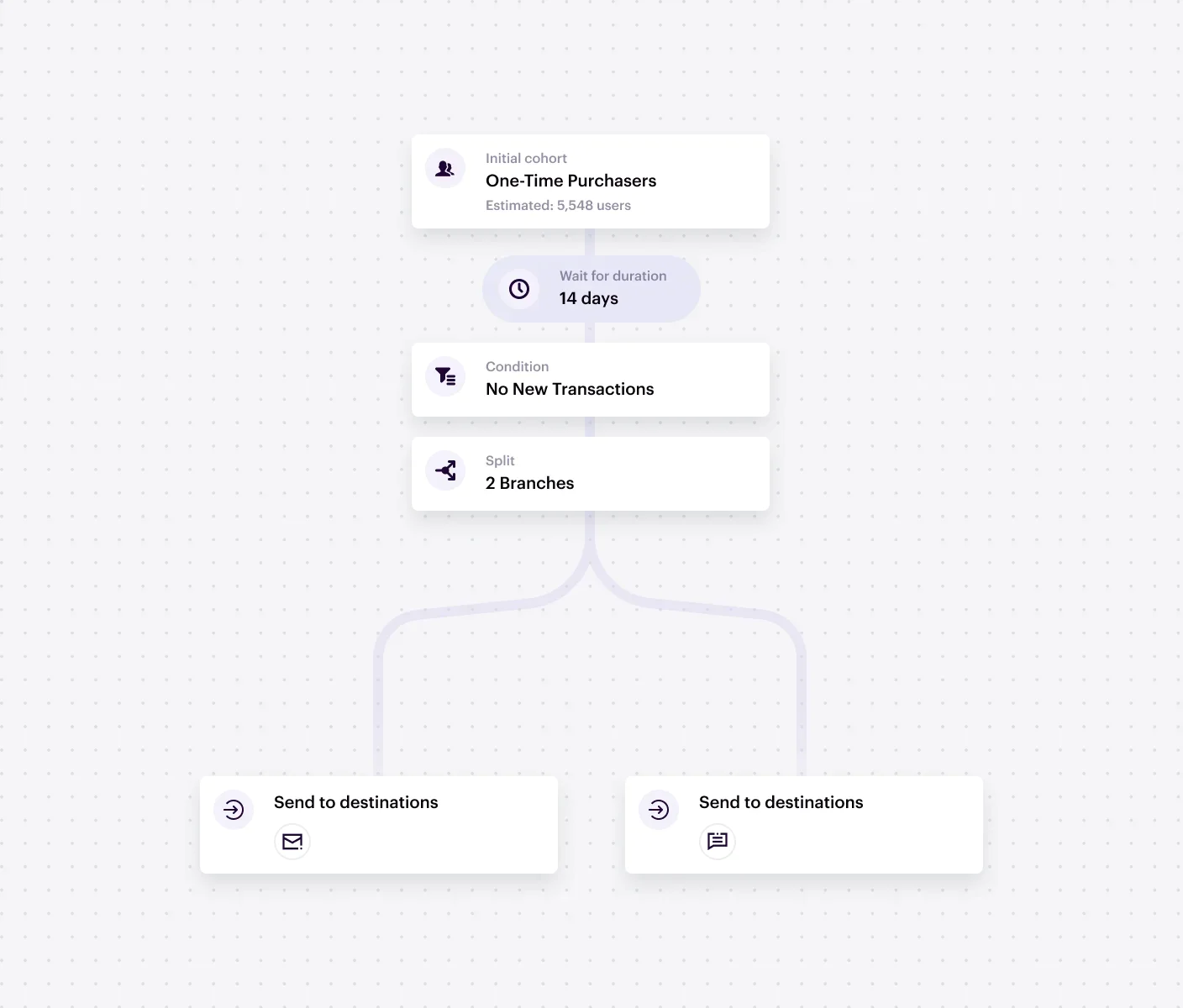

Today’s customers use multiple devices and channels to interact with a brand. Segment helps you collect and consolidate this data in one place, and identify the same user across channels to gain a multi-faceted view of each customer’s journey. Whether the channel is SMS, web, in-app, or email, Segment allows teams to activate their data in real time and create highly tailored experiences.

For example, Segment can help a business create an audience of users who are likely to make a repeat purchase, and use real-time behavioral data to nudge customers into action at exactly the right time.
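One way to picture identifying the same user across channels is to merge events whose identifiers overlap. The sketch below does this with a simple union-find structure in plain Python. It is an illustration of the general idea only, not a description of how Segment resolves identities; the function name, event shape, and sample identifiers are hypothetical.

```python
def resolve_identities(events):
    """Group events whose identifiers overlap and list the channels per profile."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps lookups short
            x = parent[x]
        return x

    def union(a, b):
        parent[find(a)] = find(b)

    # Identifiers seen together on one event belong to the same person.
    for event in events:
        ids = event["ids"]
        find(ids[0])
        for other in ids[1:]:
            union(ids[0], other)

    profiles = {}
    for event in events:
        root = find(event["ids"][0])
        profiles.setdefault(root, []).append(event["channel"])
    return sorted(sorted(channels) for channels in profiles.values())


# Hypothetical events with the identifiers each one carries
events = [
    {"channel": "web", "ids": ["anon-1"]},
    {"channel": "email", "ids": ["anon-1", "ada@example.com"]},
    {"channel": "sms", "ids": ["ada@example.com", "+15550100"]},
    {"channel": "app", "ids": ["anon-9"]},
]
profiles = resolve_identities(events)
```

The web, email, and SMS events end up in one profile because they are linked through shared identifiers, while the app event stays separate until something connects `anon-9` to a known user.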

Data has clearly become a sought-after resource for companies, but managing it effectively can be an enormous challenge. With changing privacy legislation, businesses need to think through how they’re handling important matters like data residency, protecting personally identifiable information, and ensuring sensitive information is kept under tight control.

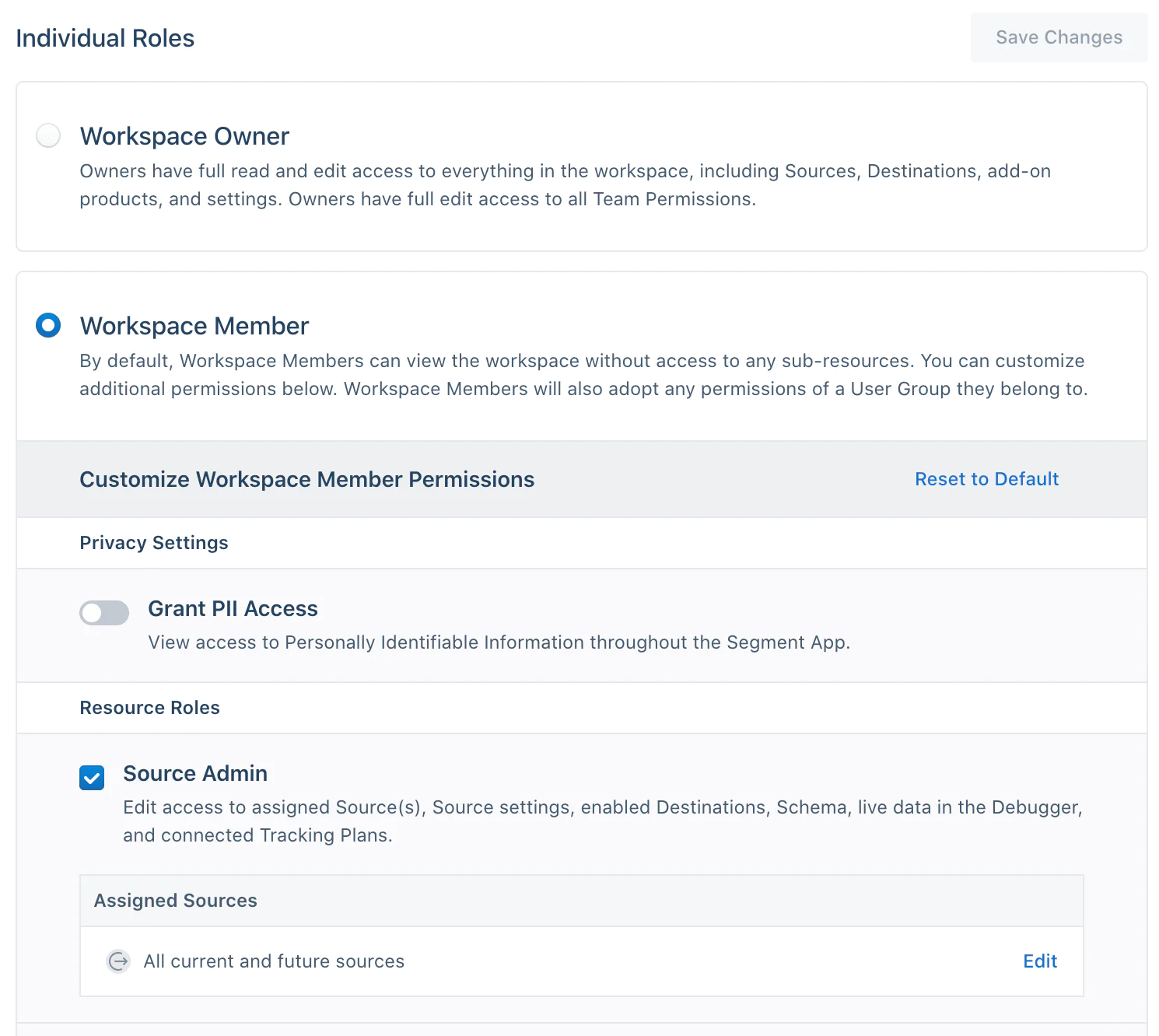

With Protocols, businesses are able to implement data governance at scale to ensure they’re always handling their data appropriately and protecting its accuracy. Protocols ensures teams adhere to a universal tracking plan to block bad data before it reaches downstream destinations. It also offers features like data masking and user access controls to help businesses remain compliant.
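The idea of blocking bad data against a tracking plan can be sketched as a simple validation step. This is plain Python under stated assumptions, not the Protocols API: the plan format (event name mapped to required property types), the function names, and the sample events are all hypothetical.

```python
# Hypothetical tracking plan: event name -> required properties and their types
TRACKING_PLAN = {
    "Order Completed": {"order_id": str, "total": float},
    "Product Viewed": {"product_id": str},
}


def validate(event, plan=TRACKING_PLAN):
    """Return a list of violations; an empty list means the event conforms."""
    spec = plan.get(event.get("event"))
    if spec is None:
        return ["unplanned event: %r" % event.get("event")]
    violations = []
    properties = event.get("properties", {})
    for name, expected_type in spec.items():
        if name not in properties:
            violations.append("missing property: " + name)
        elif not isinstance(properties[name], expected_type):
            violations.append("wrong type for property: " + name)
    return violations


def filter_valid(events, plan=TRACKING_PLAN):
    """Keep only events that conform, so bad data never reaches destinations."""
    return [event for event in events if not validate(event, plan)]


events = [
    {"event": "Order Completed", "properties": {"order_id": "o-1", "total": 49.5}},
    {"event": "Order Completed", "properties": {"order_id": "o-2", "total": "49.5"}},
    {"event": "Order Completd", "properties": {"order_id": "o-3", "total": 10.0}},
]
clean = filter_valid(events)
```

Only the first event passes: the second sends `total` as a string, and the third uses a misspelled event name that isn't in the plan.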


Companies use big data tools like Flink for stream processing, Spark for micro-batch processing, and Airflow for pipeline orchestration and monitoring. Data lakes like Azure and Kylo store massive amounts of data, while connectors and reverse ETL tools enable the downstream activation of data. Businesses have also been increasingly turning to CDPs to effectively manage their data, creating unified customer profiles and implementing automated data governance at scale.

Big data processing takes place in a continuous stream, in micro-batches, or in scheduled batches. Data tools apply transformations and computations to the data and send the results to data stores, analytics platforms, developer tools, and business apps.

Big data tools help businesses glean insights from massive amounts of structured and unstructured data. This makes data measurable and actionable. Lightning-fast data processing frameworks also make it possible to gain real-time visibility into data and make decisions based on up-to-date information.

Big data refers to massive data sets of structured and unstructured data. Big data analytics tools let you make sense of that data and turn it into concrete, measurable information that can lead to useful insights. Analytics software applies statistical techniques to big data in order to describe historical performance, identify trends, measure correlations between variables, and predict the likelihood of a particular event taking place (e.g., a machine failure or a purchase).
