Introduction to Data Lakes: Your Solution to Big Data (2023)

Data lakes are central repositories used to store any and all raw data. They are key components of modern data management strategies.

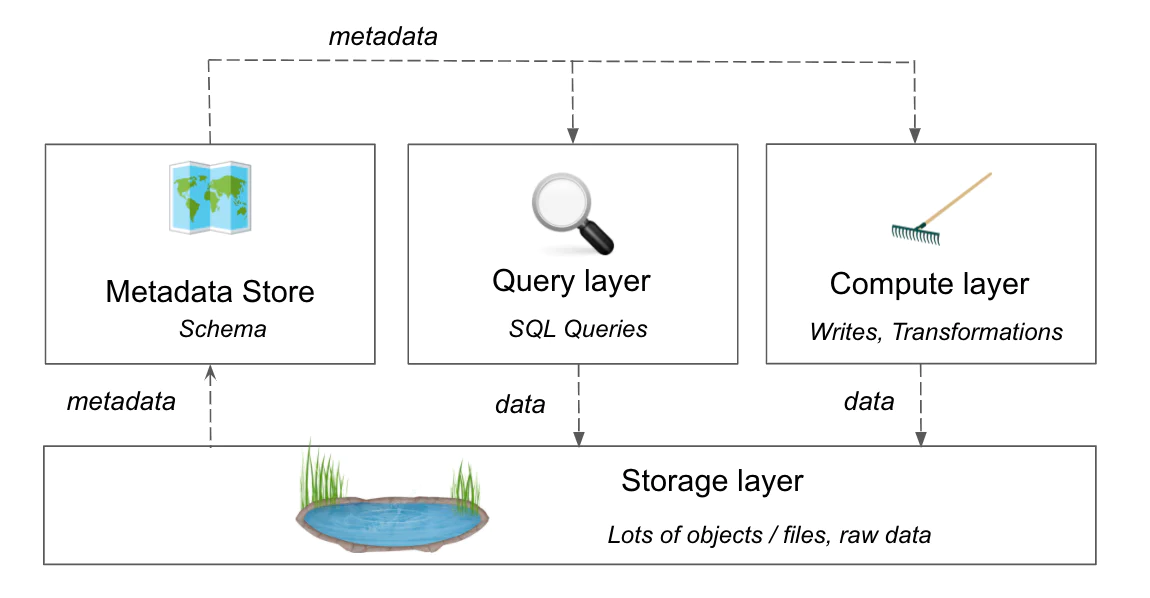

A data lake is a central repository for storing any and all raw data. Data lakes allow for the easy, flexible storage of different types of data since the data doesn’t have to be processed on the way in. (However, it’s essential to ensure you have data governance practices in place. Otherwise, you can end up with a data swamp, making it hard to access data and get any real value out of it.)

Since a data lake has no predefined schema, it retains all of the original attributes of the data collected, making it best suited for storing data that doesn’t have an intended use case yet.

As data volume continues to grow at an exponential rate, companies need scalable storage where they can keep their data until it’s ready for use. Data lakes have emerged as a cost-effective solution for big data, with benefits that range from lower storage costs to advanced analytics.

Benefits include:

Centralized location. Data scientists and analysts are able to access and analyze data more easily when it’s consolidated into a single repository. This also prevents data silos from creating blind spots across teams. Because data lakes retain historical data for analysis, every department can gain a complete and deep understanding of customers and business performance.

Data exploration. By combining all of your data into a data lake, you can power a wide range of functions, including business intelligence, big data analytics, data archiving, machine learning, and data science. With predictive analytics, you can use the data inside the lake to forecast future trends, generate personalized product recommendations, anticipate staffing needs, and more.

Real-time data processing. Data lakes can ingest streaming data as it arrives, so organizations can process it in near real time and get immediate insights.

Flexibility. Data lakes are schema-free, giving you the flexibility to store the data in any format. Because they keep all data in its native form, you can send the data through ETL (extract, transform, load) pipelines later, when you know what queries you want to run, without prematurely stripping away vital information.

Simplified data collection. Data lakes can ingest data of any format without it needing to be structured as it flows in. The flexibility allows you to easily collect as much data as you want and process it later for a specific use case. This puts more data at your disposal to run advanced analytics.
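The "store now, structure later" idea described above can be sketched in a few lines. This is a minimal illustration, not how any particular data lake product works: the sample events, the field names, and the `read_purchases` helper are all hypothetical. Raw records are kept exactly as they arrived, and a schema is applied only when a specific question (here, "what were the purchases?") is asked.

```python
import json

# Hypothetical raw events, stored exactly as they arrived (no schema enforced on write).
RAW_EVENTS = [
    '{"user_id": "u1", "event": "page_view", "url": "/pricing"}',
    '{"user_id": "u2", "event": "purchase", "amount": "19.99", "currency": "USD"}',
    '{"user_id": "u1", "event": "purchase", "amount": "5.00"}',
]


def read_purchases(raw_lines):
    """Apply a schema at read time: keep only purchases and cast their fields."""
    purchases = []
    for line in raw_lines:
        record = json.loads(line)
        if record.get("event") != "purchase":
            continue
        purchases.append({
            "user_id": record["user_id"],
            "amount": float(record["amount"]),
            # Records that lack a field are still readable; the gap is made explicit.
            "currency": record.get("currency"),
        })
    return purchases


purchases = read_purchases(RAW_EVENTS)
```

Because the page view and the purchase records sit side by side in their original form, a different question later (for example, which URLs were viewed) only needs a different read function, not a new ingestion pipeline.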

The benefits of data lakes, especially regarding business performance, are undeniable. But there are a handful of challenges that you might need to navigate as you build a data lake.

Good data governance encompasses security and compliance, but it also addresses all of the data-related roles and responsibilities in your organization across the data lifecycle. If you invest in a data lake without a data governance framework in place, it opens up issues with data quality, security, and compliance.

In scenarios where several departments work off of the same data lake, the lack of governance could lead to conflicting results and create issues around data trustworthiness. But a data governance framework will ensure all teams follow the same rules, standards, and definitions for data analysis that produce consistent results.

Managing data quality in your lake is challenging because it’s easy for poor quality data to slip in undetected until it snowballs into a larger issue. Without a way to validate the data in your lake, it will turn into a swamp, introduce dirty data into your pipeline, and create data quality issues that could impact important business activities.

One method of data validation is to create data zones that correspond with the degree of quality checks the data has undergone. For example, freshly ingested data arrives at the transient zone. Once it passes quality control and is stripped of personal information and other sensitive data, you can label it as trusted and move it further down the pipeline.
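The zone approach above could look roughly like the following sketch. The required fields, the list of sensitive fields, and the `promote` function are assumptions chosen for illustration; a real pipeline would apply its own quality rules and its own definition of sensitive data.

```python
# Hypothetical quality rule: every record must carry these fields.
REQUIRED_FIELDS = {"user_id", "event", "timestamp"}
# Hypothetical list of sensitive fields to strip before data is trusted.
SENSITIVE_FIELDS = {"email", "phone", "ip_address"}


def promote(transient_records):
    """Move records from the transient zone to the trusted zone.

    Records that fail the quality check are set aside with a reason instead
    of being silently dropped; records that pass have sensitive fields removed.
    """
    trusted, rejected = [], []
    for record in transient_records:
        missing = REQUIRED_FIELDS - set(record)
        if missing:
            rejected.append({"record": record, "reason": f"missing {sorted(missing)}"})
            continue
        trusted.append({k: v for k, v in record.items() if k not in SENSITIVE_FIELDS})
    return trusted, rejected


transient = [
    {"user_id": "u1", "event": "signup", "timestamp": "2023-01-01T00:00:00Z",
     "email": "a@example.com"},
    {"user_id": "u2", "event": "login"},  # no timestamp, so it fails the check
]
trusted, rejected = promote(transient)
```

Keeping the rejected records, along with the reason they failed, makes it easier to trace quality problems back to their source.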

Data management grows more complex with the volume of collected data. Without strong security measures, your company data (including your customers’ personally identifiable information) could end up in the wrong hands and cause lasting damage to your reputation.

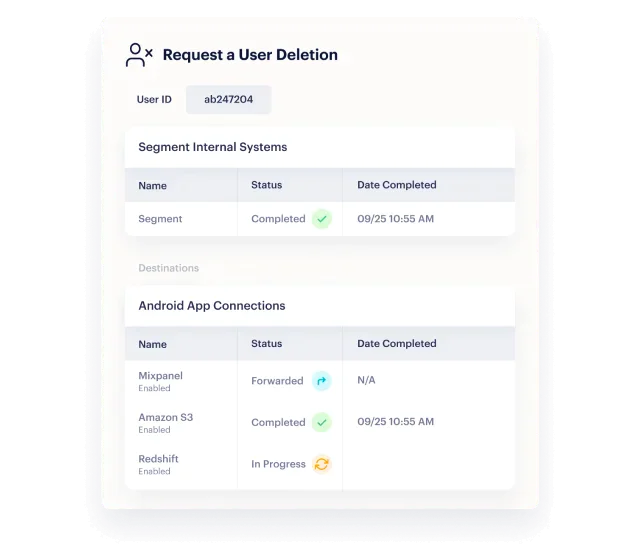

Considering many organizations invest in data lakes to collect more customer data for their marketing campaigns, compliance with data privacy laws like the GDPR or CCPA is also critical. For example, the GDPR provides data subjects (your customers) the right to be forgotten. To comply with a request for data erasure, you must be able to find the customer’s data in your lake and show proof that you’ve removed their information from all of your databases. A data lake that doesn’t support these actions will make compliance difficult.
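As a rough sketch of what an erasure request involves, the example below assumes a simplified lake made of newline-delimited JSON files on a local disk, with each record carrying a `user_id` field. Both the layout and the `erase_user` helper are hypothetical. Production lakes typically hold columnar files in object storage, and backups and derived copies need the same treatment.

```python
import json
from pathlib import Path


def erase_user(lake_dir, user_id):
    """Rewrite every .jsonl file under lake_dir without the given user's records.

    Returns {file path: number of records removed}, which can serve as a
    simple audit trail for the erasure request.
    """
    removed = {}
    for path in sorted(Path(lake_dir).rglob("*.jsonl")):
        kept, dropped = [], 0
        for line in path.read_text(encoding="utf-8").splitlines():
            if not line.strip():
                continue
            if json.loads(line).get("user_id") == user_id:
                dropped += 1
            else:
                kept.append(line)
        if dropped:
            # Only touch files that actually contained the user's data.
            path.write_text("".join(l + "\n" for l in kept), encoding="utf-8")
            removed[str(path)] = dropped
    return removed
```

Running the function a second time and getting an empty report is one simple way to confirm that no matching records remain in the scanned files.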

Your exact data lake security strategy will depend on whether you’re using a cloud-based, on-premise, or hybrid architecture. You’ll need strong encryption protocols that protect data in transit and at rest, as well as controls over who inside the company can access the data lake and when.

Data integration can become a challenge without proper data governance in place: missing values, inconsistencies, and duplicates can all skew data accuracy, which in turn diminishes the trust you have in the data stored in your data lake. Depending on the volume and velocity of incoming data, it can also be difficult to process, integrate, and store that data at scale.

Addressing these challenges often involves a combination of effective metadata management, scalable integration architectures, and the use of appropriate tools for data quality assurance and transformation. Regular monitoring and maintenance are also essential, especially as things like data sources and data types evolve.

When data users run queries in a large data lake, they may run into performance issues that slow down analysis. For example, numerous small files can create bottlenecks, because every file adds its own overhead to open and read, and there are limits on how many units of information can be processed at one time. Deleted files also create a bottleneck if they remain stored for a period of time before being permanently removed.

If you build your data lake in the cloud, be aware that the costs of cloud infrastructure can be a concern for business leaders. Seventy-three percent of finance and business professionals report that spending on cloud infrastructure was a C-suite or board-level issue, while 49% say their cloud spend is higher than it should be.

There are multiple reasons for the growing costs of the cloud, from supply chain disruptions and energy prices to the lack of competition in the cloud technology market.

Although data lakes are intended to store unprocessed data for later analysis, improper data ingestion leads to a data swamp where it is difficult to access, manage, and analyze data. Best practices for data ingestion start with a plan – while you don’t need to know the exact use case for the data, you do need to have a rough idea of the data’s purpose. It’s also important to compress the data and limit the number of small files your lake is ingesting due to the performance issues discussed above.
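One way to act on the advice about compression and small files is a periodic compaction job. The sketch below is an assumption-laden illustration: it treats the lake as a local folder of newline-delimited JSON files and uses gzip from the Python standard library, whereas production lakes more commonly compact into columnar formats such as Parquet. The `compact` function and the `part-00000` naming are hypothetical.

```python
import gzip
from pathlib import Path


def compact(src_dir, dest_dir, max_lines=100_000):
    """Merge many small .jsonl files into fewer gzip-compressed files.

    Each output file holds at most max_lines records. Returns the list of
    files written.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    outputs, buffer = [], []

    def flush():
        # Write the buffered records to the next numbered output file.
        if not buffer:
            return
        out = dest / f"part-{len(outputs):05d}.jsonl.gz"
        with gzip.open(out, "wt", encoding="utf-8") as fh:
            fh.writelines(line + "\n" for line in buffer)
        outputs.append(out)
        buffer.clear()

    for path in sorted(Path(src_dir).glob("*.jsonl")):
        for line in path.read_text(encoding="utf-8").splitlines():
            if line.strip():
                buffer.append(line)
                if len(buffer) >= max_lines:
                    flush()
    flush()  # write whatever is left over
    return outputs
```

The right value for `max_lines` depends on record size and on the query engine in use, so treat the default here as a placeholder rather than a recommendation.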

Companies host data lakes on different types of solutions – cloud, on-premise, hybrid, and multi-cloud.

Cloud: Most organizations choose to store their data lakes in the cloud, a solution where a third party (such as Google Cloud) provides all the necessary infrastructure for a monthly fee.

Multi-cloud: Multi-cloud data lakes are a combination of several cloud solutions, such as Amazon Web Services and Google Cloud.

On-premise: The company sets up an on-premise data lake – hardware and software – using in-house resources. It requires a higher upfront investment compared to the cloud.

Hybrid: The company uses cloud and on-premise infrastructure for their data lake. A hybrid setup is usually temporary while the company moves the data from on-premise to the cloud.

When you are evaluating data lake solutions, there are a few important criteria to consider, including cost, scalability, and ease of use. We break down these factors in more detail below.

When considering a data lake, you have to think about whether its storage, processing power, and computing resources can keep up with growing volumes of data, so that the infrastructure can scale with your company.

Two things to consider are storage capacity and how many users will access or query the data at once (i.e., whether the data lake can handle multiple concurrent requests). A distributed architecture that processes data across multiple nodes and servers is often beneficial, because it lets the system grow horizontally.

Strong cybersecurity standards. If you’re looking for a third-party data lake provider, run a detailed comparison of the security features and certifications of your top vendors. It’s important that security and privacy controls don’t just address outside threats but also control internal access.

The cost-effectiveness of a data lake will depend on a few factors, like data volume and the complexity involved in data processing. While data lakes can store large amounts of data at a relatively low cost, things like maintenance, investing in distributed architecture and backup solutions, or training and upskilling employees can add to your overall price tag.

Another thing to look for is how well the data lake can integrate with your existing architecture. When your data lake has the ability to seamlessly connect with your other data systems, you will avoid silos and ensure you’re collecting all the data in one location.

Traditional data lakes require engineers to build and maintain the data lake and its pipelines and can take anywhere from three months to a year to deploy. But the demand for relevant and personalized customer experiences, which require well-governed data, won’t wait. Companies need a data lake solution that can be implemented right now to attain deeper insights into their customers with their historical data.

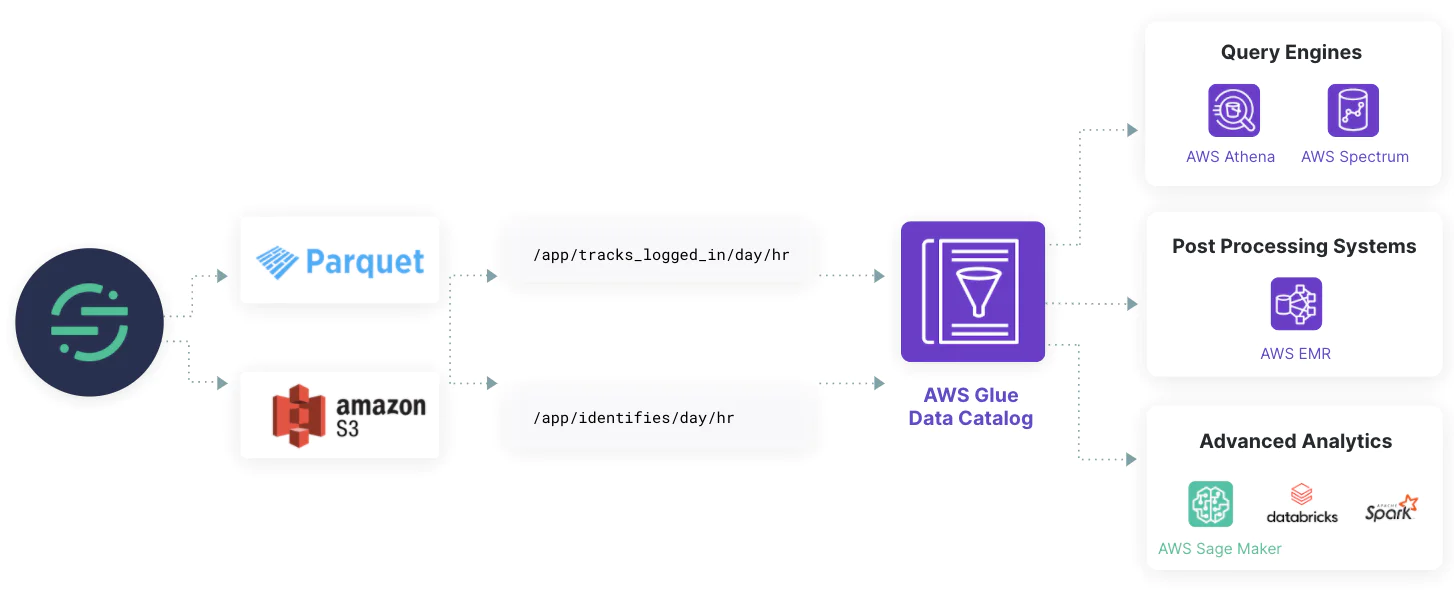

Segment Data Lakes is a turnkey customer data lake solution hosted on either Amazon Web Services (AWS) or Microsoft Azure, providing companies with a data-engineering foundation for data science and advanced analytics use cases. Segment automatically fills your data lake with all of your customer data without additional engineering effort on your part. It’s optimized for speed, performance, and efficiency. Unlike traditional data lakes, with Segment Data Lakes, companies can unlock scaled analytics, machine learning, and AI insights with a well-architected data lake that can be deployed in just minutes.

Additionally, Segment Data Lakes makes data discovery easy. Data scientists and analysts can query the data with engines like Amazon Athena, or load it directly into a Jupyter notebook with no additional setup. Segment Data Lakes also converts raw data from JSON into compressed Apache Parquet for quicker and cheaper queries.

When Rokfin implemented Segment Data Lakes, the company was able to decrease data storage costs by 60%. Furthermore, Rokfin unlocked richer customer insights by leveraging the complete dataset without extra engineering effort. These richer insights provided content creators at Rokfin with valuable information about the factors that led to higher acquisition and retention rates and helped them increase dashboard engagement by 20%.

Segment Data Lakes provides foundational data architecture to enable companies to create cutting-edge customer experiences using raw customer data.

While data lakes are essential for storing archival data, you also need to be able to put that data to use. By pairing your data lake with a customer data platform (CDP), like Segment’s, you can combine your historical data with real-time data to power and optimize your marketing and product teams with actionable customer insights based on a complete customer profile.

Segment’s CDP improves data accessibility across the business. It automatically cleans and standardizes your data before sending it on to third-party systems, such as your analytics, marketing, and customer service tools, customer engagement platforms, and more. IT and engineering teams can use the data for broader insights that inform a long-term strategy. At the same time, nontechnical users, such as marketing and product teams, will be able to draw actionable insights and supercharge personalized engagement strategies with historical and real-time data.

With a customer data platform, you can make even more informed decisions with a comprehensive, single customer view. Through identity resolution, Segment’s CDP gathers data points from your data lake and other data sources and merges each customer's history into a single profile. With identity resolution, you can glean actionable insights, power your customer interactions, and create relevant, personalized experiences with data.
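To make the idea of identity resolution concrete, here is a deliberately simplified sketch. It is not Segment’s algorithm: it assumes that two events belong to the same customer whenever they share any identifier value, and the identifier field names and the `resolve_identities` function are hypothetical. It links identifiers with a union-find structure and then groups events by the resulting cluster.

```python
from collections import defaultdict

# Hypothetical identifier fields that can tie events to a customer.
ID_FIELDS = ("anonymous_id", "email", "user_id")


def resolve_identities(events):
    """Group events into profiles when they share any identifier value."""
    parent = {}

    def find(x):
        # Follow parent links to the cluster root, shortening the path as we go.
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def identifiers(event):
        return [(field, event[field]) for field in ID_FIELDS if event.get(field)]

    # Pass 1: link every identifier that appears together on one event.
    for event in events:
        ids = identifiers(event)
        for other in ids[1:]:
            parent[find(ids[0])] = find(other)

    # Pass 2: collect events under the root of their identifier cluster.
    profiles = defaultdict(list)
    for event in events:
        ids = identifiers(event)
        if ids:
            profiles[find(ids[0])].append(event)
    return list(profiles.values())


events = [
    {"anonymous_id": "a1", "event": "page_view"},
    {"anonymous_id": "a1", "email": "x@example.com", "event": "signup"},
    {"email": "x@example.com", "user_id": "u1", "event": "purchase"},
    {"anonymous_id": "a2", "event": "page_view"},
]
profiles = resolve_identities(events)
```

In this example the first three events end up in one profile, because the anonymous visitor later signs up with an email that is then tied to a user ID, while the fourth event stays on its own. Real identity resolution also has to deal with shared devices, conflicting identifiers, and merge limits, which this sketch ignores.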

Together, Segment Data Lakes and Segment’s CDP combine all of the historical data you have on a customer with newly collected data, for accurate insights and meaningful customer interactions.

Data lakes and data warehouses differ in their design, purpose, and the types of data they store. A data lake stores raw data, both structured and unstructured, in its native format.

A data warehouse primarily stores structured and processed data. Data in a data warehouse is typically organized into tables and follows a schema that is optimized for query performance.

A data lake can be hosted in the cloud, on-premise, on multiple cloud solutions (i.e., multi-cloud), or in a hybrid environment (i.e., a combination of cloud and on-premise infrastructure).

Segment is a customer data platform (CDP) that offers a turnkey data lake solution. Segment Data Lakes sends Segment data to AWS S3 or Azure Data Lake Storage Gen2 (ADLS), in an optimized format to reduce processing times.