Data Processing Guide

Explore the essentials of data processing and its impact on businesses today. Learn about challenges, benefits, and the role of automation in managing vast data streams.

Data processing refers to the collection and interpretation of raw data to gain deeper understanding and insight. It isn’t a standalone task but a multi-step process that spans data collection, validation, transformation, aggregation, and storage.
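
To make those stages concrete, here is a minimal Python sketch that runs collection, validation, transformation, aggregation, and storage in order. The event shape (`user_id`, `event`, `amount`) and the dictionary standing in for a warehouse are hypothetical placeholders chosen for illustration, not any particular product's API.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical raw events; in practice these would arrive from apps, servers, or files.
RAW_EVENTS = [
    {"user_id": "u1", "event": "Order Completed", "amount": "19.99"},
    {"user_id": "u2", "event": "Order Completed", "amount": "5.00"},
    {"user_id": None, "event": "Order Completed", "amount": "7.50"},  # invalid: no user
    {"user_id": "u1", "event": "Order Completed", "amount": "10.01"},
]

def collect(source):
    """Collection: read raw records from a source (here, an in-memory list)."""
    return list(source)

def validate(records):
    """Validation: drop records that are missing required fields."""
    return [r for r in records if r.get("user_id") and r.get("amount") is not None]

def transform(records):
    """Transformation: convert string amounts to integer cents (Decimal avoids float error)."""
    return [{**r, "amount_cents": int(Decimal(r["amount"]) * 100)} for r in records]

def aggregate(records):
    """Aggregation: total revenue in cents per user."""
    totals = defaultdict(int)
    for r in records:
        totals[r["user_id"]] += r["amount_cents"]
    return dict(totals)

def store(summary, destination):
    """Storage: persist the result (a dict standing in for a warehouse table)."""
    destination.update(summary)
    return destination

warehouse = {}
store(aggregate(transform(validate(collect(RAW_EVENTS)))), warehouse)
print(warehouse)  # {'u1': 3000, 'u2': 500}
```

Keeping each stage as a separate function makes it easy to swap one out (for example, a different storage target) without touching the others.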

People rely on data processing to make sense of otherwise complex data sets that would be cumbersome and time-consuming to sift through and manually analyze. With data processing, teams and organizations are able to rapidly sort through data and translate it into charts, graphs, and dashboards to identify trends, patterns, and important takeaways.

Data opens doors to incredible insights, cost-saving opportunities, and pathways for growth. But even the best data processing efforts fall short when the following obstacles aren't addressed head-on.

The term “big data” refers to the ever-growing and complex bodies of information that the average organization has to deal with. The sheer volume of data creates bottlenecks as leaders try to decide what to process and how best to use it: roughly 2.5 quintillion bytes of data are generated each day.

As data volume grows, organizations need more storage space, memory, and processing power. This is where a scalable data infrastructure comes into play: one that can handle a steady increase in events or even a sudden influx (e.g., cloud-based data warehouses that scale up or down depending on volume).

Simply collecting data isn’t enough to derive value from it. That data is likely coming into your organization from a variety of sources and in a variety of formats. Data processing helps classify and categorize all this incoming data so that end users can make sense of it with complete context.

For instance, data coming in from a social media management tool won’t automatically be compatible with data from sales records – it would need to be transformed and consistently formatted so that an analytics tool or reporting dashboard can recognize it.

Data mapping can play a key role here. It provides instructions for moving data from one database to another (e.g., which transformations must take place to ensure proper formatting, and which specific fields the data will populate in its target destination).
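
One way to picture a data mapping is as a declarative spec: for each target field, the source field it comes from and the transformation to apply. The sketch below is a hypothetical example that maps a social media tool's export and a sales system's records into one shared schema; every field name and date format in it is an assumption made for illustration.

```python
from datetime import datetime

def us_date(s):
    """Convert an MM/DD/YYYY string to an ISO 8601 date string."""
    return datetime.strptime(s, "%m/%d/%Y").date().isoformat()

def iso_date(s):
    """Normalize a YYYY-MM-DD string (validates the format as a side effect)."""
    return datetime.strptime(s, "%Y-%m-%d").date().isoformat()

# Hypothetical mapping specs: target_field -> (source_field or None, transform)
SOCIAL_MAPPING = {
    "email": ("contact_email", str.lower),
    "occurred_at": ("posted", us_date),
    "channel": ("network", str.lower),
}
SALES_MAPPING = {
    "email": ("customer_email", str.lower),
    "occurred_at": ("close_date", iso_date),
    "channel": (None, lambda _: "sales"),  # constant value, no source field
}

def apply_mapping(record, mapping):
    """Build a record in the target schema from a source record and a mapping spec."""
    out = {}
    for target, (source, fn) in mapping.items():
        value = record.get(source) if source else None
        out[target] = fn(value)
    return out

social = {"contact_email": "Ana@Example.com", "posted": "03/14/2024", "network": "LinkedIn"}
sale = {"customer_email": "ana@example.com", "close_date": "2024-03-20"}
print(apply_mapping(social, SOCIAL_MAPPING))
print(apply_mapping(sale, SALES_MAPPING))
```

Because the mapping lives in data rather than code, adding a new source means writing a new spec rather than a new pipeline.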

As the common saying goes: garbage in, garbage out. Data can drive insights and hone strategies, but only if it’s accurate.

Ensuring quality data at scale requires proper planning and the right technology. We recommend aligning your team around a universal tracking plan and validating data before it makes its way to production as two key steps in ensuring data accuracy.

With Segment Protocols, you can automatically enforce your tracking plan so that bad data is blocked at the Source rather than discovered days, if not weeks, later, after it has already skewed reporting and performance.
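
The idea of enforcing a tracking plan can be sketched in a few lines. This is not how Segment Protocols is implemented or configured; it is a hypothetical illustration in which the tracking plan is a dictionary of event names mapped to required properties and their expected Python types, and events that violate it are blocked before they reach downstream tools.

```python
# Hypothetical tracking plan: event name -> required properties and expected types.
TRACKING_PLAN = {
    "Order Completed": {"order_id": str, "revenue": float},
    "Signed Up": {"plan": str},
}

def violations(event):
    """Return the reasons an event breaks the tracking plan (empty list if valid)."""
    name = event.get("event")
    spec = TRACKING_PLAN.get(name)
    if spec is None:
        return [f"unplanned event: {name!r}"]
    props = event.get("properties", {})
    problems = []
    for prop, expected in spec.items():
        if prop not in props:
            problems.append(f"missing property: {prop}")
        elif not isinstance(props[prop], expected):
            problems.append(f"{prop} should be {expected.__name__}")
    return problems

def gate(events):
    """Split events into those forwarded downstream and those blocked at the source."""
    allowed, blocked = [], []
    for e in events:
        (blocked if violations(e) else allowed).append(e)
    return allowed, blocked

events = [
    {"event": "Order Completed", "properties": {"order_id": "A1", "revenue": 42.0}},
    {"event": "Order Completed", "properties": {"order_id": "A2", "revenue": "42"}},
    {"event": "order_completed", "properties": {}},
]
allowed, blocked = gate(events)
```

Returning a list of reasons, rather than a bare true/false, makes it easier to report why an event was blocked.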

There are numerous laws and industry regulations regarding the handling and processing of data, with the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act of 2018 (CCPA) being two of the most notable.

Every person has rights regarding how their personal data is processed, stored, and used. This means businesses must practice good stewardship from both a legal and an ethical standpoint.

For example, a business outside the EU may still be subject to the GDPR if it offers goods or services to people in the EU. In July 2023, the European Commission adopted the Adequacy Decision for the EU-US Data Privacy Framework (DPF), which concludes that personal data transferred from the EU to DPF-certified companies in the US is adequately protected (in comparison to EU protections).

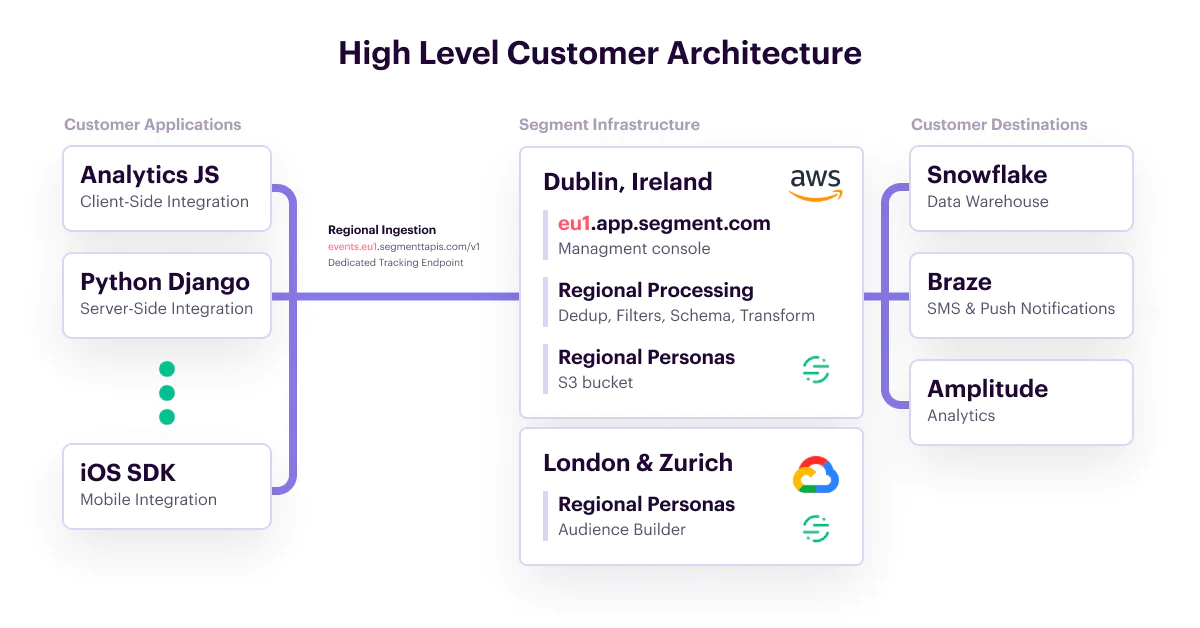

Not only is Twilio Segment DPF certified, but it also offers regional infrastructure in the EU to ensure businesses can remain fully compliant with data residency laws.

Automated data processing uses software, apps, or other technologies to process data at scale, drawing on machine learning and AI algorithms, statistical modeling, and more.

By removing the need to process data manually, businesses can reap the benefits of insights and analysis faster. Let’s go over a few more benefits.

It’s possible to use traditional data entry methods to account for all your information, but that doesn’t mean it’s the most efficient way. In fact, manual data processing pales in comparison to automated software and tools that allow you to perform these actions swiftly and at scale.

Retool, a B2B platform that helps businesses build internal apps, is a great example. Retool was growing rapidly and needed a scalable data infrastructure to help it wrangle its data. By using Segment, it saved thousands of engineering hours that it would otherwise have spent building in-house infrastructure capable of handling the collection, unification, democratization, and activation of its data.

Whether it's a simple miskey that affects one data record or an incorrect file name that miscategorizes an entire data type, human error poses a risk to data quality. To protect the integrity of your data, businesses need to quickly identify and correct bad data before it wreaks havoc on analysis, reporting, and activation.

This is why we recommend aligning every team around a universal tracking plan that standardizes what data is tracked, how it’s named, and where it’s stored.
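
Naming conventions are one part of a tracking plan that is easy to check automatically. The sketch below assumes two conventions purely for illustration: event names in Title Case "Object Action" form (e.g. "Order Completed") and property names in snake_case (e.g. "order_id"). Your team's conventions may differ; the regular expressions are the part to adapt.

```python
import re

# Assumed conventions for illustration only.
EVENT_NAME = re.compile(r"^[A-Z][a-z0-9]*( [A-Z][a-z0-9]*)+$")   # "Order Completed"
PROPERTY_NAME = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")     # "order_id"

def lint_event(name, properties):
    """Return naming-convention violations for one event definition."""
    issues = []
    if not EVENT_NAME.match(name):
        issues.append(f"event name {name!r} is not Title Case 'Object Action'")
    for prop in properties:
        if not PROPERTY_NAME.match(prop):
            issues.append(f"property {prop!r} is not snake_case")
    return issues

print(lint_event("Order Completed", ["order_id", "revenue"]))  # []
print(lint_event("orderCompleted", ["OrderID"]))
```

Running a check like this in code review or CI catches a miskeyed name before it splits one metric into two.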

The best data processing tools and software are built with privacy and security in mind.

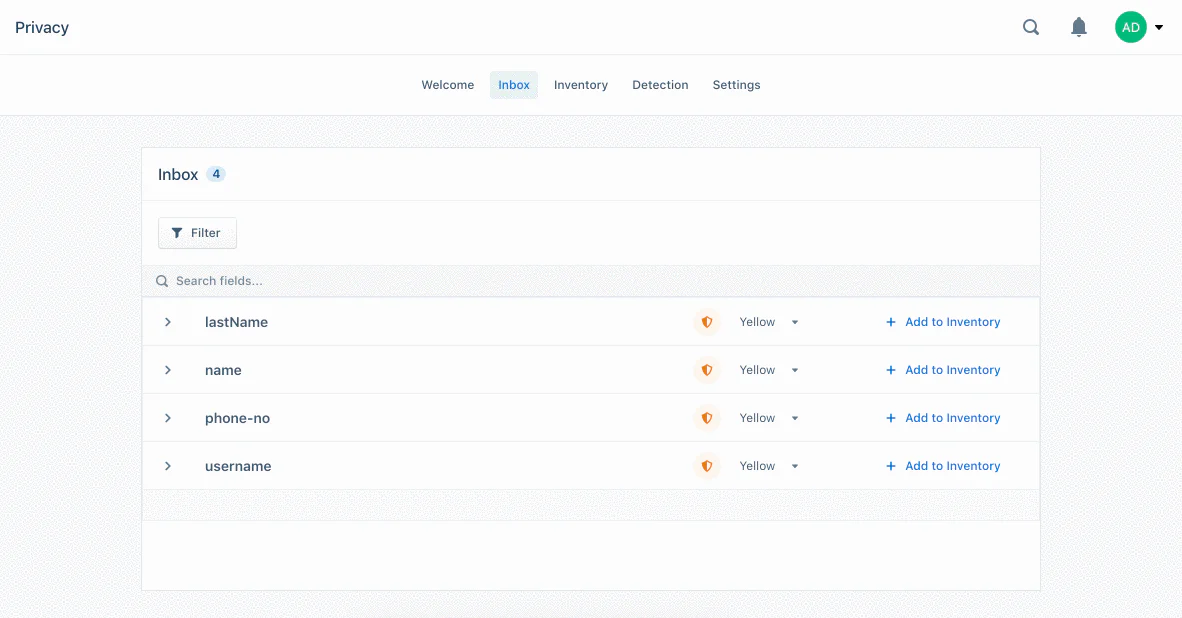

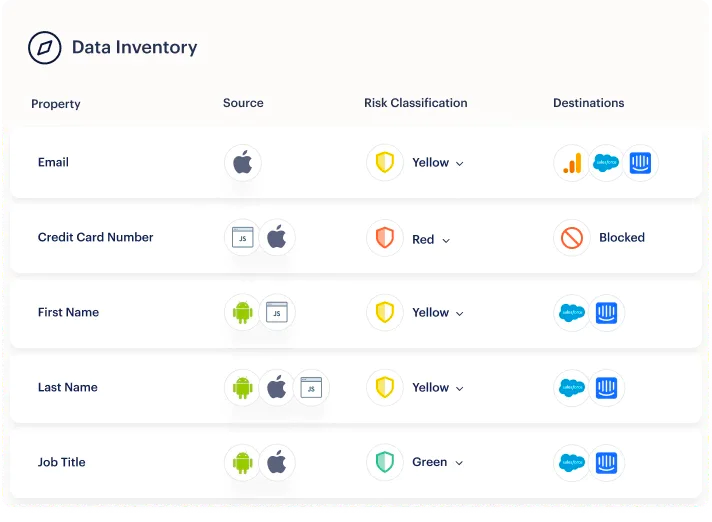

Take personally identifiable information (PII). Businesses processing this type of data have an obligation to protect it from outside risks like theft, ransomware, or data breaches. Fortunately, with the right tools, businesses can automatically classify data by risk level to help strengthen security.
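
As a rough illustration of classifying data by risk level, the sketch below assigns a tier to each field based on its name. The tiers ("high", "medium", "low") and the patterns are hypothetical; production classifiers typically also inspect the values themselves and use much richer rule sets.

```python
import re

# Illustrative rules only: (risk level, pattern on the field name), checked in order.
RULES = [
    ("high", re.compile(r"ssn|social_security|passport|credit_card|card_number", re.I)),
    ("medium", re.compile(r"email|phone|address|birth", re.I)),
]

def classify_field(field_name):
    """Return the first matching risk level for a field name, or 'low'."""
    for level, pattern in RULES:
        if pattern.search(field_name):
            return level
    return "low"

def classify_record(record):
    """Map each field in a record to its risk level."""
    return {field: classify_field(field) for field in record}

print(classify_record({"email": "a@b.com", "ssn": "123-45-6789", "plan": "pro"}))
```

Ordering the rules from highest to lowest risk means a field that matches several patterns is always placed in its most sensitive tier.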

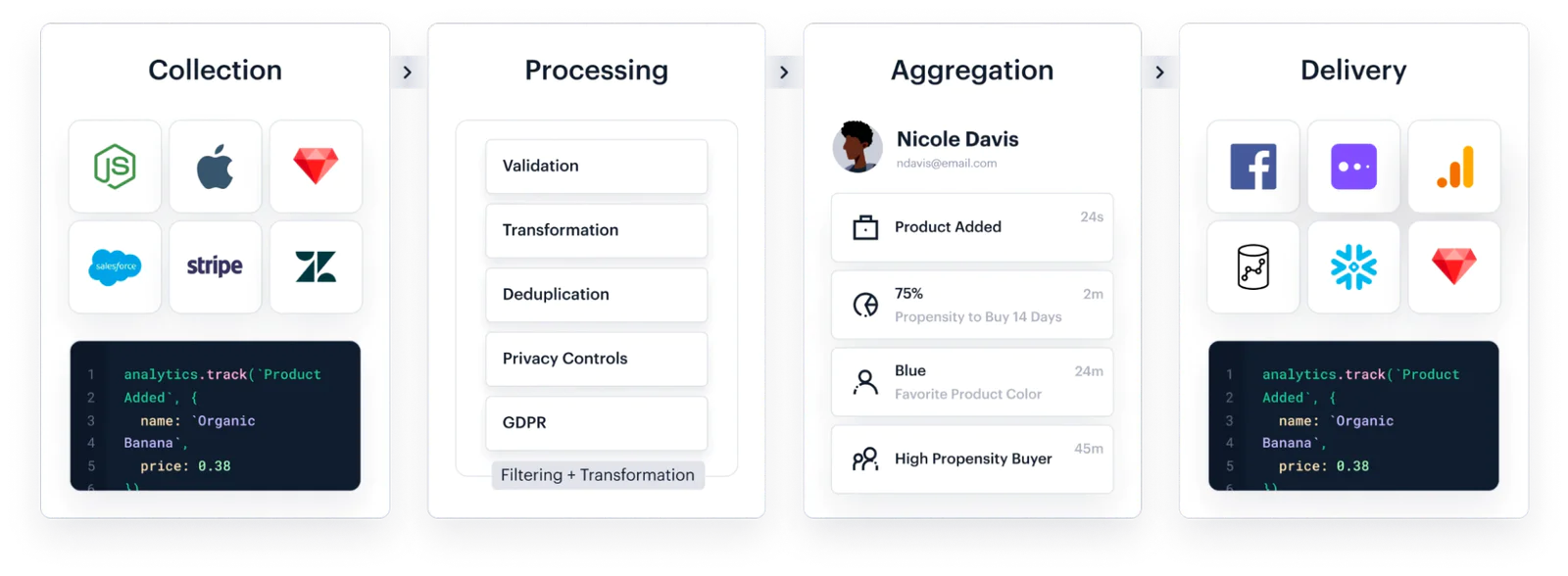

Segment’s CDP empowers businesses to collect, process, aggregate, and activate their data at scale and in real time. Here’s a look inside Segment’s data processing capabilities.

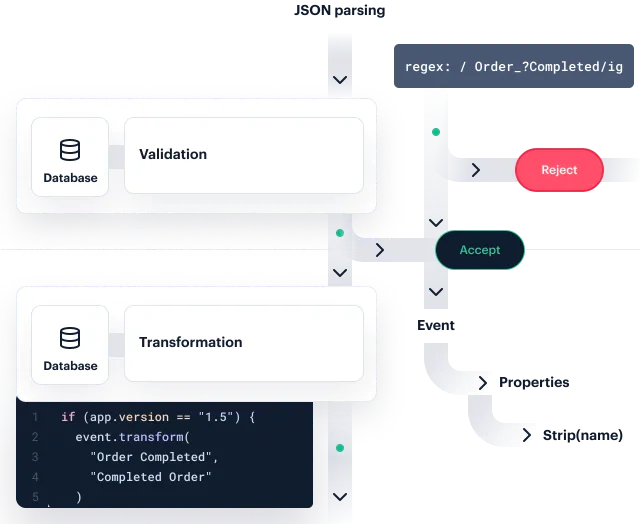

Twilio Segment allows businesses to apply transformations to data as it’s being processed, along with blocking any data entries that don’t adhere to a predefined tracking plan.
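
A transformation applied in flight can be as simple as renaming events and properties and dropping fields that shouldn't travel downstream. The sketch below is a hypothetical illustration of that idea in plain Python; it does not reproduce Segment's Transformations feature or its configuration format, and the rule tables are invented for the example.

```python
# Hypothetical transformation rules applied to each event as it is processed.
RENAME_EVENTS = {"checkout_done": "Order Completed"}
RENAME_PROPERTIES = {"total": "revenue"}
DROP_PROPERTIES = {"debug_info"}

def transform_event(event):
    """Return a new event with renamed names and unwanted properties removed."""
    props = {
        RENAME_PROPERTIES.get(key, key): value
        for key, value in event.get("properties", {}).items()
        if key not in DROP_PROPERTIES
    }
    name = RENAME_EVENTS.get(event["event"], event["event"])
    return {**event, "event": name, "properties": props}

raw = {"event": "checkout_done", "properties": {"total": 42.0, "debug_info": "x"}}
print(transform_event(raw))
```

The function returns a new dictionary instead of mutating its input, so the original event remains available if it needs to be inspected or replayed.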

We also built our own JSON parser that performs zero memory allocations, helping keep your data flowing.

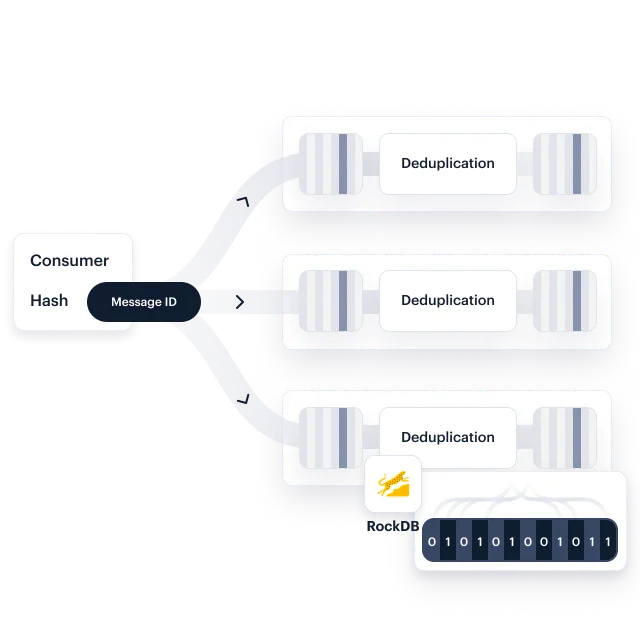

Segment deduplicates data based on the event’s messageId (rather than the contents of the event payload). Segment stores event messageIds for 24 hours and deduplicates events that arrive within that window. If a repeated event arrives more than 24 hours after the original, Segment deduplicates it later: in the Warehouse, or at the time of ingestion for a Data Lake.
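
The messageId-based approach can be sketched as an in-memory cache of recently seen IDs. This is a simplified, hypothetical illustration of a 24-hour look-back window, not Segment's actual implementation: it compares only messageIds, ignores payload contents, and forgets IDs once they fall outside the window.

```python
from datetime import datetime, timedelta

class Deduplicator:
    """Drop events whose messageId was already accepted within the look-back window.

    Only the messageId is compared; payload contents are ignored.
    """

    def __init__(self, window=timedelta(hours=24)):
        self.window = window
        self.seen = {}  # messageId -> time it was last accepted

    def accept(self, message_id, received_at):
        """Return True if the event should be processed, False if it is a duplicate."""
        first = self.seen.get(message_id)
        if first is not None and received_at - first < self.window:
            return False  # duplicate inside the window
        self.seen[message_id] = received_at
        self._evict(received_at)
        return True

    def _evict(self, now):
        """Forget messageIds older than the window to bound memory use."""
        expired = [m for m, t in self.seen.items() if now - t >= self.window]
        for m in expired:
            del self.seen[m]

d = Deduplicator()
t0 = datetime(2024, 1, 1, 12, 0)
print(d.accept("m1", t0))                        # True: first sighting
print(d.accept("m1", t0 + timedelta(hours=1)))   # False: repeat within 24 hours
print(d.accept("m1", t0 + timedelta(hours=25)))  # True: outside the window
```

The last case shows why a second, downstream deduplication pass is still useful: once an ID ages out of the window, a late duplicate looks new to this stage.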

Twilio Segment’s Privacy Portal offers features like the ability to handle user deletion requests at scale, automatically classify data according to risk level, and mask data entries to uphold the principle of least privilege.
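
Masking by role is one way to apply the principle of least privilege: each role sees only the fields it needs in clear text. The roles, their permissions, and the "show the last four characters" rule below are hypothetical choices for illustration, not the Privacy Portal's behavior.

```python
# Hypothetical role permissions: which fields each role may see unmasked.
ROLE_ALLOWED = {
    "support": {"email", "plan"},
    "analyst": {"plan"},
}

def mask_value(value):
    """Show only the last 4 characters; fully mask values of 4 characters or fewer."""
    text = str(value)
    if len(text) <= 4:
        return "*" * len(text)
    return "*" * (len(text) - 4) + text[-4:]

def view_record(record, role):
    """Return the record as a given role should see it (unknown roles see nothing unmasked)."""
    allowed = ROLE_ALLOWED.get(role, set())
    return {k: (v if k in allowed else mask_value(v)) for k, v in record.items()}

record = {"email": "ana@example.com", "plan": "pro", "phone": "5551234567"}
print(view_record(record, "analyst"))
```

Defaulting unknown roles to an empty permission set means a misconfigured role fails closed rather than exposing data.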

Along with being DPF certified, Segment offers regional data processing in the EU to help companies stay compliant with the GDPR.

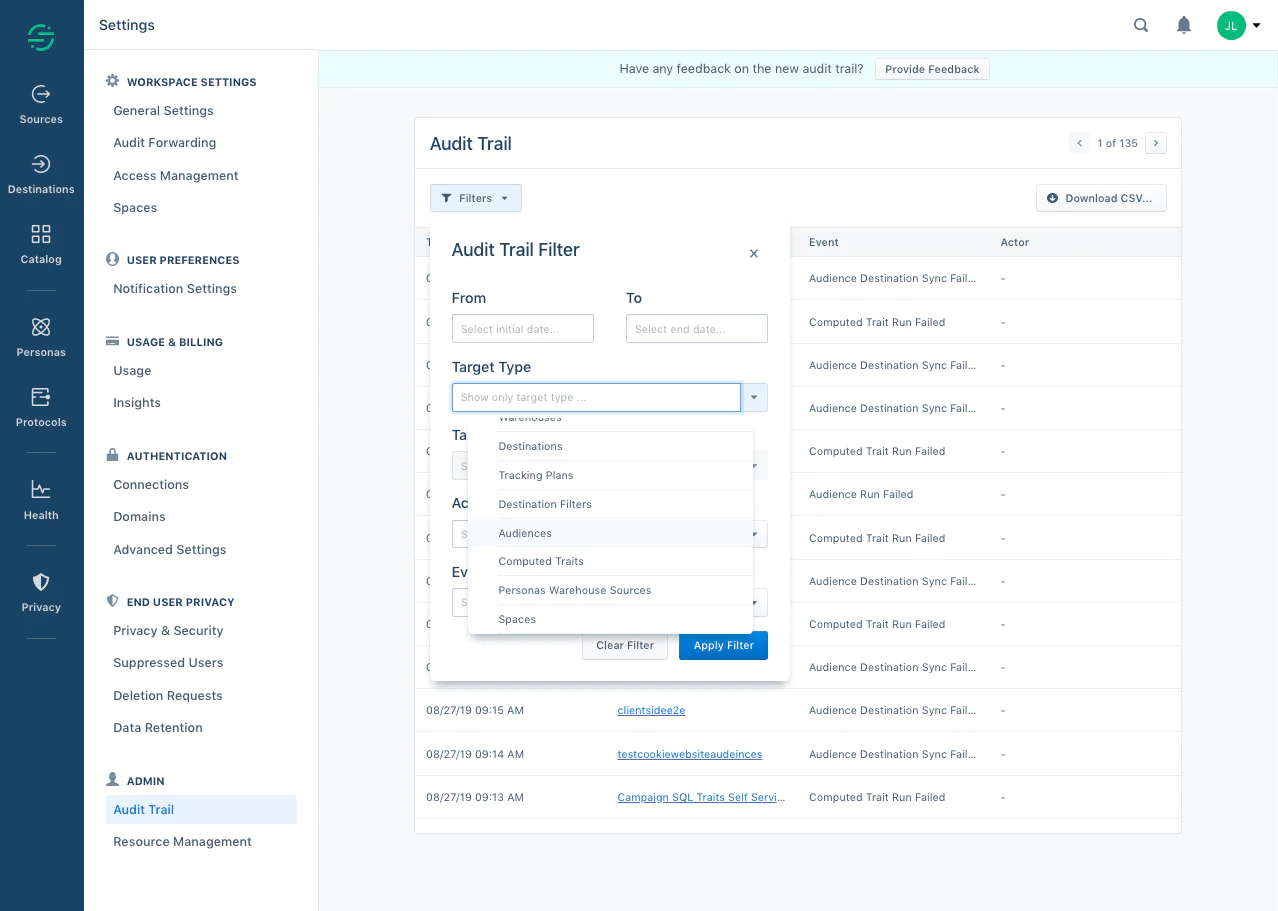

Every time a user deletion or suppression request is logged, Segment also stores the receipt in a database – creating an audit trail. This provides proof and peace of mind that a user’s data has actually been deleted.
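
An audit trail like this can be approximated with an append-only table of receipts. The sketch below uses Python's built-in `sqlite3` module; the table name, columns, and request types are assumptions for illustration, and a production audit log would usually add access controls and tamper evidence on top.

```python
import sqlite3
from datetime import datetime, timezone

def open_audit_log(path=":memory:"):
    """Open (or create) a database with a hypothetical deletion_receipts table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS deletion_receipts (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               user_id TEXT NOT NULL,
               request_type TEXT NOT NULL CHECK (request_type IN ('delete', 'suppress')),
               completed_at TEXT NOT NULL
           )"""
    )
    return conn

def record_receipt(conn, user_id, request_type):
    """Append a receipt once a deletion or suppression request has been carried out."""
    with conn:  # commits on success, rolls back and re-raises on error
        conn.execute(
            "INSERT INTO deletion_receipts (user_id, request_type, completed_at) VALUES (?, ?, ?)",
            (user_id, request_type, datetime.now(timezone.utc).isoformat()),
        )

def receipts_for(conn, user_id):
    """Return (request_type, completed_at) pairs for a user, oldest first."""
    rows = conn.execute(
        "SELECT request_type, completed_at FROM deletion_receipts WHERE user_id = ? ORDER BY id",
        (user_id,),
    )
    return rows.fetchall()

conn = open_audit_log()
record_receipt(conn, "u1", "suppress")
record_receipt(conn, "u1", "delete")
print([kind for kind, _ in receipts_for(conn, "u1")])  # ['suppress', 'delete']
```

The `CHECK` constraint rejects unknown request types at the database level, so a typo cannot silently produce a receipt that proves nothing.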

The typical organization collects and stores massive amounts of data, and data processing helps unlock meaning from it. Without processing, that information wouldn’t be easily accessible or digestible, making analysis much more difficult.

Data processing is not a standalone function but works within the context of the entire data pipeline or lifecycle. You can find data processing in almost any part of that cycle, including collection, transformation, and transfer. Any time a piece of data is manipulated to become something more useful, it’s being “processed,” making it essential to every part of the data life cycle. A few hallmark stages of data processing include:

Collection

Transformation

Consolidation

Analysis

Storage
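
Consolidation is often the least intuitive of these stages. A minimal sketch, assuming records from several hypothetical sources share an email address and carry an ISO-formatted `updated_at` date, merges them into one profile per person, letting the most recently updated value win for each field.

```python
def consolidate(*sources, key="email"):
    """Merge records from several sources into one profile per key.

    For each field, the value from the most recently updated record wins.
    Assumes every record has `key` and an ISO-formatted 'updated_at' string.
    """
    profiles = {}  # normalized identifier -> merged fields
    stamps = {}    # (identifier, field) -> updated_at of the winning value
    for source in sources:
        for record in source:
            ident = record[key].strip().lower()
            profile = profiles.setdefault(ident, {})
            for field, value in record.items():
                if field in (key, "updated_at") or value is None:
                    continue  # skip bookkeeping fields and missing values
                last = stamps.get((ident, field))
                if last is None or record["updated_at"] >= last:
                    profile[field] = value
                    stamps[(ident, field)] = record["updated_at"]
    return profiles

crm = [{"email": "Ana@Example.com", "name": "Ana", "phone": None, "updated_at": "2024-01-05"}]
support = [{"email": "ana@example.com", "phone": "555-0100", "name": "Ana P.", "updated_at": "2024-02-01"}]
billing = [{"email": "ana@example.com", "plan": "pro", "updated_at": "2023-12-01"}]
print(consolidate(crm, support, billing))
```

Comparing ISO date strings works because they sort lexicographically in chronological order; records with other timestamp formats would need to be parsed first.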

Segment Connections offers pre-built integrations with hundreds of tools, including CRMs, along with the ability to create custom integrations.

A data processing agreement (DPA) is an agreement between the company or entity that owns data and a third-party data processor. This can be between a business and a software company that provides reporting, for example. Designed to comply with various regulations regarding data and privacy, this contract sets forth the parameters for how data is collected, stored, changed, and shared. DPAs are considered legally binding and can signal compliance with the General Data Protection Regulation (GDPR) and other privacy and security laws.

Segment has a comprehensive suite of certifications and attestations to further demonstrate our commitment to security and privacy, including:

ISO 27001

ISO 27017

ISO 27018

HIPAA eligible platform

Segment offers a Data Processing Agreement (DPA) and Standard Contractual Clauses (SCCs) as a means of meeting the contractual requirements of applicable data privacy laws and regulations, such as the GDPR, and of addressing international data transfers.