We know big data refers to the massive amounts of structured, semi-structured, and unstructured data being generated today. But in a landscape marked by huge and complex data sets, it’s time to dig deeper into what big data actually is and how to manage it.

Below, we cover the most common challenges of working with big data, along with its defining characteristics, known as the 5 V’s.

There are several challenges associated with managing, analyzing, and leveraging big data, but the most common roadblocks include:

The need for large-scale, elastic infrastructure (e.g., cloud computing, distributed architecture, parallel processing).

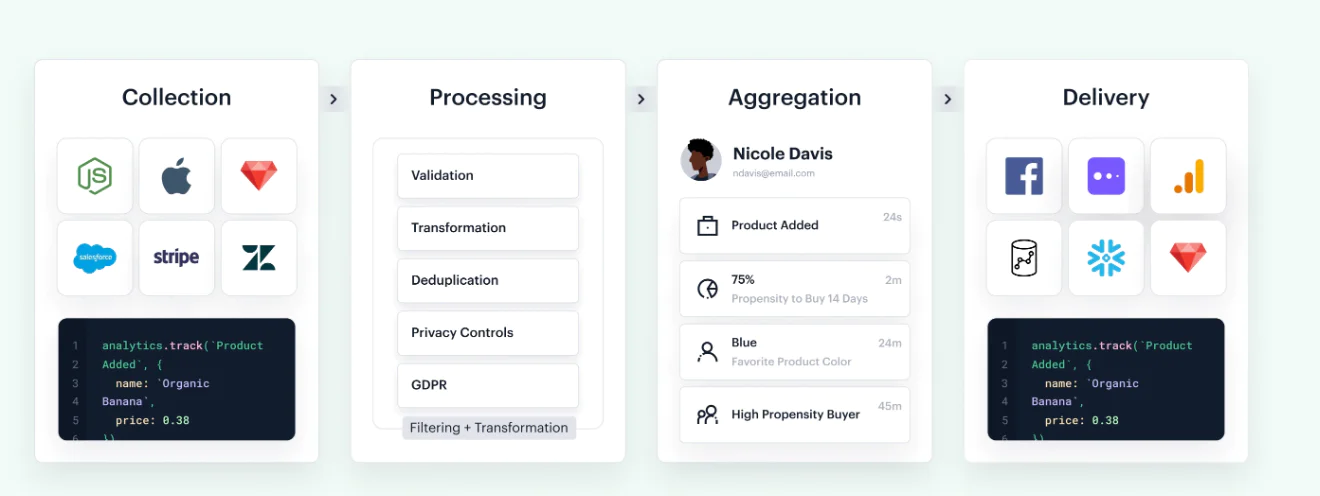

The need to integrate data from various sources and in various formats (e.g., structured and unstructured).

A crowded and interconnected tech stack that creates data silos.

The preservation of data integrity, which means keeping data up to date, clean, complete, and free of duplicates.

The need to ensure privacy compliance and data security.
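To make the integration and integrity challenges above more concrete, here is a minimal Python sketch. It assumes two hypothetical sources (one CSV, one JSON) that describe the same customers, and the field names `id` and `email` are invented for illustration. It normalizes both formats into one shape, drops duplicate IDs, and sets aside incomplete records.

```python
import csv
import io
import json

# Hypothetical inputs: the same customers arriving from two sources in two formats.
CSV_SOURCE = "id,email\n1,ana@example.com\n2,bo@example.com\n"
JSON_SOURCE = '[{"id": 2, "email": "bo@example.com"}, {"id": 3, "email": null}]'


def load_records(csv_text, json_text):
    """Normalize both sources into dicts with an int id and an optional email."""
    records = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        records.append({"id": int(row["id"]), "email": row["email"] or None})
    for item in json.loads(json_text):
        records.append({"id": int(item["id"]), "email": item.get("email")})
    return records


def clean(records):
    """Drop duplicate ids (first one wins) and set aside incomplete records."""
    seen = set()
    complete, incomplete = [], []
    for record in records:
        if record["id"] in seen:
            continue  # duplicate of a record we already kept
        seen.add(record["id"])
        (complete if record["email"] else incomplete).append(record)
    return complete, incomplete


complete, incomplete = clean(load_records(CSV_SOURCE, JSON_SOURCE))
print(len(complete), "complete,", len(incomplete), "incomplete")
```

At real big data scale, this logic would typically run in a distributed processing framework instead of a single script, but the steps (normalize, deduplicate, validate) stay the same.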

Big data is often defined by the 5 V’s: volume, velocity, variety, veracity, and value. Each characteristic will play a part in how data is processed and managed, which we explore in more detail below.

Volume refers to the amount of data being generated, which typically ranges from many terabytes to petabytes.

The staggering amount of data available today can place a significant resource burden on organizations. Storing, cleaning, processing, and transforming data all require time, bandwidth, and money.

For data engineers, this increased volume means thinking about scalable data architectures and appropriate storage solutions, along with how to handle temporary data spikes (like those an e-commerce company might experience during holiday sales).
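As a rough illustration of the capacity planning described above, the sketch below estimates storage needs from event counts. Every number in it (events per day, bytes per event, the spike multiplier) is a made-up assumption chosen for easy arithmetic, not a benchmark.

```python
def daily_volume_gb(events_per_day, bytes_per_event):
    """Approximate raw data volume per day in gigabytes (1 GB = 1e9 bytes)."""
    return events_per_day * bytes_per_event / 1e9


# Hypothetical workload: 50 million events per day at about 2 KB each.
baseline_gb = daily_volume_gb(50_000_000, 2_000)

# Hypothetical holiday spike: four times the normal daily traffic.
SPIKE_MULTIPLIER = 4
peak_gb = baseline_gb * SPIKE_MULTIPLIER

# A year of baseline traffic, expressed in terabytes.
annual_tb = baseline_gb * 365 / 1_000

print(f"baseline: {baseline_gb:.1f} GB/day")
print(f"peak day: {peak_gb:.1f} GB/day")
print(f"one year: {annual_tb:.1f} TB")
```

Even this simple estimate shows why elastic infrastructure matters: a system sized for the baseline would need several times its usual capacity on a peak day.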

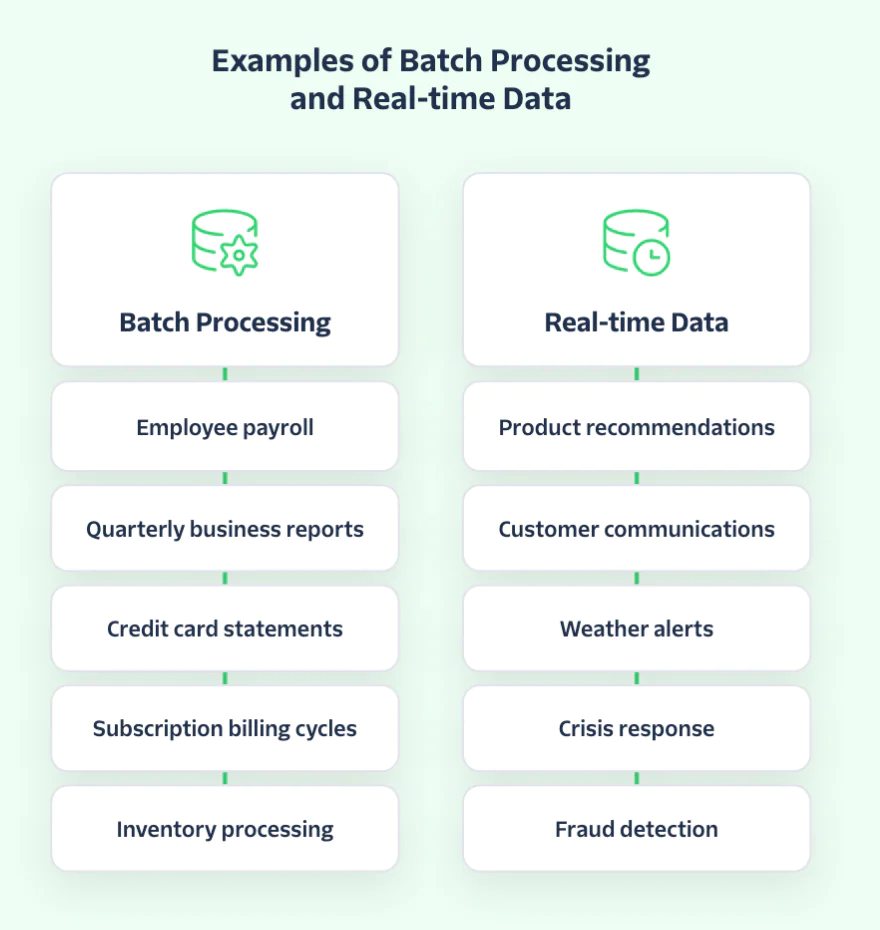

Velocity refers to the speed at which data is generated and processed. Real-time data processing plays an important role here, since it handles data as it’s generated to deliver instantaneous (or near-instantaneous) insight. Weather alerts, GPS tracking, sensors, and stock prices are all examples of real-time data at work. Of course, when working with huge datasets, not everything should be processed in real time. Organizations need to decide which data should be processed in real time and which can be handled with batch processing.
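The difference between batch and real-time processing can be sketched in a few lines of Python. This is a simplified illustration with hypothetical sensor readings, not a production streaming setup: the batch function waits for the full dataset, while the streaming class updates its answer as each value arrives.

```python
from statistics import mean


def batch_average(readings):
    """Batch style: wait until all readings are collected, then compute once."""
    return mean(readings)


class StreamingAverage:
    """Streaming style: keep a running result that updates with every reading."""

    def __init__(self):
        self.count = 0
        self.total = 0.0

    def update(self, value):
        self.count += 1
        self.total += value
        return self.total / self.count


# Hypothetical temperature readings arriving one at a time.
readings = [20.0, 22.0, 21.0, 25.0]

stream = StreamingAverage()
running = [stream.update(r) for r in readings]

print("running averages:", running)
print("batch average:", batch_average(readings))
```

Both approaches end at the same average, but the streaming version has a usable answer after every reading, at the cost of doing work on each event. That tradeoff is what an organization weighs when choosing between real-time and batch processing.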