Big Data Management Trends, Challenges, and Innovations

Dive into the significance of edge computing, AI, ML, and data governance and discover Segment's unique approach to navigating the complex landscape of large data sets.

When it comes to managing big data, there needs to be a scalable infrastructure and data governance in place. Otherwise, engineers and analysts will be overrun trying to orchestrate, validate, and make sense of these massive data sets – not to mention making that data accessible to other teams.

Learn how data management has evolved to keep pace with growing volumes, and pick up helpful tactics to better wrangle big data sets.

The dawn of big data management can be traced back to the 1950s, when mainframe computers became commercially available. Yet for decades, data growth remained relatively slow because computers and data storage were very expensive.

Then came the World Wide Web. Data grew in complexity and volume, demanding advanced data management solutions. Businesses began implementing data warehouses to unify and manage their data and support advanced analytics.

In the mid-2000s, the internet became ubiquitous. Users were generating vast volumes of data via digital touchpoints – ranging from social media sites to the Internet of Things (IoT). Data poured in from sensors, digital transactions, and offline touchpoints, and it arrived in a variety of formats (structured, unstructured, and semi-structured).

As a result, big data infrastructure continued to evolve. Cloud data storage solutions created more flexibility, allowing organizations to store all of their data without purchasing any hardware (or to combine their on-prem solutions with the cloud, for a hybrid approach).

The launch of Hadoop, a framework for big data analytics and storage, made data easier to wrangle. Hadoop allowed businesses to store different types of data formats and easily scale up as needed.

Data lakes also grew in popularity, for their ability to store all raw data (in any format) for later processing and use.

The shift from so-called “traditional” data management to the more modern approach we see today is marked by a few factors. First, modern data management handles a greater variety of data types from a diverse set of sources, whereas traditional data management often relied on structured data stored in relational databases.

Modern data management was also defined by the emergence of the cloud, which provided both scalability and elasticity (e.g., provisioning or de-provisioning compute resources as needed). This made teams more agile and adaptable, while also supporting an increasingly dispersed or remote workforce.

Other trends in modern data management include:

Decentralized and modular architecture, supporting microservices and independent components.

Real-time data processing and streaming analytics.

More advanced reporting with the help of machine learning and AI (e.g., predictive and prescriptive analytics).

The key trends that are shaping big data management include:

Artificial intelligence (AI): Artificial intelligence is able to rapidly analyze big data sets, discover patterns, and make forecasts that support different business operations.

Edge computing: A distributed computing architecture, edge computing processes data closer to its source rather than on centralized servers. Because less data has to travel across the network, responses are faster and large volumes of data can be handled where they are generated.

Event-driven architecture: In an event-driven architecture, events (e.g., user actions like signing up for an account or completing a purchase) are the basis for communication between applications.

DataOps: DataOps refers to a practice that automates data workflows and improves data integration and delivery across an organization.
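To make the event-driven idea concrete, here is a minimal sketch of an in-process event bus. The `EventBus` class, the event names, and the handlers are all hypothetical and only illustrate the pattern; production systems typically put a message broker or queue between publishers and subscribers.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """Hypothetical in-process bus, for illustration only."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_name: str, handler: Handler) -> None:
        # Register a handler to be called whenever event_name is published.
        self._handlers[event_name].append(handler)

    def publish(self, event_name: str, payload: dict) -> int:
        # Deliver the payload to every subscriber; return how many were called.
        handlers = self._handlers.get(event_name, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
welcome_emails: List[str] = []
analytics_log: List[dict] = []

# Two independent consumers react to the same event without knowing about each other.
bus.subscribe("Account Created", lambda e: welcome_emails.append(e["email"]))
bus.subscribe("Account Created", analytics_log.append)

delivered = bus.publish("Account Created", {"user_id": "u1", "email": "a@example.com"})
```

The publisher only knows the event name, so new consumers can be added without changing the code that emits the event.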

In edge computing, data processing happens locally – that is, closer to the data source. A car with an autonomous braking system uses edge computing to quickly process sensor data that indicate a collision is imminent. Then, it applies the brakes to prevent an accident.

POS systems, smartwatches, and other types of Internet of Things (IoT) devices are also examples of edge computing.

A key advantage of edge computing in big data processing is reduced network latency. Data doesn’t have to travel to the server and back to set off a specific response, creating more efficiency and supporting real-time responses.

Edge computing may also support greater data privacy and cybersecurity, since the data is processed on the edge device itself. Edge devices aren’t immune to breaches, but the data is kept off the main servers.

Twilio Segment’s Edge SDK allows developers to gather first-party customer data and use customer profiles (updated in real time) to tailor the user experience. Edge SDK is built on the Cloudflare Workers platform, which is characterized by high scalability and can handle billions of events per day.

Our infrastructure also queues data locally on Android, JavaScript, and iOS, so no event goes uncaptured.

Local data queuing allows for data to be processed even in the case of a network partition. On an iOS device, for example, Segment can queue up to 1000 events. If a user closes the app, Segment will save the event queue to the device’s disk. When the user relaunches the app, the data will be reloaded, which prevents data loss.
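The queue-and-persist behavior described above can be sketched roughly as follows. This is not Segment's SDK code: the `LocalEventQueue` class, the JSON file format, and the choice to drop the oldest event once the queue is full are all assumptions made for illustration.

```python
import json
import os
import tempfile
from collections import deque
from typing import Callable, Deque


class LocalEventQueue:
    """Hypothetical bounded queue that survives an app restart."""

    def __init__(self, path: str, max_events: int = 1000) -> None:
        self.path = path
        # Assumption: once full, the oldest event is dropped to make room.
        self.events: Deque[dict] = deque(maxlen=max_events)

    def enqueue(self, event: dict) -> None:
        self.events.append(event)

    def save(self) -> None:
        # Called when the app closes: write the queue to disk.
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(list(self.events), f)

    def load(self) -> None:
        # Called on relaunch: reload any events saved earlier.
        if os.path.exists(self.path):
            with open(self.path, encoding="utf-8") as f:
                self.events.extend(json.load(f))

    def flush(self, send: Callable[[dict], bool]) -> int:
        # Send events in order; stop at the first failure so nothing is lost.
        sent = 0
        while self.events and send(self.events[0]):
            self.events.popleft()
            sent += 1
        return sent


queue_path = os.path.join(tempfile.mkdtemp(), "events.json")
queue = LocalEventQueue(queue_path)
for i in range(1005):
    queue.enqueue({"event": "Screen Viewed", "seq": i})
queue.save()  # the user closes the app

restored = LocalEventQueue(queue_path)
restored.load()  # the user relaunches the app
```

If `send` fails (for example, during a network outage), `flush` leaves the remaining events in the queue so they can be retried later.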

Twilio Segment’s CDP enables organizations to automate and scale key aspects of their data infrastructure, ranging from hundreds of pre-built connections for seamless integrations to automatic QA checks for data governance. Segment processes 400,000 events per second, and is able to handle huge fluctuations in data volume (e.g., on Black Friday last year, 138 billion API calls passed through Twilio Segment).

Let’s break down key ways that Segment helps businesses better manage big data.

The effectiveness of AI hinges on data quality. Twilio Segment’s customer data platform (CDP) streamlines the process of data ingestion, collection, cleansing, and integration. The platform allows you to:

Automatically enforce an internal tracking plan so that data follows the same naming conventions (and bad data is proactively blocked and flagged for review).

Transform data as it flows through the pipeline.

Create Linked Profiles, which unify a customer’s streaming event data with historical data stored in the warehouse. These profiles can then be activated in any downstream destination.

Use features like Predictive Traits, which predict the likelihood that a user will perform a certain action (based on any event tracked in Segment).
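As a rough illustration of what enforcing a tracking plan means, the sketch below checks incoming events against a small plan and separates conforming events from blocked ones. The plan contents and the `validate_event` and `enforce` functions are hypothetical and do not represent Segment's implementation or API.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical tracking plan: event name -> required property names.
TRACKING_PLAN: Dict[str, Set[str]] = {
    "Order Completed": {"order_id", "total"},
    "Account Created": {"plan"},
}


def validate_event(name: str, properties: dict) -> List[str]:
    """Return a list of violations; an empty list means the event conforms."""
    if name not in TRACKING_PLAN:
        return [f"unplanned event: {name!r}"]
    missing = sorted(TRACKING_PLAN[name] - set(properties))
    return [f"missing property: {prop}" for prop in missing]


def enforce(events: List[Tuple[str, dict]]):
    """Split events into accepted ones and blocked ones flagged for review."""
    accepted, blocked = [], []
    for name, properties in events:
        violations = validate_event(name, properties)
        if violations:
            blocked.append((name, violations))
        else:
            accepted.append((name, properties))
    return accepted, blocked


incoming = [
    ("Order Completed", {"order_id": "o-1", "total": 42.0}),
    ("order_completed", {"order_id": "o-2", "total": 10.0}),  # wrong naming convention
    ("Account Created", {}),  # missing a required property
]
accepted, blocked = enforce(incoming)
```

Here the misnamed `order_completed` event and the incomplete `Account Created` event end up in `blocked` with the reason attached, while only the conforming event flows downstream.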

Recent breakthroughs in AI (more specifically, generative AI and LLMs) have thrust artificial intelligence even further into the mainstream. McKinsey projects that Gen AI could add up to $4.4 trillion annually to the global economy. The gap between idea and execution is narrower than it has ever been.

But with all this AI acceleration it’s important not to lose sight of protecting data privacy. Already, issues have come up around copyright law when it comes to the data that AI is being trained on. And for highly regulated industries like healthcare, artificial intelligence can lead to huge benefits like more precise and proactive diagnoses – but how will that personally identifiable information be protected?

Already, the EU has worked on AI regulations. According to the European Parliament, the goal is to make sure that “AI systems used in the EU are safe, transparent, traceable, non-discriminatory and environmentally friendly.”

When it comes to protecting customer data, you need fine-grained controls over what you’re collecting and who has access to that information, along with automated privacy controls to ensure scalability.

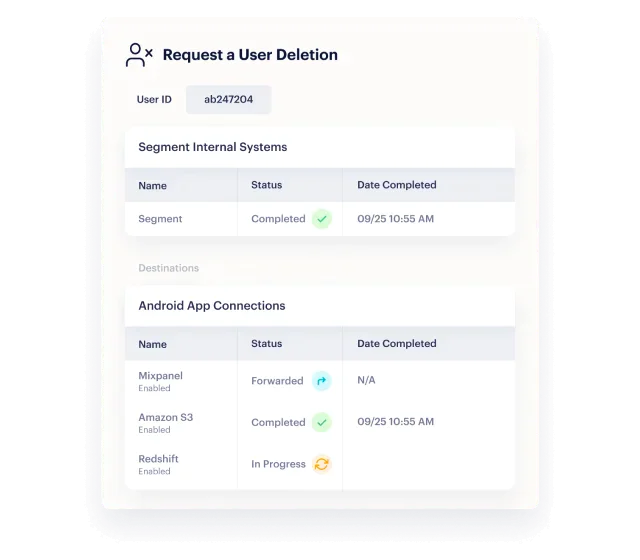

Segment’s Privacy Portal offers several features to protect customer data, including streamlining data subject rights management (e.g., user suppression or deletion requests). Segment also offers an open-source consent manager. On top of that, Segment can automatically detect and classify personal information in real time, and automatically block restricted personal data from being collected.
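To give a feel for how automatic detection and blocking of personal data can work, here is a deliberately simplified sketch based on regular expressions. The patterns, the `RESTRICTED` set, and the `classify` and `scrub` functions are assumptions for illustration; they are not how Segment's Privacy Portal is implemented, and real classifiers cover far more cases.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical patterns; real detection needs many more rules and safeguards.
PII_PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Assumption: these classes must never be collected.
RESTRICTED = {"us_ssn"}


def classify(value: object) -> Optional[str]:
    """Return the first PII label that matches the value, or None."""
    if not isinstance(value, str):
        return None
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(value):
            return label
    return None


def scrub(properties: dict) -> Tuple[dict, Dict[str, str]]:
    """Drop restricted fields; report every field classified as PII."""
    kept, findings = {}, {}
    for key, value in properties.items():
        label = classify(value)
        if label is not None:
            findings[key] = label
        if label in RESTRICTED:
            continue  # blocked before it is ever stored
        kept[key] = value
    return kept, findings


kept, findings = scrub(
    {"contact": "a@example.com", "note": "ssn 123-45-6789", "total": 42}
)
```

In this run, `contact` is classified as an email but still collected, while `note` is classified as restricted and removed from the payload.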

Companies can also comply with GDPR data residency laws via Regional Segment, which lets businesses configure their workspaces to ingest, process, deduplicate, and archive data via Segment-managed archives hosted in AWS S3 buckets in Dublin, Ireland.

Big data management involves collecting, organizing, storing, and analyzing large volumes of data. This includes structured, unstructured, and semi-structured data.

Edge computing is important in big data management because it minimizes the risk of data breaches and prevents network congestion while processing large amounts of data in real time.

Emerging trends in big data management include DataOps, AI, edge computing, cloud-based data warehouses, and event-driven architectures.

Twilio Segment’s approach to big data management allows businesses to collect all their data in a single database while automating data governance best practices and ensuring compliance with privacy regulations at scale.