When we first launched our Reverse-ETL solution to fill the gap of activating warehouse data natively through Segment, we set a limit of 30 million records per sync, which was a reasonable limitation at the time.

However, over time, as the adoption of warehouse-centric CDPs grew and more companies leveraged their data warehouses for activation, the scale our customers needed grew as well.

As a result, we had to consider how to scale up our Reverse-ETL data pipeline. In our previous implementation, when the sync size exceeded 30 million records, the process would fail and display an error message, prompting the customer to adjust their setup to retrieve a smaller dataset.

This was frustrating for customers: the failure could occur without warning, and fixing it could be time-consuming, potentially leaving destinations with stale data in the meantime.

Factors we considered for increased scale

Customer warehouse compute costs: Excessive data scans or overly complex queries can significantly increase warehouse compute costs. We were mindful of this during our decision-making process to minimize unnecessary costs for our customers while scaling.

Batch-based system and network error tolerance: Maintaining open connections when reading large volumes of data increases the likelihood of network failures. We developed creative approaches to handle these failures efficiently while maintaining scalability.

Downstream destination restrictions and limits: We support hundreds of destinations, each with its own rate limits. Sending all the extracted data at once would have overloaded them. We designed our system to respect these limits by batching and throttling the data transfer appropriately.

Data residency: We prioritize data privacy and only retain customer data for as long as necessary, unless the customer explicitly configures the pipeline to store it longer. This principle was integral to our scaling strategy, ensuring that we maintained this quality even as we grew.
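
To make the batching and throttling mentioned under downstream destination limits more concrete, here is a minimal Python sketch. It is not Segment's implementation: the names `batched` and `send_throttled`, the `send` callback, and the fixed batches-per-second model are all assumptions chosen for illustration, and real destinations often need per-destination limits and retry handling on top of this.

```python
import time
from typing import Callable, Iterable, Iterator, List


def batched(records: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Yield consecutive lists of at most batch_size records."""
    batch: List[dict] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        # Emit the final, possibly smaller, batch.
        yield batch


def send_throttled(
    records: Iterable[dict],
    send: Callable[[List[dict]], None],  # hypothetical destination client call
    batch_size: int,
    max_batches_per_second: float,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> int:
    """Send records in batches without exceeding max_batches_per_second.

    Returns the number of batches sent.
    """
    min_interval = 1.0 / max_batches_per_second
    last_sent = None
    sent = 0
    for batch in batched(records, batch_size):
        if last_sent is not None:
            # Wait until at least min_interval has passed since the last send.
            wait = min_interval - (clock() - last_sent)
            if wait > 0:
                sleep(wait)
        send(batch)
        last_sent = clock()
        sent += 1
    return sent
```

The `sleep` and `clock` parameters are injectable so the pacing logic can be tested without waiting in real time.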

Why not simply increase the limitation (or completely remove it)?

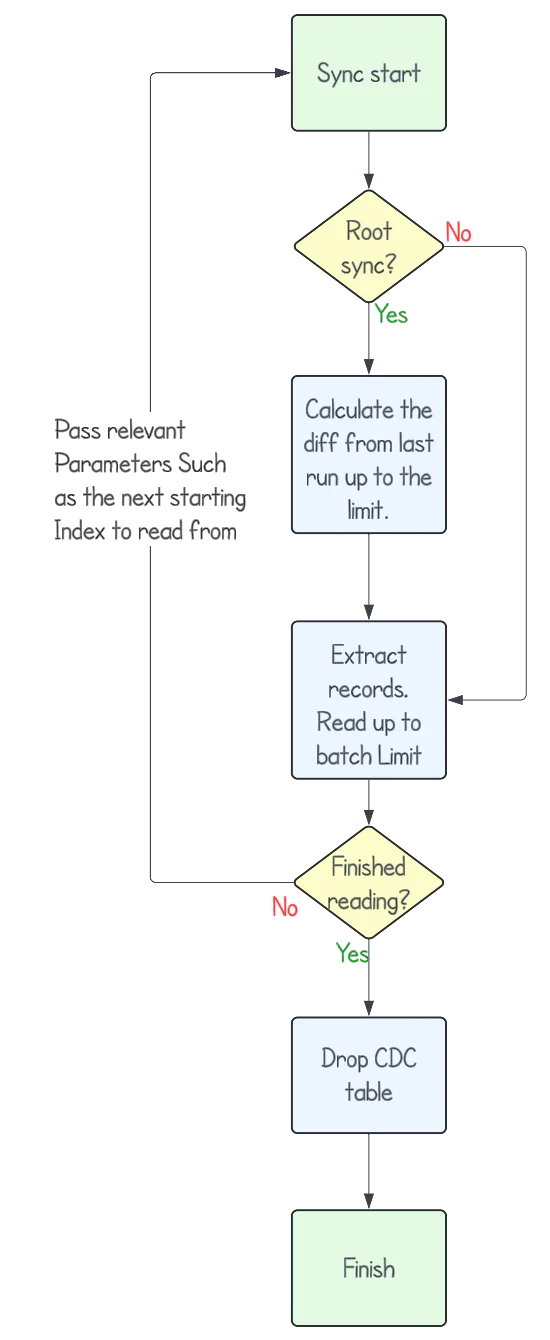

This is how our system worked before the recent changes: First, we would detect the data that needed to be extracted from the warehouse. Then, we would check whether there were more records to read than our limit allowed. If so, we would fail the sync with a warning message.
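
The check-then-fail flow described here can be sketched roughly as follows. This is an illustrative reconstruction, not the actual service code: `run_sync_legacy`, `SyncTooLargeError`, and the `extract` callback are hypothetical names, and only the 30 million figure comes from the text above.

```python
from typing import Callable

# Per-sync record limit stated earlier in the article.
MAX_RECORDS_PER_SYNC = 30_000_000


class SyncTooLargeError(Exception):
    """Raised when a sync would read more records than the limit allows."""


def run_sync_legacy(
    changed_record_count: int,
    extract: Callable[[int], int],  # hypothetical: reads the records, returns how many
) -> int:
    """Fail the whole sync up front if the detected change set is too large."""
    if changed_record_count > MAX_RECORDS_PER_SYNC:
        raise SyncTooLargeError(
            f"{changed_record_count} records exceed the limit of "
            f"{MAX_RECORDS_PER_SYNC}; adjust the setup to retrieve a smaller dataset"
        )
    # Within the limit: read everything as a single batch.
    return extract(changed_record_count)
```

Note that nothing is delivered when the limit is exceeded: the check is all-or-nothing, which is what made oversized syncs so disruptive.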

So why not simply increase the limit? There are multiple reasons why continuing to raise the limit without making architectural changes is problematic, including:

Network failures:

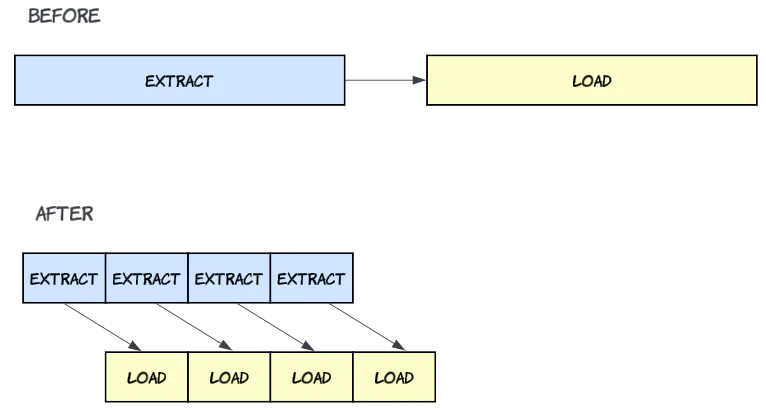

The nature of the system is batch-based, meaning we perform operations on the data warehouse to capture data changes. We then read all the data as one batch and activate it by sending it to the connected downstream destinations.

The first issue with simply increasing the limit is the potential for network failures. For instance, if we identify 1 billion records that need to be read from the warehouse, each with hundreds of columns, reading that amount of data could take hours or even days. The likelihood of network failures during such an extended period is significant.
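
A back-of-the-envelope calculation shows why long reads are fragile. The sketch below is illustrative only: the throughput and the hourly failure probability are made-up assumptions, not measurements from our pipeline, and it treats failures in each hour as independent.

```python
def read_duration_hours(record_count: int, records_per_second: float) -> float:
    """Time needed to read record_count records at a constant rate, in hours."""
    return record_count / records_per_second / 3600


def probability_of_interruption(
    duration_hours: float, hourly_failure_probability: float
) -> float:
    """Chance of at least one failure during the read.

    Assumes independent failures with the same probability every hour,
    so P(at least one) = 1 - (1 - p) ** hours.
    """
    return 1 - (1 - hourly_failure_probability) ** duration_hours


# Assumed figures for illustration: 10,000 records/s and a 1% chance
# of a connection failure in any given hour.
hours = read_duration_hours(1_000_000_000, 10_000)
risk = probability_of_interruption(hours, 0.01)
```

Under these assumed numbers, a 1 billion record read takes roughly 28 hours and has about a 24% chance of being interrupted at least once. The exact figures matter less than the trend: the longer a single read runs, the more likely it is to fail partway through.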

Service reboot:

Even if the network remains stable throughout the entire process, the service that reads the data might need to be rebooted due to deployment, auto-scaling, etc. Reading 99% of the data and then failing—only to restart the process—can result in significant delays in data delivery.

Overwhelming the data pipeline:

Scaling up by simply allowing more data to flow into the system means that the underlying services must be prepared for that increase as well. Otherwise, the extra load can disrupt the system: queues fill up, services fail with out-of-memory errors, and large syncs cause noisy neighbor problems for other workloads. Therefore, we had to ensure that when we scale up, we do so responsibly.

Under the Segment hood

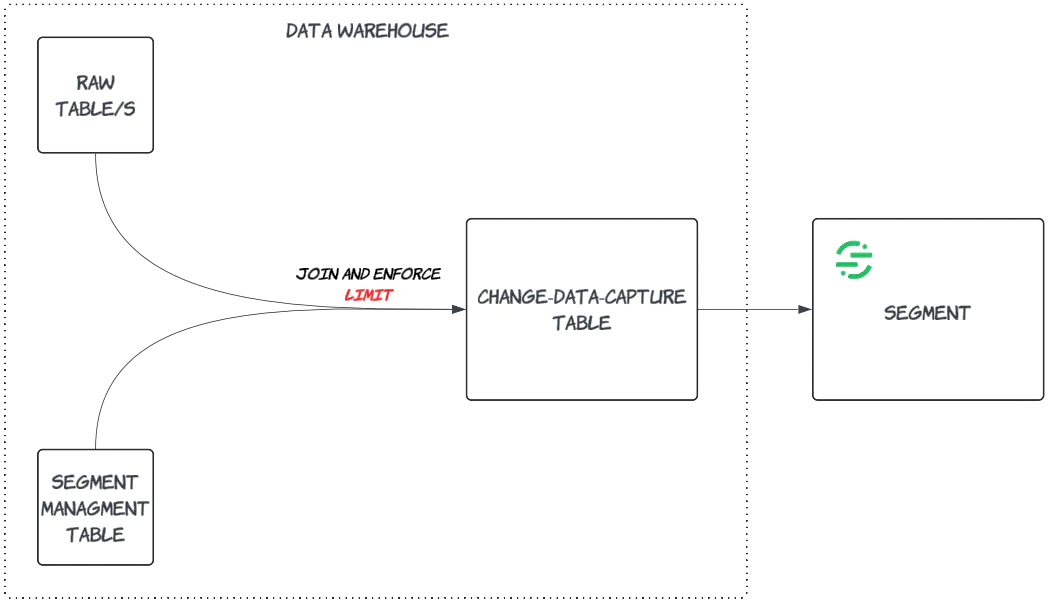

1. Limiting Processed Data to Maintain Stability:

The first step we took to scale up the data pipeline was to limit the maximum amount of data processed in the warehouse with each run. This step might sound counterintuitive: why add a new limit when we want to scale up? The rationale was to ensure that any amount of data we process while operating in the warehouse can be handled by our services.

This represents a change from our previous logic, where we did not implement any limitations on the amount of data processed in the warehouse. Instead, we checked if we had reached our internal limits right before starting to extract the data, and if we had, the process would fail.

Our improvements now enable us to process a larger Change Data Capture (CDC) result set of up to 150 million records per sync. If we detect a CDC result set larger than that, we process the first 150 million records and queue the rest for the next scheduled sync. The key benefit is that we can process significantly larger datasets without failing the sync, which makes data delivery downstream more reliable for our customers.
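
The cap-and-carry-over behavior can be sketched as below. This is a simplified model under stated assumptions, not the production logic: `SyncPlan`, `plan_sync`, and `syncs_needed` are hypothetical names, the sketch works on record counts rather than actual rows, and it assumes no new changes arrive between syncs.

```python
from dataclasses import dataclass

# Per-sync CDC limit stated in the article.
MAX_CDC_RECORDS_PER_SYNC = 150_000_000


@dataclass(frozen=True)
class SyncPlan:
    process_now: int  # records handled in this sync
    deferred: int     # records queued for the next scheduled sync


def plan_sync(detected_changes: int, limit: int = MAX_CDC_RECORDS_PER_SYNC) -> SyncPlan:
    """Cap this sync at `limit` records and defer the remainder instead of failing."""
    if detected_changes < 0:
        raise ValueError("detected_changes must be non-negative")
    process_now = min(detected_changes, limit)
    return SyncPlan(process_now=process_now, deferred=detected_changes - process_now)


def syncs_needed(detected_changes: int, limit: int = MAX_CDC_RECORDS_PER_SYNC) -> int:
    """Number of scheduled syncs required to drain the backlog (ceiling division)."""
    return -(-detected_changes // limit)
```

For example, `plan_sync(400_000_000)` processes 150 million records now and defers 250 million, and `syncs_needed(400_000_000)` indicates that the backlog drains over three scheduled syncs. The contrast with the earlier design is that an oversized change set now delays part of the data rather than blocking all of it.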