Last week, over 1,000 of the industry’s most talented engineers, analysts, product managers, and marketers gathered in San Francisco to discuss key trends touching all things data at Segment’s annual user conference, Synapse.

From data quality to privacy to activation, we heard from 50+ world-class speakers about the techniques, tools, and strategies they use to leverage their most critical asset: customer data. We also had the opportunity to shine a light on some of our most innovative customers through the inaugural Segment Data Momentum Awards.

On top of that, we unveiled two brand new features – Functions and the Privacy Portal – that are now available.

We could list hundreds of takeaways, but as the saying goes, the plural of anecdote is data, so we’ve narrowed it down to five themes and lessons that came up across several talks over the two days.

Privacy is a right. Use it to your advantage.

Consumer privacy was top of mind for many folks at Synapse. Beyond a few notable sighs of relief when the Privacy Portal was unveiled, several sessions returned to the theme of proactively addressing customer privacy.

With new data privacy regulations popping up all over the world, respecting customer privacy takes more than just reactive remedies. Companies everywhere need to take a proactive approach to privacy.

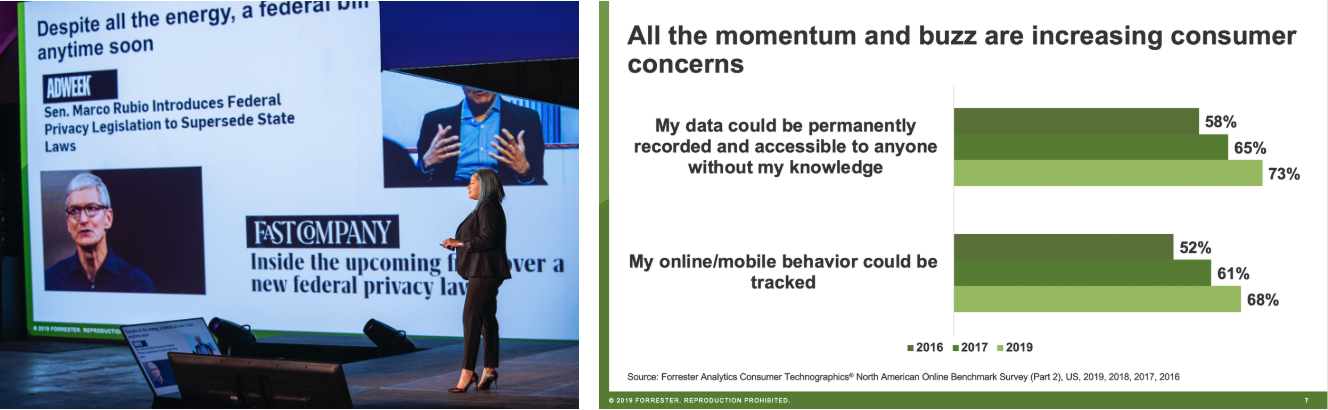

Fatemeh Khatibloo, VP and Principal Analyst at Forrester, presented some insightful consumer statistics that added color to the privacy conversation. She shared that the percentage of US online adults who are concerned about their online or mobile behavior being tracked has grown from 52% in 2016 to 70% this year. Meanwhile, the percentage who are concerned their data could be permanently recorded and accessible to anyone has grown from 58% in 2016 to 74% this year.

If we continue on this trajectory, nearly every American will be concerned about their data being accessible to companies in three years.

Fatemeh Khatibloo, Forrester, on stage at Synapse

This imperative was echoed by Daryl Bowden, SVP of Technology at Fox, who argued a centralized data infrastructure is the best way to ensure that private user data isn’t shared with tools in your stack that don’t require it. Privacy was also referenced by George Jeng, Head of Internal Product at Vice, who explained how Vice is using privacy regulations like the CCPA and GDPR to spur more user-centric product development.

“We shouldn’t look at these changing times and think about how to circumvent or bypass these restrictions. We need to take this opportunity to be transparent and do what’s right for our users.”

-George Jeng, Vice

Flexible data infrastructure makes companies nimble. Good data governance keeps them nimble.

Another common thread throughout the sessions was the tension between data flexibility (what you can do with your data) and data quality (how reliable and accurate your customer data is).

To adapt quickly, your business needs a complete set of customer data flowing into each of your marketing and analytics tools. However, without protective measures to keep that data high quality, the effort you’ve invested in new integrations and data pipelines is wasted.

Brian Healey, Principal Engineer at RetailMeNot, shared his experience with Segment Functions and how it made his data infrastructure more flexible.

“With Functions, Segment has given us the flexibility to redesign our marketing stack with minimal impact to our engineering team. We managed to change the tires of our car while we are driving into the holiday season, where we see a 35% increase in traffic and events captured.”

-Brian Healey, RetailMeNot

Similarly, Ethan Kaplan, GM of Fender Digital and long-time Segment user, shared his story about joining the Fender team. Ethan took on the job of digitally transforming Fender with new interactive learning experiences for users. They needed a flexible data infrastructure to build new digital products that would eventually reach 8.8M users and a platform that would give them confidence in the data being collected. This all helped generate user insights—like how 90% of first-time buyers stop using their instrument in the first three months—and make the best product decisions possible.

“When I got to Fender, Segment was one of the first things we put in place. I wanted to establish a robust data architecture even before our first app launched.”

-Ethan Kaplan, Fender

Machine learning is driving better customer experiences, but requires a strong data foundation.

Another hot topic at Synapse this year was the application of machine learning to both improve customer experiences and boost revenue. The sessions led by IBM, Warner Brothers, Norrøna, and Sun Basket tackled a common issue: how can we help customers navigate our extensive product catalogs more quickly and effectively?

Simply put, the answer was data (go figure)! They all needed a complete and reliable data set to train models and make real-time decisions about what to highlight for each user based on their past experiences and current context, like the time of day or season.

“There is no artificial intelligence without information architecture.”

-Maia Sisk, IBM

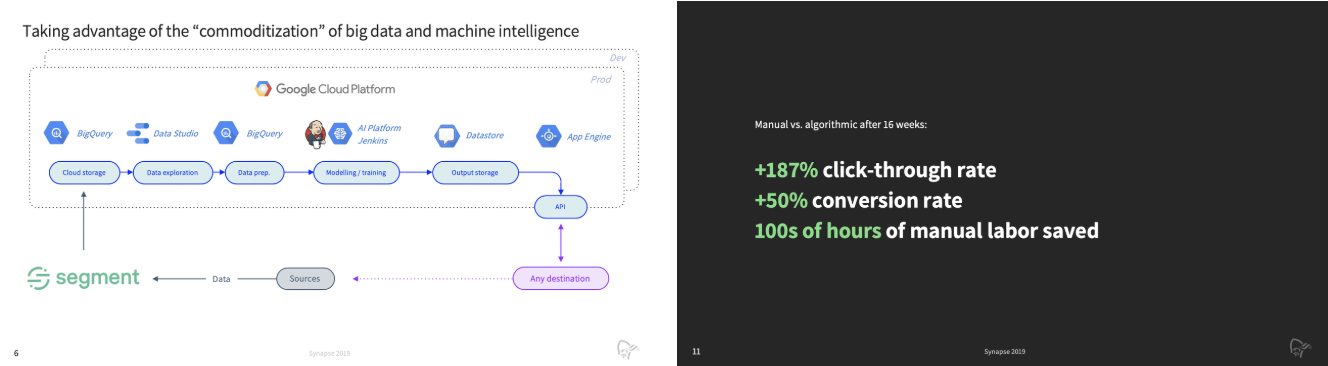

Thomas Gariel, Product Manager at Norrøna, a Scandinavian outdoor retailer, shared his story about building a machine learning engine to power personal and relevant e-commerce experiences for its customers. The challenge he faced was recommending the right gear from their extensive catalog of products that varied dramatically season-to-season. They used Segment to implement the data infrastructure that made recommendations on their product pages possible. Over 16 weeks, they compared manual recommendations to ones delivered algorithmically. The results were incredible (see below)!

Snapshots from Thomas Gariel’s presentation at Synapse

Experimentation is the best way to debunk assumptions and determine real growth drivers.

Lex Roman, former Senior Product Designer at The Black Tux, framed this problem extremely well during her Synapse session. Here’s the setting: Lex enters a tense conference room. She’s asked to design a new product experience that will increase sign-ups by 50%. She asks what the baseline is. Silence.

Unfortunately, the case Lex outlines is all too common. The best experimentation frameworks are rooted in good data. Setting baselines and accurately measuring each experiment is critical to making sure you’re picking up the right signals. Without the right data foundation in place, it’s impossible to determine the success (or failure) of the experiments you run.
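To make the baseline point concrete, here is a minimal sketch of how a team might compute a baseline sign-up rate and measure an experiment against it. The function names and all of the numbers are hypothetical and chosen purely for illustration; they do not come from any Synapse talk.

```python
def conversion_rate(signups: int, visitors: int) -> float:
    """Share of visitors who signed up."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return signups / visitors


def relative_lift(baseline: float, variant: float) -> float:
    """Relative change of the variant over the baseline (0.5 means +50%)."""
    if baseline <= 0:
        raise ValueError("a positive baseline is required to measure lift")
    return (variant - baseline) / baseline


# Hypothetical numbers, for illustration only.
baseline = conversion_rate(signups=200, visitors=10_000)
variant = conversion_rate(signups=300, visitors=10_000)
lift = relative_lift(baseline, variant)
print(f"baseline={baseline:.1%} variant={variant:.1%} lift={lift:+.0%}")
```

Without a measured baseline, `relative_lift` has nothing to divide by, which is exactly the silence Lex describes. A real experiment would also need a significance check before declaring a winner; that is left out of this sketch.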

Gustaf Alströmer, Partner at Y Combinator, took the stage and shared learnings from his five years leading growth at Airbnb. He explained the critical mistake that most make — assuming customers will flock to you just because you’ve built a shiny new product. While that’s not true (sadly), what is reliable is setting metrics that represent the value that users receive from your product and experimenting aggressively via paid search, SEO, referral programs, and A/B testing to see what moves those metrics.

Similarly, Patti Chan, VP of Product and Engineering at Imperfect Produce, stressed the importance of aligning around a key metric and strong internal feedback loops when it comes to experimentation. Her take: companies need to communicate learnings broadly across product and marketing so each team can internalize and benefit from learnings. Experimentation is useless if it happens in a silo.

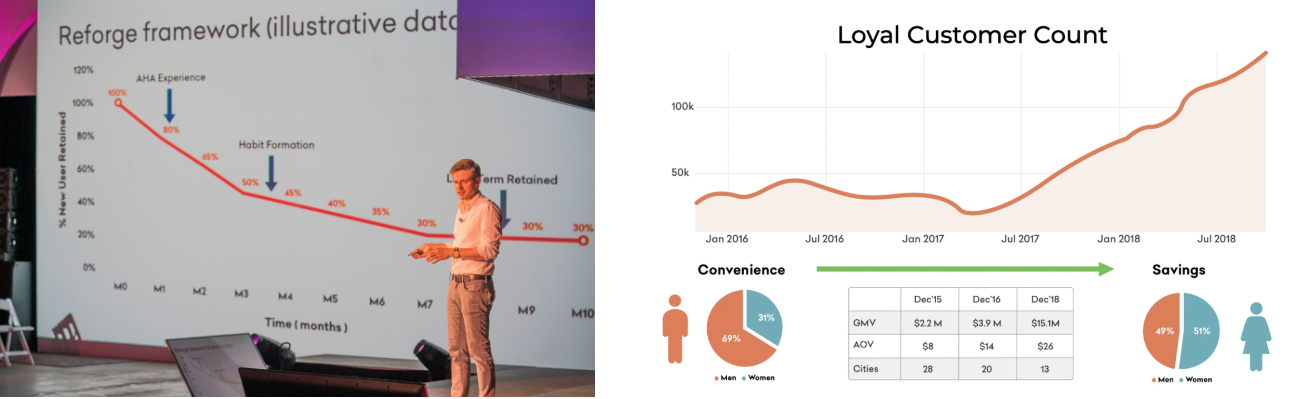

Jacob Singh, CTO at Grofers, India’s largest grocery delivery service, shared some of his team’s findings. They originally assumed a larger product assortment would drive the biggest boost to the business. In reality, customer satisfaction and repeat purchases more closely correlated with value. So, instead of increasing their catalog of products or investing in same-day delivery (like their competitors), they invested in technology and a product assortment to maximize the savings they could offer to customers. The result was a massive uptick in customer retention.

Jacob Singh, Grofers, on stage at Synapse

Accessibility and understanding are key for data democratization.

The last hot topic covered at Synapse was the concept of data democratization, or making data universally accessible across an organization. Data democratization enables data-driven decision making across all teams and individuals — from the marketing teams deciding what channels to invest in to the product teams deciding what products to build next.

According to the experts who spoke at Synapse, getting to a place where your data is successfully democratized requires two things: 1) data accessibility and 2) data understanding. Essentially, your data needs to be accessible in the right tools and easily understandable. Poor data hygiene and inconsistent event naming are the primary blockers of this ideal end state.

According to Brian Donaldson, Sr. Manager, Development Engineering at Docker, a tracking plan is the key to their success with data democratization. It enabled his team to stay consistent when it came to tracking events, while ensuring everyone at Docker could easily understand what each data point represented.

“The tracking plan was the single most important part of our process at Docker. Building a data dictionary was key.”

-Brian Donaldson, Docker
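As a rough illustration of what a tracking plan buys you, the sketch below checks events against a small data dictionary before they are sent anywhere. Everything here is an assumption made for the example: the `TRACKING_PLAN` contents, the event names, and the `validate_event` helper are hypothetical, and this is not Docker's plan or a Segment API.

```python
# Hypothetical tracking plan: event name -> required property names.
TRACKING_PLAN = {
    "Signed Up": {"plan", "source"},
    "Order Completed": {"order_id", "total"},
}


def validate_event(name: str, properties: dict) -> list[str]:
    """Return a list of problems; an empty list means the event conforms."""
    if name not in TRACKING_PLAN:
        return [f"unknown event name: {name!r}"]
    missing = TRACKING_PLAN[name] - set(properties)
    return [f"missing property: {p}" for p in sorted(missing)]


# A conforming name with a missing property, then an inconsistent name.
print(validate_event("Signed Up", {"plan": "pro"}))
print(validate_event("signed_up", {"plan": "pro"}))
```

Keeping the plan in one shared place is what lets every team agree on what "Signed Up" means, and catching `signed_up` at the source avoids the inconsistent event naming described above.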

That’s a wrap!

As the curtain falls on Synapse 2019, we’d like to thank our speakers, customers, partners, and friends for making the event such a success. If you missed it, we’ll have videos of the sessions available soon on the Synapse website.

We’ve got plenty of exciting events planned for the coming months. Watch this space!
