Fixing IAM policies is hard: what we did to solve it

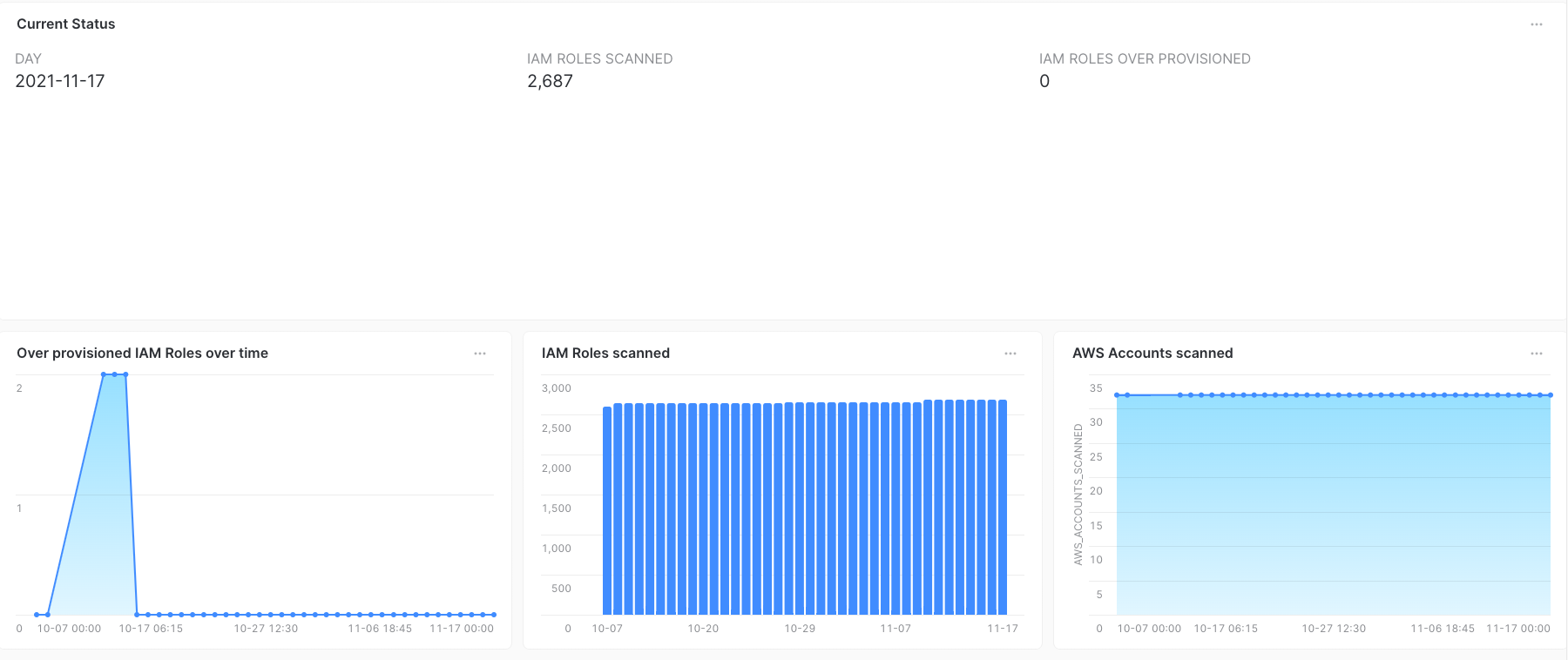

Our engineers created IAM Roles that posed risks to security. We took inventory & resolved the problem.

Enablement has always been a mantra at Segment. We strive to give engineers a great developer experience, which would make them more productive, engaged, and knowledgeable on the systems they build and maintain.

At Segment we use Infrastructure as Code to manage our AWS infrastructure. We want our engineers to be knowledgeable of the whole stack, so we all use Terraform to manage AWS resources and have a gitops flow to make changes to infrastructure.

In the past, we (security) haven’t provided enough safeguards for engineers to safely iterate on their infrastructure on AWS. Our engineers can create, update, and delete resources as they need to, but we didn’t previously have a feedback loop to inform them of the risk of the changes they were applying.

Without proper feedback, developers unknowingly created IAM Roles that posed security risks. And after years of not providing safeguards to engineers, we ended up with many resources on AWS that had misconfigurations or were over provisioned.

At the beginning of the year, we ran manual scripts that identified hundreds of IAM Roles in production AWS accounts that were heavily over provisioned. We had roles with dangerous permissions like: iam:PassRole, sts:AssumeRole, ssm:GetParameters, s3:* or dynamodb:* applied to any resources (*).

Permissions like these could allow an attacker to easily elevate permissions or exfiltrate Segment data.

The problem didn’t end there. We were still creating IAM Roles with over provisioned permissions at a fast pace, so delaying a solution meant that the problem would only get bigger.

As I mentioned before, we didn’t provide any inline feedback for engineers to be aware of the risks associated with the IAM Roles they were creating.

To prevent misconfigurations and provide a better user experience, we encouraged engineers to use Terraform modules, which are an abstraction layer that provides good defaults and prevents mistakes like creating a public S3 bucket.

One of the main contributors to the problem was that the Terraform modules themselves had over provisioned IAM policies. Initially, it made sense to have broad IAM Policies on shared modules to accommodate all the use cases engineering had, but we gave away too much for enablement’s sake and ended up with IAM Policies that were too permissive.

It was the perfect storm.

When engineers used Terraform modules to create autoscaling groups, the IAM Role assigned to the instance profile of the EC2 instance would already have bad IAM policies.

Over time, more and more IAM Roles were created using the existing modules. This resulted in hundreds of roles with dangerous permissions.

Throughout this process, security didn’t have any input or visibility into what was going on, so engineers had no way of knowing the risks of the resources they were creating.

When we ran a manual script to gauge how widespread over provisioned IAM Roles were, we quickly concluded that we needed to upgrade our approach.

Programmatically identifying bad IAM Policies is not as straightforward as it might seem. IAM Roles can have multiple IAM Policies attached and even inline policies. On top of that, IAM policies can have multiple statements with Allow or Deny clauses as well as conditions applied to them. That makes identifying the effective permissions really difficult.
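To illustrate why reading policy documents directly can mislead, here is a minimal Python sketch. The function name and the example policy are hypothetical and not taken from our tooling. It scans a single policy document for Allow statements on any (*) resource and ignores everything else.

```python
def naive_wildcard_allows(policy_document):
    """Return actions that some Allow statement grants on resource "*".

    Deliberately naive: it ignores Deny statements, conditions, and any
    other policies attached to the same role.
    """
    statements = policy_document.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        if "*" in resources:
            flagged.extend(actions)
    return flagged


# Hypothetical policy: the second statement explicitly denies what the first allows.
example_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        {"Effect": "Deny", "Action": "s3:*", "Resource": "*"},
    ],
}

flagged = naive_wildcard_allows(example_policy)
```

The scan flags `s3:*` even though the explicit Deny means the role cannot actually use it. A scanner would need to evaluate every attached and inline policy together to get the effective permissions right.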

Fortunately, AWS provides an IAM policy simulator API that does exactly that. Given an IAM Role, you can query whether the role can perform an operation. There is no need to even look at the policies!

You can run something like: “Can this IAM Role perform sts:AssumeRole on any (*) resources?”

The API tells you whether the role is allowed to perform that action.
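A query like that could look roughly like the Python sketch below. It assumes a boto3 IAM client is passed in (for example `boto3.client("iam")`) and uses its `simulate_principal_policy` call. The function name and the way results are filtered are illustrative assumptions, not our production code.

```python
def risky_actions_for_role(iam_client, role_arn, actions):
    """Return the subset of `actions` the role may perform on any (*) resource.

    `iam_client` is assumed to be a boto3 IAM client. For long action lists
    the response can be truncated; pagination is omitted to keep this short.
    """
    response = iam_client.simulate_principal_policy(
        PolicySourceArn=role_arn,
        ActionNames=list(actions),
        ResourceArns=["*"],
    )
    return [
        result["EvalActionName"]
        for result in response["EvaluationResults"]
        # Other possible decisions are "explicitDeny" and "implicitDeny".
        if result["EvalDecision"] == "allowed"
    ]
```

Passing the client in as an argument keeps the function easy to exercise with a stub, without touching a real AWS account.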

These are the IAM Policies we wanted to alert on when paired with any (*) resource:

The next step was setting up a pipeline to query all the IAM Roles from our AWS Organization and use the IAM Simulator API to check for over provisioned policies. We used multiple AWS Lambda functions to collect all that information and keep it on a DynamoDB table.
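As a rough sketch of one stage of such a pipeline, the Python below walks every role in a single account and stores one item per role. It assumes `iam_client` is a boto3 IAM client, `table` is a boto3 DynamoDB Table resource, and `check_role` is a callable that returns the risky actions for a role. The item attribute names are hypothetical, and iterating over the accounts of an AWS Organization is left out.

```python
def scan_roles(iam_client, table, actions, check_role):
    """Check every IAM Role in one account and store the result per role.

    check_role(iam_client, role_arn, actions) is assumed to return the list
    of actions the role is allowed to perform on any (*) resource.
    Returns the number of roles scanned.
    """
    paginator = iam_client.get_paginator("list_roles")
    scanned = 0
    for page in paginator.paginate():
        for role in page["Roles"]:
            risky = check_role(iam_client, role["Arn"], actions)
            table.put_item(
                Item={
                    "role_arn": role["Arn"],  # hypothetical attribute names
                    "role_name": role["RoleName"],
                    "risky_actions": risky,
                }
            )
            scanned += 1
    return scanned
```

In a Lambda-based setup, a handler could call a function like this once per account, with a client created from that account's credentials.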

From there, we wanted all these metrics to end up in a single warehouse, so we used Segment to make that happen.

We configured a Segment source and a destination (Snowflake). Then we implemented a Lambda function that runs every day and makes a “Track” API call for every IAM Role to the Segment source we had configured, which syncs hourly with Snowflake, our warehouse.
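A “Track” call for one role could be sketched in Python as follows, using Segment's HTTP Tracking API. The event name, the property names, and the choice of the role ARN as `userId` are assumptions made for illustration; the record shape is hypothetical as well.

```python
import base64
import json
import urllib.request

SEGMENT_TRACK_URL = "https://api.segment.io/v1/track"


def build_track_payload(role_record):
    """Build the JSON body of a Track call for one scanned IAM Role."""
    return {
        "userId": role_record["role_arn"],  # assumption: ARN used as identifier
        "event": "IAM Role Scanned",        # hypothetical event name
        "properties": {
            "role_name": role_record["role_name"],
            "risky_actions": role_record["risky_actions"],
            "is_overprovisioned": bool(role_record["risky_actions"]),
        },
    }


def send_track(write_key, payload):
    """POST the payload to Segment; the write key is the basic-auth username."""
    token = base64.b64encode(f"{write_key}:".encode()).decode()
    request = urllib.request.Request(
        SEGMENT_TRACK_URL,
        data=json.dumps(payload).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

A daily Lambda handler would read each stored role record, call `build_track_payload`, and pass the result to `send_track` with the source's write key.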

This put the metrics in the same warehouse as the rest of our data and let us create graphs that our leaders could easily understand.

With this reporting mechanism, we identified more than 200 IAM Roles in production with the over provisioned APIs that we listed above.

In order to fix them, we had to update Terraform modules, the infrastructure that used those modules, and also rotate a lot of infrastructure. It was a multi-quarter effort led by the cloud security team that was made possible by all the engineering teams that collaborated on it.

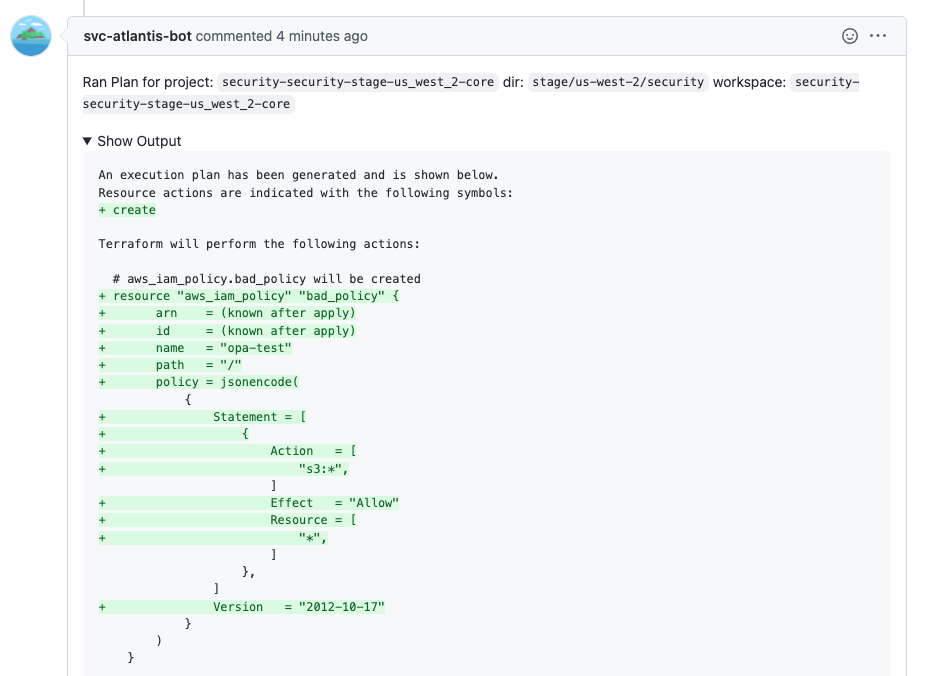

When engineers make infrastructure changes through Terraform and Atlantis, they are given information on the resource changes that would be applied.

In the example below, when creating an IAM Policy, you can identify the permissions that this policy will have.

However, we didn’t have a way to warn engineers about the consequences of their changes.

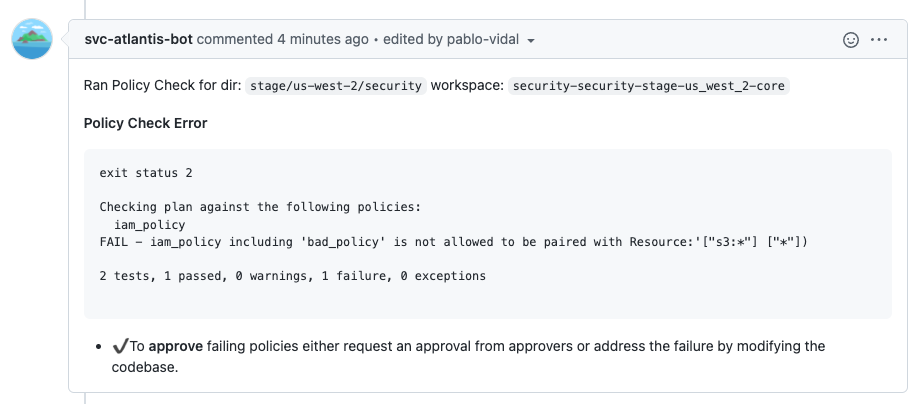

Providing feedback at the right time is critical to provide a good developer experience, which is why we wanted to include that as part of the Atlantis pipeline. Since we are using Atlantis for applying Terraform changes, we could easily enable Conftest to do OPA policy checks.

We created an OPA policy check to prevent the use of APIs such as iam:PassRole paired with any resource (*).
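The real check is an OPA policy evaluated by Conftest. The Python sketch below only illustrates the kind of logic such a check could apply to the JSON form of a Terraform plan (the output of `terraform show -json`). The function names and the blocked-action set are assumptions, and only `aws_iam_policy` resources are inspected.

```python
import json

# Assumption: iam:PassRole is the example from the post; extend as needed.
BLOCKED_ACTIONS = {"iam:PassRole"}


def _as_list(value):
    """IAM allows a string or a list for Action, Resource, and Statement."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_violations(plan):
    """Return messages for blocked actions allowed on any (*) resource."""
    violations = []
    for change in plan.get("resource_changes", []):
        if change.get("type") != "aws_iam_policy":
            continue
        after = (change.get("change") or {}).get("after") or {}
        policy_json = after.get("policy")
        if not policy_json:  # deleted resource or value unknown at plan time
            continue
        document = json.loads(policy_json)
        for stmt in _as_list(document.get("Statement")):
            if stmt.get("Effect") != "Allow":
                continue
            if "*" not in _as_list(stmt.get("Resource")):
                continue
            for action in _as_list(stmt.get("Action")):
                if action in BLOCKED_ACTIONS:
                    violations.append(f"{change['address']}: {action} on *")
    return violations
```

This sketch matches action names exactly, so it would miss wildcards such as `iam:*` or differently cased names. A production check would need to handle those cases.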

Engineers who want to create a role with such permissions are now blocked from making that configuration change and given feedback on what the issue is. Since there are situations where those changes are needed, we also maintain a list of users who can override the OPA policy checks.

The IAM Roles metric we generate is not currently integrated with other metrics we pull from our AWS accounts. Moving forward, we want to include it as part of a greater system that provides visibility into the security risks of a given AWS account.

We are also looking to expand the number of Terraform checks we perform, to ensure developers have a feedback loop for provisioning infrastructure securely.
