Observability in the AI Era

Learn why observability remains such a challenge for businesses, and how to overcome these obstacles to better protect data quality and the accuracy of your AI & ML models.

Do you know what’s happening inside your tech stack? Most businesses don’t. A recent study found that only 1 in 10 organizations have full observability into their application environments.

It’s the statistical equivalent of a jump scare. Without visibility into the inner workings of your tech stack, and into how data moves between its tools and systems, you find out about data quality issues and security risks late. In short, you end up relying on guesswork to troubleshoot a problem, which means spending far more time searching for a solution.

But why is observability such a challenge? The answer boils down to several factors, like the complexity of tech stacks, the surge in data volume and variety (i.e. big data), along with the recent breakout of more advanced AI and ML models, whose accuracy depends on the data being used to train them.

It all began with monolithic architectures.

As their name suggests, monolithic architectures act as a single unit where every component is connected. If you want to make a change, that means redeploying the entire application. As you can imagine, this quickly becomes complicated and cumbersome. Changing one component can impact the performance of the entire system, making it difficult to stay agile or scale.

So, there was a pivot to a more modular architecture: microservices. With this approach, each component or service functions independently of the others. This means that if an update or redeployment of one service goes wrong, it won’t negatively impact the other components.

While microservices made development more dynamic, they also made tech stacks (and observability) more complex. For one, cloud-native applications scale up and down based on demand, so observability solutions have to handle these fluctuations as well. Then, there’s the sheer amount and variety of data being generated. Businesses often struggle to consolidate the data that lives in different tools and systems, which can make it difficult to identify performance issues as they arise.

Continuous integration and delivery (CI/CD) pipelines also mean that application environments are constantly evolving. Attempting to manage performance manually is too time-consuming, significantly slowing down application release cycles. As a result, organizations have to embrace a new approach to data observability that prioritizes:

Automated performance management using AI & ML to dynamically monitor environments with minimal manual effort.

Real-time monitoring to quickly pinpoint root causes when issues arise.

Automated handling of diverse data formats and dynamic configuration of alerting rules as environments change.
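
As a rough illustration of alerting rules that adjust as an environment changes, the sketch below derives its threshold from a rolling window of recent samples (for example, request latency) rather than from a fixed value. The class name `RollingThresholdAlert`, the window size, and the three-standard-deviation rule are assumptions chosen for illustration, not the behavior of any particular observability product.

```python
from collections import deque
from statistics import mean, pstdev


class RollingThresholdAlert:
    """Flags samples that sit far above a rolling baseline of recent values."""

    def __init__(self, window=20, sigmas=3.0, min_samples=5, min_spread=1.0):
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.sigmas = sigmas
        self.min_samples = min_samples
        self.min_spread = min_spread  # floor so a flat baseline is not hypersensitive

    def observe(self, value):
        """Record a sample and return True if it breaches the current threshold."""
        breached = False
        if len(self.samples) >= self.min_samples:
            spread = max(pstdev(self.samples), self.min_spread)
            threshold = mean(self.samples) + self.sigmas * spread
            breached = value > threshold
        self.samples.append(value)
        return breached


# Hypothetical latency samples in milliseconds.
alert = RollingThresholdAlert(window=10)
for latency_ms in [100, 102, 98, 101, 99, 103, 500]:
    if alert.observe(latency_ms):
        print(f"latency alert: {latency_ms} ms")
```

Because the baseline is recomputed from recent samples, the threshold moves as traffic patterns shift, which is one simple way to avoid hand-tuning alert rules after every deployment.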

As businesses make large language models (LLMs) and generative AI integral to their operations, visibility into these systems remains crucial – and far from clear cut.

AI doesn’t differentiate between good and bad data. It simply learns patterns and makes decisions based on the input provided. Data quality issues like bias, incorrect standardization, improper research methods, or just incorrect information can lead to “bad AI” that produces unreliable, unfair, or even dangerous outputs. As the saying goes: “garbage in, garbage out.”

These aren’t just hypothetical examples. We’ve seen very real instances of how bad AI can lead to potentially disastrous consequences. In 2018, Amazon scrapped an AI recruiting tool after discovering it was biased against female candidates. The tool was designed to streamline hiring by rating job applicants' resumés and recommending top contenders. But the problem stemmed from the historical data used to train the AI. Amazon's models were trained on 10 years' worth of resumés submitted to the company, and, given the male dominance in the tech industry, most of these applications came from men. The AI learned this pattern and began to systematically favor male applicants.

Incidents like this drive home how much data quality shapes the performance and behavior of AI models. Gartner estimates that poor data quality costs organizations an average of $12.9 million a year, but the societal costs of biased or malfunctioning AI could be even greater due to AI’s potential speed and scale.

This is where observability comes in. Observability helps identify issues like biased outcomes, unexpected behaviors, or performance degradation by providing visibility into AI systems. By understanding what data is being used to train AI models, and by keeping a running record of user and system activity (e.g., data transformations, access patterns), businesses can verify that incoming data is accurate and privacy compliant (and, as a result, that the AI output should be too).
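
To make the idea of inspecting training data a little more concrete, here is a minimal sketch that reports how each group is represented in a dataset and flags any group that falls below a chosen share. The function name `representation_report`, the `gender` field, and the 30% floor are illustrative assumptions. A real bias audit would look at far more than raw counts.

```python
from collections import Counter


def representation_report(records, field, min_share=0.3):
    """Return each group's share of the records and whether it is below min_share."""
    # Records missing the field are grouped under "unknown" instead of raising.
    counts = Counter(record.get(field, "unknown") for record in records)
    total = sum(counts.values())
    return {
        group: {
            "share": count / total,
            "underrepresented": count / total < min_share,
        }
        for group, count in counts.items()
    }


# Hypothetical training records, skewed the way historical hiring data can be.
training_records = [{"gender": "male"}] * 8 + [{"gender": "female"}] * 2
for group, stats in representation_report(training_records, "gender").items():
    print(group, stats)
```

A check like this is cheap to run whenever a training set is refreshed, so skew is noticed before a model learns it.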

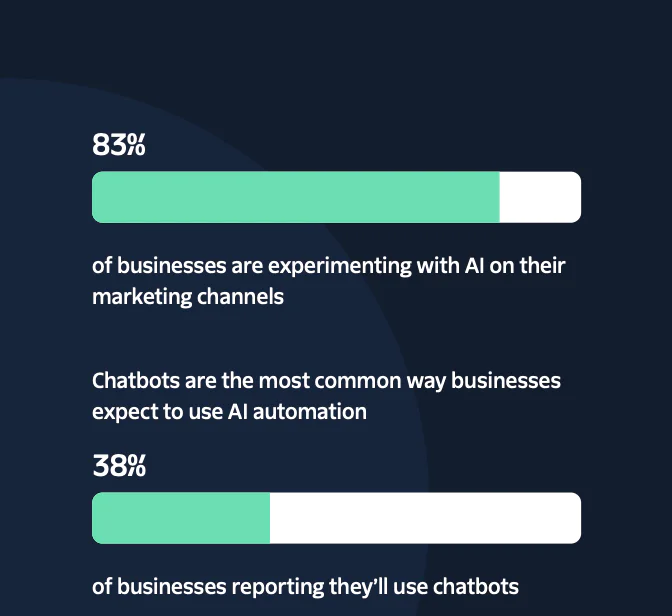

Take genAI chatbots, which are surging in popularity thanks to recent advancements in LLMs. Insight into how users interact with these chatbots is critical to understanding how the AI is learning and how these interactions fit into the larger user experience.

With Twilio Segment, you can instrument your AI copilots to track prompts, responses, and conversation IDs. This helps you track everything from what data your copilot pulled from the data warehouse to the media it generated at a customer’s request. (Here's a closer look at our AI copilot spec.)
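
The sketch below shows one way prompts and responses could be tied together with a shared conversation ID. It is only an illustration: the event names, property names, and the `ConversationTracker` class are hypothetical placeholders rather than the actual AI copilot spec, and `send` stands in for whatever tracking client you use.

```python
import uuid
from datetime import datetime, timezone


def build_copilot_event(event, conversation_id, user_id, text):
    """Build a track-style payload; the field names here are illustrative only."""
    return {
        "event": event,
        "userId": user_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "properties": {"conversation_id": conversation_id, "text": text},
    }


class ConversationTracker:
    """Tags every prompt and response in one conversation with the same ID."""

    def __init__(self, user_id, send):
        self.user_id = user_id
        self.send = send  # any callable that accepts the payload dict
        self.conversation_id = str(uuid.uuid4())

    def prompt(self, text):
        self.send(build_copilot_event(
            "Copilot Prompt Sent", self.conversation_id, self.user_id, text))

    def response(self, text):
        self.send(build_copilot_event(
            "Copilot Response Received", self.conversation_id, self.user_id, text))


# Collect events in a list here; in practice `send` would forward them onward.
sent = []
tracker = ConversationTracker("user-123", sent.append)
tracker.prompt("Which plan has the highest churn?")
tracker.response("The starter plan, based on last quarter's data.")
print(len(sent), "events share conversation", sent[0]["properties"]["conversation_id"])
```

Keeping the conversation ID on every event is what lets you reconstruct a full exchange later, even when prompts and responses arrive as separate records.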

Only well-managed data has value, and observability is an investment in your organization’s ability to make data-informed decisions.

As businesses continue to integrate advanced AI models, maintaining clear visibility into their systems becomes essential. Effective observability not only helps manage performance but also mitigates risks like bias or inaccuracies. By leveraging automated performance management, real-time monitoring, and dynamic data handling, businesses can better navigate these complexities and make data-informed decisions with confidence.

Learn more about the comprehensive set of tools Twilio Segment offers for observability.
