Six steps to high-quality data: Optimizing AI-driven personalization

Achieving high-quality data is crucial for effective AI-driven personalization. This blog outlines six steps you can take to ensure you are working off of high-quality data.

Recently, I received an email from a company offering me 20% off an item I had put in my cart. The problem was that the email arrived days after I had already purchased the item.

What did that interaction teach me? Well, it might save me money to wait a few days before making a purchase. I could add the item to my cart and wait for that discount email to roll in.

But retailers don’t want this thought process to become commonplace. With items sitting in carts across my browsers and my phone, I might lose interest in the original item, find a more affordable option, or forget about it altogether.

With so many variables, it shouldn’t be surprising that $4 trillion is lost every year to shoppers abandoning their carts.

Imagine a world where, instead, each customer received personalized marketing communication based on their unique relationship with the brand. Businesses that adopt artificial intelligence are finding that this level of personalization is possible. What used to be a one-to-many relationship can start to feel like a one-to-one relationship.

According to the Twilio Segment 2023 State of Personalization report, 92% of companies are already using AI-driven personalization to drive growth in their business.

Yet, in the same report, 31% of users cite poor data quality as the biggest obstacle to leveraging AI in their business.

Not all data is created equal. So in this blog, we outline six steps your business can take to achieve high-quality data, which can lead to more personalized customer interactions, greater trust, and repeat business.

Just because you implement AI doesn’t mean you’ll immediately send top-tier, personalized content. After all, AI is only as good as the data you share with it. High-quality data is what bridges the gap between delivering personalized content and making sure that personalization is actually accurate.

Unfortunately, it’s all too easy to end up with poor-quality data. Let’s take a look at the havoc it can wreak.

Different teams within your organization often rely on different sources of truth for their data. The marketing team might use a customer relationship management (CRM) system, analysts a data warehouse, and customer success might file all customer information in tickets.

With data spread across disparate systems, it becomes incredibly difficult to see a single view of the customer. Reconciling these sources is time-consuming, and it becomes harder to derive meaningful insights and make reliable decisions.

This often results in engineers pulling lists manually to share with marketing teams. Those lists go stale quickly and create a lag between insight and action.
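To make the idea of a “single view of the customer” concrete, here is a minimal Python sketch that merges records from three hypothetical exports (a CRM, a warehouse table, and support tickets) into one profile per customer. It assumes every source carries an email address and uses a normalized email as the only join key; the source and field names are illustrative and not taken from any real system.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple

# Hypothetical exports from three systems; field names are illustrative only.
crm = [{"email": "Ana@Example.com ", "name": "Ana Ruiz", "plan": "trial"}]
warehouse = [{"email": "ana@example.com", "lifetime_value": 120.0}]
tickets = [{"customer_email": "ANA@example.com", "open_tickets": 2}]


def normalize_email(raw: str) -> str:
    """Trim whitespace and lowercase so the same address always matches."""
    return raw.strip().lower()


def build_profiles(
    sources: List[Tuple[List[Dict[str, Any]], str]]
) -> Dict[str, Dict[str, Any]]:
    """Merge records from every source into one profile per normalized email.

    Each source is a (records, email_field) pair. If two sources share a
    field name, the later source wins.
    """
    profiles: Dict[str, Dict[str, Any]] = defaultdict(dict)
    for records, email_field in sources:
        for record in records:
            key = normalize_email(record[email_field])
            # Copy every field except the raw email, then store the normalized one.
            profiles[key].update({k: v for k, v in record.items() if k != email_field})
            profiles[key]["email"] = key
    return dict(profiles)


profiles = build_profiles([(crm, "email"), (warehouse, "email"), (tickets, "customer_email")])
print(profiles)
```

All three records collapse into a single `ana@example.com` profile even though each system spelled the address differently. Production identity resolution usually weighs several identifiers (user IDs, device IDs, phone numbers) rather than email alone; this sketch only shows why normalizing a shared key matters.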

Having data in multiple forms can:

Introduce inconsistencies, redundancies, and inaccuracies that degrade data quality.

Make it difficult to ensure data integrity and uniformity, since inconsistent formatting, naming conventions, or data types cause confusion and errors during analysis.

Create duplicate records, making it hard to identify the true, up-to-date information.

Ultimately, bad data can lead to poor customer experiences, lost loyalty, and lost revenue.

In a world where we rely on AI to improve customer experiences and drive growth, we must strive for high-quality data as the cornerstone of accurate predictions, personalized interactions, and informed decision-making. Without it, businesses risk compromising the effectiveness of their AI initiatives.

Good data doesn’t just happen. It’s the result of investing the appropriate time and resources into maintaining it.

We’ve outlined six steps we recommend to ensure high-quality data throughout your organization and a more consistent, reliable approach to AI.

1. Audit your existing data

Take the time to review the existing data your company relies on. Bring in stakeholders who can explain how they input data and how they rely on it in their jobs. Then map out where your data is stored and start your audit report.

Search for duplicate data, spelling errors, conflicting naming conventions, and other issues that might disrupt your operations, analyses, or campaign performance. Record each issue in the report, then designate a team member to fix them.
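Some of these checks can be scripted. The sketch below assumes your audit data has been exported as a list of Python dicts with hypothetical `email` and `country` fields. It flags emails that collide once case and whitespace are ignored, lists the distinct spellings of a field for a human to review, and groups event names that differ only in case or separators. The function names are illustrative, not part of any tool.

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical rows and event names gathered during an audit.
rows = [
    {"id": 1, "email": "sam@example.com", "country": "US"},
    {"id": 2, "email": "Sam@Example.com ", "country": "USA"},
    {"id": 3, "email": "lee@example.com", "country": "United States"},
]
event_names = ["Order Completed", "order_completed", "orderCompleted", "Cart Viewed"]


def find_duplicate_emails(records: List[Dict]) -> List[str]:
    """Emails that occur more than once after ignoring case and surrounding whitespace."""
    counts = Counter(r["email"].strip().lower() for r in records)
    return sorted(email for email, n in counts.items() if n > 1)


def distinct_values(records: List[Dict], field: str) -> List[str]:
    """All raw spellings of a field, so a reviewer can spot conflicting values."""
    return sorted({r[field] for r in records})


def naming_conflicts(names: List[str]) -> List[List[str]]:
    """Group event names that collapse to the same lowercase letters and digits."""
    groups: Dict[str, List[str]] = {}
    for name in names:
        key = re.sub(r"[^a-z0-9]", "", name.lower())
        groups.setdefault(key, []).append(name)
    return [group for group in groups.values() if len(group) > 1]


print(find_duplicate_emails(rows))       # ['sam@example.com']
print(distinct_values(rows, "country"))  # ['US', 'USA', 'United States']
print(naming_conflicts(event_names))     # [['Order Completed', 'order_completed', 'orderCompleted']]
```

Scripts like this only surface candidates; deciding which spelling is correct (is “US” or “United States” the standard?) is still a judgment for the stakeholders in the audit.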

We recommend auditing your data twice a year or once a quarter, so it stays accurate and each audit remains manageable.

2. Establish a clear data tracking plan

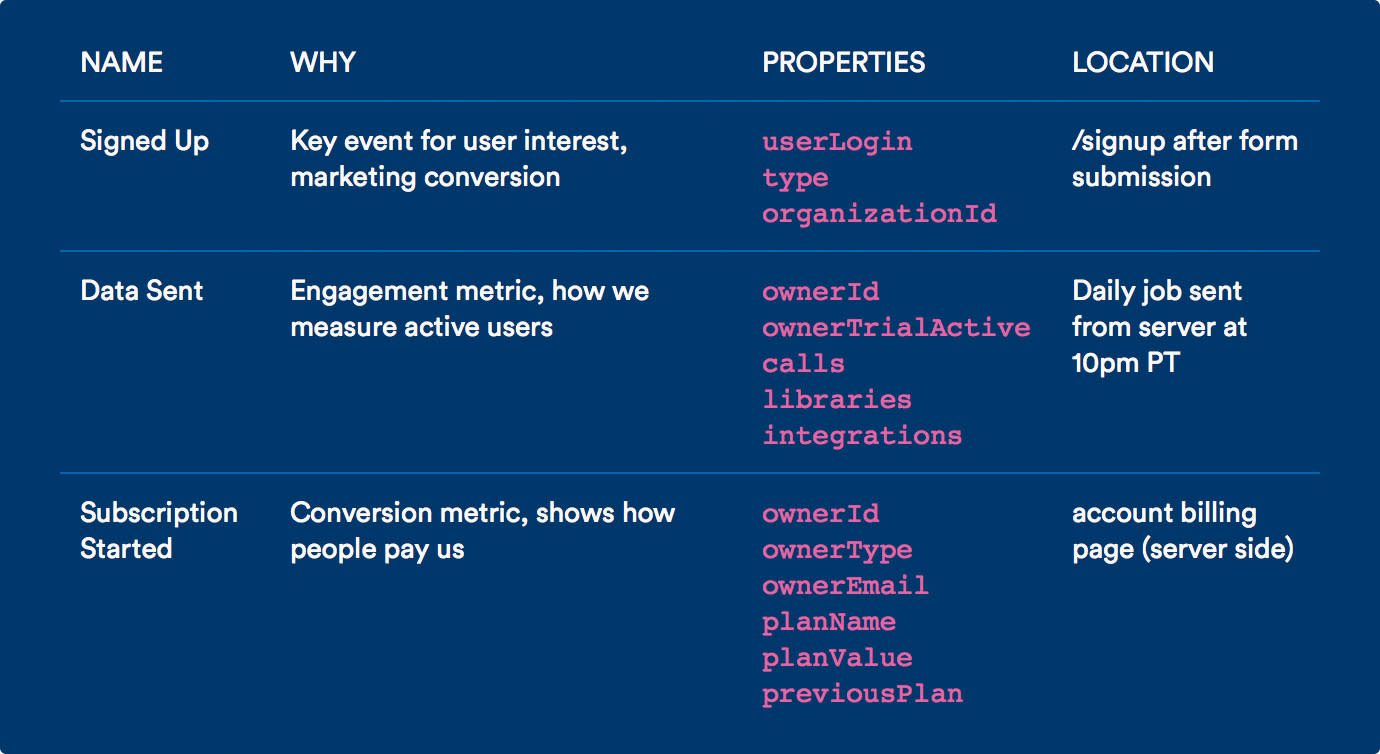

A tracking plan is a “source of truth” document used across teams to help standardize how data is tracked and align teams around one strategy for data collection.

A tracking plan lists events (i.e., user actions), each paired with a description. These events map the most important steps of the customer journey, from free trial sign-up to recurring subscription to churn.
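One way to keep a tracking plan machine-readable as well as human-readable is to store it as data. The sketch below is a hypothetical structure, not a Segment file format: each entry pairs an event name with its description and the properties (with expected Python types) it should carry. The event names are illustrative examples of the trial-to-churn journey.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PlannedEvent:
    """One tracking-plan entry: the event, what it means, and its properties."""
    name: str
    description: str
    # Property name -> expected Python type (a simplification for illustration).
    properties: Dict[str, type] = field(default_factory=dict)


# Hypothetical plan covering the journey from free trial to churn.
TRACKING_PLAN: Dict[str, PlannedEvent] = {
    event.name: event
    for event in [
        PlannedEvent("Trial Started", "User signed up for a free trial.", {"plan": str}),
        PlannedEvent("Subscription Started", "User converted to a paid plan.",
                     {"plan": str, "price": float}),
        PlannedEvent("Subscription Cancelled", "User cancelled a paid plan (churn).",
                     {"reason": str}),
    ]
}

# Print the plan as a simple reference sheet for other teams.
for event in TRACKING_PLAN.values():
    props = ", ".join(f"{k}: {t.__name__}" for k, t in event.properties.items())
    print(f"{event.name} ({props}): {event.description}")
```

Keeping a plan like this in version control lets one source of truth drive both the documentation teams read and any automated validation built on top of it.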

3. Standardize naming conventions

Now that you better understand the data you collect, it’s time to standardize how it’s named. Consistent names keep data entries uniform and ensure that one event isn’t counted multiple times under different names.

Standardized naming conventions also make it possible to automatically block events that don’t adhere to the tracking plan, protecting data at scale.
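As an illustration of an automated naming check, the sketch below assumes an Object Action convention in Title Case (for example, “Order Completed”), which is one common choice; substitute whatever rule your own tracking plan specifies. The pattern and helper names are hypothetical.

```python
import re

# Assumed convention: two or more Title Case words, object first then action,
# e.g. "Order Completed". Swap in the rule your tracking plan specifies.
EVENT_NAME_PATTERN = re.compile(r"[A-Z][a-z0-9]*( [A-Z][a-z0-9]*)+")


def follows_convention(name: str) -> bool:
    """True if the whole event name matches the assumed Object Action pattern."""
    return EVENT_NAME_PATTERN.fullmatch(name) is not None


def suggest_name(name: str) -> str:
    """Rewrite snake_case, kebab-case, or camelCase into Title Case words."""
    words = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", " ", name)  # split camelCase
    words = re.sub(r"[_\-]+", " ", words)                 # split on _ and -
    return " ".join(w.capitalize() for w in words.split())


for name in ["Order Completed", "order_completed", "orderCompleted", "cart-viewed"]:
    status = "ok" if follows_convention(name) else f"rename to {suggest_name(name)!r}"
    print(f"{name}: {status}")
```

A check like this can run in code review or CI, so a non-conforming name is caught before it ever ships, rather than being cleaned up in a later audit.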

4. Block bad data at the source

With a customer data platform (CDP) like Twilio Segment, you can block data that doesn’t meet your standards from ever reaching your downstream destinations. This happens within the Tracking Plan feature.

For example, if you implemented an event with a typo in its name, you could prevent that event from reaching your downstream tools. You also have the option to forward the blocked event elsewhere so it can be cleaned and replayed later, ensuring no data is lost.

Segment is granular enough that you can block just the properties that don’t match the spec, or block the entire event.
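The blocking behavior described above can be modeled in plain Python. This is not Segment’s API or implementation; it is a hypothetical sketch of the same idea, assuming a tracking plan that maps each event name to its allowed properties and their types. Events missing from the plan are quarantined whole, while non-conforming properties are either stripped (`mode="properties"`) or cause the whole event to be quarantined (`mode="event"`). The `quarantine` list stands in for the “forward elsewhere to clean and replay” step.

```python
from typing import Any, Dict, List, Optional, Tuple

Event = Dict[str, Any]

# Hypothetical tracking plan: event name -> {allowed property: expected type}.
PLAN: Dict[str, Dict[str, type]] = {
    "Order Completed": {"order_id": str, "total": float},
    "Cart Viewed": {"cart_id": str},
}


def enforce(event: Event, mode: str = "properties") -> Tuple[Optional[Event], Optional[Event]]:
    """Return (event to forward downstream, event to quarantine for replay).

    mode="properties" strips only non-conforming properties;
    mode="event" quarantines the whole event on any violation.
    """
    spec = PLAN.get(event["event"])
    if spec is None:
        # Unplanned name (for example, a typo): block it but keep a copy.
        return None, event
    props = event.get("properties", {})
    bad = {k: v for k, v in props.items() if k not in spec or not isinstance(v, spec[k])}
    if not bad:
        return event, None
    if mode == "event":
        return None, event
    good = {k: v for k, v in props.items() if k not in bad}
    return {**event, "properties": good}, {**event, "properties": bad}


incoming: List[Event] = [
    {"event": "Order Completed", "properties": {"order_id": "A1", "total": 42.5}},
    {"event": "Order Compelted", "properties": {"order_id": "A2", "total": 10.0}},  # typo
    {"event": "Cart Viewed", "properties": {"cart_id": "C9", "coupon": "SAVE20"}},  # unplanned property
]
forwarded: List[Event] = []
quarantine: List[Event] = []
for e in incoming:
    ok, held = enforce(e)
    if ok is not None:
        forwarded.append(ok)
    if held is not None:
        quarantine.append(held)
```

For brevity, the sketch does not check for missing required properties, and `isinstance` is strict about types (an integer `total` would fail a `float` spec).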

5. Choose the right analytics database

An analytics database is a scalable data management platform designed for efficient storage and retrieval of data, typically integrated within a comprehensive data warehouse or data lake. It enables rapid analysis of large datasets, allowing you to identify patterns, trends, and anomalies more swiftly than manual exploration.
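To show the kind of anomaly query an analytics database makes cheap, the sketch below uses Python’s built-in `sqlite3` module with an in-memory database as a stand-in. The table, event name, and daily counts are hypothetical sample data. It counts one event per day and flags days whose volume falls below half the daily average, a pattern that can point to a broken tracking call rather than a real change in customer behavior.

```python
import sqlite3

# In-memory SQLite stands in for an analytics database; all names and
# counts below are hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_name TEXT, event_date TEXT)")

daily_volume = {"2024-05-01": 120, "2024-05-02": 118, "2024-05-03": 31, "2024-05-04": 125}
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [("Order Completed", day) for day, n in daily_volume.items() for _ in range(n)],
)

# Count the event per day, then keep days below half the average daily count.
# The 0.5 threshold is an arbitrary choice for illustration.
QUERY = """
WITH daily AS (
    SELECT event_date, COUNT(*) AS n
    FROM events
    WHERE event_name = ?
    GROUP BY event_date
)
SELECT event_date, n
FROM daily
WHERE n < 0.5 * (SELECT AVG(n) FROM daily)
ORDER BY event_date
"""
anomalies = conn.execute(QUERY, ("Order Completed",)).fetchall()
print(anomalies)  # [('2024-05-03', 31)]
conn.close()
```

In a real warehouse the same query would run over far more rows, and you would likely compare against a rolling baseline rather than a single overall average.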

Choosing the right analytics database contributes to high-quality data and makes the analytics and insights derived from it more reliable, accurate, and trustworthy.

6. Communicate the new process to your team

You’ve done all this work to enable high-quality data; now it’s time to keep it clean. We recommend hosting a meeting with your team where you outline your new schema, share the tracking plan, explain your naming conventions, and discuss the process for tracking new events. This sets expectations and puts everyone on the same page.

Designate one person whom teams must run new events by, and establish when in the product development process events are decided. Document this process for onboarding.

Some companies even designate a single data leader who owns the schema and approves every new event that goes into apps and websites.

High-quality data leads to better AI usage within a company by improving model performance and enhancing decision-making. When AI models are trained on accurate and representative data, they can make more accurate predictions and recommendations.

High-quality data enables informed decision-making by uncovering valuable insights and trends that might not be immediately apparent. By leveraging it, companies can harness the full potential of AI for more effective, impactful outcomes.
