On January 30, we announced the availability of Regional Twilio Segment in the EU, which gives you Connections, Protocols, and Personas with data ingestion, processing, storage, and audience creation done on infrastructure hosted in the EU. Before the announcement, we had to make sure that Regional Twilio Segment in the EU would hold up under the usage levels we expected, and that meant doing not just functional testing, but also load testing. In this post, I'll talk about the load testing tool we developed, named Taz, and how it not only succeeded in giving us the confidence we needed in our EU infrastructure, but also found other unexpected uses.

Why we needed Taz

The US-based Twilio Segment infrastructure has been around for several years, so it's been scaled up and improved gradually as more and more customers used it. The infrastructure is now a battle-hardened architecture, and we took the lessons we learned from running it and applied them to the new EU-based infrastructure. However, we took the opportunity to adopt AWS Elastic Kubernetes Service (EKS) for the EU infrastructure, so we hadn’t yet tested the necessary new service configurations. Also, we expected that EU usage would ramp up much more swiftly than it had in the US, so we needed assurance sooner that it worked as expected.

Twilio Segment already has a set of end-to-end testing tools which each send small amounts of data through the platform to verify correct processing. We still needed a tool to test that the platform could withstand heavy loads, so we built Taz.

Taz's purpose is not to verify correctness, but to stress test its "target" by subjecting it to high amounts of traffic. Taz collects two primary metrics: request / call latencies and error rates. These metrics verify that the "front door" service Taz uses is working, but that service is usually just the first stop in the internal pipeline of services that make up our platform.

Fortunately, we have a vast amount of metrics for all of those pipeline services, so when Taz ramps up its load, we can watch those metrics escalate. Whether those services hold up under the stress or buckle as we make Taz spin harder is another, perhaps more satisfying, aspect of load testing.

A template-based HTTP load tester

The basic initial goal for Taz was to send traffic to our Data Ingestion (Tracking) API endpoint in the EU, the front door for Regional Twilio Segment in the EU. We needed data to send and some code to send it.

For the code part, Taz relies on Vegeta, a tool and library for drilling HTTP services at a sustained rate. Vegeta lets you define a "targeter" which supplies successive descriptions of HTTP requests to make, each called a "target". A typical target for the EU Tracking API looks like this when specified in JSON. (Base 64 strings are shortened for brevity.)

    {
      "url": "http://tracking-api.euw1.segment.com/v1/track",
      "body": "bmV2ZXJnb25uYW...dpdmV5b3V1cA==",
      "method": "POST",
      "header": {
        "Authorization": ["Basic: bmV2ZXJnb25uYW...xldHlvdWRvd24="],
        "Content-Type": ["application/json"]
      }
    }

The body of the request in this example is the base 64 encoding of the JSON for a track event. (This is effectively JSON containing JSON.) The value of the Authorization header includes the base 64 encoding of a write key that is already established in Regional Twilio Segment for accepting requests.
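To make the "JSON containing JSON" point concrete, here is a minimal Go sketch. The `target` struct and both helper functions are hypothetical stand-ins that only mirror the fields of the example above; they are not Vegeta's or Taz's own types. The sketch relies on the fact that Go's `encoding/json` writes a `[]byte` field as a base 64 string, and it assumes the write key is followed by a colon before encoding, as in HTTP Basic credentials with an empty password.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
)

// target mirrors the JSON fields of the example target.
// It is a hypothetical stand-in, not a type taken from Vegeta or Taz.
type target struct {
	URL    string              `json:"url"`
	Body   []byte              `json:"body"`
	Method string              `json:"method"`
	Header map[string][]string `json:"header"`
}

// encodeWriteKey base 64 encodes a write key followed by a colon.
// The trailing colon is an assumption (write key as user name, empty password).
func encodeWriteKey(writeKey string) string {
	return base64.StdEncoding.EncodeToString([]byte(writeKey + ":"))
}

// marshalTarget renders a target as JSON. Because Body is a []byte,
// encoding/json emits it as a base 64 string, so the event JSON becomes
// an opaque string inside the target JSON.
func marshalTarget(t target) (string, error) {
	out, err := json.Marshal(t)
	if err != nil {
		return "", err
	}
	return string(out), nil
}
```

Calling `marshalTarget` with the raw event JSON as `Body` yields a `"body"` value in the same base 64 form as the example.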

Once a targeter is established, an "attacker" reads targets from it and fires them at a specified rate (in requests per second). The attacker returns the results for its attacks, which get compiled into latency and error metrics. So, that takes care of the code needed to send data; now we just need the data to send.
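As a rough illustration of how attack results can be compiled into the two headline metrics, here is a standard-library-only sketch. The `result` and `summary` types are hypothetical, and nearest-rank percentiles are one simple choice among several; Vegeta ships its own metrics implementation, which this does not reproduce.

```go
package main

import (
	"sort"
	"time"
)

// result is a hypothetical record of one attack: how long the call took
// and the HTTP status code it returned (0 if no response arrived).
type result struct {
	Latency time.Duration
	Code    int
}

// summary holds the success rate and two latency percentiles.
type summary struct {
	Requests    int
	SuccessRate float64 // fraction of responses in the 2xx range
	P50         time.Duration
	P99         time.Duration
}

// nearestRank returns the pct-th percentile (1 to 100) of latencies that
// are already sorted in ascending order, using the nearest-rank method.
func nearestRank(sorted []time.Duration, pct int) time.Duration {
	if len(sorted) == 0 {
		return 0
	}
	rank := (pct*len(sorted) + 99) / 100 // integer form of ceil(pct/100 * n)
	if rank < 1 {
		rank = 1
	}
	return sorted[rank-1]
}

// summarize compiles raw results into a success rate and latency percentiles.
func summarize(results []result) summary {
	s := summary{Requests: len(results)}
	if len(results) == 0 {
		return s
	}
	latencies := make([]time.Duration, 0, len(results))
	successes := 0
	for _, r := range results {
		latencies = append(latencies, r.Latency)
		if r.Code >= 200 && r.Code < 300 {
			successes++
		}
	}
	sort.Slice(latencies, func(i, j int) bool { return latencies[i] < latencies[j] })
	s.SuccessRate = float64(successes) / float64(len(results))
	s.P50 = nearestRank(latencies, 50)
	s.P99 = nearestRank(latencies, 99)
	return s
}
```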

While Taz can read a file containing a canned sequence of targets, we wanted to produce an indefinitely long stream of randomized targets so that Taz could run at a high rate for as long as we wished it to. Vegeta's built-in JSON targeter can read from any Go reader, so we created a specialized template reader that generates new targets from a Go template. Generating targets from templates avoids hardcoding any data characteristics into Taz itself, which makes Taz much more flexible.
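The idea of a template-backed reader can be sketched with the standard library alone. This is not Taz's implementation: the `templateReader` type and its constructor are hypothetical names, and the sketch assumes the consumer expects one JSON object per line, so it appends a newline after each evaluation.

```go
package main

import (
	"bytes"
	"io"
	"text/template"
)

// templateReader is a hypothetical io.Reader that never reaches EOF. Each
// time its buffer runs dry, it evaluates the template again and serves the
// newly generated text followed by a newline.
type templateReader struct {
	tmpl *template.Template
	data any
	buf  bytes.Buffer
}

// newTemplateReader parses the template text with the given functions.
// The functions are where randomized data generators would be plugged in.
func newTemplateReader(text string, funcs template.FuncMap, data any) (*templateReader, error) {
	tmpl, err := template.New("target").Funcs(funcs).Parse(text)
	if err != nil {
		return nil, err
	}
	return &templateReader{tmpl: tmpl, data: data}, nil
}

// Read refills the buffer from the template when it is empty, then drains it.
func (r *templateReader) Read(p []byte) (int, error) {
	if r.buf.Len() == 0 {
		if err := r.tmpl.Execute(&r.buf, r.data); err != nil {
			return 0, err
		}
		r.buf.WriteByte('\n')
	}
	return r.buf.Read(p)
}

// Compile-time check that templateReader satisfies io.Reader.
var _ io.Reader = (*templateReader)(nil)
```

Because the reader only evaluates the template when its buffer is empty, the cost of generating targets is paid on demand, at whatever pace the consumer reads.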

Here's an abbreviated version of the Go template for generating a track event. It uses template functions for the Phony library to insert randomized data each time it's evaluated.

    {
      "type": "track",
      "userId": "{{ phony "id" }}",
      "event": "Item Purchased",
      "context": {
        "ip": "{{ phony "ipv4" }}"
      },
      "timestamp": "2012-12-02T00:30:08.276Z"
    }

That template works for an event, but we need another template for the Vegeta target. Here's that template, again slightly abbreviated. You can spot some template functions from the Sprig library, like list and base64.

    {
      "url": "{{ .BaseURL }}/v1/track",
      "body": "{{ includeEvent "track.json" "track" . }}",
      "method": "POST",
      "header": {
        "Authorization": [
          "Basic: {{ list (selectWriteKey .WriteKeys "track" .) ":" | join "" | toBytes | base64 }}"
        ],
        "Content-Type": [
          "application/json"
        ]
      }
    }

Let's unpack this template to explore what it's doing.

- The .BaseURL template variable points to the base URL for the Tracking API. This lets us use the same template for different deployments of that service.

- The includeEvent template function, defined by Taz, includes the evaluated template for a track event, base 64 encoded, inside this template. The function also has hooks to support some advanced ways to reuse previous events, which I'll explain in a little bit.

- The long expression for the Authorization header picks a write key from a list of them passed to the template and properly base 64 encodes it. Again, there are some hooks for advanced features hiding in that Taz-specific selectWriteKey function.

That's two layers of templates, but in practice there's one more layer on top. We usually want Taz to send a mix of different event types like track, identify, batch, and so on. One more template can dynamically pick a random type of event to send, using ratios for those events that we observe in production (those ratios are tweaked here for simplicity).

    {{ $p := randFloat64 }}{{ if le $p 0.6 }}
      {{ template "batchFixture.json" . }}
    {{ else if le $p 0.8 }}
      {{ template "trackFixture.json" . }}
    {{ else if le $p 0.9 }}
      {{ template "pageFixture.json" . }}
    {{ else if le $p 0.93 }}
      {{ template "identifyFixture.json" . }}
    {{ else if le $p 0.96 }}
      {{ template "groupFixture.json" . }}
    {{ else }}
      {{ template "aliasFixture.json" . }}
    {{ end }}
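The thresholds in the template above are cumulative, so the implied shares are 60% batch, 20% track, 10% page, 3% identify, 3% group, and 4% alias. The same selection logic can be sketched in Go; the `weighted` type, the `eventMix` table, and `pickEventType` are hypothetical names used only for illustration.

```go
package main

// weighted pairs an event type with the cumulative upper bound at which
// it is selected, mirroring the chain of "le" comparisons in the template.
type weighted struct {
	Name  string
	Upper float64
}

// eventMix lists the cumulative thresholds from the template. The share of
// each type is its threshold minus the previous one.
var eventMix = []weighted{
	{"batch", 0.60},    // 60%
	{"track", 0.80},    // 20%
	{"page", 0.90},     // 10%
	{"identify", 0.93}, // 3%
	{"group", 0.96},    // 3%
	{"alias", 1.00},    // 4%
}

// pickEventType maps a random value p in [0, 1) to an event type by
// returning the first entry whose cumulative threshold is at least p.
func pickEventType(p float64) string {
	for _, w := range eventMix {
		if p <= w.Upper {
			return w.Name
		}
	}
	return eventMix[len(eventMix)-1].Name
}
```

In use, the caller would pass a fresh random value each time, for example `pickEventType(rand.Float64())` with `math/rand`.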

The expressiveness of Go templates, along with Vegeta's configurability, makes it easy to change test content and behavior without delving into Taz's code. However, there's a downside with relying on templates so much.

Tool performance

We usually deploy Taz on containers "next to" what we are testing so that the load is focused on what we want to test. For example, for the Tracking API, we run Taz in the same Kubernetes cluster as the Tracking API service so that we don't overload the load balancers and other infrastructure when we aren't interested in exercising them too.

When we first started cranking up Taz's attack rate, we noticed that its reported attack rate would reach a maximum, regardless of whether we configured it to go higher. It became clear that the Taz container had become CPU-bound. Now, we could run multiple Taz replicas to further increase the overall rate for a test - and we did actually do that - but we needed each individual Taz process to go faster so that we wouldn't need to run so many replicas.

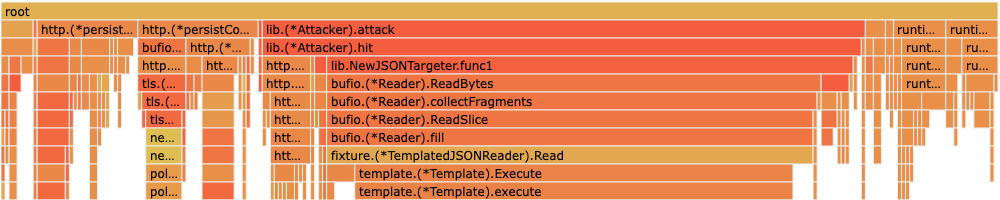

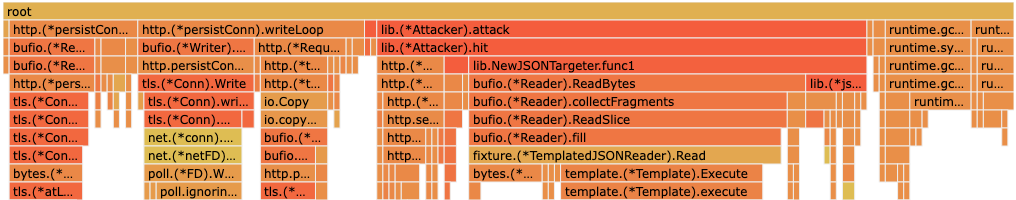

We took a look at Taz's CPU usage using the venerable pprof tool. Flame graphs soon revealed that Taz was spending a majority of its time evaluating all of those wonderful Go templates. Here's an example showing how template evaluation can dominate CPU time when the attack rate is too high.

Reuse events

We decided to reduce template evaluations by letting Taz reuse events that it had already generated for previous attacks. One consequence of this approach is that test traffic has a lot of repeated events, but that's OK for load testing because the focus is on the quantity of the data, not the variety in it.

The amount of reuse is expressed as a factor from 0.0 for none at all up to 1.0 for always (after at least one event per type is generated to get going). Each time the template reader is generating a new target, it has a random chance, controlled by the factor, to reuse the previous event - a reuse event - instead of generating a new one. Otherwise, a new event is created from a template, but that event becomes the next one that's potentially reused.
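The reuse decision can be sketched as follows. The `reuser` type and its fields are hypothetical, the random source and the event generator are injected so the behavior is easy to test, and assigning a new message ID to a reused event is left out to keep the sketch short.

```go
package main

// reuser is a hypothetical helper that decides, for each new target,
// whether to generate a new event or hand back the previous one.
type reuser struct {
	factor   float64                       // 0.0 = never reuse, 1.0 = always reuse
	random   func() float64                // returns values in [0, 1), e.g. rand.Float64
	generate func(eventType string) string // stands in for template evaluation
	last     map[string]string             // most recent generated event per type
}

// newReuser builds a reuser with an empty event cache.
func newReuser(factor float64, random func() float64, generate func(eventType string) string) *reuser {
	return &reuser{factor: factor, random: random, generate: generate, last: map[string]string{}}
}

// next returns an event of the given type and reports whether it was reused.
// The first event of each type is always generated, since there is nothing
// to reuse yet. A newly generated event becomes the next reuse candidate.
func (r *reuser) next(eventType string) (string, bool) {
	if prev, ok := r.last[eventType]; ok && r.random() < r.factor {
		return prev, true
	}
	generated := r.generate(eventType)
	r.last[eventType] = generated
	return generated, false
}
```

With a factor of 0.9, roughly nine out of ten calls to `next` skip the generator entirely, which is where the CPU savings would come from.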

With reuse in place at a factor of 0.9, CPU time spent on template evaluation is significantly reduced. This gives each individual Taz instance much more power, so that we can achieve higher overall load rates with fewer instances.

Duplicate events

Another way that events are reused is by intentional duplication. A duplicate event has the same event content as an original one, including the common field messageId. Normally, you don't set this field explicitly when using Twilio Segment, but it's automatically assigned and collected. The message ID is used to detect when the exact same event has inadvertently been sent multiple times.

Taz gives a reuse event a unique message ID that doesn't match the one for the original event, so it is not treated as a duplicate event. In contrast, a duplicate event gets the exact same message ID as before. Sending duplicate events lets Taz better simulate real-life traffic and exercises the deduplication portion of the pipeline, which wouldn't have much to do otherwise.

The amount of duplication is expressed by its own 0.0 to 1.0 factor, which is usually set at 0.01. So, it does also contribute a little to reducing template evaluation.

Both reuse and duplicate event support is implemented in the includeEvent custom template function used in the Vegeta target template. The selectWriteKey custom template function is also smart enough to know that when it sends a duplicate event, it needs to pick the same write key used for the original.
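The difference between a reuse event and a duplicate event comes down to the message ID, which a small sketch can show. The `event` type and all three functions are hypothetical. Whether a reuse event gets a newly selected write key is an assumption here, and `countUnique` is only a toy stand-in for the pipeline's deduplication, keyed on message ID alone.

```go
package main

// event is a hypothetical, simplified view of a generated event.
type event struct {
	MessageID string
	WriteKey  string
	Payload   string
}

// asReuse copies the original payload but assigns a new message ID, so the
// result is not treated as a duplicate. Passing in a newly chosen write key
// is an assumption made for this sketch.
func asReuse(orig event, newID func() string, writeKey string) event {
	return event{MessageID: newID(), WriteKey: writeKey, Payload: orig.Payload}
}

// asDuplicate returns an exact copy: same payload, same message ID, and the
// same write key that was used for the original.
func asDuplicate(orig event) event {
	return orig
}

// countUnique is a toy stand-in for deduplication: it counts the events
// that would survive if repeats of a message ID were dropped.
func countUnique(events []event) int {
	seen := map[string]bool{}
	for _, e := range events {
		seen[e.MessageID] = true
	}
	return len(seen)
}
```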

Other important features

As Taz evolved, it gained new features that were helpful for both working with it and expanding it to other uses.

Automatic stoppage

You don't want a load testing tool to continue flogging your infrastructure once it has killed it. You might want it to stop while the system is still up but struggling enough to show where the trouble spots are. An attentive tester can easily stop a load test at that point, but why not let the tool handle that?

Taz has an automatic stoppage feature that causes it to cease sending traffic once there are too few successes (in HTTP terms, responses in the 2xx range) or once call latencies are too high. For example, you could configure a minimum healthy success rate of 98% and a maximum call latency of 100 milliseconds, and as long as Taz metrics stay within those bounds, it keeps going.

Taz decides whether to stop using a simple voting system.

- When, in a span of time, the success rate is too low or the latency is too high, Taz tallies a "stop vote". Once the stop vote count reaches a limit - for example, you could configure the limit to 10 - Taz stops sending traffic.

- However, when the success rate and latency are both healthy in a span of time, Taz _removes_ a stop vote (down to a minimum of zero). This lets the test recover from transient problems, instead of terminating prematurely.
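The voting rules above can be sketched in a few lines of Go. The `stopVoter` type and its field names are hypothetical, and the sketch assumes one success rate and one latency figure per span of time, without saying which latency statistic is used.

```go
package main

import "time"

// stopVoter is a hypothetical implementation of the stop vote tally.
type stopVoter struct {
	minSuccessRate float64       // e.g. 0.98
	maxLatency     time.Duration // e.g. 100 * time.Millisecond
	voteLimit      int           // e.g. 10
	votes          int           // current tally, never below zero
}

// observe records the metrics for one span of time and reports whether the
// test should stop. An unhealthy span adds a stop vote; a healthy span
// removes one, which lets the test ride out transient problems.
func (v *stopVoter) observe(successRate float64, latency time.Duration) bool {
	if successRate < v.minSuccessRate || latency > v.maxLatency {
		v.votes++
	} else if v.votes > 0 {
		v.votes--
	}
	return v.votes >= v.voteLimit
}
```

A consequence of this scheme is that a test only stops when unhealthy spans outnumber healthy ones by the vote limit, so a brief blip followed by recovery does not end the run.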

Configuring this feature according to a system's service level objectives gives you concrete proof of whether your system achieves them at a given load level.

Template reloading

Taz is usually run in a container which includes all of its test template files. While it's easy to restart Taz to adjust attack rates and other configuration settings, it's not as easy to make changes to those templates without building a new Taz image and deploying it.

As an alternative, Taz can be configured to periodically check an AWS S3 bucket for new templates. When templates have been updated, Taz downloads them, swaps them in, and restarts ongoing tests so that they use the new templates.

This avoids the need to create new images to modify existing templates. However, a container running Taz has to have the AWS IAM permissions to read the S3 bucket. So, there's more infrastructure work necessary to use this feature, which makes it a little harder to set up for a test.

Beyond HTTP

To load test a specific component in the Twilio Segment pipeline, it's much better to send data to a point very close to that component, instead of all the way back at the Tracking API. Most of our components do not talk HTTP, but a lot of them do accept messages from either NSQ or Kafka.

So, we adapted the target and attacker code from Vegeta into separate capabilities to support NSQ and Kafka messaging. By sticking with Vegeta's established patterns, we're able to reuse other Vegeta features like rate controls and metrics collection, and overall we can use Taz in the same way regardless of where it's sending data.

For example, here is the target template for a Kafka message that holds a track event.

    {
      "addr": "{{ .KafkaAddr }}",
      "topic": "{{ .KafkaTopic }}",
      "valueBytes": "{{ includeTemplate "kafkaTrackMessage.json" . | b64enc }}"
    }

This target template tells Taz not to send an HTTP request, and instead to send a message to a Kafka broker address and topic (each specified by a template variable). Each message's content is the base 64 encoding of a separate template for a Kafka message that holds a track event. The message's format essentially holds information about an HTTP request to the Tracking API. A key line in that template is for the request body:

"body": {{ includeEvent "track.json" "track" . | b64dec | nospace }},

Hopefully this looks familiar; it reuses the same track event template that's used for HTTP testing. It also reuses the includeEvent template function, so Kafka tests get "free" reuse event and duplicate event support. Heavy reuse through templating makes it very easy to build new, complex tests quickly.

Beyond load testing

Besides stress testing Regional Twilio Segment in the EU, which we subjected to tens of thousands of events per second (well over 9000), we've employed Taz for other, unforeseen purposes.

Trickle testing

Taz is just as useful for sending a trickle (low volume) of data as it is for sending a flood. You should consider this when:

- A system is not yet used on a consistent basis. A constant trickle of test data flowing through it produces metrics that verify it's still working. You might turn off such a test once adoption has picked up.

- A system is being used consistently, but some parts of it might not be. A low volume of specialized data that exercises those parts verifies they're still working. For example, Twilio Segment can cordon off problematic traffic into a separate "overflow" data flow, and while it's very rarely used, it's critical when we need it. So, we run a "persistent" Taz test which intentionally sends a low rate of overflow events.

A trickle test, like an ordinary load test, verifies that a system is responding and processing data, but doesn't fully check it is doing the right work. You still want an end-to-end test to verify the final output. Trickle / load testing and end-to-end testing complement each other.

Personas and synthetic profiles

Twilio Segment Personas works by forming unified views of customers, called "user profiles" or just "profiles". Services that implement Personas need load testing like any other, but the interesting code paths are only followed when there are profiles to be worked on in the incoming test data. The randomized data Taz normally sends doesn't fit the bill here.

We solved this problem by first adding some special state generation to Taz that creates synthetic profiles from combinations of random user IDs, email addresses, and anonymous IDs. Then, we crafted a unique template function that knows how to select a subset of identifiers from a synthetic profile. An event template then uses these identifiers instead of stateless, random data.

Here's what a track event template looks like for Personas profile testing.

    {
      "type": "track",
      {{ $idSet := selectPersonasIdSet }}
      {{ with $idSet }}
        {{ if .HasUserId }}
          "userId": "{{ .GetUserId }}",
        {{ end }}
        {{ if .HasAnonymousId }}
          "anonymousId": "{{ .GetAnonymousId }}",
        {{ end }}
      {{ end }}
      "event": "Item Purchased",
      "context": {
        {{ if $idSet.HasEmail }}
          "traits": {
            "email": "{{ $idSet.GetEmail }}"
          },
        {{ end }}
        "ip": "{{ phony "ipv4" }}"
      },
      "timestamp": "2012-12-02T00:30:08.276Z"
    }

The selectPersonasIdSet template function picks a random synthetic profile from a pool and gives back a random set of identifiers from that profile. Each profile is built such that its identifier sets, all together, fully define the profile when used in events. After all of the identifier sets from a profile are exhausted, the profile is replaced in the pool with a fresh new one.
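Here is one way the pool behavior described above could be sketched. Only the accessor names (`HasUserId`, `GetUserId`, and so on) come from the template; the `idSet`, `profile`, and `pool` types, the choice of exactly two identifier sets per profile, and the injected `pick` function are all assumptions made for illustration.

```go
package main

// idSet is a hypothetical subset of a profile's identifiers.
// An empty string means the identifier is absent from the set.
type idSet struct{ userID, anonymousID, email string }

func (s idSet) HasUserId() bool        { return s.userID != "" }
func (s idSet) GetUserId() string      { return s.userID }
func (s idSet) HasAnonymousId() bool   { return s.anonymousID != "" }
func (s idSet) GetAnonymousId() string { return s.anonymousID }
func (s idSet) HasEmail() bool         { return s.email != "" }
func (s idSet) GetEmail() string       { return s.email }

// profile holds the identifier sets that have not been handed out yet.
type profile struct{ pending []idSet }

// newProfile builds a profile whose two identifier sets share the user ID,
// so that together they tie all three identifiers to one profile.
func newProfile(userID, anonymousID, email string) *profile {
	return &profile{pending: []idSet{
		{userID: userID, anonymousID: anonymousID},
		{userID: userID, email: email},
	}}
}

// pool is a hypothetical pool of synthetic profiles.
type pool struct {
	profiles   []*profile
	newProfile func() *profile // creates a replacement profile
	pick       func(n int) int // returns an index in [0, n), e.g. rand.Intn
}

// selectIDSet picks a random profile, removes and returns one of its
// pending identifier sets, and replaces the profile once it is exhausted.
func (p *pool) selectIDSet() idSet {
	i := p.pick(len(p.profiles))
	prof := p.profiles[i]
	j := p.pick(len(prof.pending))
	set := prof.pending[j]
	prof.pending = append(prof.pending[:j], prof.pending[j+1:]...)
	if len(prof.pending) == 0 {
		p.profiles[i] = p.newProfile()
	}
	return set
}
```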

The target template for sending these events is almost exactly the same as the usual one, except that it uses the event template for Personas track events.

    {
      "url": "{{ .BaseURL }}/v1/track",
      "body": "{{ includeEvent "trackPersonas.json" "track" . }}",
      "method": "POST",
      "header": {
        "Authorization": [
          "Basic: {{ list (selectWriteKey .WriteKeys "track" .) ":" | join "" | toBytes | base64 }}"
        ],
        "Content-Type": [
          "application/json"
        ]
      }
    }

With tests using templates like these, we were able to ensure that Twilio Segment Personas was ready for use in the EU. Since then, the team has continued to use Taz in other areas where customized data is called for. This is just one example of where Taz's flexibility led to its use for more specialized testing needs.

Lessons learned

Taz has turned out to be an unexpectedly valuable tool, thanks to several key design decisions which you may find helpful for your own internal tooling.

- Taz does one thing well: send lots of requests as quickly as possible. Because Taz does not try to achieve more, it's more generally useful, and yields unforeseen synergies with other tools.

- Reliance on Go templates, while creating some performance issues, benefits us with high levels of flexibility, because we can build new tests without code changes. New users don't need to understand how Taz works internally, just how to build test templates.

- Building in general support for the most common testing needs, like understanding Twilio Segment events, reduces the effort needed to create new Taz tests and increases the speed and breadth of adoption.

- Added features which adhere to existing, standardized code patterns, like Vegeta's target concept, get to reuse existing features associated with those patterns with no additional effort.

Taz was initially focused just on testing Regional Twilio Segment in the EU. While it succeeded there, it's been personally gratifying to see Taz succeed beyond that, because we've saved a lot of developer effort and gotten testing performed for features that perhaps couldn't have been tested as thoroughly without it. When you're as thoughtful in designing your internal tools for your internal customers - fellow developers - as you are in designing your product, both your coworkers and your customers win.
