This is a guest post from Shirley Javier, Product Manager at Taplytics, the mobile optimization platform. Try Taplytics out on the Segment platform today.

Running effective experiments is one of the most powerful strategies you have at your disposal. So much of your company’s success depends on how you evolve alongside the market, and being able to test what works for your target audience is critical.

But creating effective A/B tests requires a deep understanding of both your product experience and your customer expectations. That understanding comes from a strong base of customer data and team insights. Without those two things, striking a balance between optimizing the customer experience and furthering your business goals is impossible.

Following A/B testing best practices helps you create experiments that improve conversion rates, connect with customers, and build an engaging product experience, without negatively impacting customers or your business along the way. That makes it easier to learn what drives user engagement while adding value for your business.

1. Figure out what to test

Useful A/B tests are specific. Before you start building experiments, your team needs to determine which aspects of the product or user experience would benefit most from experimentation. Defining the test parameters first lets you move forward knowing that your experiments target changes that matter to customers.

Map out the customer journey to help your team get started. A customer journey map enables you to identify areas of improvement based on what aspects of the experience cause the most friction for users. Categorize what happens at each stage of the customer journey into:

- Activities: what people do at various stages
- Motivations: why people perform those actions
- Emotions: how people feel as they perform those actions
- Barriers: what stops them from taking action

Barriers will be the first thing you want to look at, because they are the most suitable for effective A/B testing. Removing these barriers makes it easier for users to accomplish their tasks, which leads to a better overall product experience. The easiest way to define these elements is to use your customer analytics. This data helps you pinpoint touchpoints along the journey, based on real-world customer experiences.

Understanding the typical paths customers take to learn more about your product, make a purchase, and engage with your team is the best way to identify what aspects of their experience need work.
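To make the barrier analysis concrete, here is a minimal Python sketch that computes the drop-off between consecutive journey stages and flags the largest one. It assumes you can export the number of users who reached each stage from your analytics tool and that the journey is a simple linear funnel; the stage names and counts are hypothetical.

```python
def stage_drop_off(stage_counts):
    """Return (from_stage, to_stage, drop_off_rate) for each consecutive stage pair."""
    results = []
    for (prev_name, prev_count), (next_name, next_count) in zip(stage_counts, stage_counts[1:]):
        # Share of users who reached prev_name but did not reach next_name.
        rate = 1 - next_count / prev_count if prev_count else 0.0
        results.append((prev_name, next_name, rate))
    return results


def biggest_barrier(stage_counts):
    """Return the stage transition that loses the largest share of users."""
    return max(stage_drop_off(stage_counts), key=lambda step: step[2])


# Hypothetical stage counts exported from an analytics tool.
journey = [
    ("visited_landing_page", 10_000),
    ("viewed_pricing", 4_000),
    ("started_signup", 1_200),
    ("completed_signup", 900),
]

for prev_name, next_name, rate in stage_drop_off(journey):
    print(f"{prev_name} -> {next_name}: {rate:.0%} drop-off")
print("Largest barrier:", biggest_barrier(journey)[:2])
```

A high drop-off only tells you where users leave. The activities, motivations, and emotions you mapped help explain why, and that explanation is what your test variants should address.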

2. Tie experiments to specific KPIs

Once you have a handle on the aspects of the product or user experience you want to test, define the key performance indicators (KPIs) you’ll use to track and analyze the impact of these experiments. A/B testing is only as powerful as the metrics you use to track your success. Without specific metrics, you have no objective way to judge whether a variant succeeded.

Choosing the right metrics also helps you set a baseline for each KPI, which you’ll use to track the evolution of subsequent experiments over time. Measuring how these metrics change relative to the baseline makes it easy to see whether a specific test variant supports your hypothesis.

Analyzing these KPIs is one of the highest-leverage things you can do to refine the experimentation process, because it helps you nail down exactly what works and what doesn’t. This is especially true when you pair that analysis with a well-defined A/B testing goal, which helps you connect the impact of each experiment to your overarching business objectives.

The best way to do this is to formulate your testing hypothesis as a SMART goal. SMART goals are specific, measurable, attainable, relevant, and time-bound, so they give your team all the context it needs to understand the results.
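One way to keep a SMART hypothesis honest is to write it down as data your team evaluates when the test ends. The sketch below is an illustration, not a Taplytics feature: the metric name, baseline, target lift, and dates are hypothetical, and only the measurable and time-bound parts are encoded, since relevance and attainability remain judgment calls.

```python
from dataclasses import dataclass
from datetime import date


def conversion_rate(conversions, visitors):
    """Share of visitors who converted; 0.0 when there were no visitors."""
    return conversions / visitors if visitors else 0.0


@dataclass
class SmartGoal:
    metric: str           # Specific and relevant: the single KPI this test targets
    baseline_rate: float  # Measurable: the KPI's value before the test
    target_lift: float    # Attainable: expected relative improvement, e.g. 0.10 for +10%
    end_date: date        # Time-bound: when the result is evaluated

    def target_rate(self):
        return self.baseline_rate * (1 + self.target_lift)

    def evaluate(self, observed_rate, today):
        """Return 'running', 'met', or 'not met' for the observed KPI value."""
        if today < self.end_date:
            return "running"
        return "met" if observed_rate >= self.target_rate() else "not met"


# Hypothetical goal: lift newsletter signups 10% over a 4% baseline by June 14.
goal = SmartGoal(
    metric="newsletter_signup_rate",
    baseline_rate=conversion_rate(400, 10_000),
    target_lift=0.10,
    end_date=date(2024, 6, 14),
)
print(goal.evaluate(conversion_rate(480, 10_000), today=date(2024, 6, 14)))  # prints: met
```

Comparing the observed rate with the target is only half the story; pair it with a statistical significance check (step 7) before treating the goal as met.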

3. Leverage good data

Data integrity is a fundamental element of any successful experiment. Without good data, it’s impossible to track the real-world results of your A/B tests. Customer data platforms like Segment help your team build out their experiments with a solid basis of clean and actionable data.

With the Taplytics/Segment integration, you ensure parity between both tools and save your team a lot of time analyzing results. Information automatically syncs between the two platforms, so any data you’ve collected with either tool can be used to build out your experiments.

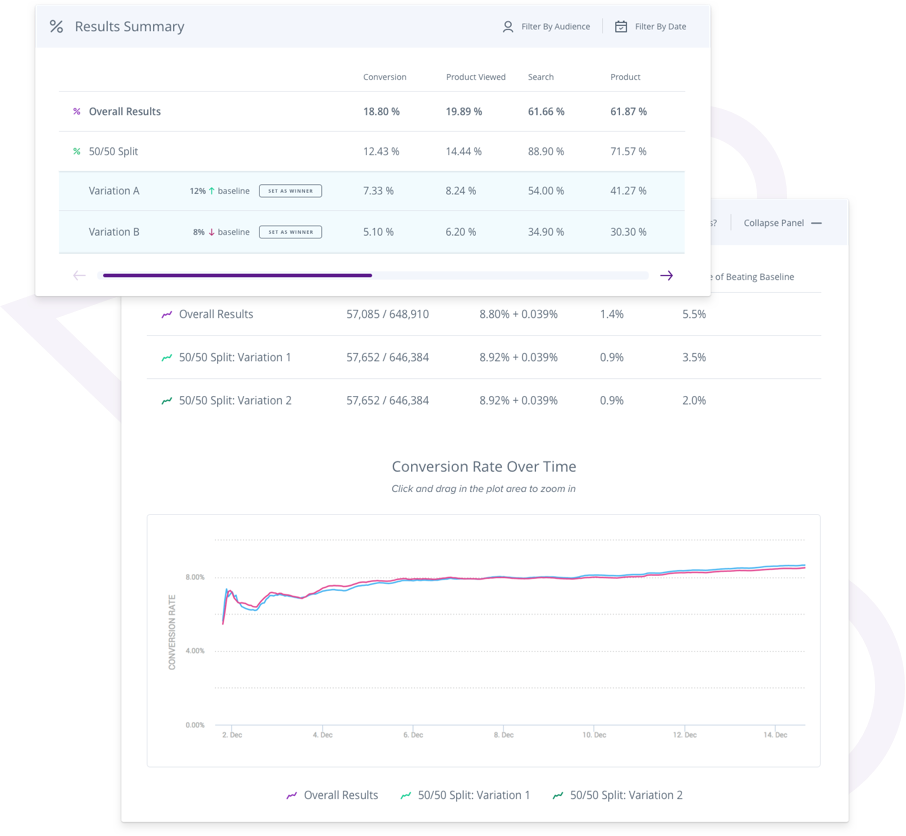

Example conversion rate experiment in Taplytics

Let’s say you’re running an experiment to track the conversion rate for your newsletter subscription landing page. If you have two plans set up, basic and premium, you can use that information to build out your test variants and manage these tests in Taplytics. That helps you narrow down specific metrics and KPIs to track on a per-experiment basis.
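Tracking metrics per experiment usually means breaking conversions down by variant and, in this example, by plan. This is a minimal sketch assuming you export one record per visitor exposed to the experiment; the field names (`variant`, `plan`, `subscribed`) and the data are hypothetical, and the code does not call Taplytics or Segment APIs.

```python
from collections import defaultdict


def conversion_by_segment(events, keys=("variant", "plan")):
    """Conversion rate for each combination of the given keys."""
    visitors = defaultdict(int)
    conversions = defaultdict(int)
    for event in events:
        segment = tuple(event[key] for key in keys)
        visitors[segment] += 1
        conversions[segment] += int(event["subscribed"])
    return {segment: conversions[segment] / visitors[segment] for segment in visitors}


def make_events(variant, plan, outcomes):
    """Build hypothetical exposure records, one per visitor."""
    return [{"variant": variant, "plan": plan, "subscribed": s} for s in outcomes]


events = (
    make_events("control", "basic", [True, False, False, False])
    + make_events("variant_b", "basic", [True, True, False, False])
    + make_events("control", "premium", [False, False])
    + make_events("variant_b", "premium", [True, False])
)

for segment, rate in sorted(conversion_by_segment(events).items()):
    print(segment, f"{rate:.0%}")
```

Passing `keys=("variant",)` collapses the breakdown to one rate per variant, which is the number you would compare against your KPI baseline.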

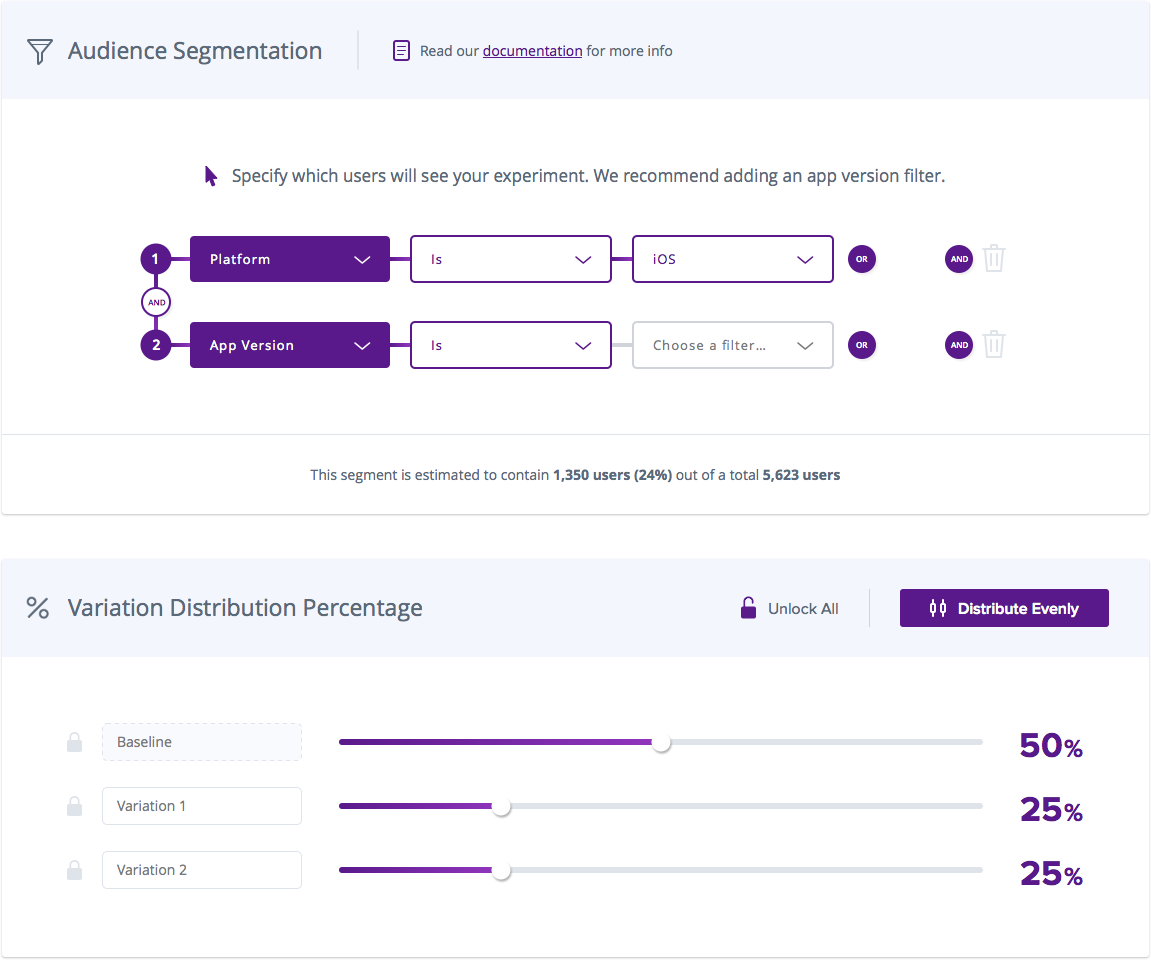

4. Target the right audience

A/B tests need to target a specific set of customers. Proper targeting not only makes it easier to decide what changes to make in your test variants, it also lets you tailor the experience to specific sets of users and their needs.

Using Taplytics, you can define custom user attributes based on a variety of characteristics, and because the Taplytics/Segment integration is bi-directional, it’s easy to pass these attributes between the two tools. Let’s say you have a subset of users who signed up for an account using the same landing page. By analyzing your customer data in Segment, you can identify what these people have in common and how those characteristics affect their website experience.

Using that information, you can create a targeted testing variant for your landing page and serve it directly to website visitors that share these characteristics. A properly targeted A/B test has a better potential for positive results and makes it easy for you to see the impact of your experiments on a deeper level.

Targeting gives your team the context they need to validate certain assumptions about your target audience and what encourages them to take the desired actions on your site.
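The targeting step can be sketched as two checks: does the user match the audience attributes, and if so, which variant do they see. The Python below is a hypothetical illustration, not how Taplytics assigns variants: the attribute names are made up, and the assignment uses a common hashing technique that keeps each user in the same variant across sessions.

```python
import hashlib


def matches_audience(user, criteria):
    """True if the user has every attribute value listed in criteria."""
    return all(user.get(attr) == value for attr, value in criteria.items())


def assign_variant(user_id, experiment, variants):
    """Deterministically map a user to one of the variants.

    Hashing the experiment name together with the user ID keeps each user's
    assignment stable across sessions while varying it between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def variant_for(user, experiment, criteria, variants):
    """Return the user's variant, or None if they fall outside the target audience."""
    if not matches_audience(user, criteria):
        return None
    return assign_variant(user["id"], experiment, variants)


# Hypothetical attributes synced from your customer data.
AUDIENCE = {"signup_landing_page": "spring-newsletter", "plan": "basic"}
VARIANTS = ["control", "targeted_landing_page"]

user = {"id": "user-123", "signup_landing_page": "spring-newsletter", "plan": "basic"}
print(variant_for(user, "landing-page-test", AUDIENCE, VARIANTS))
```

Users outside the audience are simply not enrolled, so their behavior does not dilute the comparison between the targeted variants.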

5. Create unique test variants

A/B testing takes time and resources to set up correctly, so it’s important that each test variant you make has the potential to make a significant impact on user behavior. While minute changes can help you refine experiences over time, more substantial changes make for more straightforward analysis and tracking at scale.

Unique test variants are also easier for users to differentiate. For example, changing the placement or size of a CTA on your site is potentially more impactful than merely changing the shade or color. Keep in mind that any A/B test should only change one aspect of the experience at a time. That’s not to say you can’t have multiple variants, just that it’s essential to keep variations to a single element. Otherwise, it’s difficult to nail down your results.

A/B test variant example via Taplytics

Keeping test variants unique also helps you track which aspects of the experience have the most impact on your customers. Let’s say you’re testing a transactional push notification. Changing only the headline text in each variant gives you an easy way to measure how different wording affects your target audience.

Refining this language over time helps you create a better overall notification. It helps you refine the language you can use on the rest of your site as well.

6. Schedule tests for the right time

A/B tests need time to gather enough data to either support or reject your hypothesis. Two weeks is a common rule of thumb, since it covers two full weekly cycles of user behavior, but the duration you actually need depends on your traffic and on the smallest effect you want to detect. As you build out experimentation processes at your company, include guidelines for both the scheduling and duration of tests. This standardizes workflows for your team and helps them build individual tests more efficiently.

Use customer data to understand the best timing for your target audience. If there’s an upcoming product release, sale, or promotion that will increase website traffic, scheduling your tests to coincide with those times is a great way to increase engagement with specific test variants.

As you schedule these tests, also consider which aspects of the experience you plan to change. You don’t want to run into issues by changing primary product functionality during high-usage periods. And avoid running concurrent tests on the same aspect of the product or user experience: overlapping tests can skew your results and confuse your users with too many changes in a short period.
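Overlapping tests can be caught at scheduling time. This hypothetical sketch flags any pair of tests that change the same element during overlapping date ranges; the test names, elements, and dates are made up for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ScheduledTest:
    name: str
    element: str  # the part of the experience the test changes
    start: date
    end: date     # inclusive


def scheduling_conflicts(tests):
    """Return name pairs of tests that change the same element on overlapping dates."""
    conflicts = []
    for i, first in enumerate(tests):
        for second in tests[i + 1:]:
            overlaps = first.start <= second.end and second.start <= first.end
            if first.element == second.element and overlaps:
                conflicts.append((first.name, second.name))
    return conflicts


schedule = [
    ScheduledTest("cta-placement", "checkout_button", date(2024, 3, 1), date(2024, 3, 14)),
    ScheduledTest("cta-copy", "checkout_button", date(2024, 3, 10), date(2024, 3, 24)),
    ScheduledTest("onboarding-steps", "onboarding_flow", date(2024, 3, 1), date(2024, 3, 14)),
]
print(scheduling_conflicts(schedule))  # prints: [('cta-placement', 'cta-copy')]
```

Tests on different elements can still run side by side; moving `cta-copy` to start after March 14 would clear the conflict.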

7. Understand statistical significance

Statistical significance tells your team whether the outcome of an A/B test is likely a result of the change you made, rather than just a random fluctuation in customer behavior. A significance test compares the results for each variant and estimates how likely a difference at least as large as the one observed would be if the change had no real effect. The lower that probability (the p-value), the more confident you can be that the difference comes from what you changed.

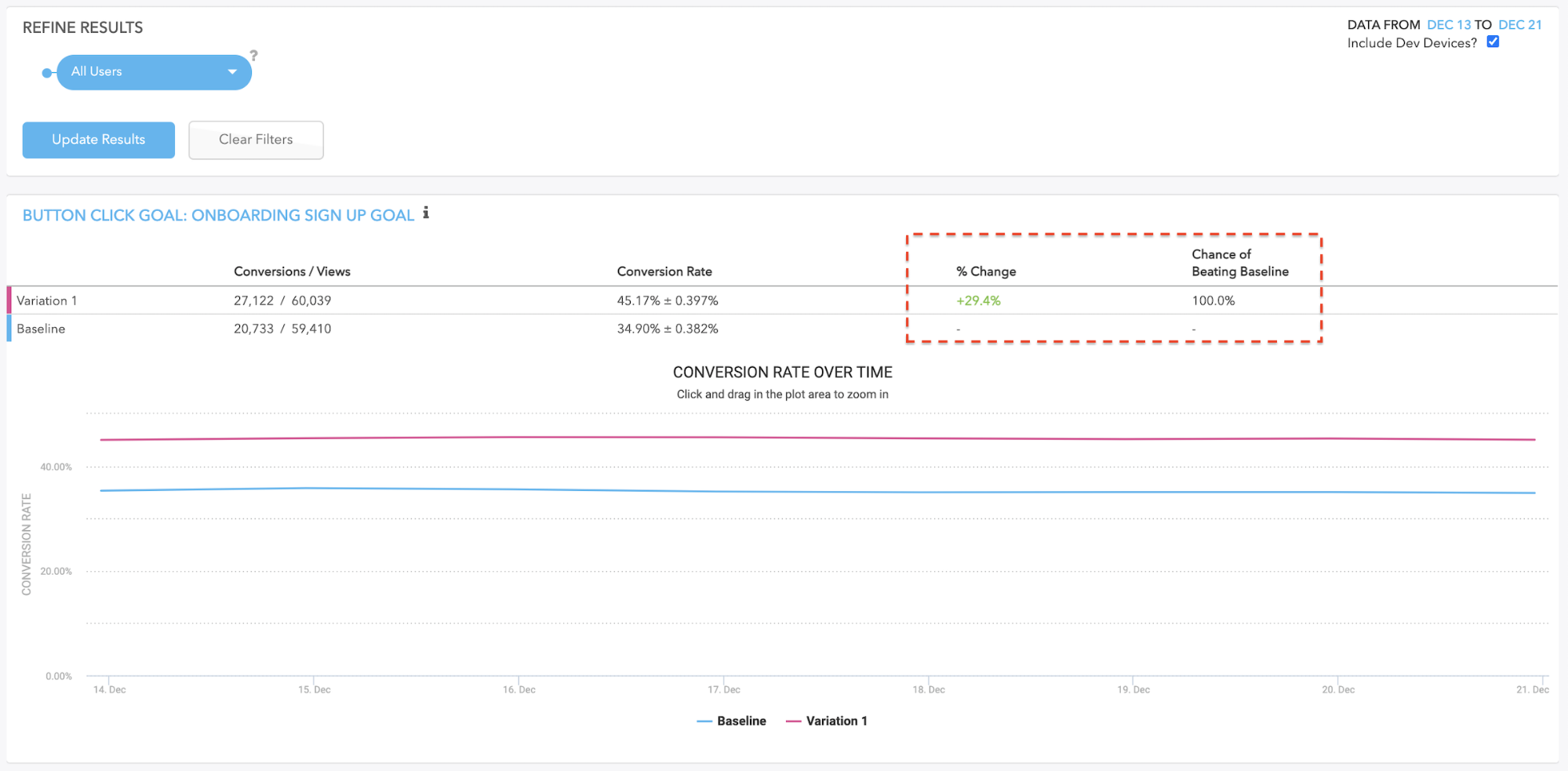

Statistical significance in the Taplytics/Segment integration

Segment’s Taplytics integration calculates statistical significance automatically, at a 95% confidence level. This takes much of the mathematical workload off your team and helps them understand the results. Because the uncertainty in each result is quantified for you, your team can act on the outcomes and make decisions faster.
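The article doesn’t say which statistical test Taplytics uses, so the sketch below is an illustration rather than its implementation: a standard two-sided, two-proportion z-test that compares the conversion rates of a control and a variant at the same 95% confidence level. The conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under "no real difference"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def is_significant(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """True when the p-value falls below 1 - confidence."""
    _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return p_value < 1 - confidence


# Hypothetical results: control vs. variant, 10,000 visitors each.
print(two_proportion_z_test(400, 10_000, 480, 10_000))
print(is_significant(400, 10_000, 480, 10_000))
```

With 400 of 10,000 control visitors converting versus 480 of 10,000 in the variant, z is about 2.76 and p is about 0.006, so the difference is significant. With 420 variant conversions instead, p is near 0.48 and the result is not significant.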

An A/B testing dashboard also tracks each experiment’s long-term impact relative to other tests, which helps you refine the way your team builds tests with each iteration.

8. Share results with your team

Tracking the overall impact of your experiments is helpful only if you share the results with your team. Highlighting successes and areas for improvement boosts engagement with the A/B testing process and increases visibility into important aspects of the product and user experience.

When you increase visibility into these experiments, it holds the team accountable for the work they do to create and run your A/B tests. That helps everyone involved feel connected to the results and invested in the experiments they create. When you get multiple perspectives on the test results, it also builds a shared sense of commitment to the process and helps you brainstorm more diverse solutions to customer problems.

The bi-directional integration between Taplytics and Segment makes sharing those results easy, because the data is available on both platforms. If you have a dashboard set up in Segment, you can share those results directly, or vice versa. This data parity ensures that, regardless of your team’s preferred tool, all A/B testing results are clear and easy to understand.

9. Apply changes through a phased rollout

Once you’ve run your A/B tests and determined which of the variants is more successful, it’s time to roll out those changes to your entire user base. A phased rollout mitigates the risk of implementing those changes by providing more control for your team.

Phased rollouts are especially important for changes that directly affect the product experience. Let’s say your experiments were designed to make it easier for new customers to move through the onboarding process. Once you’ve determined what changes to make, pushing those updates live to customers is the crucial next step.

A phased rollout schedule lets you introduce these changes gradually to your target audience and reduces the potential strain on your infrastructure and your team. Whenever you change core functionality, it’s important to make the transition as smooth as possible for your users, because any bumps along the way can hurt your relationship with them.
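A common way to implement a phased rollout is percentage-based bucketing: each user gets a stable bucket from a hash, and the change is enabled for buckets below the current rollout percentage. The schedule, dates, and feature name below are hypothetical, and this is a generic sketch rather than a Taplytics or Segment feature.

```python
import hashlib
from datetime import date

# Hypothetical schedule, sorted by date: (phase start, % of users with the change).
ROLLOUT_SCHEDULE = [
    (date(2024, 6, 3), 5),
    (date(2024, 6, 10), 25),
    (date(2024, 6, 17), 50),
    (date(2024, 6, 24), 100),
]


def rollout_percentage(today, schedule=ROLLOUT_SCHEDULE):
    """Percentage of users enabled on the given day (0 before the first phase)."""
    current = 0
    for start, percent in schedule:
        if today >= start:
            current = percent
    return current


def user_bucket(user_id, feature):
    """Stable bucket in [0, 100) for this user and feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def has_new_onboarding(user_id, today, feature="new_onboarding"):
    """True if the user falls inside the current rollout percentage."""
    return user_bucket(user_id, feature) < rollout_percentage(today)


print(rollout_percentage(date(2024, 6, 12)))
print(has_new_onboarding("user-123", date(2024, 6, 24)))
```

Because a user’s bucket never changes, everyone who received the new onboarding flow at 5% still has it at 25%, so no one flips back and forth between experiences as the rollout widens. Pausing the rollout is a matter of not advancing to the next phase.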

A/B testing best practices help you build experiments at scale

Designing, implementing, and analyzing your A/B tests is a complex process involving many moving parts. As your company grows and your team expands, your approach to experimentation needs to mature as well. Following A/B testing best practices helps you build experiments more efficiently as your company’s needs evolve, and helps you create and analyze experiments that have a real impact on your users and your business goals.
